The Case for Dropping Out of College

During the summer, my father asked me whether the money he’d spent to finance my first few years at Fordham University in New York City, one of the more expensive private colleges in the United States, had been well spent. I said yes, which was a lie.

I majored in computer science, a field with good career prospects, and involved myself in several extracurricular clubs. Since I managed to test out of some introductory classes, I might even have been able to graduate a year early, which would have saved my family a substantial amount of money. But the more I learned about the relationship between formal education and actual learning, the more I wondered why I’d come to Fordham in the first place.

* * *

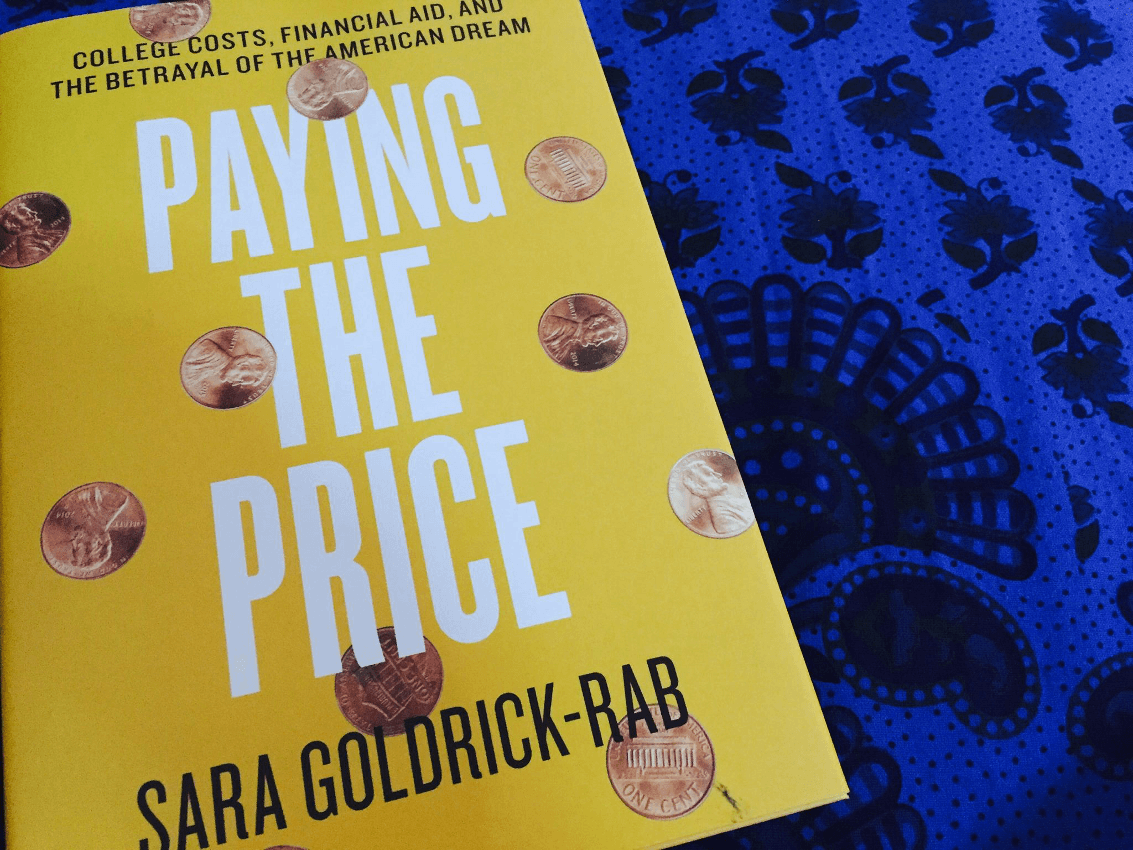

According to the not-for-profit College Board, the average cost of a school year at a private American university was almost $35,000 in 2017, a figure I will use for purposes of rough cost-benefit analysis. (While public universities are less expensive thanks to government subsidies, the total economic cost per student-year, including the cost borne by taxpayers, typically is similar.) The average student takes about 32 credits’ worth of classes per year (with a bachelor’s degree typically requiring at least 120 credits in total). So a 3-credit class costs just above $3,000, and a 4-credit class costs a little more than $4,000.
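
The per-class figures come from simple pro-rating. The Python sketch below reproduces that back-of-envelope arithmetic under the rough assumptions stated above (about $35,000 per year and about 32 credits per year); the helper name `cost_per_class` is hypothetical and used only for illustration.

```python
# Back-of-envelope pro-rating of an annual college cost to individual classes.
# Assumptions (the rough figures quoted in the text): ~$35,000 per year, ~32 credits per year.
ANNUAL_COST_USD = 35_000
CREDITS_PER_YEAR = 32


def cost_per_class(credits: int,
                   annual_cost: float = ANNUAL_COST_USD,
                   credits_per_year: int = CREDITS_PER_YEAR) -> float:
    """Return the pro-rated cost of a class worth `credits` credits."""
    return annual_cost / credits_per_year * credits


if __name__ == "__main__":
    for credits in (3, 4):
        print(f"{credits}-credit class: ${cost_per_class(credits):,.2f}")
```

Run as a script, this prints $3,281.25 for a 3-credit class and $4,375.00 for a 4-credit class, in line with the rounded figures above; two 4-credit classes come to about $8,750.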

What do students get for that price? I asked myself this question on a class-by-class basis and found an enormous mismatch between price and product in almost all cases. Take the two 4-credit calculus classes I took during freshman year. The professor had an unusual teaching style that suited me well, basing his lectures directly on lectures posted online by MIT. Half the class, including me, usually skipped the lectures and learned the content by watching the original material on MIT’s website. When the material was straightforward, I sped up the video. When it was more difficult, I hit pause, re-watched it, or opened a new tab in my browser to find a source that covered the same material in a more accessible way. From the perspective of my own convenience and education, it was probably one of the best classes I’ve taken in college. But I was left wondering: Why should anyone pay more than $8,000 to watch a series of YouTube videos, available online for free, and occasionally take an exam?

Another class I took, Philosophical Ethics, involved a fair bit of writing. The term paper, which had an assigned minimum length of 5,000 words, had to be written in two steps—first a full draft and then a revised version that incorporated feedback from the professor. Is $3,250 an appropriate cost for feedback on 10,000 words? That’s hard to say. But consider that the going rate on the web for editing this amount of text is just a few hundred dollars. Even assuming that my professor is several times more skilled and knowledgeable, it’s not clear that this is a good value proposition.

“But what about the lectures?” you ask. The truth is that many students, including me, don’t find the lectures valuable. As noted above, equivalent material usually can be found online for free or at low cost. In some cases, students will find that their own professor has posted videos of his or her lectures. And the best educators, aided by the magic of video editing, often put out content that puts even the most renowned college lecturers to shame. If you have questions about the material, there’s a good chance you will find the answer on Quora or Reddit.

Last semester, I took a 4-credit class called Computer Organization. There were 23 lectures of 75 minutes each, or about 29 hours of lectures in total. I liked the professor and enjoyed the class. Yet once the semester was over, I noticed that almost all of the core material was covered in a series of YouTube videos just three hours long.

Like many of my fellow students, I spend most of my time in class on my laptop: Twitter, online chess, reading random articles. From the back of the class, I can see that other students are doing likewise. One might think that all of these folks will be in trouble when test time comes around. But watching a few salient online videos generally is all it takes to master the required material. You see the pattern here: The degrees these people get say “Fordham,” but the actual education often comes courtesy of YouTube.

The issue I am discussing is not new, and predates the era of on-demand web video. As far back as 1984, American educational psychologist Benjamin Bloom discovered that an average student who gets individual tutoring will outperform the vast majority of peers taught in a regular classroom setting. Even the best tutors cost no more than $80 an hour—which means you could buy 50 hours of their service for the pro-rated cost of a 4-credit college class that supplies 30 hours of (far less effective) lectures.
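
The tutoring comparison is a single division. Below is a minimal Python sketch of it, assuming the $80 hourly rate above as an upper bound and rounding the pro-rated cost of a 4-credit class down to $4,000; the `tutoring_hours` helper is a hypothetical name used only for illustration.

```python
# Compare the tutoring hours purchasable with the pro-rated price of one 4-credit class.
# Assumptions from the text: $80/hour tutoring (an upper bound), ~$4,000 per 4-credit
# class (rounded down), and ~30 hours of lectures in such a class.
TUTOR_RATE_USD_PER_HOUR = 80
FOUR_CREDIT_CLASS_COST_USD = 4_000
LECTURE_HOURS = 30


def tutoring_hours(budget: float, hourly_rate: float) -> float:
    """Hours of one-on-one tutoring that `budget` buys at `hourly_rate`."""
    return budget / hourly_rate


if __name__ == "__main__":
    hours = tutoring_hours(FOUR_CREDIT_CLASS_COST_USD, TUTOR_RATE_USD_PER_HOUR)
    print(f"{hours:.0f} tutoring hours vs {LECTURE_HOURS} lecture hours")
```

With these inputs the script prints “50 tutoring hours vs 30 lecture hours”; a cheaper tutor would only widen the gap.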

All of these calculations are necessarily imprecise, of course. But for the most part, I would argue, the numbers I have presented here underestimate the true economic cost of bricks-and-mortar college education, since I have not imputed the substantial effective subsidies that come through government tax breaks, endowments and support programs run by all levels of government.

So given all this, why are we told that, far from being a rip-off, college is a great deal? “In 2014, the median full-time, full-year worker over age 25 with a bachelor’s degree earned nearly 70% more than a similar worker with just a high school degree,” read one typical online report from 2016. The occasion was Jason Furman, then head of Barack Obama’s Council of Economic Advisers, tweeting out data showing that the ratio of an average college graduate’s earnings to a similarly situated high-school graduate’s earnings had grown from 1.1 in 1975 to more than 1.6 four decades later.

To ask my question another way: What accounts for the disparity between the apparently poor value proposition of college at the micro level and the statistically observed college premium at the macro level? A clear set of answers appears in The Case against Education: Why the Education System Is a Waste of Time and Money, a newly published book by George Mason University economist Bryan Caplan.

One explanation lies in what Caplan calls “ability bias”: From the outset, the average college student is different from the average American who does not go to college. The competitive college admissions process winnows the applicant pool in such a way as to guarantee that those who make it into college are more intelligent, conscientious and conformist than other members of their high-school graduating cohort. In other words, when colleges boast about the “70% income premium” they supposedly provide students, they are taking credit for abilities that those students already had before they set foot on campus, and which they likely could retain and commercially exploit even if they never got a college diploma. By Caplan’s estimate, ability bias accounts for about 45% of the vaunted college premium. That would mean that a college degree actually boosts income by about 40 points, not the oft-cited 70.

Of course, 40% is still a huge premium. But Caplan digs deeper by asking how that premium is earned. In his view, the extra income doesn’t come from substantive skills learned in college classrooms, but rather from what he calls the “signaling” function of a diploma: Because employers lack any quick and reliable objective way to evaluate a job candidate’s potential worth, they fall back on the vetting work done by third parties, namely colleges. A job candidate who managed to get through the college admissions process, followed by four years of near-constant testing, likely is intelligent and conscientious, and can be relied on to conform to institutional norms. It doesn’t matter what the applicant was tested on, since it is common knowledge that most of what one learns in college will never be applied later in life. What matters is that these applicants were tested on something. Caplan estimates that signaling accounts for around 80% of the 40-point residual college premium described above, which, if true, would leave less than ten percentage points of the original 70 to be accounted for.
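
Caplan’s two adjustments compound: the signaling share is taken from what remains after ability bias, not from the headline 70 points. The Python sketch below reproduces that arithmetic using only the rough shares quoted above (45% ability bias, then 80% of the remainder to signaling); `decompose_premium` is a hypothetical helper, not anything taken from Caplan’s book.

```python
# Decompose the headline college earnings premium using the rough shares
# attributed to Bryan Caplan in the text. All inputs are estimates, not data.
HEADLINE_PREMIUM_PTS = 70.0  # bachelor's vs. high-school earnings gap, in percent
ABILITY_BIAS_SHARE = 0.45    # share of the headline premium due to pre-existing ability
SIGNALING_SHARE = 0.80       # share of the *remaining* premium due to signaling


def decompose_premium(headline: float, ability_share: float, signaling_share: float):
    """Return (ability, signaling, residual) components of the premium, in points."""
    ability = headline * ability_share
    after_ability = headline - ability           # the "about 40 points" in the text
    signaling = after_ability * signaling_share  # applied to the remainder only
    residual = after_ability - signaling         # what is left for actual skills
    return ability, signaling, residual


if __name__ == "__main__":
    ability, signaling, residual = decompose_premium(
        HEADLINE_PREMIUM_PTS, ABILITY_BIAS_SHARE, SIGNALING_SHARE)
    print(f"ability bias: {ability:.1f} pts")
    print(f"signaling:    {signaling:.1f} pts")
    print(f"residual:     {residual:.1f} pts")
```

With these inputs, ability bias takes about 31.5 points, leaving 38.5 (the “about 40” above); signaling then claims about 30.8 of those, leaving a residual of roughly 7.7 points, under the ten-point ceiling.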

There are many signs that the signaling model of education is correct. Consider the case of a Canadian named Guillaume Dumas, who, between 2008 and 2012, attended lectures at prestigious universities such as Yale, Stanford and UC Berkeley without being enrolled. It turned out that this was surprisingly easy, since colleges do little to prevent non-students from sitting in on classes. Many professors even feel flattered if their lectures attract outsiders, which is why so many of them put their lectures on the web. But think about what this means: If the knowledge conferred by university lectures were so intrinsically valuable (as opposed to just a means to a diploma), why would these schools and professors essentially be giving it away for free? The answer is that they know what Caplan knows: It is the diploma that’s valuable as a signaling artifact, not the actual substance of what’s learned.

The general public, too, is aware of this, which is why almost no one seems interested in following Dumas’ example by attending college lectures without being enrolled in a paid course of study. If it were the actual content of lectures that people wanted, every college would have to post a guard outside its classrooms to keep out unpaid auditors. Instead, the opposite is true: When a professor cancels a class, most students cheer, as this allows them to graduate while attending one fewer lecture.

So far, I have discussed the value of college education in generic fashion. But as everyone on any campus knows, different majors offer different value. In the case of liberal arts, the proportion of the true college premium attributable to signaling is probably close to 100%. It is not just that the jobs these students seek typically don’t require any of the substantive knowledge they acquired during their course of study: Their coursework also isn’t doing much to improve their analytical skills. In their 2011 book Academically Adrift: Limited Learning on College Campuses, sociologists Richard Arum and Josipa Roksa presented data showing that, over their first two years of college, students typically improve their skills in critical thinking, complex reasoning and writing by less than a fifth of a standard deviation.

According to the U.S. Department of Commerce’s 2017 report on STEM jobs, even the substantive educational benefit to be had from degrees in technical fields may be overstated, since “almost two-thirds of the workers with a STEM undergraduate degree work in a non-STEM job.” Signaling likely plays a strong role in such cases. Indeed, since STEM degrees are harder to obtain than non-STEM degrees, they provide an even stronger signal of intelligence and conscientiousness.

Nor is signaling the only reason irrelevant coursework pays. Why do U.S. students who want to become doctors, one of the highest-paying professions, first need to complete four years of often unrelated undergraduate studies? The American blogger and psychiatrist Scott Alexander, who majored in philosophy as an undergraduate and then went on to study medicine in Ireland, observed in his brilliant 2015 essay Against Tulip Subsidies that “Americans take eight years to become doctors. Irishmen can do it in four, and achieve the same result.” Law follows a similar pattern: While it takes four years to study law in Ireland, and in France it takes five, students in the United States typically spend seven years in school before beginning the separate process of bar accreditation.

In a 2014 interview, the contrarian investor and entrepreneur Peter Thiel, who has raised alarms about the direction of the higher education system for many years, explained that the idea of education has become a mere “abstraction” which serves to obscure problems with the quality of training and knowledge people receive. While the price of college education increased at more than double the baseline rate of inflation between 1980 and 2017, there is evidence that many students are learning less today than their parents and grandparents did.

Over the last 50 years, the amount of time students spend studying has fallen by almost 40%. At the same time, the average GPA has steadily risen, at a rate of about 0.1 point per decade. In 1983, the average GPA was 2.8; in 2013, it was more than 3.1. This may sound like good news: better grades for less work. But a more likely explanation is simple grade inflation.

In the American imagination, college is a stepping stone to a better life. But the value from the “sheepskin signaling premium” conferred by a diploma isn’t going to those who need it. As Matthew Stewart argued in his June Atlantic magazine cover story, The Birth of a New American Aristocracy, it is the students whose parents already are well off who are capturing the benefits of this system. A 2017 study found that 38 colleges, including five Ivy League schools, had more students from families in the top 1% of earners than from the bottom 60%.

To some extent, this is the result of informal mechanisms that allow wealthy families to provide more opportunities for their children. Practicing a costly sport such as lacrosse, fencing or squash, for instance, can greatly increase one’s chances of being accepted into an elite college. And in California, the eleven best public elementary schools are located in Palo Alto, where the median house price is $3,310,100. (This reflects a national trend. A 2012 study by the Brookings Institution found that “home values are $205,000 higher on average in the neighborhoods of high-scoring versus low-scoring schools.”) But in some cases, such mechanisms are formally institutionalized. Court documents published in 2018, for instance, reveal that legacy applicants are five times more likely to be accepted to Harvard than non-legacy applicants.

These problems are well known, and efforts to help admit disadvantaged students have been underway for decades. But a class divide persists on campus even for those students who make the cut: While the average six-year graduation rate at four-year colleges is 59% (an already appallingly low number), the graduation rate among low-income students is only 16%. The other 84% suffer the worst of both worlds: They receive almost none of college’s income-boosting signaling effect, while still paying tuition proportional to the time they stayed in college.

Similar trends play out along racial lines. While the college enrollment gap between white and black high-school graduates has almost vanished, the graduation gap remains substantial. According to a 2017 report by the National Student Clearinghouse Research Center, the six-year completion rate stands at 45.9% for black students and 55% for Hispanics. For white students, it is 67.2%, and for Asians it is 71.7%.

Wealthy families can pay for college out of savings. For most Americans, it is financed through debt. In 2007, the total amount of outstanding student debt was $545 billion. Today, the number is $1.5 trillion. According to the Brookings Institution, nearly 40% of all borrowers may default on their student loans by 2023.

But thanks to the phenomenon known as “degree inflation,” taking on debt to pay for college often seems like the only option in today’s job market. An analysis by Burning Glass Technologies found that “Employers now require bachelor’s degrees for a wide range of jobs, but the shift has been dramatic for some of the occupations historically dominated by workers without a college degree.” Indeed, “65% of postings for Executive Secretaries and Executive Assistants now call for a bachelor’s degree,” despite the fact that “only 19% of those currently employed in these roles have a B.A.” A report by the Harvard Business Review came to a similar conclusion, noting that “in 2015, 67% of production supervisor job postings asked for a college degree, while only 16% of employed production supervisors had one.”

But as multi-faceted as the symptoms of this problem may be, the path forward isn’t a mystery, because the tools to permit an education revolution already exist. While selective, high-cost elite colleges epitomize the problems I am discussing here, those same colleges, ironically, are showing us what an alternative education system would look like, since the MOOCs (Massive Open Online Courses) offered by these prestigious institutions tend to be especially popular. Moreover, the days when students were required to begin every semester at the campus bookstore may soon be a thing of the past, as countless inexpensive or even free textbooks are now being uploaded to the web.

But the power of the educational establishment doesn’t lie in any monopoly on actual knowledge or education. As noted above, it comes from its power to signal status, by conferring titles that are widely used as a proxy for desirable qualities. The education system does not promote learning; it takes learning hostage by sending the implicit message that anything learned outside of its walls is worthless. As long as titles such as B.A., M.S. and Ph.D. remain the primary currency within the job market, the revolution will never succeed. It isn’t just the education system that has to change: It’s the whole culture.

When pundits come to the defense of a traditional college experience, they often will argue that the education one receives on campus happens as much outside the classroom as inside. Students socialize, they figure out what they want out of life, they mature. And it is true that most students I know would say that they have gone through an important transformation since the beginning of their freshman year. But a lot of that growth and transformation would have come anyway, since young adulthood typically is a transformative period in life no matter what one does. Is spending $200,000 and four years of pretend-learning really the best, most cost-effective way to become a better person?

* * *

Two years before I was due to finish high school, I dropped out. While I was doing well academically, the learning experience left me alienated. Lectures were boring, tests and homework stressful.

I was just 16 years old, but I had a plan. In France, where I grew up, a high-school diploma can be earned by taking the Baccalauréat, a two-week-long set of exams. It is possible to take the exam even if one is not enrolled in high school, which is exactly what I did.

I got all the texts I needed, and worked through them. I struggled at the beginning, because I wasn’t used to working without outside pressure or deadlines. But after a few months, I started making progress. Eventually, I got into Fordham University, which granted me 20 transfer credits because of the good results I’d achieved on the Baccalauréat.

Why couldn’t there be such an option for college? For every class a university has to offer, why not just offer a traditional exam that tests all the knowledge and skills taught in the class? This would allow for an order-of-magnitude decrease in the cost of a degree while still permitting the option of a traditional lecture-based university curriculum for those who wanted to pay for it.

The problem with existing MOOCs and competency-based university programs is that they are targeted at non-traditional students, and the exam proctoring happens online. If, however, elite universities decided to create a mainstream, open-to-everyone approach, with rigorously proctored examination-hall testing for all classes, it could solve the crisis in education almost at a stroke. But I’m not getting my hopes up: Elite universities know that their cachet comes from a sense of exclusivity. If anyone who didn’t fence or play lacrosse could get a Harvard degree on the basis of mere brains and hard work, that would hurt the institution’s brand, even if it helped society.

Blogger Scott Alexander proposes another, more radical-sounding solution: “Make ‘college degree’ a protected characteristic, like race and religion and sexuality. If you’re not allowed to ask a job candidate whether they’re gay, you’re not allowed to ask them whether they’re a college graduate or not. You can give them all sorts of examinations, you can ask them their high school grades and SAT scores, you can ask their work history, but if you ask them if they have a degree then that’s illegal class-based discrimination.” The proposal may sound extreme, but it is less outlandish than it first appears. As Frederick M. Hess and Grant Addison of the American Enterprise Institute (AEI) have argued, there is a strong civil rights case to be made in this area, since the focus on credentials has a disparate impact on minorities. In the United States, 39% of whites have a college degree, compared to only 29% of blacks and 20% of Hispanics.

As for me, I chose not to return to Fordham University when this past summer ended. I don’t know how this decision will work out. And if I am unsuccessful on the job market, I might end up going back to school and finishing my degree. But before I resign myself to a university system that I know to be harmful, I’d like to try advancing myself outside the bastion of privilege these schools have erected.