The Scientific Importance of Free Speech

Editor’s note: this is a shortened version of a speech that the author was due to give last month at King’s College London which was canceled because the university deemed the event to be too ‘high risk’.

A quick Google search suggests that free speech is regarded as an important virtue for a functional, enlightened society. For example, according to George Orwell: “If liberty means anything at all, it means the right to tell people what they do not want to hear.” Likewise, Ayaan Hirsi Ali remarked: “Free speech is the bedrock of liberty and a free society, and yes, it includes the right to blaspheme and offend.” In a similar vein, Bill Hicks declared: “Freedom of speech means you support the right of people to say exactly those ideas which you do not agree with”.

But why do we specifically need free speech in science? Surely we just take measurements and publish our data? No chit-chat required. We need free speech in science because science is not really about microscopes, or pipettes, or test tubes, or even Large Hadron Colliders. These are merely tools that help us to accomplish a far greater mission, which is to choose between rival narratives, in the vicious, no-holds-barred battle of ideas that we call “science”.

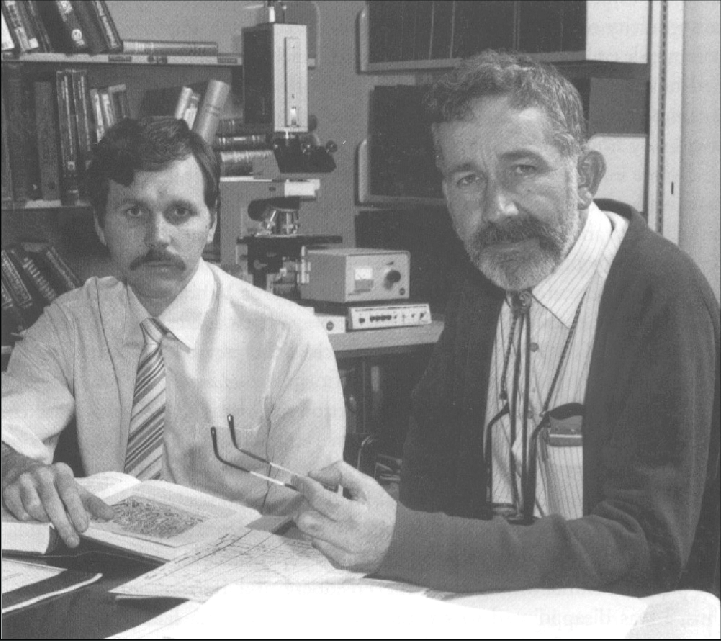

Marshall and Warren, 1984

For example, stomach problems such as gastritis and ulcers were historically viewed as the products of stress. This opinion was challenged in the late 1970s by the Australian doctors Robin Warren and Barry Marshall, who suspected that stomach problems were caused by infection with the bacterium Helicobacter pylori. In 1984, frustrated by skepticism from the medical establishment and by difficulties publishing his academic papers, Barry Marshall appointed himself his own experimental subject and drank a Petri dish full of H. pylori culture. He promptly developed gastritis, which was then cured with antibiotics, suggesting that H. pylori plays a causal role in this type of illness. You would have thought that, given this clear-cut evidence supporting Warren and Marshall’s opinion, their opponents would immediately concede defeat. But scientists are only human, and opposition to Warren and Marshall persisted. In the end, it was two decades before their crucial work on H. pylori gained the recognition it deserved, with the award of the 2005 Nobel Prize in Physiology or Medicine.

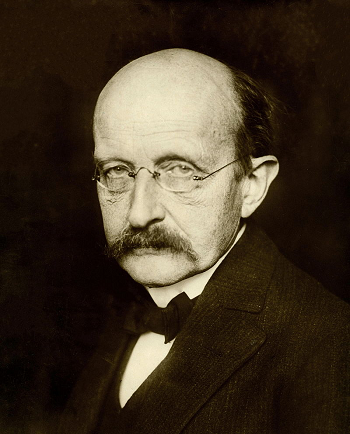

German physicist Max Planck

From this episode we can see that even in situations where laboratory experiments can provide clear evidence in favour of a particular scientific opinion, opponents will typically refuse to accept it. Instead, scientists tend to cling so stubbornly to their pet theories that no amount of evidence will change their minds, and only death can bring an end to the argument, as famously observed by Max Planck:

A new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die, and a new generation grows up that is familiar with it.

It is a salutary lesson that even in a society that permits free speech, Warren and Marshall had difficulty publishing their results. If their opponents had had the legal power to silence them, their breakthrough would have taken even longer to become clinically accepted, and even more people would have suffered unnecessarily from gastric illness that could have been cured quickly and easily with a course of antibiotics. But scientific domains in which a single experiment can provide a definitive answer are rare. For example, Charles Darwin’s principle of evolution by natural selection concerns slow, large-scale processes that are unsuited to testing in a laboratory. In such cases, we take a bird’s-eye view of the facts of the matter and attempt to form an opinion about what they mean.

This allows a lot of room for argument, but as long as both sides are able to speak up, we can at least have a debate. When a researcher disagrees with the findings of an opponent’s study, they traditionally write an open letter to the journal editor critiquing the paper in question and setting out their counter-evidence. Their opponent then writes a rebuttal, and both letters are published in the journal with names attached, so that the public can weigh up the opinions of the two parties and decide for themselves whose stance they favour. I recently took part in just such an exchange of letters in the elite journal Trends in Cognitive Sciences. The tone was fierce and neither side changed its opinion, but at least there was a debate that the public could observe and evaluate.

The existence of scientific debate is also crucial because as the Nobel Prize-winning physicist Richard Feynman remarked in 1963: “There is no authority who decides what is a good idea.” The absence of an authority who decides what is a good idea is a key point because it illustrates that science is a messy business and there is no absolute truth. This was articulated in Tom Schofield’s posthumously published essay in which he wrote:

[S]cience is not about finding the truth at all, but about finding better ways of being wrong. The best scientific theory is not the one that reveals the truth — that is impossible. It is the one that explains what we already know about the world in the simplest way possible, and that makes useful predictions about the future. When I accepted that I would always be wrong, and that my favourite theories are inevitably destined to be replaced by other, better, theories — that is when I really knew that I wanted to be a scientist.

When one side of a scientific debate is allowed to silence the other side, this impedes scientific progress because it prevents bad theories from being replaced by better ones. Even worse, it can cause civilization to go backward, as when a good theory is replaced by a bad theory that it had previously displaced. The latter is what happened in the most famous illustration of the dire consequences that can follow when one side of a scientific debate is silenced, which concerned the theory that acquired characteristics are inherited. This idea had been out of fashion for decades, in part due to research in the 1880s by August Weismann, who amputated the tails of 68 white mice over five generations. He found that no mice were born without a tail, or even with a shorter tail. He stated: “901 young were produced by five generations of artificially mutilated parents, and yet there was not a single example of a rudimentary tail or of any other abnormality in this organ.”

These findings and others like them led to the widespread acceptance of Mendelian genetics. Unfortunately for the people of the USSR, Mendelian genetics is incompatible with socialist ideology, and so in the 1930s it was replaced in the USSR by Trofim Lysenko’s socialism-friendly idea that acquired characteristics are inherited. Scientists who disagreed were imprisoned or executed. Soviet agriculture collapsed and millions starved.

Since then, the tendency to silence scientists with inconvenient opinions has been labeled Lysenkoism, because the Lysenko affair provides the most famous example of the harm that can be done when competing scientific opinions cannot be expressed equally freely. Left-wingers tend to be the most prominent Lysenkoists, but the suppression of scientific opinions can occur in other contexts too. The Space Shuttle Challenger disaster in 1986 is a famous example.

The Space Shuttle Challenger disaster happened because the rubber O-rings sealing the joints of the booster rockets became stiff at low temperatures. This design flaw meant that in cold weather, such as the −2 °C of Challenger launch day, a blowtorch-like flame could travel past the O-ring and make contact with the adjacent external fuel tank, causing it to explode. The stiffness of the O-rings at low temperatures was well known to the engineers who built the booster rockets and they consequently advised that the launch of Challenger should be postponed until temperatures rose to safe levels. Postponing the launch would have been an embarrassment and so the engineers were overruled. The launch therefore went ahead in freezing temperatures and, just as the engineers feared, Challenger exploded, causing the death of all seven crew members.

NASA’s investigation into the Challenger disaster was initially secretive, as if to conceal the fact that the well-known O-ring problem was the cause. However, the physicist Richard Feynman was a member of the committee and refused to be silenced. At a televised hearing, he demonstrated that the O-rings became stiff when dunked in iced water. In the report on the disaster, he concluded: “For a successful technology, reality must take precedence over public relations, for nature cannot be fooled.”

Today, there are many reasons to be concerned over the state of free speech, from the growing chill on university campuses to the increased policing of art forms such as literature and film. Discussion of scientific topics on podcasts has also attracted the ire of petty Lysenkoists. But there is also cause for optimism, as long as we stand up for the principle that no one has the right to police our opinions. As Christopher Hitchens remarked: “My own opinion is enough for me, and I claim the right to have it defended against any consensus, any majority, anywhere, any place, any time. And anyone who disagrees with this can pick a number, get in line, and kiss my ass.”

Adam Perkins is a Lecturer in the Neurobiology of Personality at King’s College London and is the author of the book The Welfare Trait: How State Benefits Affect Personality. Follow him on Twitter @AdamPerkinsPhD