To Accelerate or Decelerate AI: That is the Question

Explaining the “accel/decel” split at the heart of the OpenAI power struggle.

“When you have them by the stock options, their hearts and minds will follow.” This, it seems, is the moral of the OpenAI leadership story, the full truth of which remains shrouded in non-disclosure agreements and corporate confidentiality, dimly illuminated by unattributed comments.

It began with a “blindside” worthy of Survivor. On Friday, November 17, OpenAI had six people on its board. Three were founders: Greg Brockman, the chairman and president; Sam Altman, the CEO; and Ilya Sutskever, the chief scientist. These three worked at OpenAI. The other three did not. They were Quora CEO Adam D’Angelo, technology entrepreneur Tasha McCauley, and Georgetown Center for Security and Emerging Technology researcher Helen Toner. Sutskever, D’Angelo, McCauley, and Toner fired Altman on a Google Meet call. Brockman, a long-standing Altman ally, was absent. According to the more lurid accounts of the affair, the effective altruism tribe—the “decelerationists” who see artificial general intelligence (AGI) as an existential risk to humanity—had spoken. They had used their 4–2 majority to vote the “accelerationists” off the island. Altman’s torch had been snuffed out. Or so the board thought.

Immediately after firing him, the four put out a statement: “Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.”

Greg Brockman, who had not been invited to the meeting, had also been “blindsided.” In the same statement, the four announced that Brockman was being removed from the board, but, somewhat naively, they expected him to stay on in his other roles in the company. Later that day, Brockman tweeted: “...based on today’s news i quit.” (Both Brockman and Altman tend to tweet in lowercase.)

Apparently, the only person outside the board not surprised by this move was the chief technology officer Mira Murati. She had been lined up the night before to be the interim CEO in Altman’s place. Outside these five, it seems no one knew about the planned coup. The conspiracy was as tight as that for the assassination of Julius Caesar.

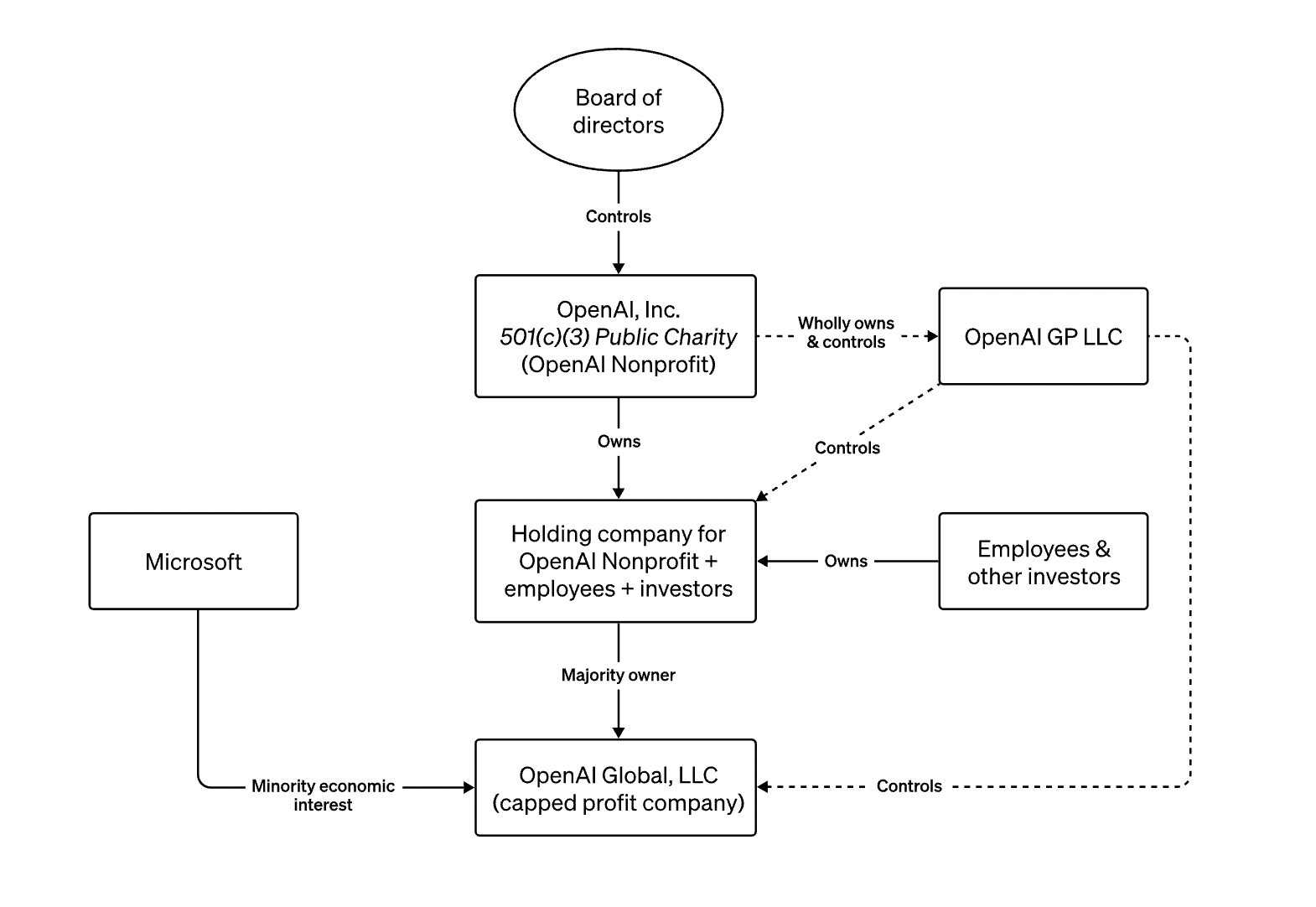

Microsoft was also surprised by Altman’s ouster. Reportedly, CEO Satya Nadella was livid when told the news. OpenAI products are incorporated in Microsoft’s Azure cloud computing product. ChatGPT runs on Azure data centers. The main cost for players in the large language model (LLM) market is “compute.” Training LLMs with billions of parameters can cost hundreds of millions of dollars. After the news broke, Microsoft’s stock dipped.

Altman was obviously not fired for financial reasons. Before the coup, OpenAI’s valuation had been reported as US$86 billion, based on an oversubscribed share offering. This was much more than the valuation before ChatGPT. Altman had been negotiating a deal for existing shareholders—many of whom are employees, apparently—to “cash in their chips” and sell out. Some employees have been toiling away at OpenAI for years, long before it became the world’s hottest technology company. No doubt this explains the way things panned out.

Over the weekend of November 18–19, Murati wobbled as interim CEO and was removed by the board. The board fought on, sounding out alternative interim CEOs over the course of a hectic weekend. Following his appointment as OpenAI’s third CEO in three days, Emmett Shear declined to say why the board had fired Altman, although he did say it was not about AI safety. On Sunday, while the board was having discussions with prospective CEOs, Microsoft announced it would set up a new AI team led by Altman and Brockman.

The entire senior management team of OpenAI, except for Mira Murati, had been shocked by the announcement. Three of them quit on the Friday to follow Altman and Brockman wherever they were going. Among them was the leader of the wildly successful GPT-4 team. Over the weekend, Microsoft moved aggressively to protect its investment in OpenAI. According to unnamed Microsoft sources cited by The New Yorker, the Redmond software giant had three plans. Plan A was to deal with the new interim CEO. Plan B was to persuade the board to take back Altman, who was a proven performer at generating value for investors. Plan C was to take Altman and anyone else willing to follow him from OpenAI into Microsoft. The OpenAI board reportedly reached out to rival Anthropic in a desperate attempt to merge with them. Anthropic, which comprises people who had left OpenAI because of disagreements with Altman over AI safety, declined to get involved.

By Monday the position of the board was hopeless. The reaction of most venture capital and finance commentators ranged from bemusement to outrage. They found it incomprehensible and unjustifiable that a CEO who had made OpenAI world-famous and extremely valuable should be fired.

Virtually all of the OpenAI staff agreed. By close of business on Monday, some 738 out of 770 employees (according to Wired) had signed an open letter calling on the board to resign. The signatories included Sutskever and Murati. The letter’s language was as blunt as the board’s had been when it fired Altman: “Your actions have made it obvious that you are incapable of overseeing OpenAI. We are unable to work for or with people that lack competence, judgement and care for our mission and employees. We, the undersigned, may choose to resign from OpenAI and join the newly announced Microsoft subsidiary run by Sam Altman and Greg Brockman.”

The board had had no confidence in Altman, but the biggest shareholder and almost all the employees now had no confidence in the board.

Yet, in what seems to be a skillful and tenacious endgame, the CEO of Quora, Adam D’Angelo, orchestrated a deal to bring Altman and Brockman back but maintain a footing for the “decel” camp. By Wednesday night, the deal was done. D’Angelo became the sole survivor of the coup, keeping his seat on the board; Toner and McCauley resigned. Sutskever retained his position as chief scientist but lost his seat on the board. The Microsoft AI subsidiary popped down as rapidly as it had popped up. The OpenAI employees who had resigned rescinded their resignations. The new OpenAI board consists of D’Angelo and two new external directors: Bret Taylor, formerly the co-CEO of Salesforce, and Larry Summers, a former US Treasury Secretary. Neither Altman nor Brockman is on the board, but both are back at OpenAI.

Evidently, Microsoft had decided on Plan B, which involved the restoration of Altman as CEO and the replacement of the board with people more sensitive to the interests of investors so they could continue their push to integrate OpenAI into Azure and Office. Realistically, Plan C would have resulted in intellectual property issues, years of litigation, and a rapid devaluation of the OpenAI stock held by employees. Plan B enabled the recent unpleasantness to be brushed aside with a gushing open letter from Altman wherein he proclaimed his love for all involved, including those who had blindsided him. To prevent a similar disruption in the future, Microsoft was promised a non-voting seat on the OpenAI board.

It took Arya Stark five seasons of Game of Thrones to get her vengeance. It took Sam Altman five days to crush his enemies, see them driven before him, and retweet the lamentations of the decels.

❤️❤️❤️ https://t.co/NL3nqrjKUo

— Sam Altman (@sama) November 20, 2023

Rumour has it that, notwithstanding his repentance, Sutskever’s position as chief scientist is under threat and that he has lawyered up.

This leads us to the Great Question of the Day: Why was Sam Altman sacked in the first place? This remains a mystery. The current board is not talking. Emmett Shear is not talking. He merely said it had not been about safety. Sam Altman is not talking. The previous board members are not talking. Microsoft is not talking, although their CEO said it was not about safety. No one involved is talking. There is a promise of an “independent review.” This will be done by lawyers and may never be made public. Time will tell. In the absence of attributed quotes from sources on record, rumours abound.

Some speak of personality clashes, for example, Altman taking exception to a paper written by Toner—although this seems odd given that no one seems to have read it. However, some suggest Altman was using this as a pretext to seize power. After tipping Toner off the board, he could then replace her with someone more aligned with his views on the future of the company and more in favor of shipping AI products quickly. This may be true, but such drama is boardroom politics as usual in large enterprises.

Others speak of the board getting upset about conflicts of interest, for example, Altman seeking Middle Eastern funding for a new GPU maker. Given that D’Angelo’s Quora has an AI product called Poe that is arguably a competitor to OpenAI and that Altman has no personal equity in OpenAI, it’s hard to take the conflict-of-interest objection too seriously. Everyone in Silicon Valley has a side hustle. Further, there is a shortage of GPUs. (GPUs are Graphics Processing Units. Originally designed for video games, their architecture is also well suited for the matrix algebra calculations that are heavily used in AI.) Demand is high because LLMs devour these things. Why would you not start a GPU maker in 2024? Given that OpenAI rents GPUs from Microsoft and does not make them itself, the conflict is unclear.

Still others speak of the “accel/decel” split between those who say AI should advance full steam ahead (“accelerationists”) and those calling for regulation and guardrails (“decelerationists”). Microsoft denied that the affair was about safety, as did OpenAI’s interim CEO, Emmett Shear. Yet the alleged lead conspirator, Ilya Sutskever, is on record as being deeply concerned about AI safety, and a previous split in the company, which resulted in the departure of staff to found rival AI firm Anthropic, was about safety. It is therefore not reckless to believe that safety was the underlying reason for the coup. Even so, people seem reluctant to say so.

Some suggest the coup was triggered by Q* (Q star), a recently leaked OpenAI breakthrough that enables AI to do primary school math. The more lurid accounts suggest this heralds the end of the world. Other scuttlebutt suggests the trigger was OpenAI’s recent Dev Day, at which the company unveiled new products that would make it easier for developers to ship AI products based on ChatGPT. This would make it easier for rogue developers to use AI to do dirty deeds, such as make biological weapons. Advocates of the accel/decel explanation note that two of the departed board members (Toner and McCauley) have decelerationist links.

According to the OpenAI Charter, “OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity. We will attempt to directly build safe and beneficial AGI, but will also consider our mission fulfilled if our work aids others to achieve this outcome.” To this end, OpenAI has a structure where the for-profit company is ultimately controlled by a nonprofit.

It seems those who fired Altman thought he was not sufficiently concerned with “safe and beneficial AGI” but focused on shipping potentially dangerous, but profitable, products.

There is a range of views about the existential risk posed by AGI. At one extreme, you have Andrew Ng, who famously said that worrying about the rise of evil killer robots was like worrying about overpopulation on Mars when humans have not even got there yet. At the other, you have Ilya Sutskever, who reportedly believes that dangerous AGI is quarters away, not decades. In the middle, you have people who think that AGI is vaguely defined and its purported threat based on dubious extrapolations.

The light relief of the saga is the tale of a wit from archrival Anthropic, who sent boxes of paper clips to his ex-colleagues at OpenAI’s office.

BREAKING NEWS: OpenAI offices seen overflowing with paperclips! pic.twitter.com/rnV4dLi0cU

— will depue (@willdepue) September 26, 2023

(Strictly speaking, the paperclip story and tweet predate the leadership coup.) The paper clips were a reference to the concern articulated by philosopher Nick Bostrom that a superintelligent AGI might decide the best thing it could do would be to optimize a goal such as paperclip production and divert all global resources to it. In the process, it might accidentally starve humanity. The scenario is much criticized, as it assumes there will only be one superintelligence to rule them all, not a series of competing superintelligences debating each other with impeccable logic and thinking things through in a perfectly rational manner.

Most of the catastrophic AGI scenarios require what is called “FOOM,” a fast take-off singularity in which a recursively self-improving AGI becomes all-powerful in a compressed timeframe. Many accelerationists dispute this possibility. Some cite thermodynamic limitations on information processing or dispute that an IQ far above that of a human is even possible. Others point out that more intelligence is not always useful and that the ability to come up with a plan to eliminate humanity is not the same as the ability to follow through on it. As for ourselves, we have published research pointing out that even a theoretically “optimal” intelligence must account for embodiment, which imposes mathematical upper limits on intelligence. A superintelligence would need to understand cause and effect, and that involves constructing a “causal identity” or “self” that is entirely absent from current AI. Whatever the nature of the objection, many dispute the validity of FOOM as a premise of doomsday scenarios. Certainly, to achieve human extinction, the AI will need robots to prevent humans from resisting by blowing up power lines, internet cables, and data centers. Robotics is notoriously slower to develop than AI because it must deal with the disorderly world of atoms rather than tidy mathematical bits.

Some do not see LLMs as the path to AGI at all. Consequently, they do not regard the likes of ChatGPT as an existential threat. Indeed, there have been suggestions of diminishing marginal returns for ever-larger LLMs. Ben Goertzel, who co-steers the annual AGI conference with Marcus Hutter of Google DeepMind, holds that “incremental improvement of such LLMs is not a viable approach to working toward human-level AGI.”

LLMs require massive amounts of data to mimic the understanding of concepts that a human can gather from a few examples. We see the future of genuine AGI in systems that learn as efficiently as humans do, with novel inventions such as artificial phenomenal consciousness (that, at present, remain purely theoretical) rather than in a few billion more parameters in LLMs obtained in data centers running thousands of GPUs.

The bottom line is that decelerationists are making extraordinary claims and demanding restrictions on the basis of contested theoretical speculations. Smaller competitors contend that the licensing or other red tape that might be applied in the case of AI will serve large incumbents. Heavy regulation and AI compliance costs would make it harder for smaller startup rivals.

OpenAI does not have a monopoly on achieving AGI. There is a horde of competing firms. Days after the coup and counter-coup, Google launched Gemini, claiming it beat ChatGPT on many of the metrics used to measure LLM performance. Besides Google, there are other major rivals such as Meta, Anthropic, and xAI, the maker of Grok. There are dozens of smaller firms, each seeking to make the next AI breakthrough, and not necessarily by training LLMs. Foundational research is published online. Researchers at Google published the paper that started the transformer boom in 2017. The genie is out of the bottle. The beneficial applications of AI are numerous, and regulation of the technology (rather than its applications) is impractical. Regulations cannot stop countries (or individuals) working towards AGI. If the US Congress and the EU decide to overregulate AI, talent and investment will go elsewhere. Strategic rivals such as China might overtake the West and impose a one-party, AI-enforced dictatorship. We believe it is essential that the open societies of the West retain their technological edge.

Of course, it may not be possible to accelerate faster than we are. There is a limited supply of talent. Cutting-edge AI papers are not easy reads. People focus on successes and tend to forget failures. AI has boomed and busted many times. Software engineers are notoriously optimistic about timelines. What they say will take a month or two will often take a year or ten. Leonardo da Vinci designed helicopters and tanks in the late fifteenth century; they did not ship until the twentieth.

Embedding moral competence in AIs is the obvious answer to “aligning AI with human values,” and, indeed, that is what lies at the heart of rival Anthropic’s strategy of “constitutional AI.” Even so, we must recognize that acute and chronic moral disagreement is endemic among human beings. We see this in Gaza and Ukraine. For all their talk of “alignment” as a solution to “safety” and a way to ensure beneficial AGI, the six members of the OpenAI board could not align themselves. It is perhaps more sensible to align AI with the laws of the jurisdiction in which it operates. No doubt, some AI firms will decide not to operate in certain jurisdictions due to their distaste for local norms and governance. Given that LLMs have already passed bar exams, we see the implementation of law-abiding AI as realistic and achievable. Getting humans to align on which laws to abide by is the real challenge.

To conclude, we do not envisage a future in which some monopolist singularity of an AGI becomes self-aware and, in the manner of Skynet, exterminates humanity. Nor do we see a future in which some superintelligent zombie AGI decides to optimize for paper clip production and starves us by accident. Rather, we see a world of beneficial cooperation and competition between firms publishing papers and developing rival products in the pursuit of excellence. We predict a competitive multiplicity of AGIs with empathic functionality that are incrementally improved by a free market and that will brighten the light of human consciousness, not extinguish it.