The Many Faces of Scientific Fraud

Is every scientific article a fraud? This question may seem puzzling to those outside the scientific community. After all, anyone who took a philosophy course in college is likely to think of laboratory work as eminently rational. The assumption is that a researcher faced with an enigma posed by nature formulates a hypothesis, then conceives an experiment to test its validity. The archetypal presentation of articles in the life sciences follows this fine intellectual form: After explaining why a particular question could be asked (introduction) and describing how he or she intends to proceed to answer it (materials and methods), the researcher describes the content of the experiments (results), then interprets them (discussion).

This is more or less the outline followed by millions of scientific articles published every year throughout the world. It has the virtue of being clear and solid in its logic. It appears transparent and free of any presuppositions. However, as every researcher knows, it is pure falsehood. In reality, nothing takes place the way it is described in a scientific article. The experiments were carried out in a far more disordered manner, in stages far less logical than those related in the article.

If you look at it that way, a scientific article is a kind of trick. In a radio conversation broadcast by the BBC in 1963, the British scientist Peter Medawar, cowinner of the Nobel Prize in Physiology or Medicine in 1960, asked, “Is the scientific paper a fraud?” As was announced from the outset of the program, his answer was unhesitatingly positive. “The scientific paper in its orthodox form does embody a totally mistaken conception, even a travesty, of the nature of scientific thought.”

To demonstrate, Medawar begins by giving a caustically lucid description of scientific articles in the 1960s, one that happens to remain accurate to this day: “First, there is a section called ‘introduction’ in which you merely describe the general field in which your scientific talents are going to be exercised, followed by a section called ‘previous work’ in which you concede, more or less graciously, that others have dimly groped towards the fundamental truths that you are now about to expound.”

According to Medawar, the “methods” section is not problematic. However, he unleashes his delightfully witty eloquence on the “results” section: “[It] consists of a stream of factual information in which it is considered extremely bad form to discuss the significance of the results you are getting. You have to pretend firmly that your mind is, so to speak, a virgin receptacle, an empty vessel, for information which floods into it from the external world for no reason which you yourself have revealed.”

Was Medawar a curmudgeon? An excessively suspicious mind, overly partial to epistemology? Let’s hear what another Nobel laureate in physiology or medicine (1965), the Frenchman François Jacob, has to say. The voice he adopts in his autobiography is more literary than Medawar’s, but no less evocative:

Science is above all a world of ideas in motion. To write an account of research is to immobilize these ideas; to freeze them; it’s like describing a horse race from a snapshot. It also transforms the very nature of research; formalizes it. Writing substitutes a well-ordered train of concepts and experiments for a jumble of untidy efforts, of attempts born of a passion to understand. But also born of visions, dreams, unexpected connections, often childlike simplifications, and soundings directed at random, with no real idea of what they will turn up—in short, the disorder and agitation that animates a laboratory.

Following through with his assessment, Jacob comes to wonder whether the sacrosanct objectivity to which scientists claim to adhere might not be masking a permanent and seriously harmful reconstruction of the researcher’s work:

Still, as the work progresses, it is tempting to try to sort out which parts are due to luck and which to inspiration. But for a piece of work to be accepted, for a new way of thinking to be adopted, you have to purge the research of any emotional or irrational dross. Remove from it any whiff of the personal, any human odor. Embark on the high road that leads from stuttering youth to blooming maturity. Replace the real order of events and discoveries by what would have been the logical order, the order that should have been followed had the conclusion been known from the start. There is something of a ritual in the presentation of scientific results. A little like writing the history of war based only on official staff reports.

Any scientific article must be considered a reconstruction, an account, a clear and precise narrative, a good story. But the story is often too good, too logical, too coherent. In a way, all researchers are cooks, given that they cannot write a scientific article without arranging their data to present it in the most convincing, appealing way. The history of science is full of examples of researchers embellishing their experimental results to make them conform to simple, logical, coherent theory.

What could be simpler, for instance, than Gregor Mendel’s three laws on the inheritance of traits, which are among the rare laws found in biology? The life story of Mendel, the botanist monk of Brno, has often been told. High-school students learn that Mendel crossbred smooth-seeded peas and wrinkle-seeded peas. In the first generation, all the peas were smooth-seeded. The wrinkled trait seemed to have disappeared. Yet it reappeared in the second generation, in exactly one-quarter of the peas, through the crossbreeding of first-generation plants. After reflecting on these experiments, Mendel formalized the three rules in Experiments in Plant Hybridization (1865). These were later qualified as laws and now bear his name.

Largely ignored in his lifetime, Mendel’s work was rediscovered at the beginning of the twentieth century and is now considered the root of modern genetics. But this rediscovery was accompanied by a close rereading of his results. The British biologist and mathematician Ronald Fisher, after whom a famous statistical test is named, was one of Mendel’s most astute readers. In 1936, he calculated that Mendel had only seven chances in 100,000 of producing exactly one-quarter of wrinkle-seeded peas by crossbreeding generations. The 25–75 percent proportion is accurate, but given its probabilistic nature, it can only be observed in very large numbers of crossbreeds, far more than those described in Mendel’s dissertation, which only reports the use of ten plants, though these produced 5,475 smooth-seeded peas and 1,575 wrinkle-seeded peas. The obvious conclusion is that Mendel or one of his collaborators more or less consciously arranged the counts to conform to the rule that Mendel had probably intuited. This we can only speculate on, given that Mendel’s archives were not preserved.

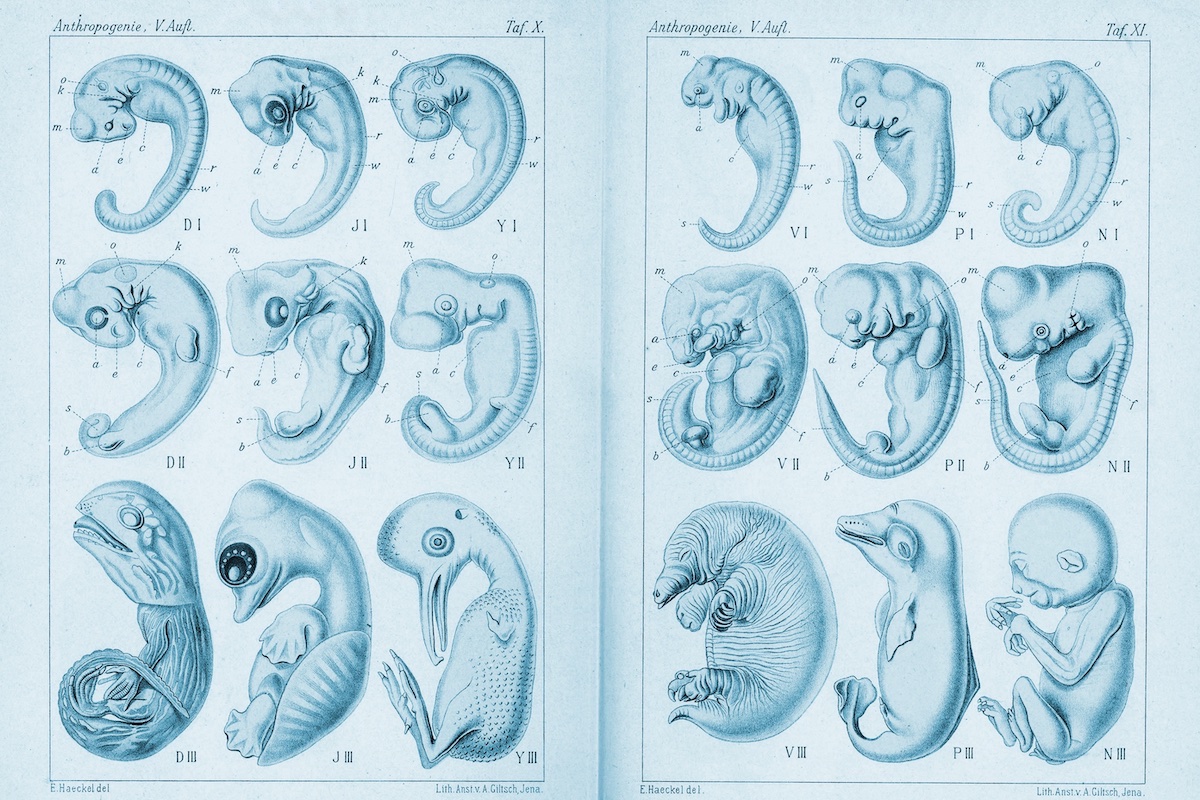

Unfortunately, one’s intuition is not always correct. In the second half of the nineteenth century, the German biologist Ernst Haeckel was convinced that, according to his famous maxim, “ontogeny recapitulates phylogeny”—in other words, that over the course of its embryonic development, an animal passes through different stages comparable to those of the previous species in its evolutionary lineage. In Anthropogenie oder Entwicklungsgeschichte des Menschen (1874), Haeckel published a plate of his drawings showing the three successive stages of the embryonic development of the fish, salamander, turtle, chicken, rabbit, pig, and human being. A single glance at the drawings reveals that the embryos are very similar at an early stage in development.

As soon as the book was published, these illustrations met with serious criticism from some of Haeckel’s colleagues and rival embryologists. Yet it would take a full century and the comparison of Haeckel’s drawings with photographs of embryos of the same species for it to become clear that the former were far closer to works of art than scientific observation. Today, we know that ontogeny does not recapitulate phylogeny, and that the highly talented artist Ernst Haeckel drew these plates of embryos to illustrate perfectly a theory to which he was deeply attached.

Another famous example of this propensity to fudge experimental results to make them more attractive and convincing comes from American physicist Robert A. Millikan, celebrated for being the first to measure the elementary electric charge carried by an electron. Millikan’s experimental setup consisted of spraying tiny drops of ionized oil between two electrodes on a charged capacitor, then measuring their velocity. Millikan observed that the value of the droplets’ charge was always a multiple of 1.592 × 10⁻¹⁹ coulombs, which was therefore the elementary electric charge. His work was recognized with the Nobel Prize in Physics in 1923.

This story is enlightening for two reasons. The first is that Millikan appears to have excluded a certain number of his experimental results that were too divergent to allow him to state that he had measured the elementary electric charge within a margin of error of 0.5 percent. His publication is based on the analysis of the movement of 58 drops of oil, while his lab notebooks reveal that he studied 175. Could the 58 drops be a random sample of the results of an experiment carried out over five months? Hardly, given that nearly all of the 58 measurements reported in the publication were taken during experiments conducted over only two months. The real level of uncertainty, as indicated by the complete experiments, was four times greater.

Millikan was not shy about filling his notebooks with highly subjective assessments of each experiment’s results (“Magnificent, definitely publish, splendid!” or, on the contrary, “Very low. Something is wrong”). This suggests that he was not exclusively relying on the experiment’s verdict to determine the electric charge of the electron.

The second is that we now know that the value Millikan obtained was rendered inaccurate by an erroneous value he used in his calculations to account for the viscosity of air slowing the drops’ movement. The exact value is 1.602 × 10⁻¹⁹ coulombs. But the most interesting part is how researchers arrived at this now well-established result. The physicist Richard Feynman has explained it in layman’s terms:

If you plot [the measurements of the charge of the electron] as a function of time, you find that one is a little bigger than Millikan’s, and the next one’s a little bigger than that, and the next one’s a little bigger than that, until finally they settle down to a number which is higher. Why didn’t they discover that the new number was higher from the beginning? It’s a thing that scientists are ashamed of—this history—because it’s apparent that people did things like this: When they got a number that was too high above Millikan’s, they thought something must be wrong—and they would look for and find a reason why something might be wrong. When they got a number closer to Millikan’s value, they didn’t look so hard. And so they eliminated the numbers that were too far off.

Everyday fudging of experimental data in laboratories cannot exclusively be explained by researchers’ desire to get an intuited result in a better-than-perfect form, as was the case with Mendel, or to distinguish themselves through the accuracy of their measurements, as with Millikan. It can also be due to the more or less unconscious need to confirm a result seen as established, especially if the person who initially discovered it is the recipient of a prestigious prize.

All these (bad) reasons for fudging data are as old as science itself and still exist today. The difference is that technological progress has made it increasingly simple—and therefore increasingly tempting—to obtain results that are easy to embellish.

In a fascinating investigation, the anthropologist Giulia Anichini reported on the way in which experimental data was turned into an article by a French neuroscience laboratory using magnetic resonance imaging (MRI). Her essay brings to light the extent to which “bricolage,” to borrow her term, is used by researchers to make their data more coherent than it actually is. Anichini makes clear that this bricolage, or patching up, does not amount to fraud, in that it affects not the nature of the data but only the way in which it is presented. But she also emphasizes that the dividing line between the two is not clear, since the bricolage that goes into adapting data “positions itself on the line between what is accepted and what is forbidden.”

Naturally, the lab’s articles never mention bricolage. According to Anichini, “any doubt about the right way to proceed, the inconsistent results, and the many tests applied to the images disappear, replaced by a linear report that only describes certain stages of the processes used. The facts are organized so that they provide a coherent picture; even if the data is not [coherent], and this has been observed, which implies a significant adaptation of the method to the results obtained.”

Anichini’s investigation is also of interest for revealing that researchers often have a poor grasp on using an MRI machine and depend on the engineers who know how to operate it to obtain their data. This growing distance between researchers and the instruments they use for experiments can be observed in numerous other areas of the life sciences, as well as in chemistry. It can be responsible for another kind of data beautification, which occurs when researchers do not truly understand how data was acquired and do not see why their bricolage is a problem. For example, Anichini quotes the amusing response of a laboratory engineer who accused the researcher he worked with of excessively cooking data: “I told him, you’re not even allowed to do that…Because if you take your data and test it a billion times with different methods, you eventually find something: you scrape the data, you shake it a little and see if something falls out.”

Cell biology provides another excellent example of the new possibilities that technological progress offers for cooking data. In this field, images often serve as proof. Researchers present beautiful microscopic snapshots, unveiling the secrets of cellular architecture. For the last decade, the permanent development of cells has also been shown thanks to films and videos. Spectacular and often very beautiful images are produced through extensive colorization. The Curie Institute, one of the most advanced French centers in the field, even published a coffee-table volume of cell images, the kind of book one buys at Christmas to admire the pictures without really worrying about what they represent or, especially, how they were obtained. Yet one would do well to take a closer look.

“Anyone who hasn’t spent hours on a confocal microscope doesn’t realize how easy it is to make a fluorescent image say exactly what you want,” says Nicole Zsurger, a pharmacologist with the Centre national de la recherche scientifique. Since digital photography replaced analog photography in laboratories in the 2000s, it has become extremely easy to tinker with images. To beautify them. Or falsify them. In the same way that the celebrities pictured in magazines never have wrinkles, biologists’ photos never seem to have the slightest flaw.

When he was appointed editor of the Journal of Cell Biology, one of the most respected publications in the field, in 2002, the American Mike Rossner decided to use a specially designed software program to screen all the manuscripts he received for photo retouching. Rossner has since stated that over eleven years he observed that one quarter of manuscripts submitted to the journal contained images that were in some way fudged, beautified or manipulated. These acts did not constitute actual fraud: According to Rossner, only one percent of the articles were rejected because the manipulations might mislead the reader. However, Rossner did ask the authors concerned to submit authentic images rather than the beautiful shots that grace the covers of cell biology journals.

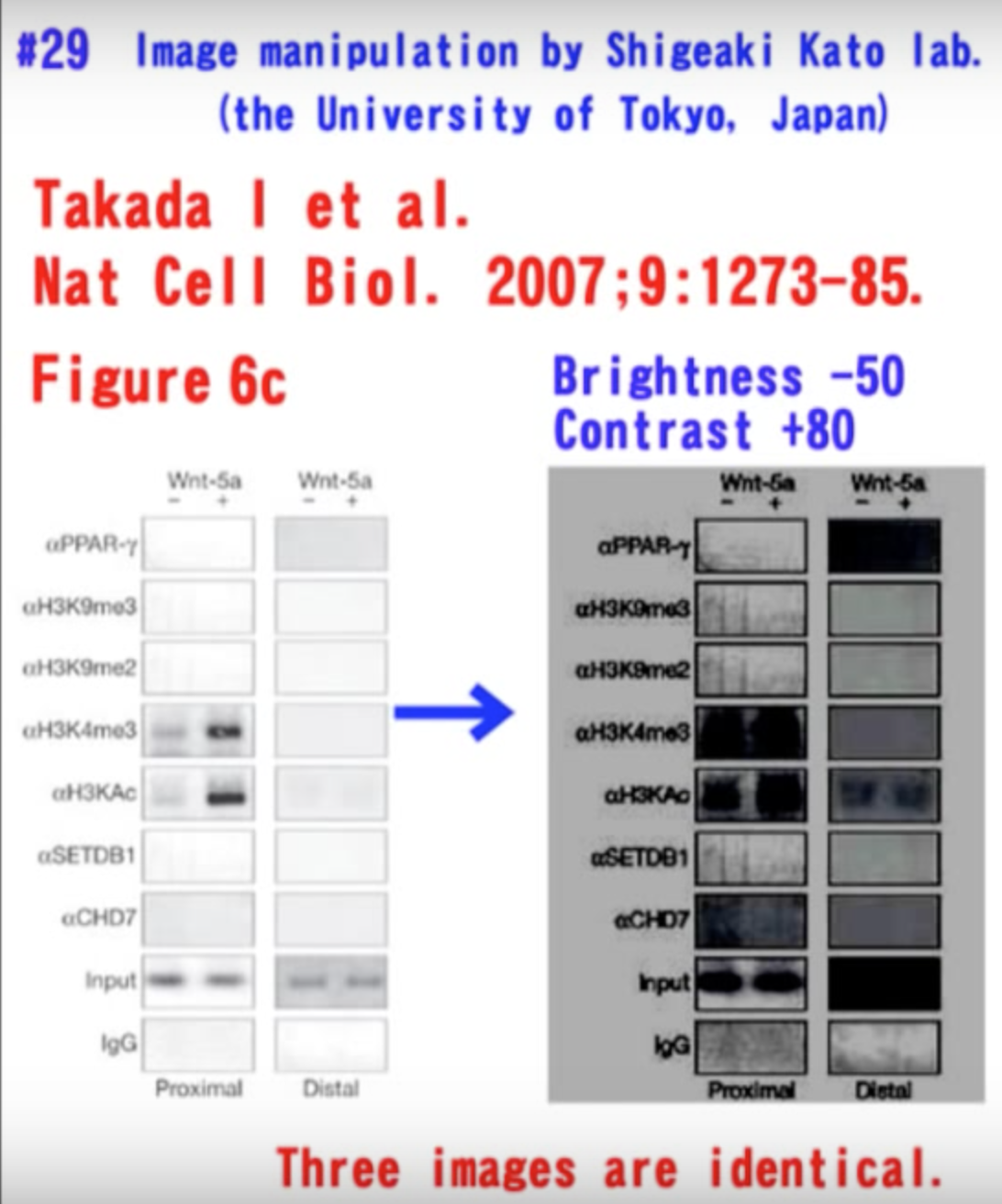

In January 2012, the mysterious Juuichi Jigen uploaded a video that serves as a genuine user’s guide to the falsification of cell biology data through image retouching. Dealing with twenty-four publications in the best journals, all originating from the institute headed by Shigeaki Kato at the University of Tokyo, this video shows how easy it is to manipulate images allegedly representing experimental results. It highlights numerous manipulations carried out for a single article in Cell. This video had the laudable effect of purging the scientific literature of some falsified data. And a few months after it was uploaded, Kato resigned from the University of Tokyo. Twenty-eight of his articles have since been retracted, several of which had been published in Nature Cell Biology—a publication that six years earlier had proclaimed how carefully it avoided manipulations by image-retouching software.

Excerpt adapted from Fraud in the Lab: The High Stakes of Scientific Research, by Nicolas Chevassus-au-Louis. Published by Harvard University Press. Copyright © 2019 by the President and Fellows of Harvard College. Used by permission. All rights reserved.