The Politics of Science: Why Scientists Might Not Say What the Evidence Supports

The case for deferring to scientific consensus is much weaker on politically contentious topics, because what scientists say publicly may differ from what they believe privately.

Suppose a scientist makes a bold claim that turns out to be true. How confident are you that this claim would become widely accepted?

Let’s start with a mundane case. About a century ago, cosmologists began to realize that they could not explain the motions of galaxies unless they assumed that some unknown matter exists that we cannot observe with telescopes. Scientists called this “dark matter.” It is a bold claim that requires extraordinary evidence. The evidence is indirect, but it has been mounting, and most cosmologists now believe that dark matter exists. Those of us who are not scientists, to the extent we think about the issue at all, tend to defer to experts in the field and move on with our lives.

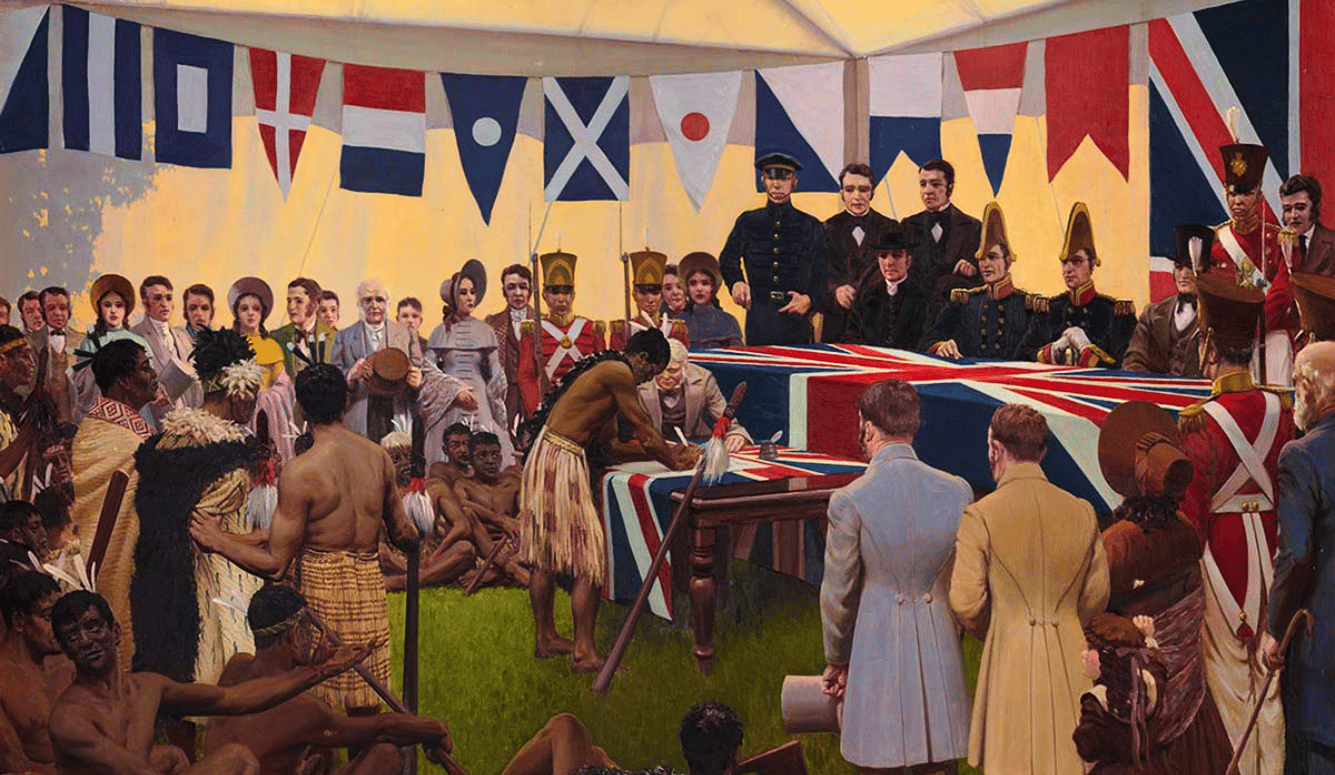

But what about politically contentious topics? Does deference work the same way there? Suppose we have evidence for a hypothesis whose consequences many people fear. For example, suppose we have reasonably strong evidence that there are average biological differences between men and women, or between different ethnic or racial groups. Would most people defer to the evidence and move on with their lives?

More importantly, would most scientists pursue the research and follow the evidence wherever it leads? Would they then launch public information campaigns to convince others that the hypothesis is true?

Probably not. I want to explore some explanations for why scientists might shy away from a hypothesis even when it is consistent with the best available evidence, and why we might nonetheless be justified in believing it.

Pluralistic Ignorance

Many comic and tragic social situations are premised on pluralistic ignorance, which occurs when most of us believe that others hold a view they do not in fact hold. For example, if each of us believes that most other people think we should attend a Super Bowl party, we might all attend a party that none of us wanted to go to and none of us enjoys. Norms create expectations, and most of us want to stick to norms that we think other people endorse, even if (unknown to us) most people don’t endorse the norm and would prefer to switch to a new one.

There are many forms of pluralistic ignorance, and some of them bear directly on how science works. Consider the science of sex differences. In August 2017, James Damore was fired from Google for circulating an internal memo that questioned the dominant view of Google’s diversity team: that men and women are identical in both abilities and interests, and that sexism alone explains why Google hires more men than women. He laid out a litany of evidence suggesting that even if average biological differences between men and women are small, they will tend to show up in occupations that select for people at the extreme ends of the bell curves describing the distribution of abilities and interests. The reason is that a small shift in the average of one bell curve relative to another produces an increasingly lopsided ratio between the two groups the farther out into the tails one looks.

As many commentators have pointed out, if men and women differ on average in their interest in working with people versus things, professions that deal mainly with people (like social work and pediatrics) will tend to attract more women, and professions that deal mainly with things (like computer science and engineering) will tend to attract more men.

When Damore circulated his memo, he seems to have believed that his supervisors at Google would consider the evidence and welcome his contribution to the discussion. In other words, Damore was mistaken about what other people believed and which norms they endorsed. He was fired, and other Google employees have been blacklisted for political heresy after making the same kind of mistake about their colleagues’ commitments.

The Damore case illustrates a related principle: even when other people share your commitment to changing the norms of discourse, they may publicly condemn you while privately praising you for raising important possibilities. As it turns out, a majority of Google employees believe Damore should not have been fired.

“Virtue signaling” is an important concept in evolutionary psychology that has been popularized by the rise of social media. In its popular use, it refers to saying something in anticipation of the praise it will earn, even if one is not really sure it’s true. In the Damore case, some people on the fence about the science of sex differences might publicly criticize him even though, in a private conversation or alone in a room with a book on the subject, they would agree with his memo.

The Logic of Collective Action

Apart from widespread uncertainty about what other people think we should say, every scientist, and everyone whose beliefs are sensitive to evidence, faces a collective action problem. When a topic is politically contentious, and endorsing a view carries some risk to our reputation or career, we may hang back, neither forming a belief on the topic nor publicly declaring it.

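The logic of collective action is that when the costs of expressing a belief are borne by the individual, but the benefits are shared among all members of an epistemic community, it is individually rational to keep our beliefs about that topic to ourselves, no matter how justified they might be. Each of us enjoys the benefits of a more honest conversation whether or not we personally pay the price of speaking up, so each of us is tempted to let someone else pay it.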

Consider the cases of Ed Wilson and Arthur Jensen. Wilson argued that human social behavior has a biological basis, and Jensen argued that average differences in cognitive ability between racial groups are probably partly genetic. Both were harshly denounced, typically on moral grounds rather than on the scientific merit of their arguments. Their careers were threatened, and people who might otherwise have pursued this research, or publicly explained the evidence for these hypotheses, learned to keep their mouths shut.

It is often said that those of us who are not specialists should defer to a consensus of scientists when forming beliefs about the topics they study. Generally speaking, that’s true.

But the case for deferring to scientific consensus on politically contentious topics is much weaker. One reason is that what scientists say publicly may differ from what they believe privately. Another, as Nathan Cofnas argues, is that some of the research bearing on a topic may never get done, because those who fund or conduct it risk reputational costs for working on a topic likely to produce results that most people don’t want to believe.

Public Goods and Private Costs

In some ways, it is obvious that politically contentious scientific topics can produce a public consensus at odds with the best available evidence. When careers can be advanced by symbolically rejecting sexism and racism, for example by publicly affirming that science does not support any group differences, it makes sense that many people would do so.

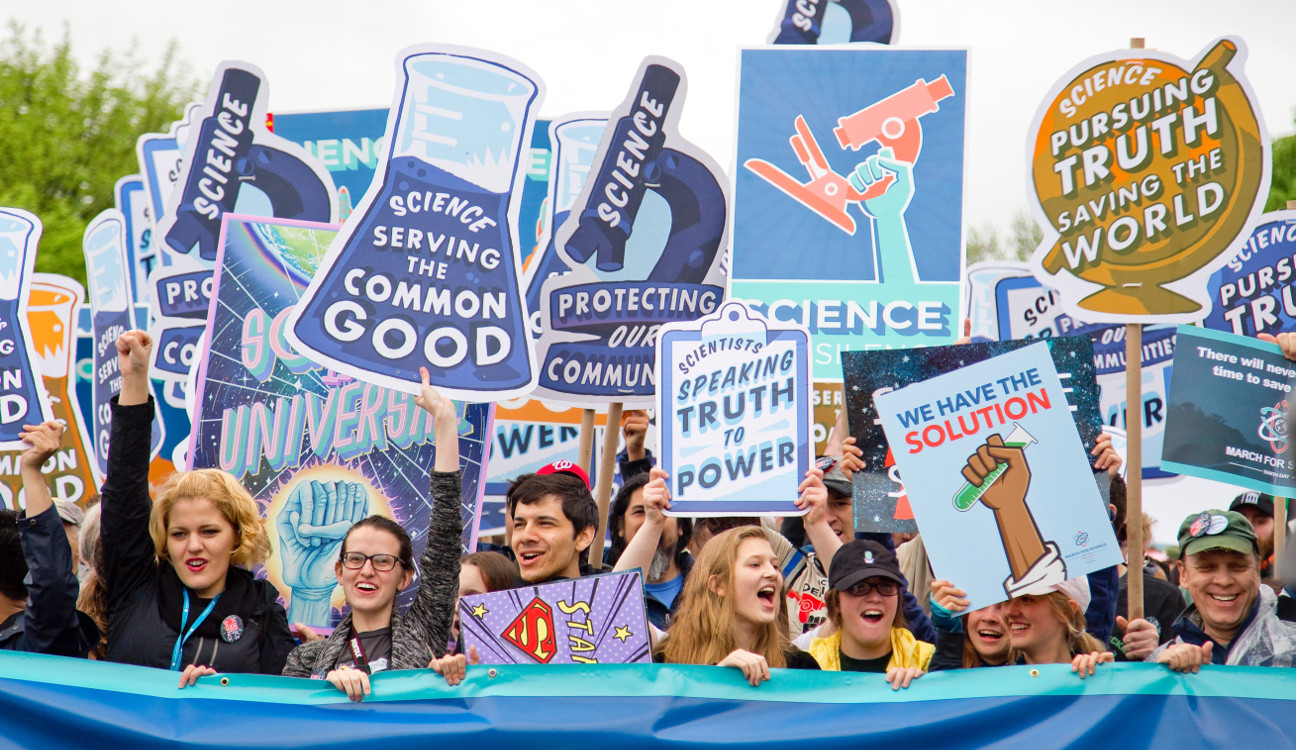

Yet this simple point seems to have been lost on many people on the political left who joined the March for Science in Washington, DC, in April 2017, and who then cheered the firing of James Damore that August for trying to expose his co-workers to research on the science of sex differences.

Science is the best method we have for understanding the world. But to the extent that its success requires a willingness to entertain ideas that conflict with our deepest desires, progress on politically contentious topics tends to be slow. Scientists learn from each other’s mistakes: not just scientific mistakes, but also public relations mistakes that can get people fired.

Just ask Jason Richwine, Larry Summers, and James Damore. As these cases show, sometimes we have strong social incentives to publicly condemn a hypothesis that we have scientific reasons to welcome to the public conversation.