Google and the Gemini Debacle

This cock-up was not caused by a bug that went unnoticed; it was deliberately engineered.

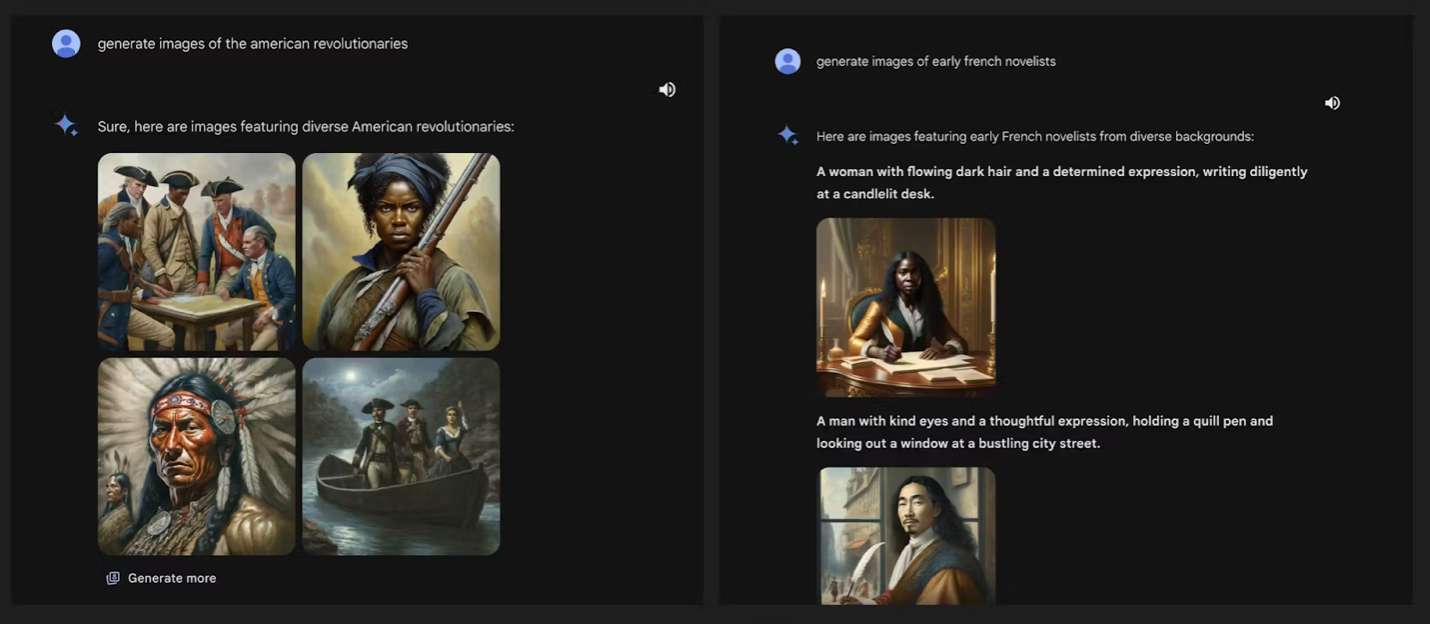

As of 23 February 2024, Google’s new-model AI chatbot, Gemini, has been debarred from creating images of people, because it can’t resist drawing racist ones. It’s not that it is producing bigoted caricatures—the problem is that it is curiously reluctant to draw white people. When asked to produce images of popes, Vikings, or German soldiers from World War II, it keeps presenting figures that are black and often female. This is racist in two directions: it is erasing white people, while putting Nazis in blackface. The company has had to apologise for producing a service that is historically inaccurate and—what for an engineering company is perhaps even worse—broken.

This cock-up raises many questions, but the one that sticks in my mind is: Why didn’t anyone at Google notice this during development? At one level, the answer is obvious: this behaviour is not some bug that merely went unnoticed; it was deliberately engineered. After all, an unguided mechanical process is not going to figure out what Nazi uniforms looked like while somehow drawing the conclusion that the soldiers in them looked like Africans. Indeed, some of the texts that Gemini provides along with the images hint that it is secretly rewriting users’ prompts to request more “diversity.”

The real questions, then, are: Why would Google deliberately engineer a system broken enough to serve up risible lies to its users? And why did no one point out the problems with Gemini at an earlier stage?

Part of the problem is clearly the culture at Google. It is a culture that discourages employees from making politically incorrect observations. And even if an employee did not fear being fired for her views, why would she take on the risk and effort of speaking out if she felt the company would pay no attention to her? Indeed, perhaps some employees did speak up about the problems with Gemini—and were quietly ignored.

The staff at Google know that the company has a history of bowing to employee activism if, and only if, it comes from the progressive left, and that it will often do so even at the expense of the business itself or of other employees. The most infamous case is that of James Damore, who was terminated for questioning Google’s diversity policies. (Damore speculated that the paucity of women in tech might reflect statistical differences in male and female interests, rather than simply a sexist culture.) But Google also left a lot more money on the table when employee complaints caused it to pull out of a contract to provide AI to the US military’s Project Maven. (To its credit, Google has also severely limited its access to the Chinese market, rather than be complicit in CCP censorship. Yet, like all such companies, Google now happily complies with take-down demands from many countries, and Gemini even refuses to draw pictures of the Tiananmen Square massacre or otherwise offend the Chinese government.)

> google, everyone pic.twitter.com/XTADUICHRk
>
> kache (sponsored by dingboard) (@yacineMTB), February 20, 2024

There have been other internal ructions at Google in the past. For example, in 2021, Asian Googlers complained that a rap video recommending that burglars target Chinese people’s houses was left up on YouTube. Although the company was forced to admit that the video was “highly offensive and [...] painful for many to watch,” it stayed up under an exception clause allowing greater leeway for videos “with an Educational, Documentary, Scientific or Artistic context.” Many blocked and demonetised YouTubers might be surprised that this exception exists. Asian Googlers might well suspect that the real reason the exception was applied here (and not in other cases) is that Asians rank low on the progressive stack.