
What Lies Beneath

In ‘The Hidden Spring,’ psychoanalyst Mark Solms offers a theory of consciousness and the causal mechanisms from which it arises.

A review of The Hidden Spring: A Journey to the Source of Consciousness by Mark Solms, 432 pages, Profile Books (January 2021)

In 1848, an American railway construction supervisor named Phineas Gage was seriously injured when a blasting accident drove an iron bar through his skull, destroying much of the left frontal lobe of his brain. Amazingly, Gage survived, but the formerly sober and dependable man became emotional, erratic, and unreliable. Nineteenth-century neurologists concluded that the frontal lobes had something to do with “sound judgment” in everyday life.

Over a century of neurological observations since then have given doctors a better understanding of which psychological functions break down when particular parts of the brain are damaged. The mystery of consciousness, however, remains. Why doesn’t all the neural information processing go on “in the dark” with no consciousness at all? The term “the hard problem of consciousness” was coined by Australian philosopher David Chalmers in 1995, and in The Hidden Spring, South African psychoanalyst Mark Solms offers a solution to this problem that is grounded in neuroscience and physics and expressible in mathematics and information theory.

Solms describes the hard problem of consciousness like this: “Why and how does the subjective quality of experience arise from objective neurophysiological events?” His answer is detailed yet elegant and succinct:

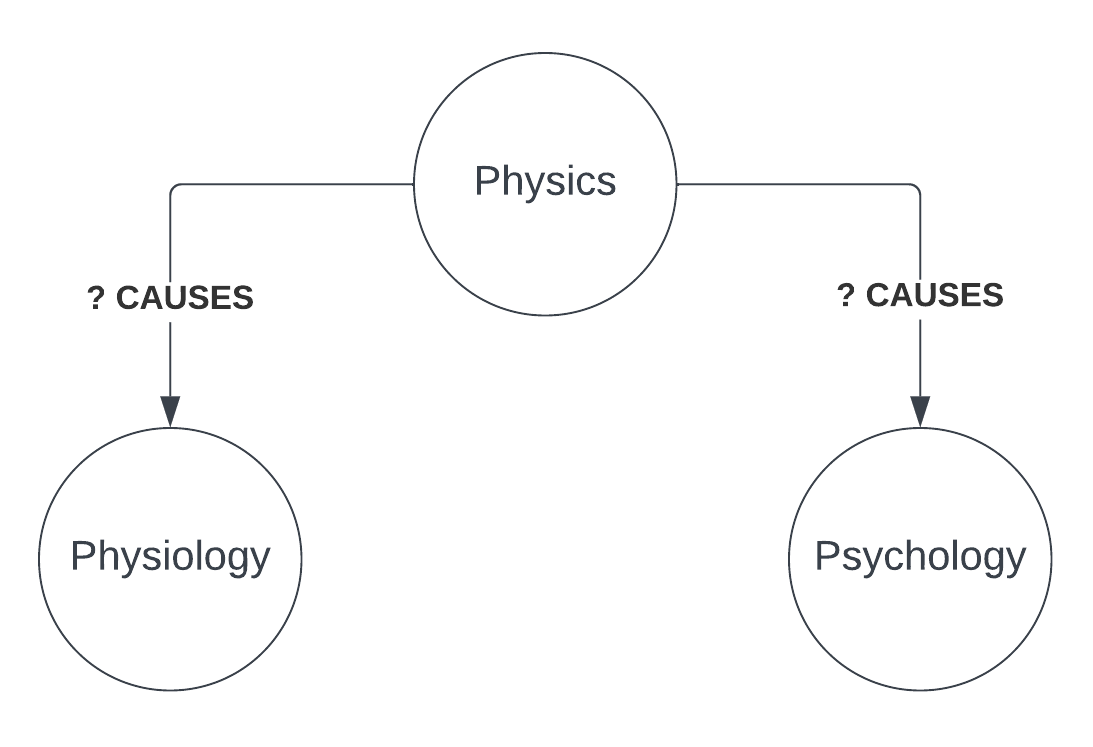

Objectivity and subjectivity are observational perspectives, not causes and effects. Neurophysiological events can no more produce psychological events than lightning can produce thunder. They are parallel manifestations of a single underlying process. The underlying cause of both lightning and thunder is electricity, the lawful mechanisms of which explain them both. Physiological and psychological phenomena can likewise be reduced to unitary causes but not to each other.

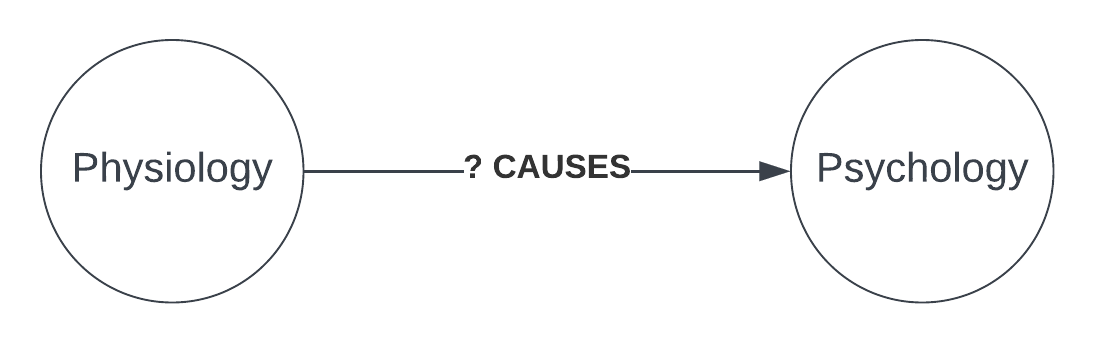

Put simply, Solms deals with Chalmers’s formulation of the hard problem by reframing the question. Chalmers frames the question as follows:

Solms reframes the question like this:

Solms argues that both physiology and psychology have a “unitary” cause, namely, the physics of self-organising systems engaged in “free energy minimisation” at the mid-scale (i.e., neither the subatomic quantum scale nor the cosmological scale of galaxies, stars, and black holes). Examples of such self-organising systems are life forms on Earth such as humans. Solms builds his solution to the hard problem of consciousness on the statistical physics of the “free energy principle” developed by Karl Friston. The essence of the idea, Solms explains, is simple:

All self-organising systems, including you and me, have one fundamental task in common, to keep existing. Friston believes that we achieve this task by minimising our free energy. Crystals, cells and brains, he says, are just increasingly complex manifestations of this basic self-preserving mechanism.

The main link between Friston’s and Solms’s work is homeostasis. Maintaining homeostasis is necessary for life forms to survive, and homeostats also feature prominently in arguments about machine consciousness, where they are often used as examples of the simplest possible robots. As Solms explains it, every homeostat has three components: a receptor that measures something (e.g., temperature), a control centre that determines how to keep that quantity within viable bounds, and an effector that returns it to within viable bounds when they are exceeded. Roboticists typically use terms like sensors, cognition, and actuators instead of receptors, control centres, and effectors. Regardless of the terminology, you have a “sense, think, act” cycle that causes and explains behaviour.

To illustrate, fridges and air-conditioners have homeostats: a thermometer (a sensor or receptor that measures temperature), a chip (“cognition” or a control centre) that processes this data and decides to turn the cooler on or off, and a cooler that returns the temperature to the set bounds (an actuator or effector). While they are homeostats, fridges and air-conditioners are not alive and have no ability to self-organise. They are simple, manufactured items designed to keep humans happy. They are not things that have evolved out of the “primordial soup” to stay alive.
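The fridge example can be sketched in a few lines of Python. This is only a toy illustration of the “sense, think, act” cycle, not anything taken from Solms’s book: the class name, the temperature band, and the heating and cooling rates are all assumptions chosen for convenience.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Homeostat:
    """Toy fridge controller: receptor -> control centre -> effector."""
    low: float = 2.0        # lower bound of the viable band, deg C (assumed)
    high: float = 5.0       # upper bound of the viable band, deg C (assumed)
    cooler_on: bool = False

    def sense(self, temperature: float) -> float:
        # Receptor / sensor: a perfect thermometer in this sketch.
        return temperature

    def decide(self, reading: float) -> bool:
        # Control centre / "cognition": switch on above the band,
        # off below it, otherwise keep the current setting.
        if reading > self.high:
            return True
        if reading < self.low:
            return False
        return self.cooler_on

    def act(self, temperature: float) -> float:
        # Effector / actuator: the cooler removes heat when it is on;
        # otherwise warmth leaks in from outside (rates are assumed).
        self.cooler_on = self.decide(self.sense(temperature))
        return temperature + (-0.5 if self.cooler_on else 0.25)


def run(start: float, steps: int) -> List[float]:
    """Run the sense-think-act cycle and record the temperature history."""
    fridge = Homeostat()
    temps = [start]
    for _ in range(steps):
        temps.append(fridge.act(temps[-1]))
    return temps


if __name__ == "__main__":
    print(run(8.0, 40))
```

Starting from a warm 8 degrees, the temperature falls into the band and then oscillates around it indefinitely. Nothing in the loop is alive or self-organising, which is exactly the point of the contrast with evolved organisms.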

Related to the hard problem of consciousness is an answer to the question: How can we explain evolution from the primordial soup of brainless chemicals in the pre-Cambrian to conscious humans today? Solms’s theory of consciousness was originally published in a 2018 paper, which he co-authored with Friston, titled, “How and Why Consciousness Arises: Some Considerations From Physics and Physiology.” It is based on the mathematics of a statistical physical model of “primordial soup” that Friston had presented in his 2013 article, ‘Life As We Know It.’ As Friston had explained in that paper: “[L]ife—or biological self-organization—is an inevitable and emergent property of any (ergodic) random dynamical system that possesses a Markov blanket.”

In this context, “ergodic” roughly means that the system keeps returning to a limited set of states over time. The notion of the “Markov blanket” is critical to explaining how life on Earth emerges from the primordial soup of the pre-Cambrian. The essence of homeostasis is that living organisms must occupy a limited range of states to stay alive. This is what you might call the Goldilocks zone: not too hot, not too cold, just right. This principle applies to a variety of measures such as ambient temperature, ambient pressure, gas concentrations (e.g., not too much carbon dioxide, enough oxygen), and various others. We need a certain amount of food, a certain amount of drink. We need to avoid predators, find prey, and if our species is to survive, find mates.

“Affect” is a neuroscience term that basically means emotion or feeling. Solms argues that its primary function is to keep us within viable ranges on a wide variety of measures. The main enemy of homeostasis is entropy, which, according to the Second Law of Thermodynamics, tends to increase in any isolated system. The laws of entropy, he says, are “what makes ice melt, batteries lose their charge and billiard balls come to a halt.” Entropy, one might say, is the enemy of life. To stay alive, we must battle the disorder, dissipation, and dissolution of entropy and maintain biological order within our bodies.

Entropy can also be understood in terms of information. Solms refers to the work of Claude Shannon, who described entropy in informational terms. To illustrate, suppose I take a scuba tank of air 30 metres down to the bottom of the sea. In the tank, the air is compressed, typically to 200 bar, which is roughly 200 times atmospheric pressure at sea level. If my tank holds 10 litres, at 200 bar I can squeeze about 2,000 litres of surface-pressure air into it. In information terms, when the air is in the tank, the air molecules are confined to a small, known volume. If I release them from the tank, the difference in pressure propels the air out and the molecules disperse. Because air is less dense than water, the air bubbles rise to the surface, and as the water pressure decreases they expand. So after a few seconds, I need a greater range of spatial data to express where the air is: some for the tank and some for the various bubbles. During this process, some molecules will dissolve and be carried away by currents in the water, but most will rise to the surface and diffuse into the atmosphere. After 10 minutes, if I am tracking where the air that was in the tank has gone, I will need far more spatial data to describe it. Thus, physical entropy can be measured in terms of information entropy, so to speak.
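The tank arithmetic follows from Boyle’s law for an ideal gas at constant temperature. Here is a minimal sketch, assuming ideal-gas behaviour (real air at 200 bar deviates somewhat) and the diver’s rule of thumb of roughly one extra bar of pressure per 10 metres of sea water. The function names are illustrative.

```python
def ambient_pressure_bar(depth_m: float) -> float:
    """Approximate absolute pressure at depth: 1 bar of atmosphere
    plus about 1 bar per 10 m of sea water (rule of thumb)."""
    return 1.0 + depth_m / 10.0


def free_air_volume_litres(tank_litres: float, fill_bar: float,
                           ambient_bar: float = 1.0) -> float:
    """Boyle's law, P1 * V1 = P2 * V2: the volume the tank's air
    would occupy once released to the surrounding pressure."""
    return tank_litres * fill_bar / ambient_bar


if __name__ == "__main__":
    at_surface = free_air_volume_litres(10, 200)
    at_depth = free_air_volume_litres(10, 200, ambient_pressure_bar(30))
    print(at_surface, at_depth)
```

Under these assumptions a 10-litre tank at 200 bar holds 2,000 litres of air measured at the surface, but the same air occupies only about 500 litres at 30 metres, which is why the bubbles expand as they rise.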

One can think of this data in terms of yes/no questions such as these: Is molecule A in the tank? Or in a bubble 30 metres down? Or in a bubble 20 metres down? Or dissolved in water 10 metres down? The fewer yes/no questions needed to describe the set, the lower the entropy in information terms and the higher the potential for certainty. Ultimately, such statements have a probabilistic dimension. If the tank tap is not open, the probability the air is in the tank is 1 (certain). If the tank tap is open, the probability a given air molecule is in the tank after 10 minutes is close to 0 because the tank will have filled with water. Perhaps some molecules will have dissolved in the water filling the tank but the vast majority will be outside the tank.
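Shannon’s measure makes the “number of yes/no questions” idea precise: entropy in bits is the average number of such questions needed to pin down an outcome. The sketch below uses the standard formula; the probabilities assigned to the molecule’s possible locations are invented purely for illustration.

```python
import math
from typing import Sequence


def entropy_bits(probs: Sequence[float]) -> float:
    """Shannon entropy H = -sum(p * log2(p)), in bits.
    Outcomes with probability 0 contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


# Tap closed: the molecule is certainly in the tank.
tap_closed = [1.0]

# Tap open, a little later: four equally likely locations
# (tank, deep bubble, shallow bubble, dissolved). Values are assumed.
tap_open = [0.25, 0.25, 0.25, 0.25]

if __name__ == "__main__":
    print(entropy_bits(tap_closed))  # no questions needed
    print(entropy_bits(tap_open))    # two yes/no questions needed
```

With the tap closed the entropy is zero bits, since no question needs asking. With four equally likely locations it is two bits, corresponding to two well-chosen yes/no questions. The more places the air could be, the more bits are needed.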

The more data needed to describe the internal states of a homeostatic system, the more entropic it is. What the self-organising body aims to do is maximise certainty about its internal homeostatic states. Bayesian laws of probability apply both to gases and psychology. The more scattered the gas, the less predictable the location of molecules. One can apply the same entropic concepts to psychological decision-making processes, but as Solms explains, we need to add the concept of information to the mix. The communication of information requires a source and a receiver. The world as we perceive it results from the need of the body to acquire high-confidence information about the environment around it and its own internal states.

“Shannon’s discovery of information as entropy,” Solms explains, “led the physicist John Wheeler to propose a ‘participatory’ interpretation of the universe.” As Solms explains Wheeler: “Phenomena exist only in the eye of the beholder, a participant-observer, a question-asker.” Our sensors ping reality because they have an agenda of homeostasis. As Wheeler puts it: “That which we call reality arises in the last analysis from the posing of yes/no questions and the registering of equipment-invoked responses; in short … all things physical are information-theoretic in origin.”

Following on from Shannon and Wheeler, Solms turns to Norbert Wiener, who introduced the notion of “feedback” to the study of information. According to Wiener, a system can achieve its goal by receiving feedback about the consequences of its actions. As an aside, this early “cybernetic” theory led to the development of computer programs to play checkers and laid the foundations for what we today call reinforcement learning, a branch of machine learning in artificial intelligence. However, the relevance for evolving animals is that “we receive information about our likely survival by asking questions (i.e. taking measurements) about our biological state in response to unfolding events.”

The key thing is to give a physical explanation for where this question-asking comes from. This is where the Markov blanket comes in. The Markov blanket is a statistical concept. It divides states of a self-organising system into “internal” and “external.” There is a “system” and a “not system” arranged in such a way that the internal states are insulated by the blanket from those external to the system. Put another way, the internal states can only sense the external states as states of the blanket. The blanket itself is partitioned into “sensory” states that are causally dependent on external states and “active” states that are not. These four types of state create a circular causality: “The external states influence the internal ones via the sensory states of the blanket, while the internal states couple back to the external ones through its active states.”
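The circular causality in that quotation can be drawn as a small directed graph. The sketch below is a deliberate simplification for illustration: it encodes only the loop described in the quotation (Friston’s full formulation allows further couplings within the blanket), and the dictionary and function names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional

# Who directly influences whom in the simplified loop (an assumption
# based on the quotation, not Friston's complete model).
INFLUENCES: Dict[str, List[str]] = {
    "external": ["sensory"],
    "sensory": ["internal"],
    "internal": ["active"],
    "active": ["external"],
}


def has_direct_link(graph: Dict[str, List[str]], a: str, b: str) -> bool:
    """True if a influences b or b influences a with nothing in between."""
    return b in graph.get(a, []) or a in graph.get(b, [])


def shortest_path(graph: Dict[str, List[str]], start: str,
                  goal: str) -> Optional[List[str]]:
    """Breadth-first search along the arrows of influence."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        for nxt in graph.get(path[-1], []):
            if nxt == goal:
                return path + [nxt]
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


if __name__ == "__main__":
    print(has_direct_link(INFLUENCES, "internal", "external"))
    print(shortest_path(INFLUENCES, "external", "internal"))
    print(shortest_path(INFLUENCES, "internal", "external"))
```

In this picture there is no direct link between internal and external states. The world reaches the inside only through sensory states, and the inside reaches the world only through active states, which is the sense in which the blanket insulates the system.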

So, the sensory and active states of a Markov blanket correspond to the receptors and effectors of a self-organising system. However, there are other properties of Markov blankets. Over time they exhibit “active inference,” which means the internal states produce a probabilistic representation of external states. This is the kernel of “subjectivity” and the “self.” Markov blankets are also autopoietic (self-preserving). Active states will appear to maintain the structural and functional integrity of biological states.

To sum up: biological systems are ergodic (i.e., they must remain in a limited range of states otherwise they will die). They have a Markov blanket. They display active inference and are self-preserving. What this means in practical terms is that “any ergodic random dynamical system that possesses a Markov blanket will appear to actively maintain its structural and dynamical integrity.” Critically, Friston presents this thesis as a mathematical lemma and offers a heuristic proof for it. He shows why such systems can appear to have “intentions.” The mathematics Friston offers is challenging but his “free energy principle” provides the foundation for a theory that “makes consciousness part of the ordinary causal matrix of the universe.”

In The Hidden Spring, Solms does not dive too deeply into Friston’s mathematics. Instead, he summarises its implications, describing Friston’s free energy as a quantifiable measure of the gap between the way the world is modelled by a system and the way the world really behaves. The operative principle is not so much “I think, therefore I am” but “I survive, therefore my model is viable.”
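For readers who want a feel for the quantity involved, here is a minimal sketch of variational free energy in its standard textbook form for a two-state world. It is not the formulation used in the book or in Friston’s papers, and the scenario (a rustle in the grass that may or may not mean a predator) and all the numbers are invented for illustration.

```python
import math
from typing import List, Sequence, Tuple

# Hidden states: [predator nearby, no predator]. All values are assumed.
PRIOR = [0.1, 0.9]        # the system's prior beliefs p(s)
LIKELIHOOD = [0.8, 0.2]   # p(rustle | s) for the rustle actually observed


def posterior(prior: Sequence[float],
              likelihood: Sequence[float]) -> Tuple[List[float], float]:
    """Exact Bayesian update: returns p(s | obs) and the evidence p(obs)."""
    joint = [p * l for p, l in zip(prior, likelihood)]
    evidence = sum(joint)
    return [j / evidence for j in joint], evidence


def free_energy(q: Sequence[float], prior: Sequence[float],
                likelihood: Sequence[float]) -> float:
    """F = sum_s q(s) * (ln q(s) - ln p(obs, s)).
    Equivalently: KL(q || posterior) + surprisal of the observation."""
    total = 0.0
    for q_s, p_s, lik in zip(q, prior, likelihood):
        if q_s > 0:
            total += q_s * (math.log(q_s) - math.log(p_s * lik))
    return total


if __name__ == "__main__":
    post, evidence = posterior(PRIOR, LIKELIHOOD)
    print("surprisal:", -math.log(evidence))
    print("F with stale beliefs:", free_energy(PRIOR, PRIOR, LIKELIHOOD))
    print("F with updated beliefs:", free_energy(post, PRIOR, LIKELIHOOD))
```

Free energy is higher when the system clings to its stale beliefs and falls to its minimum, the surprisal of the observation, once beliefs match the true posterior. In this toy sense, minimising free energy means closing the gap between the model and the world.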

This notion leads to a very different idea of consciousness from Descartes’s reason-centric version, which set up the puzzling dualism of “mind” and “matter” in the first place. Solms is at pains to stress that consciousness is neither “intelligence” nor “rationality.” He spends a considerable amount of time debunking what he calls the “cortical fallacy”: the view, argued by some neuroscientists, that the “seat of consciousness” is in the cortex. Recent neuroscience has shed very precise light on where that seat actually is. As Solms points out, damage to just two cubic millimetres of the upper brainstem will “obliterate all consciousness.” By contrast, humans with severe damage or impairment to the cortex, such as children with hydranencephaly (i.e., born without a cortex), and animals whose cortexes have been surgically removed still exhibit consciousness. The foundational form of consciousness, Solms holds, is affect. The key function of affect is to tell us how well or badly we are doing in life.

Solms is very clear on this. “Feeling is Being,” he says. To elaborate, consciousness is fundamentally about feeling. Feeling is the result of arousal. Arousal results from “surprisal,” which often signals a threat to homeostasis (e.g., the sudden appearance of a predator) or an opportunity (e.g., the sudden appearance of prey). As far as the history of animal brains is concerned, affect has evolved to serve homeostasis. Phylogenetically, affect is very old. Mostly it sits in the brainstem and other components of the human brain that also appear in simpler animals. The more recently evolved human cortex is associated with specifically human developments such as language. However, affect, Solms argues, permeates this more recent functionality. We tend not to perceive things we do not feel anything about. In everyday language, we don’t “notice” them. Solms argues that much cognitive processing in the brain does go on “in the dark” or unconsciously. Feeling, however, does not. Feeling is always present in consciousness. Our default drive, when homeostasis is at its “settling point” (i.e., “all good”), is SEEKING.

Solms follows Jaak Panksepp in capitalising “basic emotions” to distinguish them from common usage. Panksepp reproduced seven basic emotions using deep brain stimulation in hundreds of species: not just primates but other mammals as well. In response to the accusation that he was being anthropomorphic to animals, Panksepp replied he would rather plead guilty to being zoomorphic to humans. SEEKING—which manifests itself as foraging, reconnaissance, and curiosity—is the default basic emotion. The other six are LUST, RAGE, FEAR, PANIC/GRIEF, CARE, and PLAY.

Neuroscientists today have a much greater understanding of what parts of the brain do what, thanks largely to fMRI scanning technology (to which Friston has been a major contributor). Solms argues that consciousness is affect and the reason affect exists is to enable us to decide what to do next. If we are tired, we must sleep. If we are hungry, we must eat. If we are thirsty, we must drink. What we do next depends on environmental context. Our needs must be prioritised by prevailing external conditions. However, our multiple needs cannot be reduced to a single common denominator (e.g., pleasure and pain as in classical utilitarianism). We cannot drink instead of eat. We have to do both. In the wild, this might entail different foraging routes. If we are lions, we might have to decide between heading north to the river for a drink or south to the zebra herd for a feed. We cannot say 3/10 of hunger and 1/10 of thirst equals 4/10 of total need. Each need must be met in its own very biologically specific way.

This explains why affect is qualitatively different. Distinct affects are linked to needs that are met in different ways. Hunger does not feel the same as thirst. But both feel painful. The level of pain helps us prioritise which need must be met first. All affect has a quantitative element of pleasure and pain but each affect is qualitatively different. As Solms sums up: “affects are always subjective, valenced and qualitative.” To expand, feelings or emotions (affects) occur on the “inner” (subjective) side of the Markov blanket. They are good or bad (valenced) and have distinct qualities relating to distinct needs that need distinct actions to meet.
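The logic of prioritising distinct needs, rather than summing them into one score, can be sketched as follows. This is a toy illustration of the argument as summarised here, not a model from the book: the need names, the actions, and the scoring scheme are assumptions.

```python
from typing import Dict

# Each need is met by its own specific action (illustrative mapping).
ACTIONS: Dict[str, str] = {
    "hunger": "head south to the zebra herd",
    "thirst": "head north to the river",
    "fatigue": "sleep",
}


def next_action(deviations: Dict[str, float]) -> str:
    """Pick the action for the most pressing need.

    `deviations` maps each need to how far it sits from its settling
    point (0 means satisfied). The needs are compared, never added:
    3/10 hunger plus 1/10 thirst is not 4/10 of some generic need.
    """
    pressing = {need: d for need, d in deviations.items() if d > 0}
    if not pressing:
        # No homeostatic need is demanding attention: default drive.
        return "SEEKING"
    most_urgent = max(pressing, key=pressing.get)
    return ACTIONS[most_urgent]


if __name__ == "__main__":
    print(next_action({"hunger": 0.3, "thirst": 0.1}))
    print(next_action({"hunger": 0.0, "thirst": 0.0}))
```

The lion with 3/10 hunger and 1/10 thirst heads for the zebras first and the river later. When every need is at its settling point, the function falls back to SEEKING.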

Friston’s mathematics also shows that the Markov blanket is Bayesian (that is to say, probabilistic) in its model calculations. Perception and its links to consciousness are driven by the never-ending quest to maintain the viability of the model. The core of consciousness, however, is not perception but affect. It is affect, driven by homeostasis and by the need for certainty about the environment in order to maintain homeostasis, that drives the mechanisms of arousal, which make us focus on certain things and not others at any given moment. Arousal is driven by “errors” or deviations (e.g., “surprises”) from the predictions of the homeostatic model.

The “selfhood” of self-organising systems wrapped in a Markov blanket gives them a point of view. This is why it makes sense to speak of the “subjectivity” of such systems. Solms says: “deviations from their viable states are registered by the system, for the system, as needs.” It is affect that “hedonically valences biological needs so that increasing and decreasing deviations … are felt as unpleasure and pleasure respectively.”

Affective valence (the technical term for our feelings about what is “good” and “bad”) enables us to learn from experience which is a great evolutionary advantage. “Feeling by an organism of fluctuations in its own needs enables choice and thereby supports survival in unpredicted contexts. This is the biological function of experience.”

Affect has a critical role in prioritising needs and thus prioritising action. Actions generated by prioritised affects are voluntary and driven by environmental context. They are subject to “here-and-now” choices rather than pre-established algorithms. These choices are felt in exteroceptive consciousness, which contextualises affect. The choices are made on the basis of signals rendered salient by prioritised need. In the absence of any pressing homeostatic need, we default to SEEKING, “proactive engagement with uncertainty, with the aim of resolving it in advance.” When prioritised this is felt as curiosity and interest in the world.

I hope to have given a clear, accessible summary of what Solms considers to be the causal mechanisms of consciousness and how these functions are ultimately reducible to natural laws. Overall, I find Solms’s views insightful, well-grounded in evidence, and plausible. Solms holds that consciousness is a part of nature and that it is mathematically tractable. There can be a science of consciousness based on physics, as Freud envisaged in his early (abandoned) work. Indeed, Solms argues that if the free-energy theory of consciousness is correct, it would, in principle, be possible to build an artificial consciousness, though he is (quite rightly) very cautious on this point as it raises a myriad of ethical and verification issues.

Regarding current concerns about superintelligent AI becoming conscious and deciding to exterminate us: if it is true, as Solms claims, that consciousness is affect, and if affect as we know it requires carbon-based organic neurochemicals such as oxytocin (joy) and cortisol (fear) to function, then it would not be possible to implement affect in silicon-based computer architectures. On that reading, there is no danger of LLMs like ChatGPT spontaneously evolving into Skynet, becoming self-aware, and implementing a malevolent screenplay scenario of AI doom motivated by RAGE.

Overall, The Hidden Spring is fluently written and engaging. It has dense parts (which I have tried to summarise clearly here), but in the main, Solms provides explanations in words that summarise the mathematics rather than formulae. While difficult in parts, his book is well worth the effort. One could hardly expect a solution to the hard problem of consciousness to be an easy read, but Solms enlivens the narrative with vivid anecdotes and descriptions. I found his description of Freud’s little-known early work enthralling, and his story of his motivation for getting into neuroscience is moving.

It is worth noting that Solms’s views are not accepted by all neuroscientists. However, his book has been favourably reviewed in academic outlets by eminent figures such as Thomas Nagel and Daniel Dennett. Even reviewers disputing Solms’s views say it is worth reading. I unreservedly recommend it to anyone interested in the subject of consciousness, be it human or artificial.