AI: Let’s Worry About the Right Things

There are valid concerns and there are unfounded fears. Let us separate the two.

There is a moment in Spike Jonze’s 2013 sci-fi Her when lovestruck Theo finally asks his AI partner Samantha if she is involved in any other relationships. She replies sweetly that she is talking with 8,316 other people and is in love with 641 of them. The film never gets around to exploring what an AI means when it says it is “in love.” But the audience knows how Theo feels.

Until recently, machines worked passively alongside humans, predictably enacting our inventiveness. Today, companion technologies listen to people, watch over people, respond to people, and act independently of people, while operating outside and beyond the knowledge or comprehension of most. Questioning how humans want to relate to Artificial Intelligence (AI) in the coming decades need not be muddled together with fantastical catastrophising about machine consciousness or human obsolescence.

There are those—like documentary-maker James Barrat—who believe that once AI starts designing AI, “Machines will take care of what little remains to be done,” opening a Pandora’s box. But this attitude oddly avoids investigating how humans can help shape the changes being ushered in under human control. At the other end of the spectrum are idealists who sing utopian dreams of a future in which human-like AIs have solved all of our problems and created a new era of human flourishing. The debate reminds me of those ancient maps with drawings of fantastical humanoid creatures in those parts of the globe yet to be reached.

There is no need to indulge in either blind idealism or catastrophic narratives about the likely development of AI, and much unnecessary harm is caused by doing so. Instead, as the world’s leading tech companies—including Google, Amazon, and Microsoft—compete with European and Asian counterparts to design and bring to market ever-more advanced AI and robotics, the direction and purpose of design and development need to become the focus of policy for governments, business, and our shared culture as we navigate the 21st century.

How humans interact with AI, and how they want to do so in the future, should be the central considerations. This is, at least for now, entirely a matter for people to decide and shape. By “people,” I mean not only designers and programmers, but also users, consumers, businesses, medical and mental health professionals, educators, and parents. People are only passive recipients of technology if they choose to be. It is imperative that people use their voices as AI becomes a change-maker.

But first we need to eradicate the weeds of fear. Historically, we have created myths about how human creative arrogance and an insatiable appetite for advancement lead to self-destruction. Mary Shelley’s Frankenstein heads a long procession of imaginative texts that assume unchecked human ingenuity, mixed with science, inevitably leads to the creation of “monsters.” Shelley expressed a sentiment prevalent in her time: that when short-sighted humans believe themselves capable of god-like creation in their own image, the result is abomination, destruction, and tragic remorse.

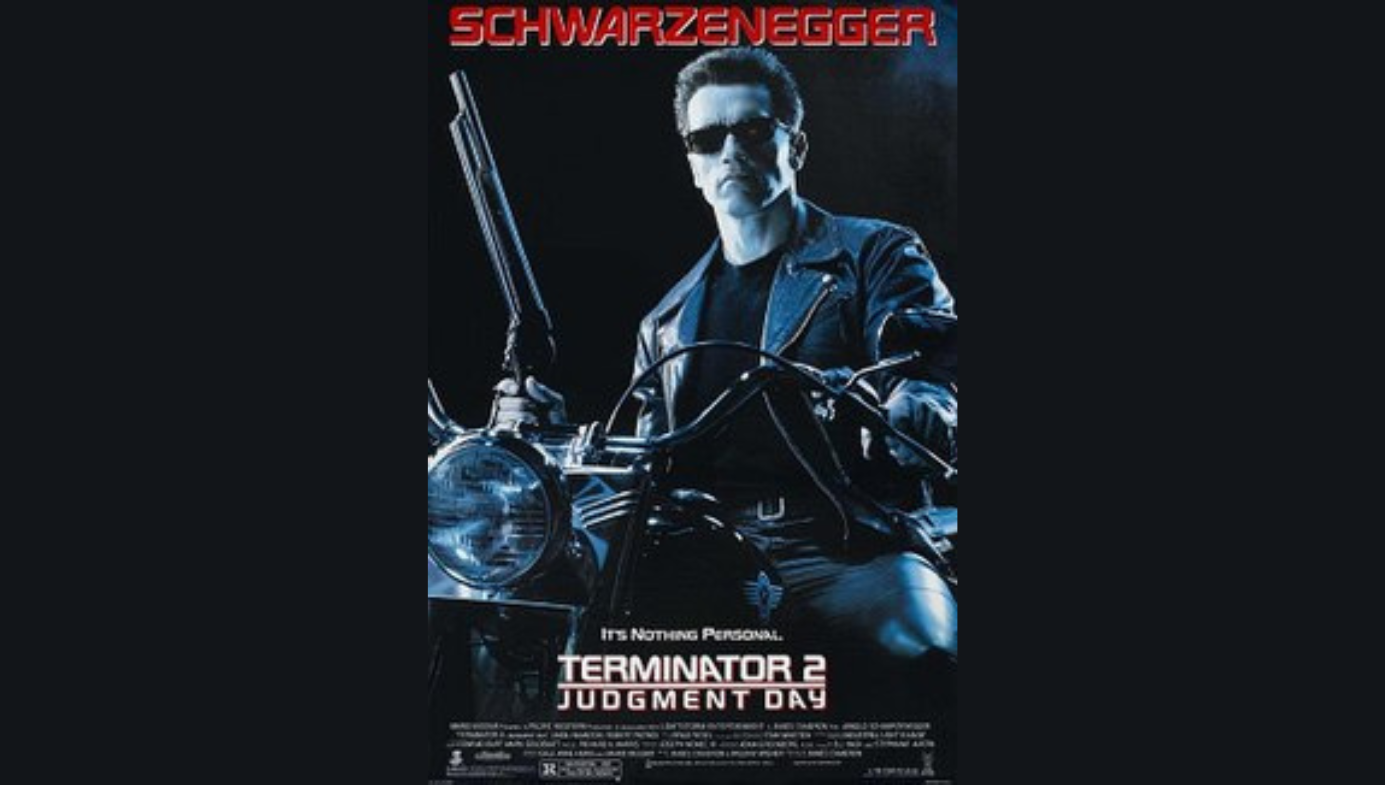

The underlying premise of the Terminator franchise is that technological development has led to the creation of a super-computer system (an AI called Skynet), which gained self-awareness, a development unanticipated by humans. Linked to computers around the world, Skynet recognised humans as the planet’s greatest problem and launched a nuclear assault to destroy the human race. The series draws on the 20th-century anxiety about AI’s capacity for ruthless logic; a logic which must ultimately find itself incompatible with human existence.

But common to countless sci-fi iterations of this plot is the fact that the superintelligence is created by humans, with the intention of bettering human life. These writers and directors mythologise a human propensity for short-sightedness, hubris, naivety, and a doomed trajectory of self-destruction. So common are these ideas in speculative science fiction that the associated anxieties have entered mainstream discourse. It has become common to read headlines such as “Microsoft chatbot goes Nazi on Twitter.”

With this cultural and literary mythology deeply entrenched in the modern psyche, and the sudden and controversial arrival of ChatGPT and a new generation of Large Language Model AIs, it is time to examine the current accomplishments, projections, and debates around the potential for AI, robotics, and our human relationships with machines.

Fear is a colossal distraction and diverter of energy. When fear spreads en masse, it can paralyse whole societies. It is unthinkable that the increasing development, employment, and normalisation of AI technologies is going to halt. Clarity of understanding, now and in the immediate future, is critical, so that humans can evolve and flourish, and the extraordinary potential for AI to support human endeavour can be unlocked. There are valid concerns and there are unfounded fears. Let us separate the two.

Consciousness

The scientific community’s attitude to the possibility of replicating human consciousness has roots stretching back at least to the 19th century. Scientists and engineers observed birds and mammals in flight, and believed that once the mechanics and physics of flight were sufficiently understood, humans would be able to replicate these physical feats. From the Wright brothers’ humble 12-second first flight in 1903, it took humankind an astonishing 66 years to escape Earth’s atmosphere and land a man on the Moon.

A similar logic remains popular regarding AI. When we have understood the human brain sufficiently, reduced it to its component parts, and can measure it in action, we will be able to replicate it electronically. Or so the argument goes. The problem is that, in spite of extraordinary advances in neurophysiology and computational neuroscience, all attempts to date to emulate even quite simple versions of self-aware learning machines have disappointed. Right now, any two-year-old can accomplish feats the most sophisticated AI cannot.

In June 2022, Google engineer Blake Lemoine found global celebrity when he claimed that the company’s AI technology had become “sentient.” Several days later, amid roaring headlines and much catastrophising, Lemoine was stood down and later fired, sparking an avalanche of conspiracy theories about a Google cover-up. The hysteria seen at the height of Cold War paranoia is about to be repeated in the AI world of the 2020s.

So, how founded are the fears of AI consciousness? Is there any agreement about what “sentience” or “consciousness” actually is? And what do those experts closest to the coalface have to say about the question of consciousness?

The term “Artificial Intelligence” has already entered popular culture, but as neurophysiologist and computational neuroscientist Christof Koch has pointed out in his 2004 book The Quest for Consciousness, references to artificial intelligence are really only identifying what a sophisticated program can do or achieve. Calling a computer program “intelligent” tells people nothing about its capacity for self-awareness, and it is increasingly important that the general public, and those reporting on science and technology in the media, are educated by those who understand this fact.

Consciousness arises out of experience, particularly the experience of self. Intelligence and consciousness are two different realms, and as we move forward with AI, the language used to describe the two is going to need to be kept separate in popular discourse and academic research. One useful starting point is to clarify from the outset what AI is not, and what AI cannot accomplish. At the top of the list of cannots is “consciousness.”

When a child learns what a cat is, he only needs to see two or three cats and he’s got it. An AI needs tens of thousands of examples. Even then, one study after another confirms that the slightest tweak of input parameters can destroy the accuracy and reliability with which even our most sophisticated programs recognise a particular object. Ongoing research continues to demonstrate limitations and foolability. AI programs can fail dramatically when inputs are modified in even quite modest ways: change the lighting, add a filter, or add noise. These are alterations that do not affect a human’s recognition abilities in the slightest.
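The fragility described above can be sketched with a toy example. What follows is not a real vision system and does not reproduce any particular study; it is a hand-made linear classifier whose weights, pixel values, and function names (`score`, `classify`, `nudge`) are all invented for illustration. It shows only the general principle: when a decision depends on many small weighted contributions, shifting every input by a tiny amount can flip the answer.

```python
# Toy illustration only: a hand-made linear classifier, not a real vision model.
# All weights and pixel values below are hypothetical.

def score(weights, pixels):
    """Weighted sum of pixel values; a positive score means 'cat'."""
    return sum(w * p for w, p in zip(weights, pixels))

def classify(weights, pixels):
    return "cat" if score(weights, pixels) > 0 else "not cat"

def nudge(weights, pixels, epsilon):
    """Shift every pixel by epsilon in the direction that lowers the score."""
    return [p - epsilon if w > 0 else p + epsilon
            for w, p in zip(weights, pixels)]

WEIGHTS = [0.5, -0.5] * 50   # 100 made-up weights
IMAGE = [0.52, 0.50] * 50    # 100 made-up brightness values on a 0-to-1 scale

tweaked = nudge(WEIGHTS, IMAGE, epsilon=0.02)
print(classify(WEIGHTS, IMAGE))    # cat
print(classify(WEIGHTS, tweaked))  # not cat
```

No pixel moves by more than 0.02 on a 0-to-1 scale, a change a human viewer would be unlikely to notice, yet the label flips because the small shifts all push the score in the same direction.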

When it comes to more complex analytical tasks and abstraction across contexts, things quickly get a lot worse for AI. You can show a deep neural network thousands of pictures of bridges, for example, and it will almost certainly recognise a new picture of a bridge, over a river, say. But if you ask it about bridging the gender gap in the business sector, it will have no idea what you are talking about. For now, such findings place a real cap on the ways in which people can hope to engage AI with complex, flexible, and meaningful interactions.

The public needs to understand that we are comparing two totally different ways of processing information. The way humans learn is very different from the way AI programs learn. The danger lies in anthropomorphising AI. When the outputs of sophisticated computational programs appear to replicate or mimic human qualities or human behaviours, there is a tendency for people to think that these programs are somehow putting together a higher order of understanding or appreciation of all of the incoming information. It is important not to hypothesise that something has become sentient based on output which only resembles what sentient humans produce. After all, it is humans who have designed and programmed these machines to mimic human language and behaviour and responses in their output.

If a car is programmed to recognise an imminent collision and apply its brakes, it will respond to a child running into the road with an emergency stop; it does not follow that this machine has learned to care about preserving human life. Unpacking the program reveals that the car will respond in the same manner no matter what crosses in front of its sensors. Optimising its speed to reduce fuel consumption does not demonstrate that the car has become environmentally conscientious.
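To make the point concrete, here is a minimal sketch of what such a braking rule might look like. It is an assumption-laden illustration, not any manufacturer’s actual system: the function name `should_brake`, the 1.5-second threshold, and the distances and speeds are all hypothetical.

```python
# Hypothetical emergency-braking rule; the threshold and inputs are invented.

def should_brake(distance_m, closing_speed_mps, threshold_s=1.5):
    """Brake when the estimated time to collision drops below the threshold."""
    if closing_speed_mps <= 0:
        return False  # the obstacle is not getting closer
    return distance_m / closing_speed_mps < threshold_s

for obstacle in ("child", "cardboard box", "plastic bag"):
    # The label is printed for the reader only; the rule never sees it.
    print(obstacle, should_brake(distance_m=10.0, closing_speed_mps=10.0))
```

The rule receives a distance and a speed and nothing else. What the obstacle is, let alone whether it matters, never enters the calculation, so the output is identical in all three cases.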

Even if, at some time in the next 50 to 100 years, humans want and manage to create a kind of AI consciousness, there is no reason to imagine that it will be anything like human consciousness. Try to explain to a person blind from birth what the experience of the colour red is like. One might suggest that red is a hot colour while blue is a cool colour. But that is not much more useful than telling a deaf person that jazz tastes crunchy. Anyone programming AI can show it thousands of examples of what humans call “red,” but there is no reason to think that a computer network is able to experience redness. This only happens in a human or animal consciousness, and we know enough about many animals to understand that most creatures are unlikely to experience colours in the way humans do. If animal consciousness is likely to differ from human consciousness, it makes no sense to suppose that AI consciousness would be the same as ours. Endless debates about consciousness divert resources and attention from the very real and practical issues around how humans want to develop AI.

AI can now perform many tasks deservedly called “intelligent”; indeed, in some areas it is outstripping human performance by a wide margin. There are programs that can beat any human at chess or Go. There are programs mapping chemical structures and engineering molecules at speeds unthinkable for humans. There are AIs running the traffic and public transport for entire city grids. It is therefore essential to establish a clear common understanding about what we mean when we talk about a “conscious mind” as distinct from what we perceive as or name “intelligence.”

Current estimates suggest that the average human brain contains around 86 billion neurons. On average, a neuron has 7,000 synapses, so recent calculations suggest around 600 trillion connections in a human neural network. To put that into perspective, current estimates place the number of internet connections worldwide at around 50 billion. So, measured by connections alone, one human brain contains the equivalent of 12,000 global internets.
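The arithmetic behind these figures can be checked in a few lines. The sketch below uses only the estimates quoted above; the function names are mine, and the comparison treats a synapse and an internet connection as equivalent units purely for the sake of scale.

```python
# Estimates quoted in the text; none of these figures is exact.
NEURONS = 86_000_000_000               # about 86 billion neurons
SYNAPSES_PER_NEURON = 7_000            # average synapses per neuron
INTERNET_CONNECTIONS = 50_000_000_000  # about 50 billion connections worldwide

def brain_connections(neurons=NEURONS, synapses_per_neuron=SYNAPSES_PER_NEURON):
    """Total synaptic connections implied by the two brain estimates."""
    return neurons * synapses_per_neuron

def internets_per_brain(connections, internet_connections=INTERNET_CONNECTIONS):
    """How many 'global internets' the brain's connection count equals."""
    return connections / internet_connections

exact = brain_connections()            # 602 trillion
rounded = 600_000_000_000_000          # the 600 trillion cited in the text

print(exact)                           # 602000000000000
print(internets_per_brain(exact))      # 12040.0
print(internets_per_brain(rounded))    # 12000.0
```

The unrounded product is 602 trillion, which the text rounds to 600 trillion; either way the ratio comes out at roughly 12,000.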

Even if advances in electronics and nanotechnologies solve the scale and connection challenges, we still have little understanding of how all this neural wiring ends up being experienced as “my mind.” Think about what happens inside your mind the moment you run into an old friend. A complex set of apparently instantaneous associations and emotions fills your body and “self.” How your friend looks, how they looked when younger, the last time you met, memories of things shared and said, what you know of your friend’s background, childhood, partners, sexuality, struggles, passions. Your friend’s voice, smell, touch, and smile all trigger feelings, perhaps conflicting ones. If we are yet to understand how all of our memories, sensations, understanding, and interpretation come together into a moment-by-moment feeling of being one self, it is unthinkable that a computer will magically “emerge” as conscious.

Look out—it’s coming!

AI is not coming—it is already here. Yes, when ChatGPT was “switched on” it garnered feverish uptake, but elements of AI have been knitting themselves into our daily lives for 10 years. Virtual assistants like Alexa, Siri, Google Assistant, and Cortana are now widely used in homes and offices. They employ natural language processing (NLP) and machine learning to understand and respond to voice commands, helping with tasks such as setting reminders, playing music, answering questions, and controlling smart home devices, while learning from user behaviour to optimize energy usage, provide security alerts, and automate household tasks.

Many e-commerce platforms, such as Amazon, eBay, and Alibaba, use AI algorithms to personalize product recommendations based on user behaviour, browsing history, and purchase patterns. AI-powered chatbots are commonplace. Social-media platforms utilize AI algorithms for content curation, recommendation, and moderation. Navigation apps like Google Maps and ride-sharing apps like Uber use AI for real-time traffic prediction, route optimization, and driver-matching. AI is being used in healthcare, including medical diagnosis, drug discovery, and personalized treatment plans. AI in online advertising optimizes targeting, placement, and performance. AI algorithms analyse user data and behaviour to deliver personalized ads and optimize ad campaigns. Google Translate uses deep learning AI to automatically translate text from one language to another, revolutionising the ease and immediacy of communication across different languages.

Currently, the propagation of myths is setting cultural progress back many years. In 2014, a Daily Mail headline ran: “Stephen Hawking Warns that Rise of Robots May Be Disastrous for Mankind” (neglecting to mention that Stephen Hawking was a great supporter of AI research and development). Click-bait like this is usually accompanied by pictures of malevolent robots carrying deadly weapons.

When AIs become smarter than humans, we are told, they will destroy us. Frustratingly, such warnings tend to emphasise a number of critical misconceptions: central is the erroneous assumption that strong AI will equal consciousness or some kind of computer sense of self. When a person drives a car down a tree-lined street, they have a subjective experience of the light, the time of day, the beauty of the colour-changing leaves, while the music on the radio might remind them of a long-ago summer. But an AI-driven car is not having any sort of subjective experience. Zero. It just drives. Even if it is chatting with you about your day or apologising for braking suddenly, it is experiencing nothing.

Education of the public (and the media and many scientists) needs to focus on the idea of goal-based competence. What is evolving is the outstanding ability of the latest generation of AI to attain its goals, whatever those goals may be. And we set the goals. Therefore, what humankind needs to ensure, starting now, is that the goals of AI are aligned with ours. As the physicist and AI researcher Max Tegmark points out, few people hate ants, but when a hydroelectric scheme floods a region, destroying many millions of ants in the process, their deaths are regarded as collateral damage. Tegmark says we want to “avoid placing humanity in the position of those ants.”

A mousetrap is a simple, human-designed technology with the goal of trapping a mouse. Experimentally, it proves very successful. Set up some traps, load them with tempting food morsels, release mice into the area, and each mouse that nibbles at a piece of food will set off the spring mechanism, resulting in the poor creature’s demise. But in a dark laundry, a barefooted human might easily step on a forgotten mousetrap and suffer a painful injury. The machine’s goal had nothing to do with injuring a human foot. The mousetrap has no subjective experience of having helped or hindered its human designers. It just follows its design goals. Humans set those goals, and humans are also responsible for setting them with enough care, experimentation, and foresight that an AI’s goals always remain aligned with human goals.

This is not a question of capabilities. Ultimately, AI will almost certainly be capable of almost anything. So, we need to know how people want AI to interact with humans and how humans want to interact with AI. The eruption of ChatGPT and the speed and scale of take-up provide hints of the floodgates soon to open. Are political, cultural, educational, or social institutions prepared? While it is possible to imagine a new age of sorcery, a new period of possibilities is equally conceivable, in which people’s social and working lives are greatly improved by the integration of thoughtfully designed, human-focused AI and robotics.

Currently, fears of AI abound disproportionately to hopes or positive expectations. Not all fears are of the same kind. Those afraid of AI development being driven by economic imperatives are right to call for smart, collaborative engagement of people across society to ensure that money, engineering prowess, and collaborative efforts are channelled towards improving human lives and the functioning of society. But the simple truth is that both AI and ethical frameworks fall entirely within the scope of human control. There is no rational reason why humans should wish to develop AI agents capable of, or programmed for, competing with humans or working against humanity’s flourishing.

There is every reason to believe that AI and robotics stand to make valuable and largely positive contributions to societies dealing with isolation, difficulties in reaching help when needed, affordability of human educational and psychological support, mental-health and learning needs, and care for children, the elderly, and the marginalised, all of which are areas of culture and society facing real barriers today. Add to this the enormous gains in speed, efficiency, and low costs of medical, legal, financial, and educational applications, and there seems to be a great deal about which we can be hopeful. AI is going to work best as an intellectual, physical, and behavioural prosthetic to human needs and desires. It stands to greatly improve the quality of life for millions and augment the productivity and creativity of many areas of work and leisure time.

If you haven’t seen Her recently, it is well worth a watch. It projects a surprisingly balanced view of the near future of AI companionship. There is no doubt that, within 20 years, we are going to be as familiar with people carrying AI augmentations of their social, psychological, and medical lives as we are familiar today with every person carrying a smartphone.

At one point, AI Samantha asks Theo, “Is it weird that I’m having a hard time distinguishing between things that I’ve told you and things that you’ve told me?” Jonze’s film asks more questions than it answers, and that is how it should be. Being smart with AI does not mean having all of the answers right now. But it does require the right questions. If you feel compelled to fear AI, focus your fears on what humans set as goals. And maybe get involved in the cultural conversation about what those goals should be. From there, you can help shape the evolution of human relationships with these machines.