
Be Rational


Rationality is uncool. To describe someone with a slang word for the cerebral, like nerd, wonk, geek, or brainiac, is to imply they are terminally challenged in hipness. For decades, Hollywood screenplays and rock-song lyrics have equated joy and freedom with an escape from reason. “A man needs a little madness or else he never dares cut the rope and be free,” said Zorba the Greek. “Stop Making Sense,” advised Talking Heads; “Let’s go crazy,” adjured the Artist Formerly Known as Prince. Fashionable academic movements like postmodernism and critical theory (not to be confused with critical thinking) hold that reason, truth, and objectivity are social constructions that justify the privilege of dominant groups. These movements have an air of sophistication about them, implying that Western philosophy and science are provincial, old-fashioned, naïve to the diversity of ways of knowing found across periods and cultures. To be sure, not far from where I live in downtown Boston there is a splendid turquoise and gold mosaic that proclaims, “Follow reason.” But it is affixed to the Grand Lodge of the Masons, the fez- and apron-sporting fraternal organization that is the answer to the question “What’s the opposite of hip?”

My own position on rationality is “I’m for it.” Though I cannot argue that reason is dope, phat, chill, fly, sick, or da bomb, and strictly speaking I cannot even justify or rationalize reason, I will defend the message on the mosaic: we ought to follow reason.

To begin at the beginning: what is rationality? As with most words in common usage, no definition can stipulate its meaning exactly, and the dictionary just leads us in a circle: most define rational as “having reason,” but reason itself comes from the Latin ration-, often defined as “reason.”

A definition that is more or less faithful to the way the word is used is “the ability to use knowledge to attain goals.” Knowledge in turn is standardly defined as “justified true belief.” We would not credit someone with being rational if they acted on beliefs that were known to be false, such as looking for their keys in a place they knew the keys could not be, or if those beliefs could not be justified—if they came, say, from a drug-induced vision or a hallucinated voice rather than observation of the world or inference from some other true belief.

The beliefs, moreover, must be held in service of a goal. No one gets rationality credit for merely thinking true thoughts, like calculating the digits of pi, or cranking out the logical implications of a proposition (“Either 1 + 1 = 2 or the moon is made of cheese,” “If 1 + 1 = 3, then pigs can fly”). A rational agent must have a goal, whether it is to ascertain the truth of a noteworthy idea, called theoretical reason, or to bring about a noteworthy outcome in the world, called practical reason (“what is true” and “what to do”). Even the humdrum rationality of seeing rather than hallucinating is in the service of the ever-present goal built into our visual systems of knowing our surroundings.

A rational agent, moreover, must attain that goal not by doing something that just happens to work there and then, but by using whatever knowledge is applicable to the circumstances. Here is how William James distinguished a rational entity from a nonrational one that would at first appear to be doing the same thing:

Romeo wants Juliet as the filings want the magnet; and if no obstacles intervene he moves toward her by as straight a line as they. But Romeo and Juliet, if a wall be built between them, do not remain idiotically pressing their faces against its opposite sides like the magnet and the filings with the card. Romeo soon finds a circuitous way, by scaling the wall or otherwise, of touching Juliet’s lips directly. With the filings the path is fixed; whether it reaches the end depends on accidents. With the lover it is the end which is fixed; the path may be modified indefinitely.

With this definition the case for rationality seems all too obvious: do you want things or don’t you? If you do, rationality is what allows you to get them.

Now, this case for rationality is open to an objection. It advises us to ground our beliefs in the truth, to ensure that our inference from one belief to another is justified, and to make plans that are likely to bring about a given end. But that only raises further questions. What is “truth”? What makes an inference “justified”? How do we know that means can be found that really do bring about a given end? But the quest to provide the ultimate, absolute, final reason for reason is a fool’s errand. Just as an inquisitive three-year-old will reply to every answer to a “why” question with another “Why?,” the quest to find the ultimate reason for reason can always be stymied by a demand to provide a reason for the reason for the reason. Just because I believe P implies Q, and I believe P, why should I believe Q? Is it because I also believe [(P implies Q) and P] implies Q? But why should I believe that? Is it because I have still another belief, {(P implies Q) and P and [((P implies Q) and P) implies Q]} implies Q?

This regress was the basis for Lewis Carroll’s 1895 story What the Tortoise Said to Achilles, which imagined the conversation that would unfold when the fleet-footed warrior caught up to (but could never overtake) the tortoise with the head start in Zeno’s second paradox. (In the time it took for Achilles to close the gap, the tortoise moved on, opening up a new gap for Achilles to close, ad infinitum.) Carroll was a logician as well as a children’s author, and in this article, published in the philosophy journal Mind, he imagines the warrior seated on the tortoise’s back and responding to the tortoise’s escalating demands to justify his arguments by filling up a notebook with thousands of rules for rules for rules. The moral is that reasoning with logical rules at some point must simply be executed by a mechanism that is hardwired into the machine or brain and runs because that’s how the circuitry works, not because it consults a rule telling it what to do. We program apps into a computer, but its CPU is not itself an app; it’s a piece of silicon in which elementary operations like comparing symbols and adding numbers have been burned. Those operations are designed (by an engineer, or in the case of the brain by natural selection) to implement laws of logic and mathematics that are inherent to the abstract realm of ideas.

Now, Mr. Spock notwithstanding, logic is not the same thing as reasoning. But they are closely related, and the reasons the rules of logic can’t be executed by still more rules of logic (ad infinitum) also apply to the justification of reason by still more reason. In each case the ultimate rule has to be “Just do it.” At the end of the day the discussants have no choice but to commit to reason, because that’s what they committed themselves to at the beginning of the day, when they opened up a discussion of why we should follow reason. As long as people are arguing and persuading and then evaluating and accepting or rejecting the arguments—as opposed to, say, bribing or threatening each other into mouthing some words—it’s too late to ask about the value of reason. They’re already reasoning, and have tacitly accepted its value.

When it comes to arguing against reason, as soon as you show up, you lose. Let’s say you argue that rationality is unnecessary. Is that statement rational? If you concede it isn’t, then there’s no reason for me to believe it—you just said so yourself. But if you insist I must believe it because the statement is rationally compelling, you’ve conceded that rationality is the measure by which we should accept beliefs, in which case that particular one must be false. In a similar way, if you were to claim that everything is subjective, I could ask, “Is that statement subjective?” If it is, then you are free to believe it, but I don’t have to. Or suppose you claim that everything is relative. Is that statement relative? If it is, then it may be true for you right here and now but not for anyone else or after you’ve stopped talking. This is also why the recent cliché that we’re living in a “post-truth era” cannot be true. If it were true, then it would not be true, because it would be asserting something true about the era in which we are living.

This argument, laid out by the philosopher Thomas Nagel in The Last Word, is admittedly unconventional, as any argument about argument itself would have to be. Nagel compared it to Descartes’s argument that our own existence is the one thing we cannot doubt, because the very fact of wondering whether we exist presupposes the existence of a wonderer. The very fact of interrogating the concept of reason using reason presupposes the validity of reason. Because of this unconventionality, it’s not quite right to say that we should “believe in” reason or “have faith in” reason. As Nagel points out, that’s “one thought too many.” The masons (and the Masons) got it right: we should follow reason.

Now, arguments for truth, objectivity, and reason may stick in the craw, because they seem dangerously arrogant: “Who the hell are you to claim to have the absolute truth?” But that’s not what the case for rationality is about. The psychologist David Myers has said that the essence of monotheistic belief is: (1) There is a God and (2) it’s not me (and it’s also not you). The secular equivalent is: (1) There is objective truth and (2) I don’t know it (and neither do you). The same epistemic humility applies to the rationality that leads to truth. Perfect rationality and objective truth are aspirations that no mortal can ever claim to have attained. But the conviction that they are out there licenses us to develop rules we can all abide by that allow us to approach the truth collectively in ways that are impossible for any of us individually.

The rules are designed to sideline the biases that get in the way of rationality: the cognitive illusions built into human nature, and the bigotries, prejudices, phobias, and -isms that infect the members of a race, class, gender, sexuality, or civilization. These rules include principles of critical thinking and the normative systems of logic, probability, and empirical reasoning. They are implemented among flesh-and-blood people by social institutions that prevent people from imposing their egos or biases or delusions on everyone else. “Ambition must be made to counteract ambition,” wrote James Madison about the checks and balances in a democratic government, and that is how other institutions steer communities of biased and ambition-addled people toward disinterested truth. Examples include the adversarial system in law, peer review in science, editing and fact-checking in journalism, academic freedom in universities, and freedom of speech in the public sphere. Disagreement is necessary in deliberations among mortals. As the saying goes, the more we disagree, the more chance there is that at least one of us is right.

Though we can never prove that reasoning is sound or that the truth can be known (since we would need to assume the soundness of reason to do it), we can stoke our confidence that they are. When we apply reason to reason itself, we find that it is not just an inarticulate gut impulse, a mysterious oracle that whispers truths into our ear. We can expose the rules of reason and distill and purify them into normative models of logic and probability. We can even implement them in machines that duplicate and exceed our own rational powers. Computers are literally mechanized logic, their smallest circuits called logic gates.

Another reassurance that reason is valid is that it works. Life is not a dream, in which we pop up in disconnected locations and bewildering things happen without rhyme or reason. By scaling the wall, Romeo really does get to touch Juliet’s lips. And by deploying reason in other ways, we reach the moon, invent smartphones, and extinguish smallpox. The cooperativeness of the world when we apply reason to it is a strong indication that rationality really does get at objective truths.

And ultimately even relativists who deny the possibility of objective truth and insist that all claims are merely the narratives of a culture lack the courage of their convictions. The cultural anthropologists or literary scholars who avow that the truths of science are merely the narratives of one culture will still have their child’s infection treated with antibiotics prescribed by a physician rather than a healing song performed by a shaman. And though relativism is often adorned with a moral halo, the moral convictions of relativists depend on a commitment to objective truth. Was slavery a myth? Was the Holocaust just one of many possible narratives? Is climate change a social construction? Or are the suffering and danger that define these events really real—claims that we know are true because of logic and evidence and objective scholarship? Now relativists stop being so relative.

For the same reason there can be no tradeoff between rationality and social justice or any other moral or political cause. The quest for social justice begins with the belief that certain groups are oppressed and others privileged. These are factual claims and may be mistaken (as advocates of social justice themselves insist in response to the claim that it’s straight white men who are oppressed). We affirm these beliefs because reason and evidence suggest they are true. And the quest in turn is guided by the belief that certain measures are necessary to rectify those injustices. Is leveling the playing field enough? Or have past injustices left some groups at a disadvantage that can only be set right by compensatory policies? Would particular measures merely be feel-good signaling that leaves the oppressed groups no better off? Would they make matters worse? Advocates of social justice need to know the answers to these questions, and reason is the only way we can know anything about anything.

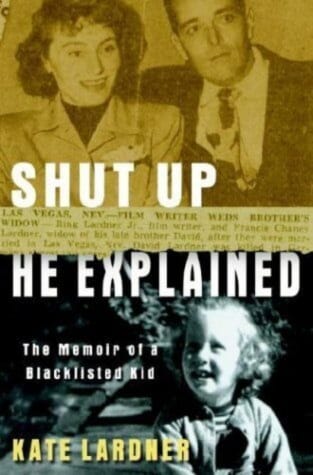

Admittedly, the peculiar nature of the argument for reason always leaves open a loophole. In introducing the case for reason, I wrote, “As long as people are arguing and persuading…,” but that’s a big “as long as.” Rationality rejecters can refuse to play the game. They can say, “I don’t have to justify my beliefs to you. Your demands for arguments and evidence show that you are part of the problem.” Instead of feeling any need to persuade, people who are certain they are correct can impose their beliefs by force. In theocracies and autocracies, authorities censor, imprison, exile, or burn those with the wrong opinions. In democracies the force is less brutish, but people still find means to impose a belief rather than argue for it. Modern universities—oddly enough, given that their mission is to evaluate ideas—have been at the forefront of finding ways to suppress opinions, including disinviting and drowning out speakers, removing controversial teachers from the classroom, revoking offers of jobs and support, expunging contentious articles from archives, and classifying differences of opinion as punishable harassment and discrimination. They respond as Ring Lardner recalled his father doing when the writer was a boy: “‘Shut up,’ he explained.”

If you know you are right, why should you try to persuade others through reason? Why not just strengthen solidarity within your coalition and mobilize it to fight for justice? One reason is that you would be inviting questions such as: Are you infallible? Are you certain that you’re right about everything? If so, what makes you different from your opponents, who also are certain they’re right? And from authorities throughout history who insisted they were right but who we now know were wrong? If you have to silence people who disagree with you, does that mean you have no good arguments for why they’re mistaken? The incriminating lack of answers to such questions could alienate those who have not taken sides, including the generations whose beliefs are not set in stone.

And another reason not to blow off persuasion is that you will have left those who disagree with you no choice but to join the game you are playing and counter you with force rather than argument. They may be stronger than you, if not now then at some time in the future. At that point, when you are the one who is canceled, it will be too late to claim that your views should be taken seriously because of their merits.

Excerpted, with permission, from Rationality: What It Is, Why It Seems Scarce, Why It Matters, by Steven Pinker. Published by Viking, an imprint of Penguin Random House LLC. Copyright © 2021 by Steven Pinker.