The Need for Rationality in a Hostile World

A Review of Rationality: What It Is, Why It Seems Scarce, Why It Matters by Steven Pinker. Viking, 432 pages (September 28, 2021).

“Academic Writes Engaging Book for the Masses.” Now there’s a shocking headline. Totally man bites dog. Oh, oh, scratch that. It’s not man bites dog at all. It’s another book by Steven Pinker.

He’s done it again, folks. It’s everything you’d expect from our most erudite disseminator of contemporary cognitive science. So, that’s the review of the prose and the readability and the style of his new book, Rationality. Many words can be saved when the author is Pinker. So let’s move on to the content.

The high status accorded rationality in Pinker’s book is at odds with characterizations that deem rationality either trivial (little more than the ability to solve textbook-type logic problems) or antithetical to human fulfillment (as an impairment to an enjoyable emotional life). Dictionary definitions of rationality tend to be vague (“the state or quality of being in accord with reason”). The meaning of rationality in cognitive science is—in contrast to these weak characterizations—much more richly defined, and Pinker’s book captures this extremely well. He orients the reader with a clear definition of rationality as “the ability to use knowledge to attain goals.” This definition captures the two types of rationality that cognitive scientists study: instrumental rationality and epistemic rationality.

Instrumental rationality focuses on optimizing goal fulfillment: Behaving in the world so that you get what you want, given the resources (physical and mental) available to you. Economists and cognitive scientists have further refined the notion of optimization of goal fulfillment into the technical notion of expected utility. Epistemic rationality (the “knowledge” in Pinker’s definition) concerns how well beliefs map onto the real world. The two types of rationality are related. In order to take actions that fulfill our goals, we need to base those actions on beliefs that reflect reality.

Although many people believe that they could do without the ability to solve textbook logic problems, virtually no one wishes to eschew epistemic rationality and instrumental rationality, properly defined. Virtually everyone wants their beliefs to be in some correspondence with reality, and they also want to achieve their goals. As Pinker notes, most people want to know what is true and what to do.

Likewise, Pinker’s treatment of the relation between emotion and rationality gets it just right. In folk psychology, emotion is often seen as antithetical to rationality. This idea is not correct. Like other heuristics, emotions get us “in the right ballpark” of the correct response rather quickly. They are part of the “fast thinking” System 1 referred to in Kahneman’s Thinking, Fast and Slow. If more accuracy is needed for a particular response, then a more precise type of analytic cognition using System 2 will be required. Of course, we can rely too much on emotions. We can rely too heavily on “ballpark” solutions when what we need is a more precise type of analytic thought. More often than not, though, emotional regulation facilitates rational thought and action, as most adaptive System 1 processes do.

People who are intelligent tend to be more rational, but the two are not the same. Rationality, in fact, is the more encompassing construct. Pinker points us to the propensities and knowledge bases that go beyond anything that is assessed on an intelligence test—such as actively open-minded thinking. The malleability of thinking dispositions is still an open research question, but it is beyond dispute that the knowledge bases necessary for rational thought are teachable (just learn all the concepts in the middle chapters of Rationality well enough, and I guarantee you will end up a more rational person!).

Thus, Pinker gets all of the meta-theoretical issues about rationality correct and avoids all of the caricatures. Appropriately, just one chapter of the book is devoted to logic. The other chapters cover the multifarious knowledge bases and thinking styles of the modern conception of rationality: probabilistic reasoning, belief updating, signal detection theory, expected utility theory, causal reasoning, game theory, actively open-minded thinking, and myside bias.

The heuristics and biases literature is covered in the book with aplomb—the examples are all well-chosen and not displayed as “trick problems” but instead as gateways to larger issues surrounding rational thinking. Even when Pinker is critical of the standard interpretation of a classic task, there are always larger issues at play in his critique. He illustrates the many biases that lead people to violate the various strictures of epistemic and instrumental rationality.

In fact, Pinker is particularly good at amalgamating the best insights from the various positions taken in the Great Rationality Debate in cognitive science—the debate about how much irrationality to attribute to human cognition, also known as the debate between the “Meliorists,” the “Panglossians” and the “Apologists.”

The so-called Meliorists tend to work in the Kahneman and Tversky heuristics-and-biases tradition, and assume that human reasoning is not as good as it could be. The Panglossians, on the other hand, have more faith in human reasoning and see the laboratory experiments of the Kahneman and Tversky tradition as not necessarily reflecting real-world decision-making. They default to the assumption that human reasoning is maximally rational. And finally we have the Apologists, who sit somewhere in between. Like the Meliorists, the Apologists can recognize that human reasoning is often suboptimal, but like the Panglossians, they do not always regard these limitations as instances of irrationality.

Apologists have argued that reasoners have limited short-term memory spans, limited long-term storage capability, limited perceptual abilities, and limited knowledge that may prevent them from giving a perfectly rational response (an exemplar of this position discussed by Pinker is Herb Simon’s concept of bounded rationality). Ascriptions of irrationality seem appropriate only when it was possible for the person to have done better.

The Meliorist position motivates remediation efforts much more strongly than does the Panglossian position. The Apologist is like the Panglossian in seeing little that can be done given existing cognitive constraints. However, the Apologist position does emphasize the possibility of enhancing performance in another way: by presenting information in a form better suited to what our cognitive machinery is designed to do. Pinker represents this position well in his book. As he notes, “it’s better to work with the rationality people have and enhance it further than to write off the majority of our species as chronically crippled by fallacies and biases.”

Pinker’s book does not deal directly with the Great Rationality Debate in cognitive science. However, he implicitly advocates an intellectual cease-fire that I have long championed—that dual process theory (the theory of “fast” and “slow” thinking described in Kahneman’s book) can provide a rapprochement in the debate because it jettisons all the strawmen on all sides. As Pinker says, we are not “Stone Age bumblers,” but, nonetheless, the many laboratory findings showing that humans have a host of cognitive deficiencies (the so-called biases) represent serious errors with implications in the real world.

Although Pinker makes many Apologist defenses throughout the book, he balances them with ample acknowledgment that conflicting goals often need to be adjudicated by System 2. This is especially true when current goals arise from the ultimate goals of the genes, as in the conflict between the goal of “a slim healthy body” and a delicious dessert. The goal of consuming the delicious dessert arose for evolutionary reasons (“the ultimate goal of hoarding calories in an energy-stingy environment”), whereas the desire for a “slim healthy body” is more likely to have arisen from the present milieu. Meliorists have long stressed that when we face this choice, the statistical best bet for present and long-term personal well-being is to override present wants that originate from the ultimate goals of the genes.

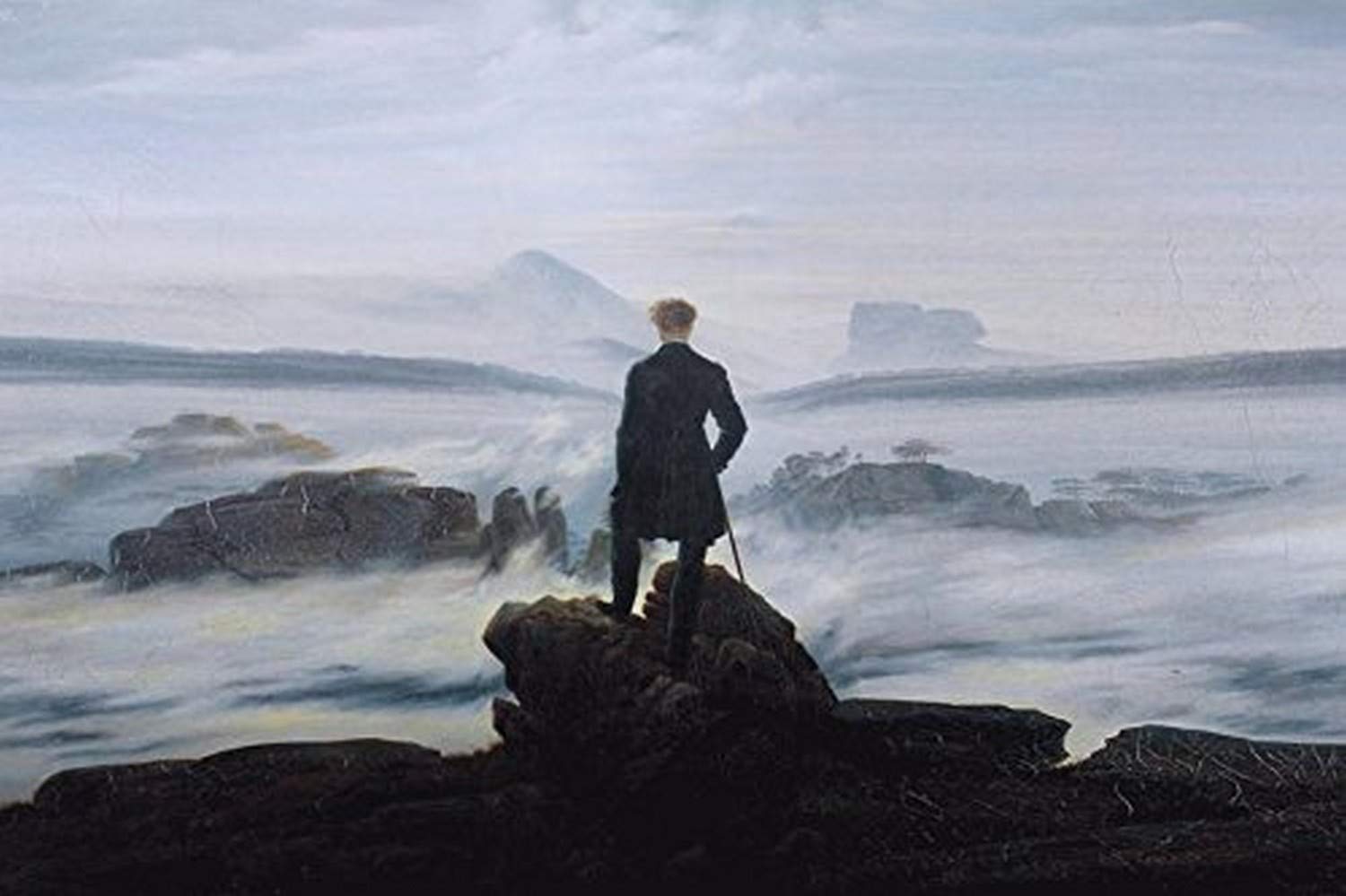

My own writings had a strong Meliorist bent many years ago, but I have since tacked back a bit in the Apologist/Panglossian direction. As a result, I find Pinker’s mix of these positions pretty congenial. The various positions have different costs and benefits. For example, if Panglossians happen to be wrong in their assumptions, then we might miss opportunities to remediate reasoning. Conversely, Meliorism might well waste effort on unjustified cognitive remediation efforts. Apologists sometimes fail to acknowledge that a real cognitive disability results when a technological society confronts the human cognitive apparatus with a problem for which it is not evolutionarily adapted. The three camps remain in disagreement about the degree of mismatch between evolutionarily adapted mechanisms and the cognitive requirements of modern technological society—in short, whether from our evolved brain’s standpoint, the world is benign or hostile.

A hostile world requires rational thinking

System 1 processing heuristics depend on benign environments providing obvious cues that elicit adaptive behaviors. A benign environment is one that contains useful cues that can be exploited by various heuristics. To be classified as benign, an environment must also contain no other individuals who will adjust their behavior to exploit those relying only on System 1 processing. In contrast, a hostile environment for heuristics is one in which there are few cues usable by System 1 processes, or in which the available cues are misleading. An environment can also turn hostile for a user of System 1 processing when other agents discern the simple cues that are being used and arrange them for their own advantage (for example, the $350 billion per year advertising industry). In hostile environments, System 1 processes must be overridden by System 2.

Many of the tasks that researchers have devised to assess rational thinking assume a hostile environment. The fact that many of these problems have an intuitively compelling wrong answer is often taken as evidence that the researcher is trying to “trick” the participant. In fact, the presence of the compelling intuitive response is precisely what makes the problem a System 2 problem. Rational thinking tasks often require unnatural types of decontextualization, forcing people to “ignore what they know” or to ignore salient features because they are irrelevant. Such tasks are designed to mimic a hostile world rather than a benign one.

Apologists and Panglossian theorists have shown us that many reasoning errors might have an evolutionary or adaptive basis. But the Meliorist reply is that, however much these responses make sense from the standpoint of evolutionary history, they are not instrumentally rational in the world we presently live in. Critics who bemoan the “artificial” problems and tasks in the heuristics and biases literature, and who imply that these tasks tell us little because they are not like “real life,” sometimes forget that, ironically, the claim that laboratory tasks are unlike “real life” is becoming less and less true. “Life,” in fact, is becoming more like the tests!

Try arguing with your health insurer about a disallowed medical procedure, for example. In such circumstances, we invariably find out that our personal experience, our emotional responses, and our System 1 intuitions about social fairness are all worthless. All are for naught when we are talking over the phone to a representative looking at a computer screen that displays a spreadsheet with a hierarchy of branching choices and conditions to be fulfilled. The social context, the idiosyncrasies of individual experience, and the personal narrative (the “natural” aspects of System 1 processing) are all abstracted away as the representatives of modern technology-based services attempt to “apply the rules.”

Unfortunately, the modern world tends to create situations where the default values of evolutionarily adapted cognitive systems are not optimal. This puts a premium on the use of System 2 to override System 1 responses. Modern technological societies continually spawn situations where humans must decontextualize information—where they must deal abstractly and in a depersonalized manner with information rather than in the context-specific way of System 1. The abstract tasks studied by the heuristics and biases researchers often accurately capture this real-life conflict. Additionally, market economies contain agents who will exploit automatic System 1 responding for profit (better buy that “extended warranty” on a $150 electronic device!). This again puts a premium on overriding System 1 responses that will be exploited by others in a market economy.

Pinker discusses many rational thinking tasks that require the subject to “ignore what they know” or ignore irrelevant context. The science on which modern technological societies are based often requires “ignoring what we know or believe.” Testing a control group when you fully expect it to underperform compared to an experimental group is a form of ignoring what you believe. Science is a way of systematically ignoring what we know, at least temporarily (during the test), so that we can recalibrate our beliefs after the evidence is in. Likewise, many aspects of the contemporary legal system put a premium on detaching prior belief and world knowledge from the process of evidence evaluation. Modernity increasingly demands decontextualization, the stripping away of what we personally “know,” through its emphasis on such characteristics as fairness, rule-following despite context, even-handedness, sanctions against nepotism, unbiasedness, universalism, inclusiveness, and legally mandated equal treatment. All of these requirements of modernity necessitate overriding the narrative and personalized knowledge tendencies of System 1.

These requirements include the vivid advertising examples we must ignore; the unrepresentative sample we must disregard; the favored hypothesis we must not privilege; the rule we must follow that dictates we ignore a personal relationship; the narrative we must set aside because it does not square with the facts; the “pattern” we must not infer because we know a randomizing device is involved; the sunk cost that must not affect our judgment; the judge’s instructions we must follow despite their conflict with common sense; the professional decision we must make because we know it is beneficial in the aggregate even if unclear in a given case.

Memes and myside bias

In the first nine chapters of Rationality, we learn that humans have many tools of rationality at their disposal. System 1 is full of automatic propensities that have been honed over millennia to optimally regulate our responses to stimuli in environments that are not rapidly changing. Also available to us are all the tools of rational thought that Pinker discusses. Through cultural ratcheting, we can use the tools that previous thinkers have labored for centuries to create for us. Cultural diffusion allows knowledge to be shared and short-circuits the need for separate individual discovery. Most of us are cultural freeloaders, adding nothing to the collective knowledge or rationality of humanity. Instead, we benefit every day from the knowledge and rational strategies invented by others. The development of probability theory, concepts of empiricism, mathematics, scientific inference, and logic over the centuries has provided humans with conceptual tools to aid in the formation and revision of belief and in reasoning about action.

By such cultural ratcheting, we have accomplished any number of supreme achievements, such as curing illness, decoding the genome, and uncovering the most minute constituents of matter. To these achievements, Pinker adds “vaccines likely to end a deadly plague have been announced less than a year after it emerged.” Yet to mention the latter is to force the admission that the pandemic triggered a “carnival of cockamamie conspiracy theories.” The list seems endless, including conspiracy theories involving microchips implanted in people’s bodies. Substantial minorities refuse the COVID-19 vaccine, including a portion of the well-educated population. All of this exists alongside surveys showing that 41 percent of the population believes in extrasensory perception, 32 percent in ghosts and spirits, and 25 percent in astrology, to name just a few of the pseudoscientific beliefs that Pinker lists. These facts highlight what Pinker calls the rationality paradox: “How, then, can we understand this thing called rationality which would appear to be the birthright of our species yet is so frequently and flagrantly flouted?”

Pinker admits that the solution to this “pandemic of poppycock” is not to be found in correcting the many thinking biases that are covered in the book. The poppycock belongs instead to the fourth class of rational thinking error that I have identified in previous writings. First, some rational thinking errors (many in the domains of probabilistic reasoning and scientific reasoning) result from inappropriate System 1 biases that must be overridden by the cognitively taxing operations of System 2, and some people lack the capacity to sustain this type of decoupling. This class of error is strongly related to intelligence. A second class, however, arises when people have the decoupling ability but tend not to employ it because they are too impulsive and accept the outputs of System 1 too readily. This kind of error is less related to intelligence and more related to thinking dispositions like actively open-minded thinking.

The third class of error arises when people have adequate intelligence and sufficient reflective tendencies, but have not acquired the specialized knowledge (so-called mindware) that is necessary to compute the response that overrides the incorrect intuitive response. These errors occur when people lack precisely the causal reasoning and scientific thinking skills that Pinker covers in the book. A fourth class of error arises, however, because not all mindware is helpful. In fact, some mindware is the direct cause of irrational thinking. I have called this the problem of contaminated mindware. The “pandemic of poppycock” that Pinker describes at the outset of Chapter 10 comes precisely from this category of irrational thinking. And that’s bad news.

It’s bad news because we can’t remediate this kind of irrational thinking through teaching. People captured by this poppycock have too much mindware, not too little. Yes, learning scientific reasoning more deeply or acquiring more probabilistic reasoning skills might help a little. But Pinker shares my pessimism on this count, arguing that “nothing from the cognitive psychology lab could have predicted QAnon, nor are its adherents likely to be disabused by a tutorial in logic or probability.”

This admission uncomfortably calls to mind a quip by Scott Alexander:

Of the fifty-odd biases discovered by Kahneman, Tversky, and their successors, forty-nine are cute quirks, and one is destroying civilization. This last one is confirmation bias—our tendency to interpret evidence as confirming our pre-existing beliefs instead of changing our minds.

This quip is not literally correct, because the “other 49” are not “cute quirks” with no implications in the real world. In his final chapter, Pinker describes and cites research showing that these biases have been linked to real-world outcomes in the financial, occupational, health, and legal domains. They are not just cute quirks. Nevertheless, the joke hits home, and that’s why I wrote a whole book on the one bias that is “destroying civilization.”

And it’s why, in the penultimate chapter, titled “What’s Wrong with People,” Pinker focuses on motivated reasoning, myside bias, and contaminated mindware. Having studied these areas myself, I was not surprised that this chapter was not an encouraging one from the standpoint of individual remediation. Most cognitive biases in the literature have moderate correlations with intelligence. This provides some ground for optimism because, even for people without high cognitive ability, it may be possible to teach the thinking propensities and stored mindware that make the highly intelligent more apt to avoid the bias. This is not the case with the “one that’s destroying civilization.” Although belief in conspiracy theories (the quintessential contaminated mindware) has a modest negative correlation with intelligence, the tendency to display myside bias is totally uncorrelated with intelligence.

Myside bias also has little domain generality: a person showing high myside bias in one domain is not necessarily likely to show it in another. On the other hand, specific beliefs differ greatly in the amount of myside bias they provoke. Thus, myside bias is best understood by looking at the nature of beliefs rather than the generic psychological characteristics of people. A different type of theory is needed to explain individual differences in myside bias. Memetic theory becomes of interest here, because memes differ in how strongly they are structured to repel contradictory ideas. Even more important is the fundamental memetic insight itself: that a belief may spread without necessarily being true or helping the human being who holds the belief in any way. For our evolved brains, such beliefs represent another aspect of a hostile world.

Properties of memes such as non-falsifiability have obvious relevance here, as do the consistency considerations that loom large in many of the rational strictures that Pinker discusses. Memes that have not passed any reflective tests, such as falsifiability or consistency, are more likely to be memes that serve only their own interests: ideas that we believe only because they have properties that allow them to acquire hosts easily. Pinker views conspiracy theories as well-adapted memes.

People need to be more skeptical of the memes that they have acquired. Utilizing some of the thinking dispositions like actively open-minded thinking that Pinker discusses, we need to learn to treat our beliefs less like possessions and more like contingent hypotheses. People also need to be particularly skeptical of the memes that were acquired in their early lives—those that were passed on by parents, relatives, and their peers. It is likely that these memes have not been subjected to selective tests because they were acquired during a developmental period when their host lacked reflective capacities.

All of this is heavy lifting at the individual level, however. Ultimately, we all need to rely on the “institutions of rationality” that provide the epistemic tools to deal with what Pinker, aptly channeling the work of Dan Kahan, calls the “Tragedy of the Belief Commons.” Cultural institutions can enforce rules whereby people benefit from rational tools without having to learn the tools themselves. Pinker describes some institutional reforms within the media and the Internet, but shares my pessimism about universities and their “suffocating left-wing monoculture, with its punishment of students and professors who question dogmas on gender, race, culture, genetics, colonialism, and sexual identity and orientation.” He describes how “on several occasions correspondents have asked me why they should trust the scientific consensus on climate change, since it comes out of institutions that brook no dissent.” In short, the public is coming to know that the universities have approved positions on certain topics, and thus the public is quite rationally reducing its confidence in research that comes out of universities.

Despite the pessimism of the penultimate chapter, “What’s Wrong with People,” which shows that there is no easy remedy for “the bias that is destroying civilization,” the final chapter of the book ends on a positive note. Pinker recounts the history and statistics of both material and moral progress that he has covered in more detail in his previous books. But his most powerful argument is that compelling reasoned argument has clearly been a prime mover of moral progress. He illustrates this point with the powerful speeches and writings of Frederick Douglass, Erasmus, Jeremy Bentham, Mary Wollstonecraft, and Martin Luther King, among others.

The principles that these great thinkers were leading us toward were universal and, by being universal, necessarily decontextualized. Their arguments “are designed to sideline the biases that get in the way of rationality,” and they contain the seeming paradox that we must decontextualize and depersonalize in order to uplift all of humanity. A powerful underlying theme of Pinker’s book is that “ideas are true or false, consistent or contradictory, conducive to human welfare or not, regardless of who thinks them.” This flies in the face of the identity politics that is sweeping through all our major institutions. But it accurately reflects the price we will pay if we give up our hard-won universal principles for the temporary good feeling of affirming people’s identities. Pinker warns that “our ability to eke increments of well-being out of a pitiless cosmos and to be good to others despite our flawed nature depends on grasping impartial principles that transcend our parochial experience.”

Pinker sums up the insights of the book by noting that the principles of rationality and reason “awaken us to ideas and expose us to realities that confound our intuitions but are true for all that,” a view that puts him close to the dual process Meliorists, whom he often rightly corrects for their overzealous critiques of our thinking proclivities.