
'Science Fictions' Review: Begone, Science Swindlers


A review of Science Fictions: How Fraud, Bias, Negligence, and Hype Undermine the Search for Truth by Stuart Ritchie, Bodley Head, 353 pages (July 2020).

As I sat down to review Stuart Ritchie’s new book, Science Fictions, I was interrupted immediately by mournful texts from a young man who was being hosed for his write-up of the results from a study. He’d asked me to take a look at it. A charity wanted to improve literacy in poor children. Children’s literacy had been measured before and after a “treatment” or intervention. There was no “control group” in the design. No similar sample of children who trundled along without the intervention, nor an intervention designed to match the treatment in all but the supposed crucial component. Had literacy increased at the second assessment because of the treatment or because the children were a year older? Your guess is as good as mine. The young man fed this problem back to his superiors and was called, peremptorily, to an online meeting. The charity had wanted a glowing report and were unhappy they didn’t get it. The young man said he felt like his bones were filling up with lead. I’ll send him a copy of Stuart’s book. I hope his bosses at the charity will read it too. After all, they are spending (wasting) tens of thousands of dollars on a bad study.

Science Fictions bites down hard on four key problems that beset the institution of science. It begins with the spooky story of a paper, which appeared in a top-flight psychology journal, showing that the fundamental laws of physics had been broken by undergraduates; they had reversed time.

In one experiment in this now-infamous paper, students were shown words on a screen, one at a time. Then they were asked to type as many as they could recall. Next, 20 words chosen at random from the original list were shown to the students again. The surprising finding was that the students were more likely to have remembered the 20 words they were about to see for a second time, even though they had only psychic intuition to guide them. Astonishing! And, yes, it was parapsychological nonsense, but the story warms us up for Ritchie’s key themes.

Chapters begin with tales of various kinds of malfeasance, followed by cogent analyses of what went wrong and why. Sometimes the fault lies in an individual rogue player; after all, villains exist in art, commerce, finance, and writing, so why shouldn’t some scoundrels haunt the halls of science and medicine? Sometimes it’s a matter of over-egging, or a hapless mistake. When the research question concerns the placing of Whalleyanidae in the Lepidoptera Tree of Life, mistakes may be forgiven and the dear Whalleyanidae (a family of moths endemic to Madagascar) will find its correct taxonomic home eventually. But when the research question concerns medicine or a surgical protocol (as in the shameful tale of Dr Paolo Macchiarini, with his fraudulent plastic tracheas), bad practice leads to lost lives.

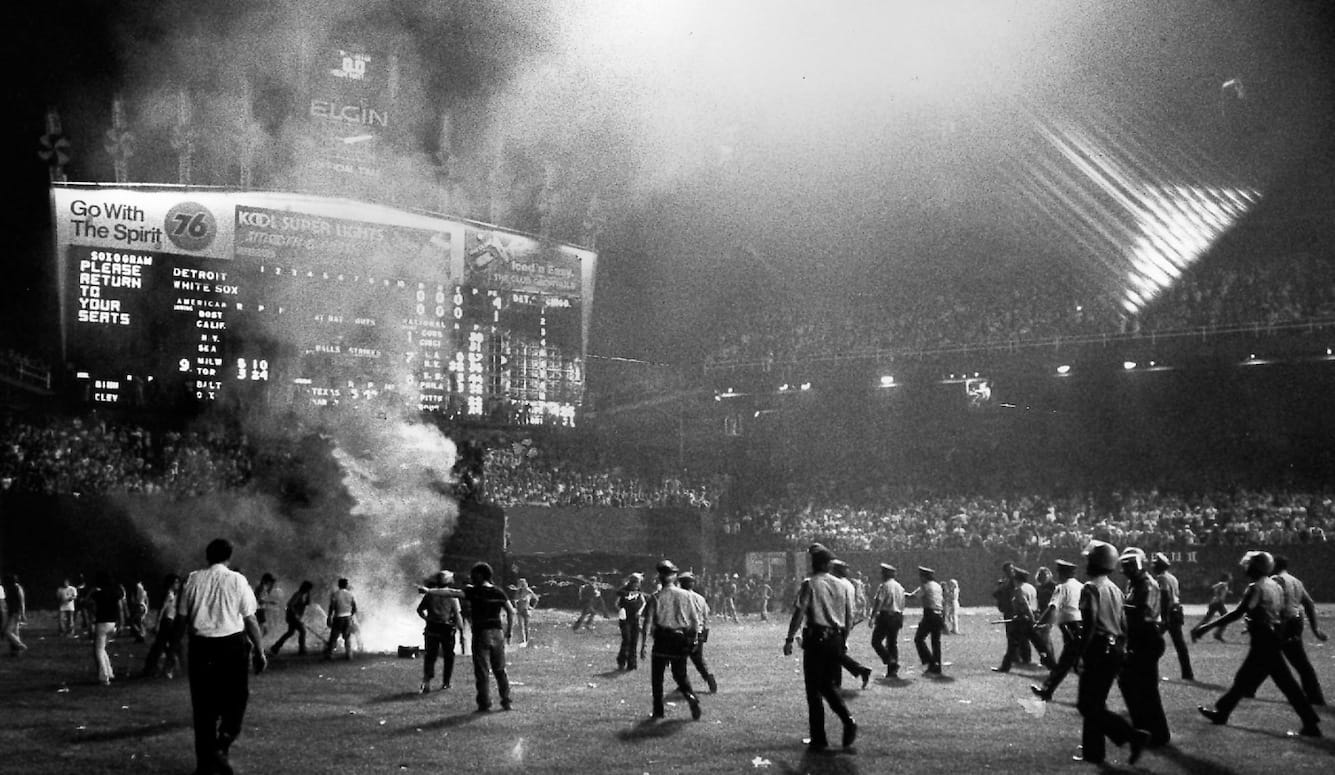

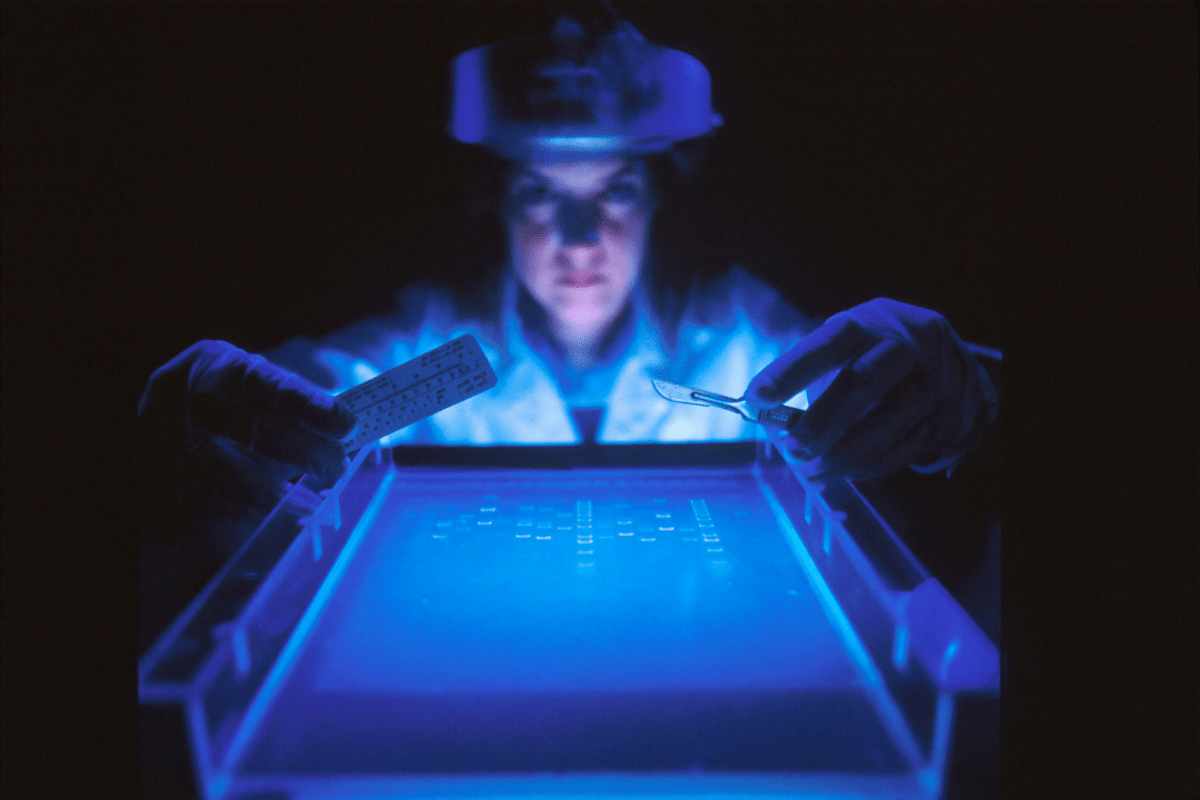

Given the constellation of demanding behaviours that get you to the start line as a professional scientist (such as protracted, detailed work which is regularly scrutinised by others), one might expect that fraud-inclined people would find the going easier elsewhere. Yet microbiologist Elisabeth Bik, who conducted a painstaking search through papers in 40 biology journals, found evidence of cheating in many images of Western blots (those blurry blobs in columns produced by proteins in gel). She found many published images that had been manipulated in something like Photoshop. When you look at some of her examples, the duplication seems obvious. Why wasn’t it picked up by reviewers? It was probably partly due to our biases: We expect honesty. Intentional data distortion is such a weird thing to do that it simply wouldn’t occur to most reviewers to check for it. Fraud harms trust in science; how common is it? The largest study to date, using data from seven pooled self-report surveys, found that 1.97 percent of scientists admitted to faking their data at least once. That is a low prevalence, but it is reason for greater vigilance, better training, and maintenance of scepticism, especially when the laws of physics are overturned by undergraduates.

Science Fictions contains elaborations of p-values, p-hacking, statistical significance, and the importance of including null results. It is written in such everyday language it could lead any Ariadne out of a dark jargon maze and into the daylight. The section in the “Bias” chapter explaining why we’d expect to see a funnel-shaped set of data points in a meta-analysis, and what to worry about when we don’t, is handled with great clarity. The appendix on “How to read a scientific paper” is a superb crib sheet for students, journalists, or anyone who wants to paddle around Google Scholar in their own canoe. References are expanded, informative, and worth their own read; there’s a lovely quote from Ronald Fisher: “[T]o consult the statistician after an experiment is finished is often merely to ask him to conduct a post mortem examination. He can perhaps say what the experiment died of.” Short headings at the top of each page in the Notes section would make it easier to navigate between main text and references, but if you own the book you can fix that with a pencil.

I have some criticisms. I found a hint of hype in this anti-hype book. The journal that published the parapsychological paper is shamed for rejecting Ritchie’s own study (which contradicted the paranormal findings), but his study included far fewer subjects (50 in each of three experiments), whereas the original offending article looked stronger with 1,000 subjects and nine experiments. A study with 3,289 subjects showing the laws of physics are intact (phew!) was published by Galak et al. only a year after the super-sensory paper, and in the offending journal. A year is quick for science publishing.

The “replication crisis” could just as well be called the Replication Revolution. Stale old blunders are being called out, and the record corrected. Figuring out what went wrong and how to do better is a sign of health and vigour in science practice.

This is a golden age for learning how to do science well. It has never been easier to obtain free help from qualified experts. Eager, informed scientists on Twitter help strangers with readings, methods, and statistical approaches. The open science movement, the reproducibility project, and registered report repositories all foster greater transparency and better research.

In the chapter on “Negligence,” the hunt for candidate genes (that may influence any trait: height, extraversion, chocolate preference) is presented as a sorry tale of failed science. Is that fair? It seems like business as usual: formerly we knew less, now we know more. Most people who worked on candidate genes jumped on new techniques, with their greater statistical power, as soon as they nosed out of the gate. Genetics is a fast-moving field; it’s a sign of success, not failure, that we make horse-eyes at work done 10 years ago. Isn’t this how science has always worked? It wasn’t a failure that physicist Albert Michelson spent years fiddling with an interferometer designed to demonstrate the existence of the luminiferous aether; it was a win when he concluded there was no aether. He went on to measure the speed of light with spectacular accuracy; Nobel Prize deserved and given.

Have the most important crimes against science been identified in Science Fictions? I can’t speak to the whole of science, but I can see two muggings being played out on the scientific stage. They were known to ancient rhetoricians as suggestio falsi and suppressio veri. Let’s talk first about suppressing the truth.

Science can expose uncomfortable facts. Ritchie raises concerns about political bias in science, but too softly. Science needs ruthless defenders. No liberal or conservative bias will do.

Science should generate new knowledge about the world. Those who are institutionally involved in knowledge-generation (universities, scientists, and others) should be disinterested in research questions and findings. It takes guts. Every scholar knows, especially after recent publicised sackings and so on, that it would be tough to get a grant, or a paper in a top journal, for a study showing: that teams comprising men from elite academic institutions function well; that women are better at looking after babies than men; that fracking is fine; that intelligence is mostly genetic (insert your own worst nightmare top-line). No caveats like “on average”; I’m illustrating a point here—those findings would press our buttons (they press mine, too). But as scientists, our social and political perspectives belong at the coat check. Academia, scientific institutions, and much of the press strongly favour some answers and detest others. This hurts our capacity for generating knowledge about the world. The world does not have a social conscience. We do. Institutions in science and education should be clear about the distinction. Careers have been ruined because institutions have failed to grasp it. I’m looking at you, Cambridge.

There’s another kind of suppression. When a truth has become generally known, is it ethical for funding agencies, universities, and researchers to ignore it? In my view, this flirts with fraud. We have known for decades that people who are more closely genetically-related are more similar to each other. Human behaviour is heritable; all of it. This is possibly the most reproduced datum in the whole of the human behavioural sciences. So why do large-scale, longitudinal, social science studies that are not genetically-informative still get funded?

The answer is that many social scientists and their funders don’t like genes. Even a top UK university whose motto is “rerum cognoscere causas” (to know the causes of things) appears to conduct little, if any, empirical research in criminology, economics, gender studies, health policy, or psychology that incorporates genetically-informative studies. Whether genetics are relevant or not depends on what problem you want to solve, but if your research question includes causes and human behaviour, a genetic component ought to be essential. So why don’t genes more often have a place at the table? And why is this not a causum for concernum to boards and trustees?

What about suggestio falsi (not a pasta, but a statement of untruth)? What do we do when ideas that once seemed useful (think of phlogiston) have expired? Social science may need something analogous to the Cochrane Reviews, which synthesize medical evidence. Under that kind of scrutiny, Power Posing, the Implicit Association Test, the Myers-Briggs test, Stereotype Threat, and most priming studies would vanish like stains in sunlight.

The last chapter is on “Fixing Science.” It locates the problem in the wrong place. Science is fine; it’s our tendency to game it that needs an armed guard. Evolutionary anthropologist Richard McElreath recently tweeted: “Science is one of humanity’s greatest inventions. Academia, on the other hand, is not.” I’m with Richard on that.

Ritchie correctly identifies problems with organising the outputs from science. Friends in other professions scratch their heads on hearing that all the content, and all reviewing, are supplied gratis to journals. The original author hands over copyright, the publisher retains 100 percent of the profit, and the public, who subsidise the whole shamoozle via income tax, can’t read it without paying. It seems a tad unfair.

Current remedies include open access publishing, in which authors, or their funders, pay around $3,000 per article to the journal in order to make their work freely accessible to all, and pre-print servers, to which scholars can upload manuscripts for anyone to read and comment on before peer review. The pre-print server idea originated with physicist Paul Ginsparg, who founded the original pre-print server, the arXiv (pronounced “archive”), in 1991. The bioRxiv (biology) followed in 2013, PsyArXiv (psychology) and SocArXiv (social science) in 2016, and medRxiv (medicine) in 2019. Ginsparg reflected, after 20 years of the arXiv, that publishing is still in transition: “There is no consensus on the best way to implement quality control (top-down or crowd-sourced, or at what stage), how to fund it or how to integrate data and other tools needed for scientific reproducibility.”1 Problems of how to organise the knowledge generated by science still exist, but there is now widespread agreement that well-designed, replicated, reproducible studies are essential.

Replication is not the only fruit. A second edition of this book could include the total evidence rule. We should probe our problems from different methodological approaches to see if the general findings converge. Our provisional knowledge about the world increases as we take into account what the Vienna Circle philosopher, Rudolf Carnap, described as the “total observational knowledge available to a person at the time of decision-making.”2

Science Fictions is engaging, story-led, and well-organised. It will equip my sad young friend to articulate what went wrong with his charity’s study on literacy and, as importantly, to do the next one well. If he absorbs Ritchie’s lessons, he will become a science ninja. His shuriken will chasten scammers and chisellers, he’ll lance those weaselly “trending” p-values, and forgo the forking paths. Go slay the enemies of science, young man.

References:

1 Ginsparg, P. ArXiv at 20. Nature 476. 2011. pp. 145–147.

2 Rieppel, Olivier. The philosophy of total evidence and its relevance for phylogenetic inference. Papéis Avulsos de Zoologia 45(8). 2005. pp. 77–89.