George Orwell and the Struggle against Inevitable Bias

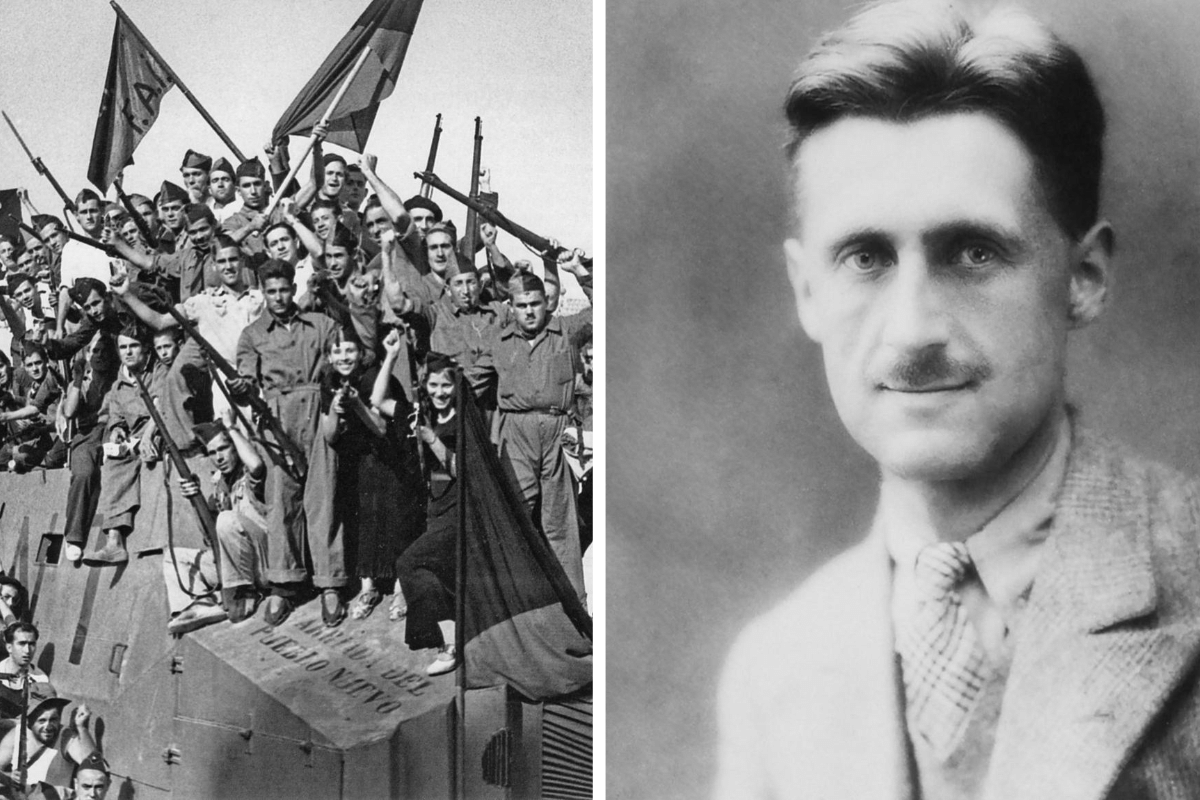

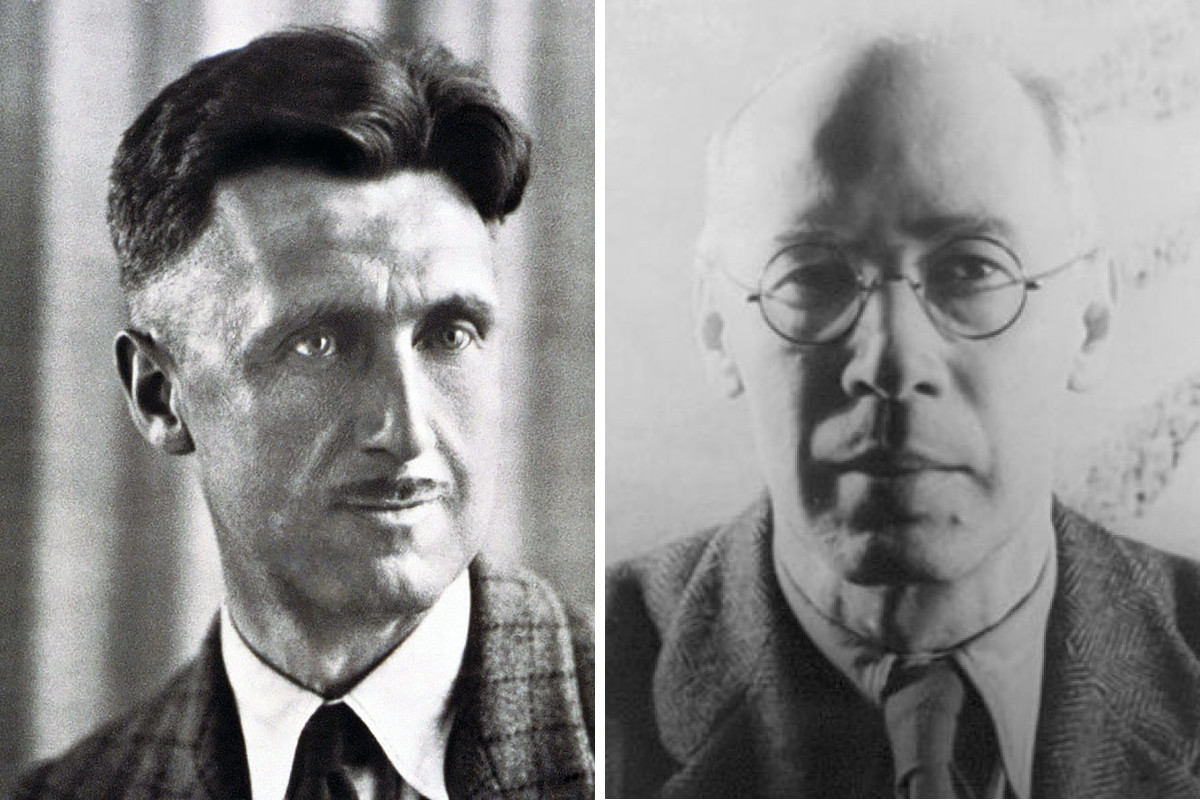

In the bleak post-war Britain of October 1945, an essay by George Orwell appeared in the first issue of Polemic. Edited by the abstract artist and ex-Communist Hugh Slater, the new journal was marketed as a “magazine of philosophy, psychology, and aesthetics.” Orwell was not yet famous (Animal Farm had only just reached the shelves), but his profile was high enough for his name to be a boon to a new publication. His contribution to that first issue was “Notes on Nationalism,” one of his best and most important pieces of writing. Amidst the de-Nazification of Germany, the alarmingly rapid slide into the Cold War, and the trials of German and Japanese war criminals, Orwell set out to answer a question which had occupied his mind for most of the previous seven years: why do otherwise rational people embrace irrational or even contradictory beliefs about politics?

As a junior colonial official in Burma, the young Eric Blair (he had not yet adopted the name by which he would be known to posterity) had been disgusted by his peers and superiors extolling the liberty of Magna Carta and “Rule, Britannia!” while excusing acts of repression like the massacre of Indian protestors at Amritsar in 1919. As a committed socialist in the late 1930s, he openly ridiculed those who claimed to champion the working class while holding actual working-class people in contempt. And he had watched the British Communist Party insist that the Second World War was nothing more than an imperialist adventure right up until the moment the first German soldier crossed the Soviet frontier, at which point it instantly became a noble struggle for human freedom.

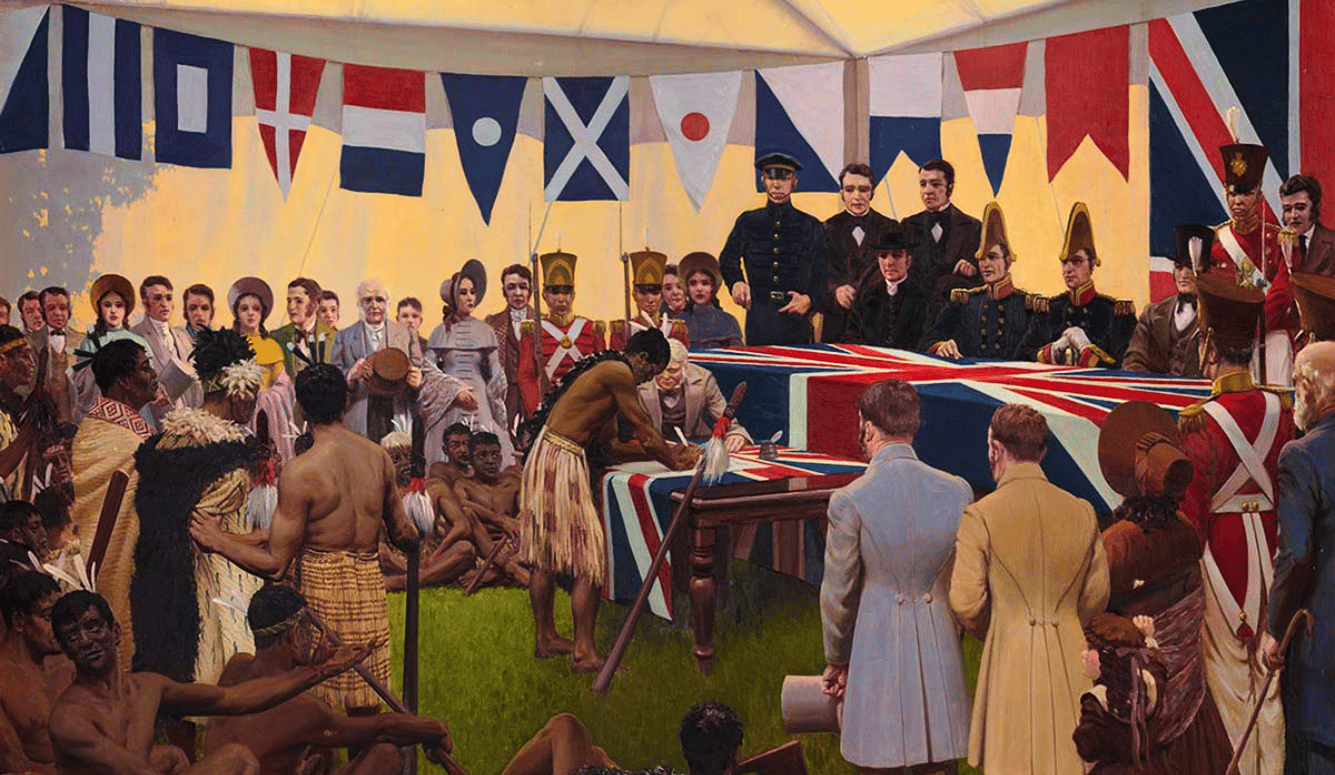

Orwell’s most personally searing experience, though, had come in Barcelona in 1937. The previous year, he had travelled to Spain to fight for the Republican side in the Civil War. His poor relationship with the British Communist Party led him to enlist in the militia of an anti-Stalinist socialist party, the POUM (Partido Obrero de Unificación Marxista, or Workers’ Party of Marxist Unification). Even while its militia was fighting a bitter winter campaign in the Aragon mountains, the POUM was subjected to relentless propaganda from pro-Soviet Republicans who insisted it was a secret front for fascism.

Over May and June 1937, the POUM and the other independent left-wing organisations in Barcelona were brutally suppressed by the Republican Government and Soviet-backed Communists. Orwell saw his friends and comrades smeared, arrested, and in some cases shot, and he himself escaped back into France only narrowly. Upon his return to Britain, he found the British Communist Party resolute in its line that the POUM was a fascist party. Admitting that left-wing groups could differ in their views of the Soviet Union, or that the Spanish Communists might have acted unjustly, was unacceptable. And when Orwell published his own account of the events in Spain, Homage to Catalonia, few were interested in reading it. The betrayal of the POUM weighed on Orwell’s mind through the Second World War, and Animal Farm provided an outlet for his anger. But those bloody spring days in Barcelona also informed “Notes on Nationalism.”

“Notes on Nationalism” is not an ideal title, as Orwell was not talking only about loyalty to country. Rather, he used nationalism as shorthand for any type of group loyalty: to a country, but also to a religion, a political party, or an ideology itself. A nationalist may be defined by his membership of a group or by his opposition to one, which Orwell called “negative” nationalism. Orwell gave anti-Semites as an example of the latter, as well as the “minority of intellectual pacifists whose real though unadmitted motive appears to be hatred of Western democracy and admiration of totalitarianism.” He then set out to explain how all of us, no matter how reasonable and level-headed, are capable of irrational and biased thinking when our sense of group identity is challenged.

He identified three characteristics of “‘nationalistic’ thinking.” The first is obsession: the ideologue’s need to filter everything through an ideological lens, so that even entertainment is not entertaining unless it is orthodox. The second is instability: the ideologue’s ability to switch quickly from one belief to another in order to follow the party line. The third is indifference to reality. Closely tied to this last trait is one of the most interesting ideas in “Notes on Nationalism,” the “inadmissible fact”: something which can be proven true and is generally accepted, but which cannot be admitted by the adherents of a particular ideology. Or, if the fact is admitted, it is explained away or dismissed as unimportant.

The ideas explored in “Notes on Nationalism” run through much of Orwell’s writing, most obviously his anti-totalitarianism and hatred of hypocritical pieties. But central to his argument is how nationalistic thinking exposes our inescapable biases. “The Liberal News Chronicle,” he wrote, “published, as an example of shocking barbarity, photographs of Russians hanged by the Germans, and then a year or two later published with warm approval almost exactly similar photographs of Germans hanged by the Russians.” This anticipated the doublethink of Nineteen Eighty-Four, in which atrocities “are looked upon as normal, and, when they are committed by one’s own side and not by the enemy, meritorious.” The first step down the deceptively short road to totalitarianism is believing that our political enemies pose such a grave threat that defeating them takes precedence over truth, consistency, or common sense.

The limits of reason

Man is a rational animal, as Aristotle put it. The point was not that man is always rational, but that he is capable of reason, and that reason, properly trained, leads to happiness. Orwell wasn’t the first person to observe that this didn’t always work in practice.

“The human understanding when it has once adopted an opinion (either as being the received opinion or as being agreeable to itself) draws all things else to support and agree with it,” wrote Francis Bacon in his 1620 Novum Organum, one of the major early works of the European Enlightenment and Scientific Revolution. Today, we call this confirmation bias. Rather than forming opinions based on the evidence, we often shape the evidence to suit our opinions. We attribute importance to facts which back our preferred theory and dismiss as unimportant those which do not. “It is the peculiar and perpetual error of the human intellect to be more moved and excited by affirmatives than by negatives; whereas it ought properly to hold itself indifferently disposed towards both alike,” Bacon added. We continue to cling to ideas which have been discredited, a phenomenon called belief perseverance. Worse, our faith in discredited ideas can grow even stronger when we are presented with contrary evidence, which is known as the backfire effect. Or we focus on successes and ignore failures, a phenomenon called survivorship bias. Bacon reminds us of the story of Diagoras of Melos, who was shown pictures, hanging in a temple, of those who had made vows to the gods and then escaped shipwreck. Diagoras asked where he could find pictures of those who had made vows but drowned anyway.

Bacon wrote that humans are afflicted with “idols of the mind,” and he identified four. The first are idols of the tribe, flaws in thinking common to all people that come from human nature itself. Second are idols of the cave, or den. All of us, Bacon argued, have a cave in our mind where the light of reason is dimmed, and this cave varies from person to person depending on his or her character, experiences, and environment. Third are idols of the marketplace, associated with the exchange of ideas. Because language can never be perfectly precise, falsehoods can develop and spread as a concept is explained from one person to another. Finally come idols of the theatre: ideas which have been presented to us and have taken root so deeply and firmly that they have become hard to remove. In Bacon’s time, this was the philosophy of Aristotle, which had become so fundamental to Western thought that even parts of it which could easily be disproven remained unchallenged for centuries. To counter the effect of the idols, Bacon proposed “radical induction,” a forerunner of the modern scientific method.

Other Enlightenment thinkers returned to the same theme. “Earthly minds, like mud walls, resist the strongest batteries: and though, perhaps, sometimes the force of a clear argument may make some impression, yet they nevertheless stand firm, and keep out the enemy, truth, that would captivate or disturb them,” wrote John Locke in An Essay Concerning Human Understanding. “Tell a man passionately in love that he is jilted; bring a score of witnesses of the falsehood of his mistress, it is ten to one but three kind words of hers shall invalidate all their testimonies.” This applies to the falsehood of political candidates, pundits, and quacks as much as to the falsehood of mistresses. And, of course, David Hume reminded us that “reason is, and ought only to be the slave of the passions.”

Understanding cognitive bias

Modern research has vindicated Bacon, Locke, Hume, and Orwell, and shone some light on why our brains are so strangely susceptible to cognitive bias. In one study, Geoffrey Cohen was able to get different levels of support for a proposed welfare policy from Republicans and Democrats depending on whether he told them it was a Republican or a Democratic policy. Funnily enough, everyone claimed they were not influenced by the party which proposed the policy, but insisted those on the other side would be.

In another study, conducted by researchers at Yale Law School, subjects were asked about their political views and given a short numeracy test. They were then divided into groups and asked to interpret the results of a fictional study. When the study dealt with the efficacy of a skin cream, the subjects who had scored best on the numeracy test interpreted its results most accurately. But when the study related to the effect of gun control on crime, ideology and partisan affiliation played a much stronger role. Self-identified conservative Republicans struggled to correctly interpret results suggesting that gun control reduced crime, while self-identified liberal Democrats were equally stumped by results suggesting that it increased crime. They didn’t challenge the results or complain about them; they simply couldn’t make the sums work.

Among those at or above the 90th percentile for numeracy, 75 percent got the skin cream question right, but only 57 percent got the gun control question right. In fact, people who were good at maths often did worse than those without a bent for numbers. The experiment seemed to vindicate Michael Shermer’s maxim that “smart people believe weird things because they are skilled at defending beliefs they arrived at for non-smart reasons.” In “Notes on Nationalism,” Orwell noted that some of the best-educated people embraced some of the most bizarre ideas. “One has to belong to the intelligentsia to believe things like that,” he wrote, after describing some 1940s-era conspiracy theories. “No ordinary man could be such a fool.”

Why do we have cognitive biases? They seem like a colossal failure in the evolution of the human brain. But the more we learn about them, the better we understand the purposes they might serve. One explanation is that they save us time and effort: the frugal reasoning argument. Suppose we each go into the supermarket to buy cereal. You carefully read all the boxes, working out whether the All-Bran or the Just Right gives you the best nutritional balance and value in dollars per kilogram or pound. I grab a box because I like the colour or because the brand sponsors my football team. You make the more rational choice, but I spend much less of my life in the cereal aisle and more of it doing other things. It’s easy to see how the same logic applies to, for example, voting. As long as voting the “wrong” way costs me less than the time and effort I would have spent carefully choosing the right candidate, I come out ahead in the end.

And then there’s the possibility that cognitive biases are simply side-effects of useful and valuable short-cuts our brains have developed. Our tendency to see patterns in randomness helps conspiracy theories spread. Our ability to generalise from a few points of information can lead to prejudice. The knee-jerk reactions to danger which kept our ancestors from being eaten by sabre-tooth tigers can also lead to irrational decisions in the face of more abstract threats like crime, terrorism, natural disasters, or stock-market crashes. And finally, shared beliefs, even incorrect ones, might promote group cohesion. Our brains are imperfect at reasoning, but they may be imperfect for good reason.

The moral effort

“For those who feel deeply about contemporary politics, certain topics have become so infected by considerations of prestige that a genuinely rational approach to them is almost impossible,” Orwell wrote. Is there any hope for us, then? Or do we need to avoid politics altogether? Notwithstanding his famous pessimism, Orwell did not counsel withdrawal. “I think one must engage in politics—using the word in a wide sense—and that one must have preferences: that is, one must recognize that some causes are objectively better than others, even if they are advanced by equally bad means,” he wrote. But, he argued, we should be aware of our biases and willing to confront them. This, he wrote, was a moral effort, and “contemporary English literature, so far as it is alive at all to the major issues of our time, shows how few of us are prepared to make it.”

In principle, we all admire those who are free from hypocrisy and who apply the same scrutiny to the ideas of their friends as to those of their enemies. In practice, such people seem to amass far more enemies than friends. Their opponents give them little credit unless they change sides completely, and their allies turn on them for handing ammunition to the enemy. Orwell himself was an example of this. Until Animal Farm and Nineteen Eighty-Four made him a hero to the global anti-Communist movement, he was something of a pariah, shunned by the Right for his revolutionary socialism and by the Left for his unrelenting criticism of the Soviet Union and his willingness to expose hypocrisy within the mainstream socialist movement. He continued to condemn British imperialism even as Luftwaffe bombs were falling on London, and refused to soften his line on Stalin when even the most blue-blooded Conservatives were moderating their rhetoric towards Britain’s wartime ally. Consistency isn’t really all that popular.

Even Orwell, an unusually clear thinker, was not without biases of his own. One was his belief that the capitalist system faced imminent collapse, which he held in the face of mounting evidence to the contrary. “Capitalism itself has manifestly no future,” he wrote confidently in “Towards European Unity” in 1947, and he held that line almost until the end of his life.

As we approach the maelstrom of a US presidential election, we are certain to see a lot of what Orwell described as nationalistic thinking across the mainstream media, blogs, YouTube, and Twitter. It is very easy to find examples among those with whom we disagree. It is harder to find them among those with whom we agree. And it is hardest of all to find them within ourselves. But, to borrow from the First Epistle of John, we deceive ourselves if we think we are without sin when it comes to rational thinking. The challenge is to recognise that “nationalistic” prejudices are wired into our brains. They are efficient and comfortable and help us to fit in, but they can cloud our thinking by making us either hyper-critical or wilfully blind. As Orwell wrote, we must make allowance for the “inevitable bias.”

So the next time you invoke Nineteen Eighty-Four to accuse an opponent of doublethink, pause and consider whether you have taken its author’s advice and examined and acknowledged your own nationalistic biases. It is, as Orwell said, an effort, but at least we can try.

Adam Wakeling is an Australian writer, lawyer, and historian. His next book, A House of Commons for a Den of Thieves: Australia’s Journey from Penal Colony to Democracy, will be published by Australian Scholarly Publishing in October. You can follow him on Twitter @AdamMWakeling.