The Ever-Shrinking Transistor and the Invention of Google


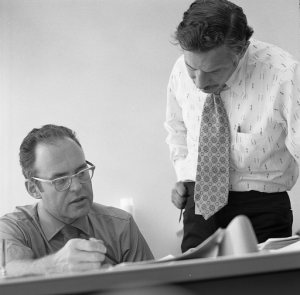

Innovators are often unreasonable people: restless, quarrelsome, unsatisfied, and ambitious. Often, they are immigrants, especially on the west coast of America. Not always, though. Sometimes they can be quiet, unassuming, modest, and sensible stay-at-home types. The person whose career and insights best capture the extraordinary evolution of the computer between 1950 and 2000 was one such. Gordon Moore was at the centre of the industry throughout this period, and he understood and explained better than most that it was an evolution, not a revolution. Apart from graduate school at Caltech and a couple of unhappy years out east, he barely left the Bay Area, let alone California. Unusually for a Californian, he was a native: he grew up in the small town of Pescadero on the Pacific coast, just over the hills from what is now called Silicon Valley, and went to San Jose State College as an undergraduate. There he met and married a fellow student, Betty Whitaker.

As a child, Moore had been so taciturn that his teachers worried about it. Throughout his life he left it to partners like his colleague Andy Grove, or his wife, Betty, to fight his battles for him. “He was either constitutionally unable or simply unwilling to do what a manager has to do,” said Grove, a man toughened by surviving both Nazi and Communist regimes in his native Hungary. Moore’s chief recreation was fishing, a pastime that requires patience above all else. And unlike some entrepreneurs he was, well into his 90s, just plain nice, according to almost everybody who knew him. His self-effacing nature somehow captures the point that innovation in computers was not, and is not, really a story of heroic inventors making sudden breakthroughs, but an incremental, inexorable, inevitable progression driven by the needs of what Kevin Kelly calls “the technium” itself. In that sense Moore embodies the story better than flamboyant figures like Steve Jobs, who managed to build a personality cult around a revolution that was not really about personalities.

In 1965 Moore was asked by an industry magazine called Electronics to write an article about the future. He was then at Fairchild Semiconductor, having been one of the “Traitorous Eight” who in 1957 had defected from the firm run by the dictatorial and irascible William Shockley to set up their own company, where in 1959 they invented the integrated circuit: miniature transistors printed on a single silicon chip. Moore and Robert Noyce would defect again to set up Intel in 1968. In the 1965 article Moore predicted that miniaturization of electronics would continue and that it would one day deliver “such wonders as home computers… automatic controls for automobiles, and personal portable communications equipment”. But that prescient remark is not why the article deserves a special place in history. It was this paragraph that gave Gordon Moore, like Boyle and Hooke and Ohm, his own scientific law:

The complexity for minimum component costs has increased at a rate of roughly a factor of two per year. Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least ten years.

Moore was effectively forecasting steady but rapid progress in miniaturization and cost reduction, with complexity doubling every year, driven by a virtuous circle in which cheaper circuits led to new uses, which led to more investment, which led in turn to cheaper microchips for the same computing power. The unique feature of this technology is that a smaller transistor not only uses less power and generates less heat, but can also be switched on and off faster, so it works better and is more reliable. The faster and cheaper chips got, the more uses they found. Moore’s colleague Robert Noyce deliberately under-priced microchips so that more people would use them in more applications, thereby growing the market.

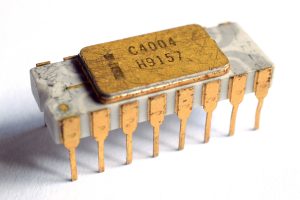

By 1975 the number of components on a chip had passed 65,000, just as Moore had forecast, and it kept on growing as the size of each transistor shrank and shrank, though in that year Moore revised his estimate of the rate of change to a doubling of the number of transistors on a chip every two years. By then Moore was chief executive of Intel, presiding over its explosive growth and its transition from making memory chips to making microprocessors: essentially programmable computers on single silicon chips. Calculations by Moore’s friend and champion, Carver Mead, showed that there was a long way to go before miniaturization hit a limit.
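The arithmetic behind these forecasts is simple compounding. The minimal Python sketch below assumes, purely for illustration, a starting point of 64 components per chip in 1965 (a round power of two chosen here, not a figure from the text). Ten annual doublings multiply that by 2^10 = 1,024, which lands just past 65,000 in 1975. Doubling every two years instead yields only a 32-fold gain per decade.

```python
def projected_components(start_count, start_year, year, doubling_period_years):
    """Project a component count forward, assuming a constant doubling period."""
    elapsed_years = year - start_year
    return start_count * 2 ** (elapsed_years / doubling_period_years)


def growth_factor(years, doubling_period_years):
    """How many times larger a quantity becomes after `years` of steady doubling."""
    return 2 ** (years / doubling_period_years)


# Hypothetical baseline: 64 components per chip in 1965 (illustrative assumption).
count_1975 = projected_components(64, 1965, 1975, doubling_period_years=1)
print(round(count_1975))     # 65536, i.e. "passed 65,000" by 1975

# The 1975 revision: doubling every two years instead of every year.
print(growth_factor(10, 1))  # 1024.0 (a decade of yearly doubling)
print(growth_factor(10, 2))  # 32.0 (a decade of doubling every two years)
print(growth_factor(50, 2))  # 33554432.0 (half a century at the revised rate)
```

The gap between the two doubling periods compounds quickly. Fifty years at the revised rate still multiplies the count by roughly 33 million, which is why even the slower version of the law transformed the industry.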

Moore’s Law kept on going not just for 10 years but for about 50 years, to everybody’s surprise. Yet it probably has now at last run out of steam. The atomic limit is in sight. Transistors have shrunk to less than 100 atoms across, and there are billions on each chip. Since there are now trillions of chips in existence, that means there are billions of trillions of transistors on Planet Earth. They are probably now within an order of magnitude of equalling the number of grains of sand on the planet. Most sand grains, like most microchips, are made largely of silicon, albeit in oxidized form. But whereas sand grains have random—and therefore probable—structures, silicon chips have highly non-random, and therefore improbable, structures.

Looking back over the half-century since Moore first framed his Law, what is remarkable is how steady the progression was. There was no acceleration, there were no dips and pauses, no echoes of what was happening in the rest of the world, no leaps as a result of breakthrough inventions. Wars and recessions, booms and discoveries, seemed to have no impact on Moore’s Law. Also, as Ray Kurzweil was to point out later, Moore’s Law in silicon turned out to be a progression, not a leap, from the vacuum tubes and mechanical relays of previous years: The number of switches delivered for a given cost in a computer trundled upwards, showing no sign of sudden breakthrough when the transistor was invented, or the integrated circuit. Most surprising of all, discovering Moore’s Law had no effect on Moore’s Law. Knowing that the cost of a given amount of processing power would halve in two years ought surely to have been valuable information, allowing an enterprising innovator to jump ahead and achieve that goal now. Yet it never happened. Why not? Mainly because it took each incremental stage to work out how to get to the next stage.

This was encapsulated in Intel’s famous “tick-tock” corporate strategy. A tick was a shrink of the manufacturing process to smaller transistors. A tock, in the intervening year, was a new chip design that made the most of the shrink, preparatory to the next one. But there was also a degree of self-fulfilling prophecy about Moore’s Law. It became a prescription for, not a description of, what was happening in the industry. Gordon Moore, speaking in 1976, put it this way:

This is the heart of the cost reduction machine that the semiconductor industry has developed. We put a product of given complexity into production; we work on refining the process, eliminating the defects. We gradually move the yield to higher and higher levels. Then we design a still more complex product utilizing all of the improvements, and put that into production. The complexity of our product grows exponentially with time.

Silicon chips alone could not bring about a computer revolution. For that, there needed to be new computer designs, new software, and new uses. Throughout the 1960s and 1970s, as Moore foresaw, there was a symbiotic relationship between hardware and software, as there had been between cars and oil. Each industry fed the other with innovative demand and innovative supply. Yet even as the technology went global, the digital industry became more and more concentrated in Silicon Valley (a name coined in 1971) for reasons of historical accident. Stanford University’s aggressive pursuit of defence research dollars led it to spawn a lot of electronics startups, and those startups gave birth to others, which spawned still others. Yet the role of academia in this story was surprisingly small. Academia educated many of the pioneers of the digital explosion in physics or electrical engineering, and of course there was basic physics underlying many of the technologies. Even so, neither hardware nor software followed a simple route from pure science to applied.

Companies as well as people were drawn to the west side of San Francisco Bay to seize opportunities, catch talent and eavesdrop on the industry leaders. As the biologist and former vice-chancellor of Buckingham University, Terence Kealey, has argued, innovation can be like a club: you pay your dues and get access to its facilities. The corporate culture that developed in the Bay Area was egalitarian and open: In most firms, starting with Intel, executives had no reserved parking spaces, large offices, or hierarchical ranks, and they encouraged the free exchange of ideas sometimes to the point of chaos. Intellectual property hardly mattered in the digital industry: There was not usually time to get or defend a patent before the next advance overtook it. Competition was ruthless and incessant, but so were collaboration and cross-pollination.

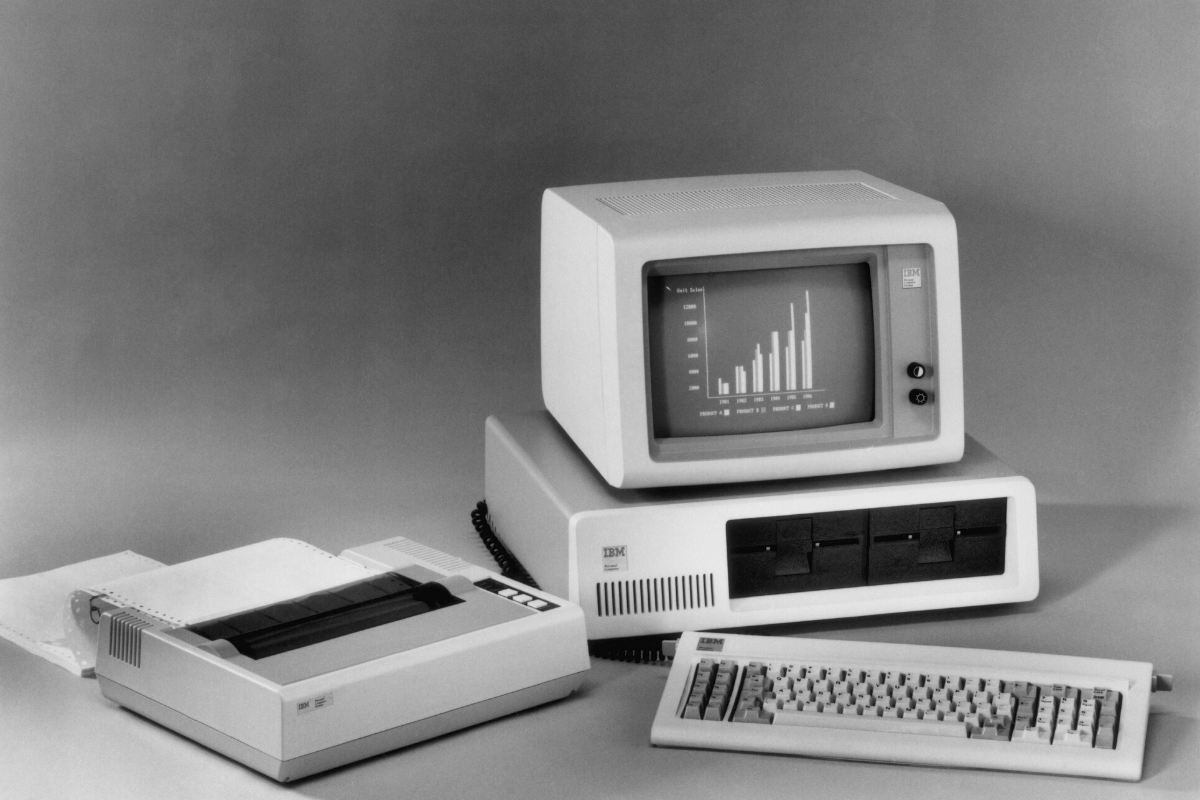

The innovations came rolling off the silicon, digital production line: the microprocessor in 1971, the first video games in 1972, the TCP/IP protocols that made the Internet possible in 1973, the Xerox PARC Alto computer with its graphical user interface in 1974, Steve Jobs’s and Steve Wozniak’s Apple 1 and the Cray 1 supercomputer in 1976, the Atari video game console in 1977, the laser disc in 1978, the “worm”, ancestor of the first computer viruses, in 1979, the Sinclair ZX80 hobbyist computer in 1980, the IBM PC in 1981, Lotus 1-2-3 software in 1982, the CD-ROM in 1983, the word “cyberspace” in 1984, Stewart Brand’s Whole Earth ’Lectronic Link (the WELL) in 1985, the Connection Machine in 1986, the GSM standard for mobile phones in 1987, Stephen Wolfram’s Mathematica language in 1988, Nintendo’s Game Boy and Toshiba’s Dynabook in 1989, the World Wide Web in 1990, Linus Torvalds’s Linux and the film Terminator 2 in 1991, Intel’s Pentium processor in 1993, the Zip disk in 1994, Windows 95 in 1995, the Palm Pilot in 1996, the defeat of the world chess champion, Garry Kasparov, by IBM’s Deep Blue in 1997, Apple’s colourful iMac in 1998, Nvidia’s consumer graphics processing unit, the GeForce 256, in 1999, and The Sims in 2000. And on and on and on.

It became routine and unexceptional to expect radical innovations every few months, an unprecedented state of affairs in the history of humanity. Almost anybody could be an innovator, because thanks to the inexorable logic unleashed and identified by Gordon Moore and his friends, the new was almost always automatically cheaper and faster than the old. So invention meant innovation too.

Not that every idea worked. There were plenty of dead ends along the way. Interactive television. Fifth-generation computing. Parallel processing. Virtual reality. Artificial intelligence. At various times each of these phrases was popular with governments and in the media, and each attracted vast sums of money, but proved premature or exaggerated. The technology and culture of computing were advancing by trial and error on a massive and widespread scale, in hardware, software and consumer products. Looking back, history endows the tryers who made the fewest errors with the soubriquet of genius, but for the most part they were lucky to have tried the right thing at the right time. Gates, Jobs, Brin, Page, Bezos, Zuckerberg were all products of the technium’s advance, as much as they were causes. In this most egalitarian of industries, with its invention of the sharing economy, a surprising number of billionaires emerged.

Again and again, people were caught out by the speed of the fall in cost of computing and communicating, leaving future commentators with a rich seam of embarrassing quotations to mine. Often it was those closest to the industry about to be disrupted who least saw it coming. Thomas Watson, the head of IBM, is supposed to have said in 1943 that “there is a world market for maybe five computers.” Tunis Craven, commissioner of the Federal Communications Commission, said in 1961: “there is practically no chance communications space satellites will be used to provide better telephone, telegraph, television or radio service inside the United States.” Marty Cooper, who has as good a claim as anybody to have invented the mobile phone, or cell phone, said, while director of research at Motorola in 1981: “Cellular phones will absolutely not replace local wire systems. Even if you project it beyond our lifetimes, it won’t be cheap enough.” Tim Harford points out that in the futuristic film Blade Runner, made in 1982, robots are so life-like that a policeman falls in love with one, but to ask her out he calls her from a payphone, not a mobile.

* * *

The surprise of search engines and social media

I use search engines every day. I can no longer imagine life without them. How on Earth did we manage to track down the information we needed before them? I use them to seek out news, facts, people, products, entertainment, train times, weather, ideas, and practical advice. They have changed the world as surely as steam engines did. In instances where they are not available, like finding a real book on a real shelf in my house, I find myself yearning for them. They may not be the most sophisticated or difficult of software tools, but they are certainly the most lucrative. Search is probably worth nearly a trillion dollars a year. It has eaten much of the media’s revenue and enabled the growth of online retail. Search engines, I venture to suggest, are, along with social media, a big part of what the Internet delivers to people in real life.

I use social media every day too, to keep in touch with friends, family and what people are saying about the news and each other. Hardly an unmixed blessing, but it is hard to remember life without it. How on Earth did we manage to meet up, to stay in touch or to know what was going on? In the second decade of the 21st century social media exploded into the biggest and second most lucrative use of the Internet and is changing the course of politics and society.

Yet here is a paradox. There is an inevitability about both search engines and social media. If Larry Page had never met Sergey Brin, if Mark Zuckerberg had not got into Harvard, we would still have search engines and social media. Both already existed when they started Google and Facebook. Yet before search engines or social media existed, I don’t think anybody forecast that they would exist, let alone grow so vast, certainly not in any detail. Something can be inevitable in retrospect, and entirely mysterious in prospect. This asymmetry of innovation is surprising.

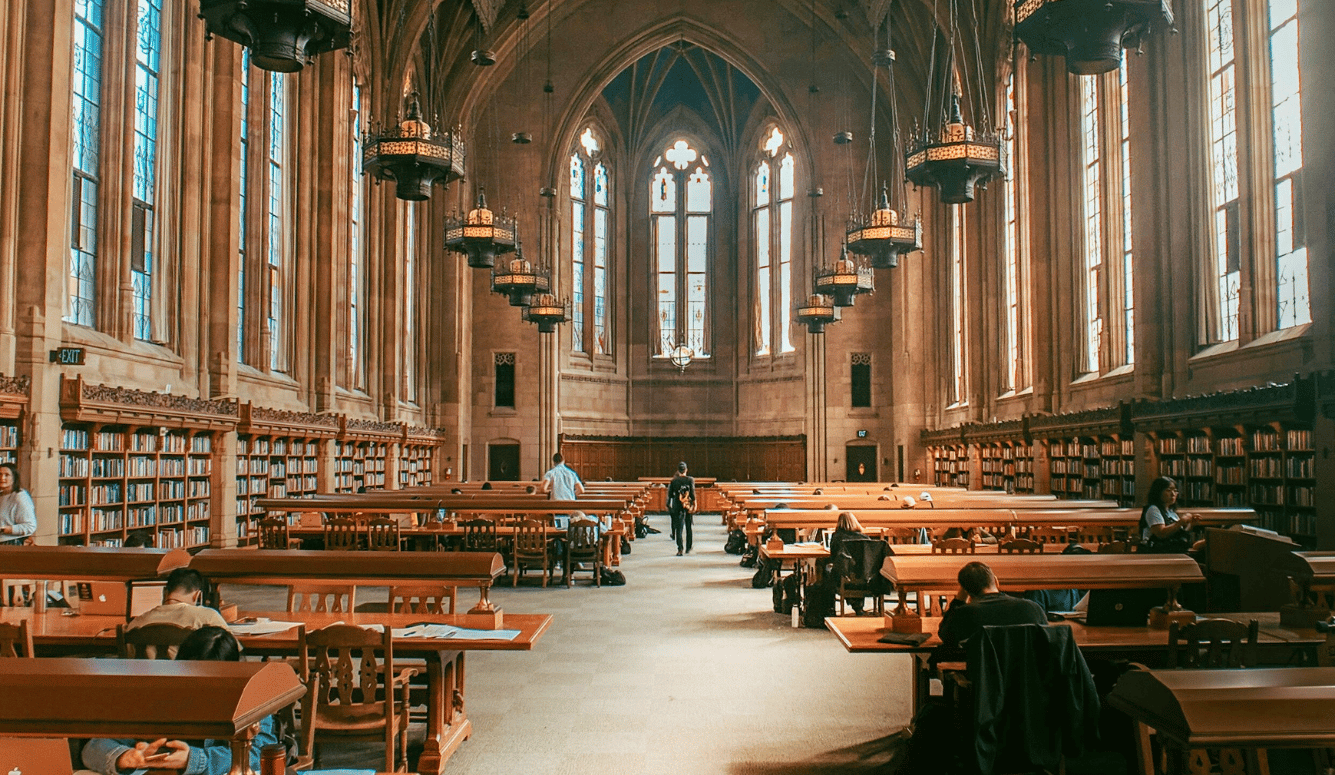

The developments of the search engine and social media follow the usual path of innovation: incremental, gradual, serendipitous, and inexorable; few eureka moments or sudden breakthroughs. You can choose to go right back to the posse of MIT defence-contracting academics, such as Vannevar Bush and J. C. R. Licklider, in the post-war period, writing about the coming networks of computers and hinting at the idea of new forms of indexing and networking. Here is Bush in 1945: “The summation of human experience is being expanded at a prodigious rate, and the means we use for threading through the consequent maze to the momentarily important item is the same as was used in the days of square-rigged ships.” And here is Licklider in his influential essay, written in 1964, on “Libraries of the Future”, imagining a future in which, over the weekend, a computer replies to a detailed question: “Over the weekend it retrieved over 10,000 documents, scanned them all for sections rich in relevant material, analyzed all the rich sections into statements in a high-order predicate calculus, and entered the statements into the data base of the question-answering subsystem.” But frankly such prehistory tells you only how little they foresaw instant search of millions of sources. A series of developments in the field of computer software made the Internet possible, which made the search engine inevitable: time sharing, packet switching, the World Wide Web, and more. Then in 1990 the very first recognizable search engine appeared, though inevitably there are rivals to the title.

Its name was Archie, and it was the brainchild of Alan Emtage, a student at McGill University in Montreal, and two of his colleagues. This was before the World Wide Web was in public use, so Archie indexed the files held on public FTP (File Transfer Protocol) servers. By 1993 Archie was commercialized and growing fast. Its speed was variable: “While it responds in seconds on a Saturday night, it can take five minutes to several hours to answer simple queries during a weekday afternoon.” Emtage never patented it and never made a cent.

By 1994 WebCrawler and Lycos were setting the pace with their new text-crawling bots, gathering links and keywords to index and store in databases. These were soon followed by AltaVista, Excite, and Yahoo!. Search engines were entering their promiscuous phase, with many different options for users. Yet still nobody saw what was coming. Those closest to the front still expected people to wander into the Internet and stumble across things, rather than arrive with specific goals in mind. “The shift from exploration and discovery to the intent-based search of today was inconceivable,” said Srinija Srinivasan, Yahoo!’s first editor-in-chief.
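The idea of gathering keywords into an index can be made concrete with an inverted index, the textbook data structure for keyword lookup. The Python below is a minimal sketch under simplifying assumptions: whitespace tokenization, no punctuation handling, and made-up page URLs. It is not the actual code of WebCrawler, Lycos or any other engine named here. The sketch only answers which pages contain the query words. It says nothing about which of those pages matters most.

```python
from collections import defaultdict


def build_inverted_index(pages):
    """Map each lower-cased word to the set of page URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def keyword_search(index, query):
    """Return the URLs that contain every word of the query, with no ranking at all."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical crawled pages: URL -> page text.
pages = {
    "a.example": "Cheap train times",
    "b.example": "Train times and weather",
    "c.example": "Weather news",
}
index = build_inverted_index(pages)
print(sorted(keyword_search(index, "train times")))  # ['a.example', 'b.example']
```

A keyword engine built this way treats every matching page as equally relevant. Search results therefore depend on which pages happen to contain the words.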

Then Larry met Sergey. Taking part in an orientation programme before joining graduate school at Stanford, a university addicted by then to spinning out tech companies, Larry Page found himself guided by a young student named Sergey Brin. “We both found each other obnoxious,” said Brin later. Both were second-generation academics in technology. Page’s parents were academic computer scientists in Michigan; Brin’s were a mathematician and an engineer in Moscow, then Maryland. Both young men had been steeped in computer talk, and hobbyist computers, since childhood.

Page began to study the links between web pages, with a view to ranking them by popularity, and had the idea, reportedly after waking from a dream in the night, of cataloguing every link on the exponentially expanding web. He created a web crawler to go from link to link, and soon had a database that ate up half of Stanford’s Internet bandwidth. But the purpose was annotating the web, not searching it. “Amazingly, I had no thought of building a search engine. The idea wasn’t even on the radar,” Page said. That asymmetry again.

By now Brin had brought his mathematical expertise and his effervescent personality to Page’s project, named BackRub, then PageRank, and finally Google. The last name was a misspelling of “googol”, the word for 10 to the power of 100, and it worked well as a verb. When they began to use it for search, they realized they had a much more intelligent engine than anything on the market. It ranked sites that others thought important enough to link to above those that merely happened to contain the keywords. Page discovered that three of the four biggest search engines could not even find themselves online. As Walter Isaacson has argued:

Their approach was in fact a melding of machine and human intelligence. Their algorithm relied on the billions of human judgments made by people when they created links from their own websites. It was an automated way to tap into the wisdom of humans—in other words, a higher form of human–computer symbiosis.
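The idea of treating links as votes can be sketched in a few lines. The Python below is a minimal power-iteration version of PageRank. It uses the commonly cited damping factor of 0.85 and a normalized formulation in which the ranks sum to 1. The tiny link graph is hypothetical. Pages with no outgoing links are assumed to share their rank evenly with every page, which is one common convention among several. This is a textbook sketch, not Google’s production algorithm.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict mapping page -> list of pages it links to."""
    # Every page that appears as a source or a target takes part in the ranking.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # The "random jump" share that every page receives regardless of links.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Each outgoing link passes on an equal share of this page's rank.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no outgoing links): spread its rank evenly over all pages.
                share = damping * rank[page] / n
                for t in pages:
                    new_rank[t] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: three pages link to "home", and "home" links to two.
links = {
    "home": ["news", "shop"],
    "news": ["home"],
    "shop": ["home"],
    "blog": ["home"],
}
ranks = pagerank(links)
for page, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

In this toy graph, "home" comes out on top because every other page links to it. "blog", which nothing links to, comes last, even though it might contain any number of keywords. That is the human judgment embedded in links, which Isaacson describes.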

Bit by bit, they tweaked the programs till they got better results. Both Page and Brin wanted to start a proper business, not just invent something that others would profit from, but Stanford insisted they publish, so in 1998 they produced their now famous paper “The Anatomy of a Large-Scale Hypertextual Web Search Engine”, which began: “In this paper, we present Google…” With eager backing from venture capitalists they set up in a garage and began to build a business. Only later were they persuaded by the venture capitalist Andy Bechtolsheim to make advertising the central generator of revenue.

Extracted from How Innovation Works: And Why It Flourishes In Freedom.