
How Innovation Works—A Review


A review of How Innovation Works: And Why It Flourishes in Freedom by Matt Ridley, Harper (May 19th, 2020), 416 pages.

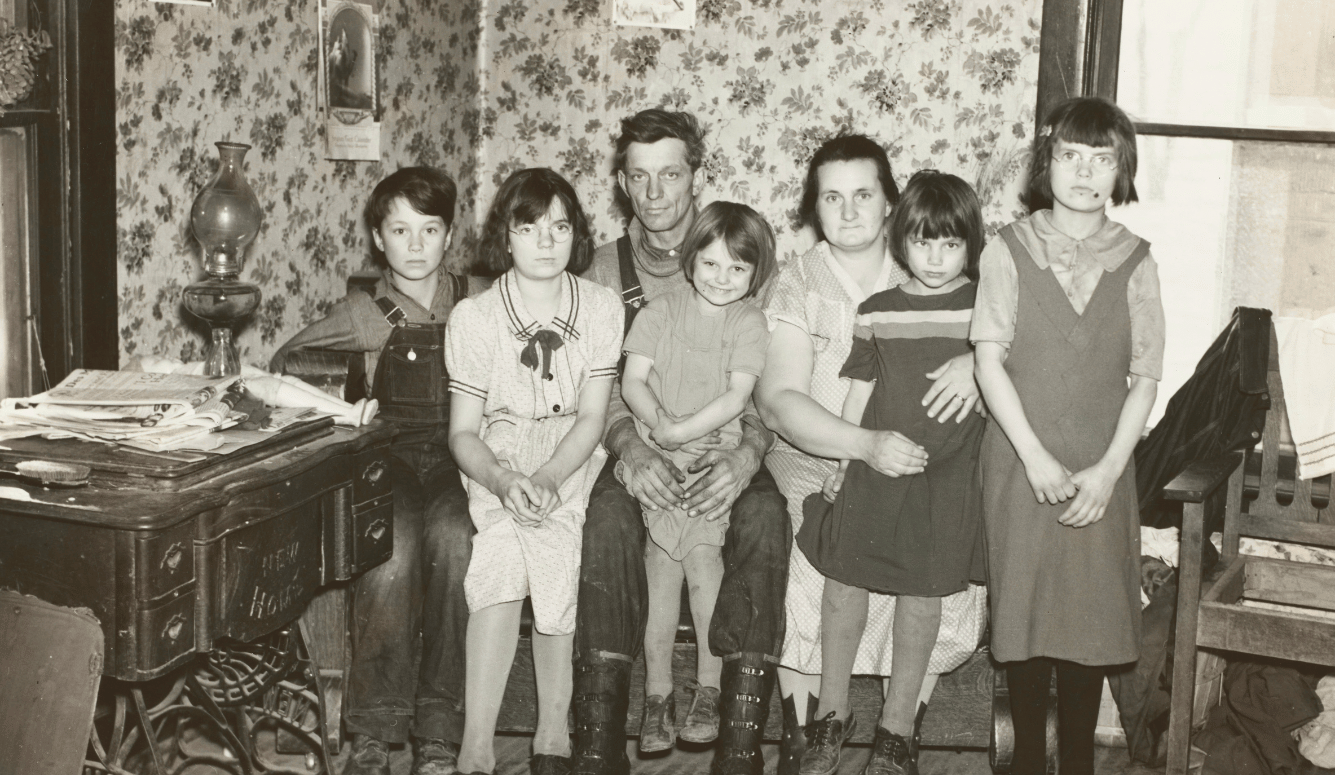

If you are reading this, then you are taking advantage of the global information network we call the Internet and a piece of electronic hardware known as a computer. For much of the world, these technologies are commonplace enough to be taken for granted, and yet they emerged only in the last century. A few hundred thousand years ago, mankind was born into a Hobbesian state of destitution, literally and figuratively naked. Yet we have since solved an enormous sequence of problems to reach the heights of the modern world, and we continue to do so (global extreme poverty currently stands at an all-time low of just nine percent). But what are the necessary ingredients for solving such problems and improving the conditions of humanity? In How Innovation Works, author Matt Ridley investigates the nature of progress by documenting the stories behind some of the developments that make our modern lives possible.

Early in the book, Ridley writes that “Innovation, like evolution, is a process of constantly discovering ways of rearranging the world into forms that are unlikely to arise by chance… The resulting entities are… more ordered, less random, than their ingredients were before.” He emphasizes that mere invention is not enough for some newly created product or service to reach mass consumption—it must be made “sufficiently practical, affordable, reliable, and ubiquitous to be worth using.” This distinction is not intuitively obvious, and Ridley goes on to support his case with historical examples from transportation, medicine, food, communication, and other fields.

The story of humanity is often told as a series of singular heroes and geniuses, and yet most innovations are both gradual and impossible to attribute to any one person or moment. Ridley offers the computer as an illustrative example: its origin cannot be traced to any definitive point in time. The ENIAC (Electronic Numerical Integrator and Computer) began running at the University of Pennsylvania in 1945, but it was decimal rather than binary. Furthermore, a 1973 court ruling found that one of its three creators, John Mauchly, had derived (in effect, "stolen") the idea from an engineer who had built a similar machine in Iowa years before. And a year before the ENIAC was up and running, the famous Colossus had already begun operating as a digital, programmable computer, although it was not intended to be a general-purpose machine.

Besides, neither the ENIAC nor the Colossus was the product of one mind; both were unambiguously collaborative creations. Attributing credit to the inventor of the computer becomes even more difficult when we consider that the very idea of a machine that can compute was developed theoretically by Alan Turing, Claude Shannon, and John von Neumann. And, as Ridley documents, the back-tracing does not stop there. There is simply no single inventor or thinker whom we can credit with the birth of the computer, nor are its origins fixed at a single point in time.

Interestingly, Ridley argues that inventions often precede scientific understanding of the relevant physical principles. In 1717, Lady Mary Wortley Montagu witnessed women in Turkey perform what she called "engrafting": the introduction of a small amount of pus drawn from a mild smallpox blister into the bloodstream of a healthy person through a scratch in the skin of the arm or leg. This extremely crude form of inoculation, it was discovered, led to fewer people falling ill from smallpox. Lady Mary boldly engrafted her own children, which was not well received by some of her fellow Britons. In a case of parallel innovation, a similar understanding of inoculation emerged in colonial Boston, where in 1721 physician Zabdiel Boylston tried it on hundreds of people. A mob tried to kill him for this creative endeavor. These precursors to modern vaccination demonstrate just how much of innovation is a matter of trial and error. Neither Lady Mary nor Zabdiel Boylston understood the true nature of viruses, which would not be discovered for more than a century, nor did they know why introducing a small amount of the disease into a person would produce immunity. Nevertheless, it seemed to work, and that was enough. It was not until the late 19th century that Louis Pasteur explained why vaccination succeeded.

Ridley points out that there have always been opponents of innovation. Such people often have an interest in maintaining the status quo but justify their objections by appealing to the precautionary principle. Even margarine was once called an abomination by the governor of Minnesota, and in 1886 the United States government imposed restrictive regulations intended to reduce its sales. The burgeoning popularity of coffee in the 16th and 17th centuries was met with fierce and moralistic condemnation from both rulers and winemakers, though for different reasons. King Charles II of Scotland and England was uncomfortable with the idea of caffeinated patrons gathering in coffeehouses to criticize the ruling elite. The winemakers, meanwhile, correctly viewed this strange new black beverage as a competitor and lent their support to academics who claimed that coffee gave those who drank it "violent energy." Ridley regards the war against coffee as emblematic of the resistance to novelty, in which "we see all the characteristic features of opposition to innovation: an appeal to safety; a degree of self-interest among vested interests; and a paranoia among the powerful."

Another feature of many of the innovations examined in Ridley's book is that they were often made simultaneously by independent actors. Thomas Edison was not the only person to invent the light bulb: according to Ridley, 21 different people had come up with designs for, or made crucial improvements to, the incandescent light bulb by 1880. Nor is the light bulb the only example of convergent invention: "six different people invented or discovered the thermometer, five the electric telegraph, four decimal fractions, three the hypodermic needle, two natural selection." Ridley conjectures that this is the rule rather than the exception, and goes on to apply it to scientific theories. Even Einstein's theory of relativity, Ridley argues, may have been discovered shortly thereafter by Hendrik Lorentz. And although Watson and Crick are world-famous for discovering the structure of DNA, evidence suggests that they may have only narrowly beaten other scientists who were on similar trails.

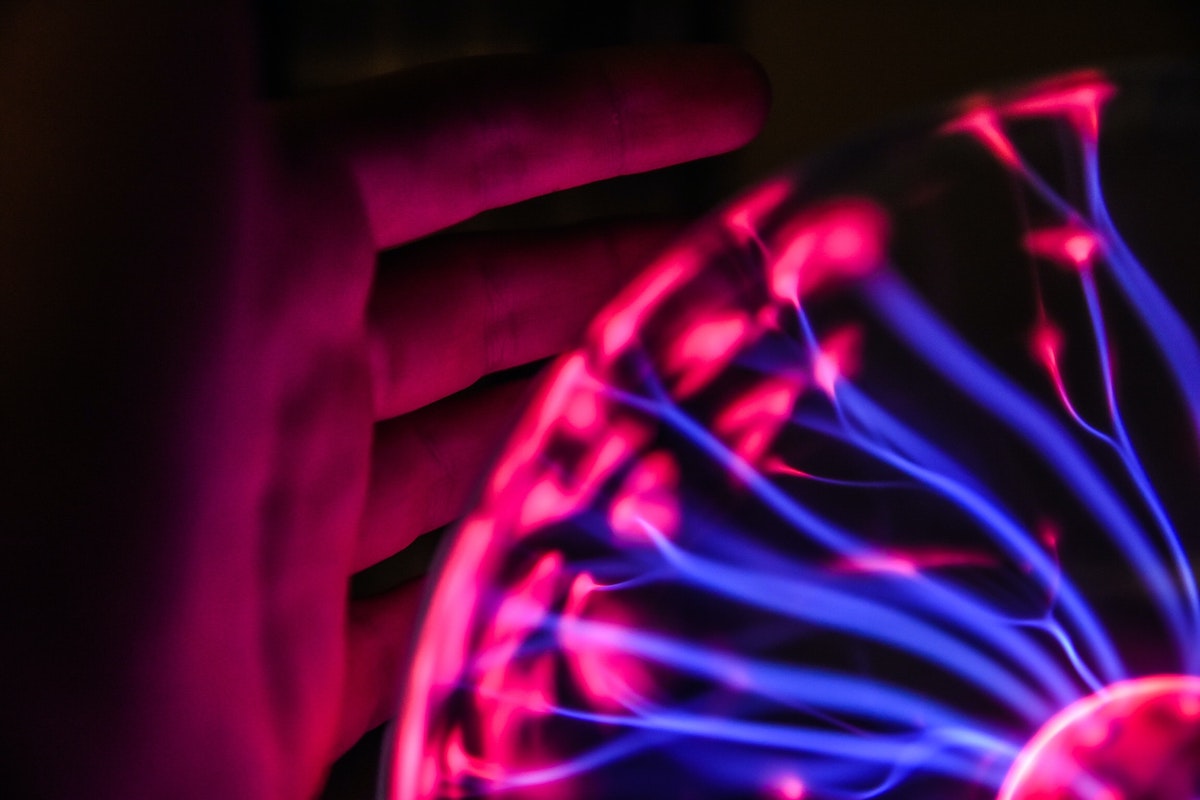

As fundamental as innovation is to the story of humanity, Ridley argues that it predates our species by a few billion years. Touching on an almost spiritual note, he writes that "The beginning of life on earth was the first innovation: the first arrangement of atoms and bytes into improbable forms that could harness energy to a purpose…" Of course, there are differences between the creativity of a human mind and that of the biosphere, but many of the same principles govern both: the necessity of trial and error, a tendency toward incremental improvement, unpredictability, and trends of increasing complexity and specialization.

What is the relationship between our anticipation of innovation and its actual process and products? Ridley endorses Amara's Law, which states that "people tend to overestimate the impact of a new technology in the short run, but to underestimate it in the long run." He traces the intense excitement around the Internet in the 1990s, which collided with the disappointing reality of the dotcom bust of 2000 but was then vindicated by the indisputable digital explosion of the 2010s. Human genome sequencing has followed the same pattern: after its disappointing early years, the technology may be gearing up for a period of success. If we indeed initially overestimate the impact of an innovation and eventually underestimate it, then, as Ridley deduces, we must get it about right somewhere in the middle. He attributes this to one of his central theses, namely that inventing something is only the first step; the creation must then be innovated until it is affordable enough for mass consumption. Applying Amara's Law to contemporary trends, Ridley thinks that artificial intelligence is in the "underestimated" phase while blockchain is in the "overestimated" phase.

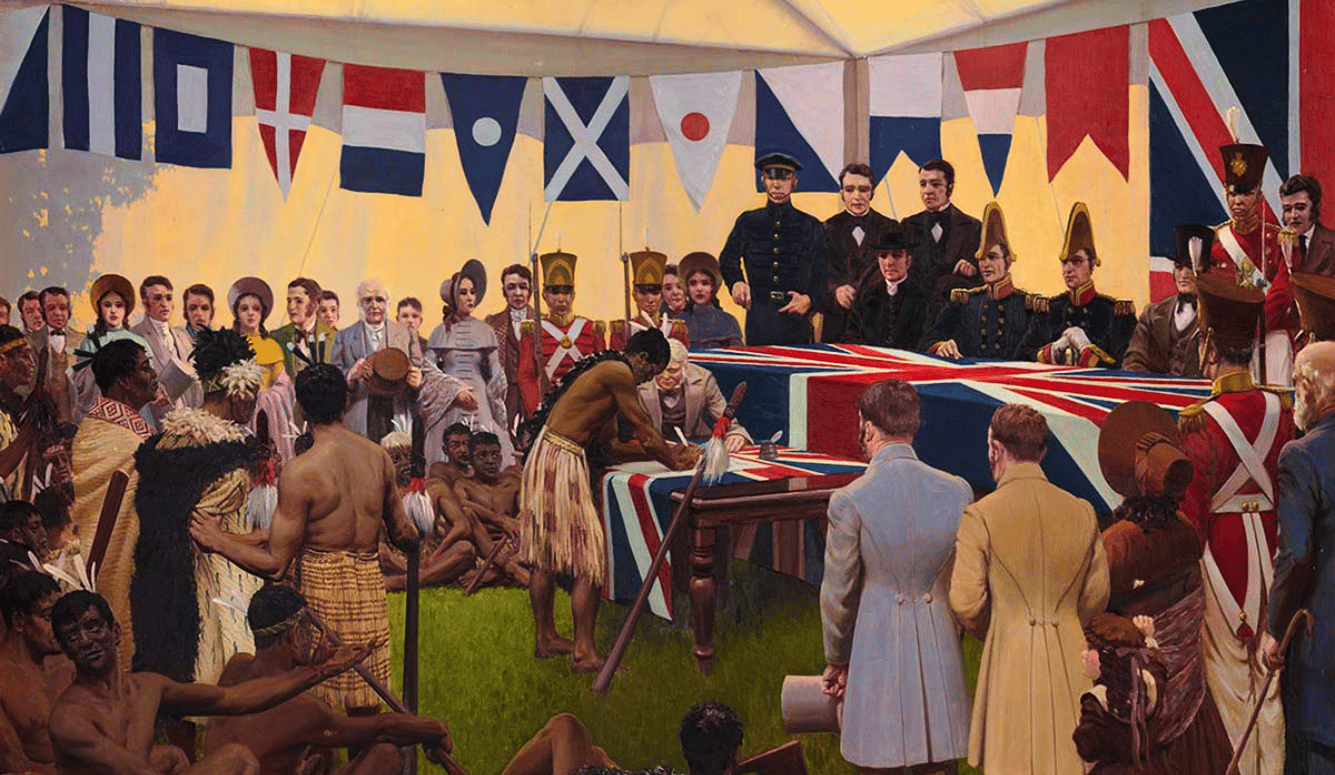

Ridley dispels many of the falsehoods surrounding innovation, from "regulations help protect consumers" to "such-and-such innovation will cause mass unemployment." In doing so, he does not seem to approach these issues from an ideological perspective; rather, he appears to have studied history first and only then concluded that such common beliefs are misplaced. Similarly, How Innovation Works explores the relationship between innovation and empire, concluding that as ruling elites expand their territory and centralize their control, the creative engine of their people stalls and technological progress stagnates. This, in turn, contributes to those empires' eventual downfall. In contrast to such ossified behemoths, regions of multiple, fragmented states were often home to intense innovation, as in Renaissance Italy, ancient Greece, or China during its Warring States period. In short, larger states tended to be more wasteful, bureaucratic, and legislatively restrictive than smaller ones, and freedom, as the book's subtitle indicates, is critical to progress.

In How Innovation Works, Matt Ridley sets out to address a problem: that "innovation is the most important fact about the modern world, but one of the least well understood. It is the reason most people today live lives of prosperity and wisdom compared with their ancestors, [and] the overwhelming cause of the great enrichment of the past few centuries…" Many people are simply unaware that generations of creativity have been necessary for humanity to reach its current state (and, of course, problems will always remain to be solved).

Ridley's book is his valiant attempt to change that. How Innovation Works contains many more lessons, stories, and patterns than I have touched on here, and Ridley weaves them all together into a kind of historical love letter to our species. It is the latest addition to a growing list of recent books making a rational case for optimism and progress, including Michael Shermer's The Moral Arc, Steven Pinker's Enlightenment Now, and David Deutsch's The Beginning of Infinity. Unlike those authors, Ridley focuses primarily on technological progress, and his passion and gratitude for it are infectious:

> For the entire history of humanity before the 1820s, nobody had travelled faster than a galloping horse, certainly not with a heavy cargo; yet in the 1820s suddenly, without an animal in sight, just a pile of minerals, a fire and a little water, hundreds of people and tons of stuff are flying along at breakneck speed. The simplest ingredients—which had always been there—can produce the most improbable outcome if combined in ingenious ways… just through the rearrangement of molecules and atoms in patterns far from thermodynamic equilibrium.

Ridley presents an inspiring view of history, because we are not merely the passive recipients of thousands of years of innovations. We can contribute to this endless chain of progress, if we so choose.