
Stereotypes Are Often Harmful, and Accurate

Stereotyping begets many social problems, but you seldom solve a problem by mischaracterizing its nature.

Stereotypes have a bad reputation, and for good reasons. Decades of research have shown that stereotypes can facilitate intergroup hostility and give rise to toxic prejudices around sex, race, age and multiple other social distinctions. Stereotypes are often used to justify injustice, validate oppression, enable exploitation, rationalize violence, and shield corrupt power structures. Stereotype-based expectations and interpretations routinely derail intimate relationships, contaminate laws (and their enforcement), poison social commerce, and stymie individual achievement.

For example, research has shown that individual performance may be adversely affected by heightened awareness of negative group stereotypes, a phenomenon known as ‘stereotype threat.’ If I show up for a pickup basketball game knowing that all the young players around me hold a negative stereotype about the athleticism of middle-aged Jewish guys, the knowledge that I’m being judged this way will undermine my confidence and concentration, and with them my overall performance on the court (thus perpetuating the stereotype).

But you don’t even have to go to the research to develop your distaste for stereotypes. Looking around, most of us have seen with our own eyes the harm that can come from stereotyping, from stuffing complex human beings into categories at once too broad and too narrow and using those to justify all manner of unfair and vicious conduct.

Looking inward, most of us resent it when our deeply felt complexity is denied; when we are judged by those who don’t know us well; when we are robbed of our uniqueness, our genetic, biographical, psychological one-of-a-kindness. We want our story to be the fully fleshed narrative, nuanced and rich and singular as we feel ourselves to be, as we actually are. Judge me solely by my external group resemblances, by how others who share some of my features have behaved, or by any measure that does not require actual knowledge of me, and you do me an injustice.

Indeed, one can hardly quarrel with the notion that we are all individuals and should be judged as such, on our own merit and the contents of our character, rather than seen as merely abstractions or derivatives of group averages. There appears to be a broad consensus, among lay persons and social scientists alike, that stereotypes—fixed general images or sets of characteristics that a lot of people believe represent particular types of persons or things—are patently lazy and distorted constructions, wrong to have and wrong to use.

The impulse to dismiss stereotype accuracy (and, by extension, group differences as a whole) as wrongheaded fiction is mostly well-intentioned, and has no doubt produced much useful knowledge about individual variation within groups as well as the myriad commonalities that exist across groups and cultures. Yet the fact that stereotypes are often harmful does not mean that they are merely process failures, bugs in our software. Nor does it mean that they are often inaccurate. In fact, quite shockingly to many, that prevailing twofold sentiment, which sees stereotypical thinking as faulty cognition and stereotypes themselves as patently inaccurate, is itself wrong on both counts.

First, stereotypes are not bugs in our cultural software but features of our biological hardware. This is because the ability to stereotype is often essential for efficient decision-making, which facilitates survival. As Yale psychologist Paul Bloom has noted, “you don’t ask a toddler for directions, you don’t ask a very old person to help you move a sofa, and that’s because you stereotype.”

Our evolutionary ancestors were often called to act fast, on partial information from a small sample, in novel or risky situations. Under those conditions, the ability to form a better-than-chance prediction is an advantage.

Our brain constructs general categories, from which it derives predictions about specific, novel situations relevant to those categories. That trick has served us well enough to be selected into our brain’s basic repertoire. Wherever humans live, so do stereotypes. The impulse to stereotype is not a cultural innovation, like couture, but a species-wide adaptation, like color vision. Everyone does it. The powerful use stereotypes to enshrine and perpetuate their power, and the powerless use stereotypes just as much when seeking to defend themselves against, or rebel against, the powerful.

Per Paul Bloom:

Our ability to stereotype people is not some sort of arbitrary quirk of the mind, but rather it’s a specific instance of a more general process, which is that we have experience with things and people in the world that fall into categories and we could use our experience to make generalizations of novel instances of these categories. So everyone here has a lot of experience with chairs and apples and dogs, and based on this, you could see these unfamiliar examples and you could guess — you could sit on the chair, you could eat the apple, the dog will bark.

Second, contrary to popular sentiment, stereotypes are usually accurate. (Not always, to be sure. And some false stereotypes are purposefully promoted in order to cause harm. But this fact should further compel us to study stereotype accuracy well, so that we can distinguish truth from lies in this area.) That stereotypes are often accurate should not be surprising to the open and critically minded reader. From an evolutionary perspective, stereotypes had to confer a predictive advantage to be selected into the repertoire, which means that they had to possess a considerable degree of accuracy, not merely a ‘kernel of truth.’

The notion of stereotype accuracy is also consistent with the powerful information-processing paradigm in cognitive science, in which stereotypes are conceptualized as “schemas,” the organized networks of concepts we use to represent external reality. Schemas are only useful if they are by and large (albeit imperfectly) accurate. Your ‘party’ schema may not include all the elements that exist in all parties, but it must include many of the elements that exist in many parties to be of any use to you as you enter a room and decide whether a party is going on and, if so, how you should behave.

Conceptual coherence notwithstanding, the question of stereotype accuracy is at heart an empirical one. In principle, all researchers need to do is ask people for their perceptions of a group trait, then measure the actual group on that trait, and compare the two. Alternatively, they may ask people about the difference on a certain trait between two groups and compare that to the actual difference.
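As a rough illustration of the comparison just described, the Python sketch below subtracts each actual group difference from the perceived one and labels the result. Everything in it is an assumption for illustration: the `TraitJudgment` class, the `classify` helper, the `tolerance` cutoff, and all the numbers are hypothetical, not data from any study, and real research uses more elaborate measures.

```python
from dataclasses import dataclass


@dataclass
class TraitJudgment:
    trait: str
    perceived_diff: float  # average perceived group difference (e.g., in SD units)
    actual_diff: float     # measured group difference on the same scale


def classify(j: TraitJudgment, tolerance: float = 0.1) -> str:
    """Label one judgment as 'accurate', 'overestimate', or 'underestimate'.

    Assumes both differences are signed the same way (positive means the
    stereotyped group scores higher). `tolerance` is a hypothetical cutoff
    for how close counts as accurate; there is no agreed-upon value.
    """
    error = j.perceived_diff - j.actual_diff
    if abs(error) <= tolerance:
        return "accurate"
    # A positive error means people perceive a bigger gap than actually exists.
    return "overestimate" if error > 0 else "underestimate"


# Made-up numbers for three unnamed traits, purely for illustration.
judgments = [
    TraitJudgment("trait A", perceived_diff=0.40, actual_diff=0.45),
    TraitJudgment("trait B", perceived_diff=0.20, actual_diff=0.50),
    TraitJudgment("trait C", perceived_diff=0.90, actual_diff=0.60),
]

for j in judgments:
    print(j.trait, classify(j))
```

With these made-up values, trait A falls within the tolerance and is labeled accurate, trait B is underestimated, and trait C is overestimated. Changing `tolerance` changes the labels, which shows how much the verdict depends on where the accuracy bar is set.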

Alas, as you might have noticed, life is complex, and measuring stereotype accuracy in the real world is not easy. First, we have to agree on what constitutes ‘accuracy.’ Clearly, 100 percent accuracy is too high a bar, and, say, 3 percent may be too low; but what about 65 percent? Deciding what hit rate will constitute acceptable accuracy is a challenge. Similarly, we also need to agree on what constitutes a ‘stereotype.’ In other words, when does a belief become ‘widely held’? Again, a belief held by 100 percent of people is too high a bar, by 3 percent too low; but what about 65 percent?

Second, it is difficult to assess the differences between perceived and actual traits in a group without relying on self-report measures — what people think about others, and what they think about themselves. Self-report measures are notoriously susceptible to social desirability and other biases. People may lie to look good, or shift their standard of comparison (I compare myself to people who are like me and you to people who are like you, as opposed to comparing both of us to the same standard), thus mucking up the results.

Moreover, even if we can get beyond self-report and achieve an objective measurement of a group’s trait of interest, we still must contend with the possibility that this trait may itself be largely a product of stereotyping. In that scenario, speaking of stereotype accuracy becomes cynical, like killing your parents and then demanding sympathy for being an orphan.

Another complication with measuring stereotypes is deciding which aspect of the score distribution to focus on. Stereotypes are often assessed using the mean (the average score) rather than other features of the distribution, such as the mode (the most common score), the median (the score that divides the distribution into equal halves), or variability (the average distance of individual scores from the mean). This is problematic because the mean captures only one feature of a distribution, and even those who estimate the mean correctly may misjudge the mode, the median, or the variability.
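To make the point concrete, the hypothetical Python sketch below describes three made-up groups that share the same mean but differ in median, mode, or spread. It uses the standard `statistics` module; `describe` is an illustrative helper, the scores are invented, and "variability" is computed as the mean absolute deviation to match the definition above (many researchers would use the standard deviation, for example `statistics.pstdev`, instead).

```python
import statistics


def describe(scores: list[float]) -> dict[str, float]:
    """Summarize a score distribution with several statistics, not just the mean."""
    mean = statistics.mean(scores)
    return {
        "mean": mean,
        "median": statistics.median(scores),
        "mode": statistics.mode(scores),
        # "Average distance of individual scores from the mean"
        # (mean absolute deviation).
        "variability": sum(abs(x - mean) for x in scores) / len(scores),
    }


# Hypothetical scores for three made-up groups; all share a mean of 5.
groups = {
    "A": [4, 5, 5, 5, 6],
    "B": [1, 5, 5, 5, 9],
    "C": [2, 2, 3, 4, 14],
}

for name, scores in groups.items():
    print(name, describe(scores))
```

All three groups have a mean of 5. Groups A and B also share a median and mode of 5 but differ in variability (0.4 vs. 1.6), while group C has a median of 3, a mode of 2, and a variability of 3.6. Someone who judged only the mean would be right about all three groups and still miss these differences.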

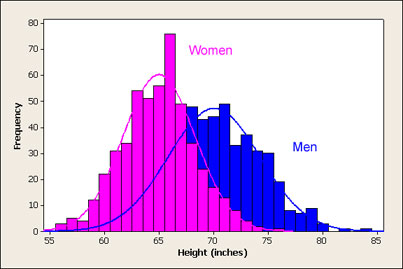

For example, the stereotype about men being bigger than women is based on the correct perception that the average man is bigger than the average woman. In this case, averages may suffice to support a claim of accuracy, since there is no stereotype concerning how dispersed the male distribution is compared to the female distribution. But variability stereotypes do exist. For example, in-group members are usually perceived (wrongly, in this case) as more variable than out-group members (this is known as the ‘out-group homogeneity bias’).

Interestingly, looking at the variability of groups’ trait distributions creates added wrinkles related to evaluating stereotype accuracy. For one, the distribution curves of different groups for most important traits overlap. Thus, even though a true and robust difference in average male vs. female height exists, some women are going to be taller than some men. Therefore, in looking for, say, tall employees, an employer cannot judge individual candidates fairly by gender status alone. The woman who just walked in may be one of those high on the female height distribution, thus towering over many male recruits who happen to reside low on the male height distribution curve. Score one against stereotypes.

At the same time, if we consider variability parameters such as the overlap between curves, then we must consider not just the overlapping middle of the distributions but also their edges, which may not overlap. In other words, the small average difference between men and women allows some women to be taller than some men, but the male distribution’s tail may extend further at the highest end. In the case of height, this means that if you look at the top 0.001 percent of the tallest humans, you will find only men. So, if you’re looking for the very tallest people in the world to join your team, you may safely, and fairly, turn down all female candidates. Score one for stereotypes.
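The interplay of overlap and tails can be sketched with two normal distributions using Python's `statistics.NormalDist`. This is a simplified model under stated assumptions: both groups are taken to be normally distributed with equal spread and equal size, and the parameters are illustrative numbers, not measured population statistics. `prob_woman_taller` and `share_of_men_above` are hypothetical helper names.

```python
from statistics import NormalDist

# Illustrative (hypothetical) parameters in cm. These are NOT measured
# population statistics, just numbers chosen to mimic a modest mean gap
# with equal spread in both groups.
men = NormalDist(mu=175, sigma=7)
women = NormalDist(mu=162, sigma=7)


def prob_woman_taller(men: NormalDist, women: NormalDist) -> float:
    """P(a randomly drawn woman is taller than a randomly drawn man)."""
    # For independent normals, W - M is normal with mean mu_w - mu_m
    # and variance sigma_w**2 + sigma_m**2.
    diff = NormalDist(women.mean - men.mean, (women.variance + men.variance) ** 0.5)
    return 1 - diff.cdf(0)


def share_of_men_above(cutoff: float, men: NormalDist, women: NormalDist) -> float:
    """Fraction of people above `cutoff` who are men, assuming equal group sizes."""
    # Note: 1 - cdf loses precision for extremely high cutoffs; fine here.
    p_men = 1 - men.cdf(cutoff)
    p_women = 1 - women.cdf(cutoff)
    return p_men / (p_men + p_women)


print(f"P(woman taller than man): {prob_woman_taller(men, women):.3f}")
for cutoff in (170, 180, 190, 200):
    print(cutoff, f"{share_of_men_above(cutoff, men, women):.4f}")
```

Under these assumptions, a randomly chosen woman is taller than a randomly chosen man a little under 10 percent of the time, yet the share of men above the cutoff climbs steadily as the cutoff rises. In a normal model that share approaches, but never strictly reaches, 100 percent; here the climb comes from the mean gap alone, and greater male variability, if present, would steepen it further.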

These difficulties in defining and measuring stereotypes create inevitable system ‘noise,’ error, and imprecision. But a less than perfect assessment is not at all useless. The stereotype that men are more violent than women is accurate, and can serve as a useful predictive heuristic without implying that the man you’re with is violent, or that most men you’ll meet are. People who say that grapes are sweet don’t mean to say that all grapes everywhere are always sweet, and they may not know the whole range of grape flavor distribution. Yet in real-world terms the statement is more accurate and useful than it is inaccurate and useless. In other words, the stereotype is true, even if it is neither the whole truth nor nothing but the truth.

This fact may, in the minds of some, undermine the accuracy claim. Yet those who wish to hold stereotype accuracy measures to a strict standard should be willing to apply the same standard when evaluating claims of stereotype inaccuracy. When you say, ‘Stereotypes are inaccurate,’ is that the whole truth and nothing but the truth? I think not. When you claim to be a unique individual, like no one else, you are definitely telling an important truth, but not the whole truth and nothing but the truth. After all, you are also in some ways like everyone else (you follow rewards; you sleep), and in other ways you are like some people but not others (you are an extrovert, an American).

Conceptual, methodological, and ideological obstacles notwithstanding, research on stereotype accuracy has been accumulating at quite a pace since the 1960s. The results have converged quite decisively on the side of stereotype accuracy. For example, comparing perceived gender stereotypes to meta-analytic effect sizes, Janet Swim (1994) found that participants were “more likely to be accurate or to underestimate gender differences than overestimate them.” Such results have been amply replicated since. According to Lee Jussim (2009) and his colleagues at Rutgers University–New Brunswick, “Stereotype accuracy is one of the largest and most replicable effects in social psychology.”

Likewise, reviewing the literature, Koenig and Eagly (2014) concluded that “in fact, stereotypes have been shown to be moderately to highly accurate in relation to the attributes of many commonly observed social groups within cultures.”

Moreover, research findings of stereotype accuracy are compatible with the adjacent (but much less controversial) literature on interpersonal accuracy, an interdisciplinary field probing the accuracy of people’s beliefs, perceptions, and judgments of individuals. Communication, personality and social psychology studies have generally shown that people are quite accurate at judging the states and traits of other people.

Now would be a good time to remind ourselves that just as stereotype perniciousness does not imply inaccuracy, stereotype accuracy does not negate perniciousness. That the tendency to stereotype is adaptive does not mean that it comes at no cost. Every adaptation extracts a price. The fact that stereotypes are often accurate does not render their existence socially benign.

As Alice Eagly has shown, stereotypes exert much of their harmful social influence at the sub-category level, when an individual violates group expectations (a process known as ‘role incongruity’). The average woman is less knowledgeable about cars than the average man, but a woman mechanic is not, yet she will be wrongly perceived as less knowledgeable. Likewise, with women stereotyped as weak, a strong woman will be viewed as less womanly, and may face doubt, ridicule, or rebuke for failing to conform to the stereotype (as will a weak man).

Stereotyping begets many social problems, but you seldom solve a problem by mischaracterizing its nature. Speaking of nature, even if we concede that stereotyping is adaptive and that many stereotypes (and mean group differences) are accurate, the question often comes up as to whether the source of these observed differences is nature or nurture.

The traditionalist, old-school claim is, of course, that the stereotypical behaviors and traits we associate with men and women, for example, are in fact nature carved at its joints, manifesting our biological evolutionary heritage. While this claim has been used to pernicious ends (“letting women do x is against nature,” etc.), that in itself does not make it patently inaccurate. We are biology-in-environment systems. It is foolhardy to deny that biology constantly tugs at us, at the very least constraining our potential. The fact that women have a uterus and men produce sperm must find expression in the sexes’ respective survival and reproductive strategies, and with that in the processes of their brains. If I have swift feet and you have big wings, when the hungry lion comes for us, I will run and you will fly. To predict otherwise is folly.

Often, the argument over the source of stereotyped group differences masks a fight over the politics of social change. The biology, ‘nature’ side, endorsed more often by those in power, hopes that winning the argument will enshrine the status quo as natural and justified, thus branding attempts to change it as misguided and dangerous. The social constructionist, ‘nurture’ view, appealing to the socially marginalized, embodies the hope that if stereotypes are merely social artifacts, then they can be eradicated by changing the way we are socialized, the way we speak, and the ways we interact.

And so they go at it, to neither end nor avail, in part because both approaches are rooted in the old ‘nature vs. nurture’ mode of thinking, which is all but obsolete. A better way, perhaps, is to see the biology-society relationship as integrated and reciprocally determined. Biology shapes society, and society shapes the meaning of biology. (It also shapes biology itself. Climate change, anyone?) In other words, to the extent that stereotypes are biologically based, they are given meaning only in social contexts, using socially constructed tools, such as the concept of ‘meaning.’ To the extent that stereotypes are social constructions, they are constructed by biologically evolved brains.

So it seems likely that stereotype harm may be due not mainly to inaccurate perception but to the increasingly awkward fit between ancient adaptations and current social conditions. This lack of fit is implicated in many a modern woe. For example, we are dying of obesity not because storing fat is inherently bad, but because this adaptation evolved at a time when food was scarce and its supply unpredictable. As food becomes abundant and easy to obtain, the old tendency begins to work against us. The polar bear’s thick fur, great for retaining heat, is adaptive in cold weather. If (or when, as it were) the ice cap turns to desert, the same fur will become a death trap.

As for stereotypes, the stereotyping process evolved at a time when the tribe was the defining unit of identity. Today, in the epoch of the differentiated self, tribal distinctions, however accurate, may no longer provide sufficiently useful and important cues for adaptive action. Rapid social change, in other words, is rendering stereotyping superfluous, and certain previously relevant stereotypes gratuitous.

For example, male physical superiority, and the attendant stereotype, may have been sufficient to justify and support a social system of male dominance during a time when physical strength was a crucial survival and social asset. Due to socio-cultural innovation, it no longer is. The most socially powerful people around, and those most likely to survive, are no longer the most physically strong. The old stereotype that women are physically weak is still accurate, but the right question in our new social times might be: So what?