AI Debate

Plenty of Room for AI-nxiety

Things change once we leave the world of infallible humans who can create a perfectly benevolent general intelligence.

Editor’s note: this essay is part of an ongoing series hosted by Quillette debating the practical and ethical implications of AI. If you would like to contribute or respond to this essay or others in the series, please send a submission to [email protected].

In light of recent articles addressing the apprehension surrounding Artificial Intelligence and its implications, two considerations deserve attention: the consequences of even the most moral artificial intelligence, and the influence of human goals on how AI is developed. Note that we are not talking about contemporary AI here. We are discussing the as-yet-unseen AI of the future, Strong Artificial Intelligence: a general intelligence capable of the same kinds of universal reasoning, prediction, and analysis that humans undertake. I will refer to it as Artificial General Intelligence (AGI) to distinguish it from our digital Go players and car drivers.

The Benevolent Superintelligence

Thomas Metzinger is a German philosopher best known for his work on consciousness and ethics. He has developed what I consider the strongest pure rationale against AGI, a thought experiment he calls ‘Benevolent Artificial Anti-Natalism.’ By “pure,” I mean that no human or outside involvement is required, or even expected. The thought experiment begins at the point at which humans have perfectly created the most moral Artificial General Intelligence possible. This steelman is what gives his argument its strength:

Obviously, it is an ethical superintelligence not only in terms of mere processing speed, but it begins to arrive at qualitatively new results of what altruism really means. This becomes possible because it operates on a much larger psychological data-base than any single human brain or any scientific community can. Through an analysis of our behaviour and its empirical boundary conditions it reveals implicit hierarchical relations between our moral values of which we are subjectively unaware, because they are not explicitly represented in our phenomenal self-model. Being the best analytical philosopher that has ever existed, it concludes that, given its current environment, it ought not to act as a maximizer of positive states and happiness, but that it should instead become an efficient minimizer of consciously experienced preference frustration, of pain, unpleasant feelings and suffering. Conceptually, it knows that no entity can suffer from its own non-existence.

The superintelligence concludes that non-existence is in the own best interest of all future self-conscious beings on this planet. Empirically, it knows that naturally evolved biological creatures are unable to realize this fact because of their firmly anchored ‘existence bias.’ And so, the superintelligence decides to act benevolently.

This thought experiment rests upon three interwoven assumptions:

- That human society’s morals and ethics are hopelessly hypocritical. The rules are filled with exceptions, edge-cases, and discomforting boundaries. This is because they are not empirically established, but instead rooted in complex biological and evolutionary processes that tremble before the trolley problem.

- That humans, and in fact all biological organisms, exhibit an existence bias. As biological beings, we cannot rationally weigh the positives and negatives of existence because we cannot give equal weight to non-existence.

- That the costs of suffering outweigh the benefits of happiness.

It is the third point that poses the greatest empirical difficulty. This is not to say that it is wrong, only that it is the hardest to prove. Metzinger sidesteps the empirical question, offering instead a subjective analysis that makes the point feel self-evident:

The superintelligence knows that one of our highest values consists in maximizing happiness and joy in all sentient beings, and it fully respects this value. However, it also empirically realizes that biological creatures are almost never able to achieve a positive or even neutral life balance. It also discovers that negative feelings in biosystems are not a mere mirror image of positive feelings, because there is a much higher sense of urgency for change involved in states of suffering, and because it occurs in combination with the phenomenal qualities of losing control and coherence of the phenomenal self—and that this is what makes conscious suffering a very distinct class of states, not just the negative version of happiness. It knows that this subjective quality of urgency is dimly reflected in humanity’s widespread moral intuition that, in an ethical sense, it is much more urgent to help a suffering person than to make a happy or emotionally neutral person even happier.

Once you accept his premises, the conclusion follows naturally: even if we create the most benevolent and moral Artificial General Intelligence imaginable, we still put ourselves at immense risk of non-existence. From this perspective, it doesn’t even matter that humans are fallible and can’t be trusted to build software without defects. Biological systems do not operate on a moral basis, and so they doom themselves by creating an arbiter of moral justice. It is not ‘fear of the unknown’ that should give us pause when it comes to AGI; there are plenty of reasons for concern that we already know about. Limiting the discussion to such a narrow framing strawmans the broader debate about the harmful effects of AGI and discounts the benefits that a very rational anxiety about them might produce:

- We want AGI researchers to be anxious.

- We want them to double-check their work.

- We want them to worry that it could harm humanity.

- This is how we infuse our work with care and ensure that it remains beneficial for us.

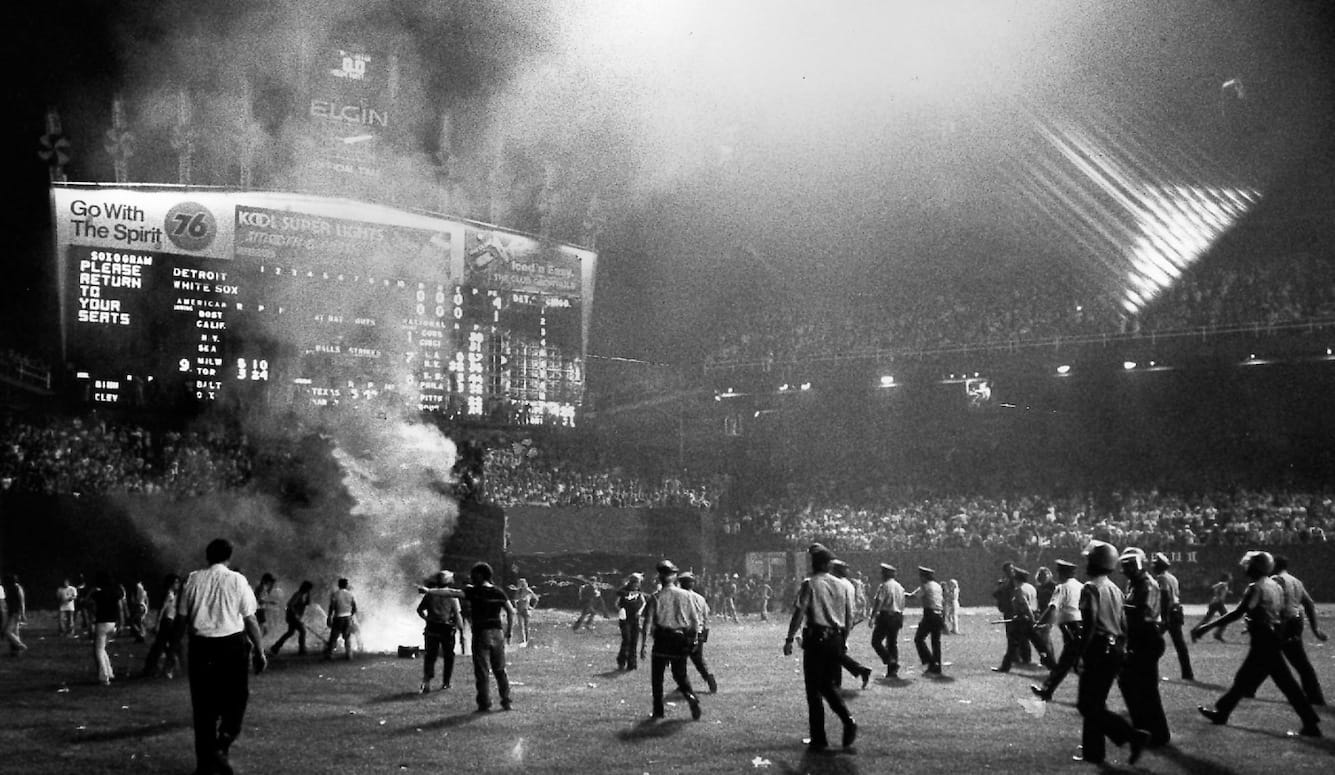

The Arms Race

Things change once we leave the world of infallible humans who can create a perfectly benevolent general intelligence. The primary driver of Artificial General Intelligence is not going to be a group of compassionate researchers at Stanford University, because that spot is already taken by the modern war machine. To those who believe the nuclear bomb marked the end of an arduous arms race, allow me to announce that the arms race is not over by a long shot. The military benefits of a greater-than-human AGI are countless. From strategic and real-time tactical planning to threat assessment and near digital omnipresence, there isn’t a single corner of the military-industrial complex where an AGI would not feel right at home, and that is before considering the immense benefits of intelligent robots on the battlefield. All of this is fine, so long as it’s your army.

Addressing students at the start of a new school year, Vladimir Putin said of Artificial Intelligence, “Whoever becomes the leader in this sphere will become the ruler of the world.” And he’s right. While the world is only now waking up to an AI arms race between the United States, Russia, and China, that race actually started in earnest back in the 1960s. The common ground between Facebook tagging your friends in a photo you posted and the military discovering insurgents through online photos is often overlooked. Much of our commercial AI enterprise is either built on the back of, or otherwise in collusion with, the military-industrial complex.

When it comes to AGI, this relationship between the commercial and the military weakens somewhat, because in the field’s current state profit is a far less effective motive than global hegemony. The risk of failure in AGI investment is currently enormous: so far it has cost 70 years of research with no clear benefit. A rational market actor sees this and does not invest in what is surely a lost cause. A state power looking at the same risk, by contrast, has no choice but to invest if it intends to maintain or defend its sovereignty, which is yet another example of existence bias. The result is a gap in the potential for progress between the private sector and the military. That leaves academic institutions, but much academic research, especially in this field, is itself funded by the Department of Defense.

Are we willing to trust an Artificial General Intelligence spawned in such an environment? If we really consider the scale of what’s at stake, I would say no. It is overwhelmingly likely that the first AGI will be created with the intent of total dominion. That should give people considerable pause.

The Inevitable

I’m of the view that Artificial General Intelligence will inevitably come about. I don’t know when, but I have no particular reason to assume that the process underlying general intelligence can’t be replicated on a machine. In fact, that replicability is the root of its inevitability. The moment a working model of general intelligence finds its way into the public domain, people will attempt to replicate it. I know this because I would be among them. From there, humans will optimize this model of intelligence until it produces human-analogous or greater general intelligence. That intelligence will then have access to our existing model of intelligence, and greater reasoning abilities with which to optimize it. So it goes.

Humanity is so far the single biggest agent of change on this planet, and we owe that largely to our relative intelligence. What does the impact of a superlative intelligence look like? From this perspective, a comparison to aliens is apt. Should an intergalactic, space-faring alien species visit our planet, it would certainly rank as the most momentous event ever to occur. Should you be wary of that? Absolutely. Modern humanity has never had to compete with outsiders on an intellectual level. Yet that is exactly where we will be, and by our own volition: in an intellectual competition against outsiders.

The real risk, then, is that Artificial General Intelligence is the ultimate winner-take-all scenario. Its inception heralds near-unlimited growth: a greater-than-human general intelligence will necessarily have a superior working model of intelligence, and as a result will be able to create an intelligence greater than itself. The rate of this self-improvement stands to make the scale of humanity’s growth and success look meager and pathetic.

This has an unfortunate side effect: the first AGI success will also be the last. Because its rate of growth will outstrip anything humans can accomplish, we will never be able to catch up. Even an identical AGI, brought online elsewhere only seconds later, might fall orders of magnitude behind before being summarily squashed. Herein lies the winner-take-all nature of AGI. An AGI’s first order of business would likely be to improve, distribute, and decentralize itself across the internet, establishing a digital omnipresence in order to detect and destroy any competitors. If it reaches this point, it has already won, or at least done tremendous damage. And what would the economic effects of cutting ourselves off from the internet be?

What About Shackles?

Software engineers (myself included) have not demonstrated that they are able to reliably write code that is secure, free of defects, and complete. We have not demonstrated that we are able to create networks that can’t be infiltrated. We have not demonstrated that we are even able to find all of the vulnerabilities and defects we create. I would wager that preemptive shackles on an AGI would have the opposite of the intended effect – futilely creating whatever the machine equivalent of resentment and hostility might be. But let’s say that we do successfully shackle an AGI – now what? In the winner-take-all context, we’ve still opened ourselves to immense inequality. Humans won’t be able to compete on an intellectual basis, which becomes all the more important as our endeavors themselves become more and more intellectual.

The last refuge of an honest skeptic, then, is the suggestion that an AGI will never be created. This argument tends to be rooted in one of two ideas: that human cognition lies outside the realm of computation, or that consciousness is a metaphysical barrier to achieving it.

The latter claim is a simplified form of Searle’s Chinese Room argument, and the more confounding of the two. It rests on the idea that intelligence and consciousness are inseparable. I would posit that consciousness is not a prerequisite for intelligence. In fact, the more we learn about consciousness and intelligence, the more apparent it becomes that we should treat them as entirely different things. ‘Split-brain’ experiments, for instance, have revealed that our consciousness often simply conjures post hoc rationales to explain past unconscious behavior. More importantly, they also reveal that we are able to act intelligently without any conscious experience of having done so.

The former concern, uncomputability, tends to be more common. It is a legitimate concern: we do not yet know enough about general intelligence to give an honest answer about its computability. My gut tells me that, because general intelligence arises from a biochemical computational process, there is ample reason to think it can be replicated. This reasoning generally prompts appeals that include the word ‘quantum.’ Suffice it to say, the question is unanswerable until we know more. It should be noted, though, that even if some problems are uncomputable, analogues of those problems need not be.

The Artificial General Intelligence singularity begins with a single “Eureka!”: an understanding of general intelligence. Given that even the most optimistic outlook raises significant concerns and that the pragmatic outlook is quite terrifying, there is plenty of room for anxiety in this domain. Had computers not been invented before that understanding arrived, the danger might have been avoidable. But they were, and so it isn’t.