
Dogmatism, Data, and Public Health

A look back on the 2003 BMJ controversy over passive smoking and mortality.

I.

Over the past three-and-a-half years, we have been exposed to a more sustained discussion of health risks than at perhaps any other time in the past century. Yet, as the still-simmering debates over the origins and health impact of SARS-CoV-2, and over the vaccines used to contain it, demonstrate, we are also barraged by pervasive "misinformation" and "disinformation." Throughout the pandemic, health agencies and authorities have, with the best of intentions, made some terrible missteps. At the same time, people with advanced degrees and influential podcasts have sown mistrust of vaccines and championed bogus "miracle" treatments for COVID-19. Given the conflicting narratives and the reversals by health authorities, it is hardly surprising that many people are confused about what to believe and distrustful of official guidance.

Twenty years ago, I found myself in the middle of a different health controversy, one that seems almost quaint in retrospect: how dangerous is it for a non-smoker to be exposed to other people's tobacco smoke? This exposure went by different names: "passive smoking," "secondhand tobacco smoke," and "environmental tobacco smoke." In contrast to a pandemic caused by a totally novel virus, the question of passive smoking would seem, conceptually, to be remarkably straightforward and susceptible to rational management and amelioration. However, because it involved tobacco and the raging "tobacco wars" of the 1970s and 1980s, what should have been a relatively simple scientific question was transmuted into a moral campaign.

The result has been an unqualified success in terms of public health—the prevalence of smoking declined from 45 percent in 1954 to 12 percent in 2023, with a concomitant decline in exposure to passive smoking. However, the moral crusade overshadowed the science, and an opportunity was lost to use the question of passive smoking to convey essential principles of science and logic to the public. My involvement in research on the question of passive smoking culminated in the publication of a paper in 2003 in the British Medical Journal, now BMJ, which provoked an uproar. Because this incident shows the extent to which a scientific question can get distorted and confused by political and professional agendas, it is worth recounting in detail.

My interest in the topic dated back to the early 1980s, when I was working as a cancer epidemiologist at the American Health Foundation, a National Cancer Institute-designated cancer center in New York City. In 1950, the Foundation's president, Ernst L. Wynder, had published the first study in the US showing that cigarette smoking was strongly associated with lung cancer. Although most lung cancers are caused by cigarette smoking, lung cancer does occur in people, particularly women, who have never smoked, and I was interested in what factors besides smoking might cause it.

While a number of possible risk factors merited further study, in 1981 a study by a Japanese epidemiologist, Takeshi Hirayama, appeared in the BMJ indicating that the non-smoking wives of heavy smokers had double the risk of lung cancer compared to the non-smoking wives of non-smokers. Spurred by the Hirayama paper, my colleagues and I drew up a number of questions about exposure to other people’s cigarette smoke to add to the questionnaire we used in our large multi-center case-control study of tobacco-related cancers.

In 1985, we published an article in the American Journal of Epidemiology presenting preliminary data obtained using these added questions. We emphasized the need for researchers to collect detailed information on lifetime exposure to secondhand smoke in different situations (in childhood, in adulthood, at home, at work, and in social situations) and to try to quantify the exposure as much as possible in terms of cigarettes smoked and hours of exposure. We did our best to design questions that would identify people with particularly heavy smoke exposure (e.g., in addition to asking about the amount smoked by the spouse, we asked “does your spouse smoke in the bedroom?”). We did not see any clear associations between exposure to passive smoking and lung cancer in the data. However, because of the relatively small number of cases accrued at that point, and because we would expect any effect of passive smoking to be small (due to dilution of the smoke in the surrounding air), we refrained from drawing any conclusions.

I continued to follow the literature on the health effects of environmental tobacco smoke, noting how studies from different countries, often of poor quality, were interpreted as additional evidence of the effects of secondhand smoke. Meanwhile, several scientific and regulatory bodies published reports concluding that exposure to secondhand cigarette smoke was carcinogenic (National Research Council 1986; US Environmental Protection Agency 1992; International Agency for Research on Cancer 2004).

In the early 1990s, I was a member of the EPA panel charged with evaluating the evidence for an association of passive smoking with lung cancer. It was clear that the committee's leadership was intent on declaring that passive smoking caused lung cancer in non-smokers. I was the only member of the 15-person panel to emphasize the limitations of the published studies, limitations that stemmed from the rudimentary questions used to characterize exposure. Many members of the committee voiced support for my comments, but in the end, the committee endorsed what was clearly a predetermined conclusion: that exposure to secondhand smoke caused approximately 3,000 lung cancers per year among never-smokers in the United States.

This is where things stood in the late 1990s, when James Enstrom of UCLA contacted me. He asked whether I would be interested in collaborating on an analysis of the American Cancer Society's "Cancer Prevention Study I" to examine the association between passive smoke exposure and mortality. I had known Enstrom's work from the medical literature since the early 1980s. We were both cancer epidemiologists interested in lung cancer in people who had never smoked, and we had both published numerous studies documenting the health risks associated with smoking, as well as with diet and other behaviors. In addition, Enstrom's collaboration with the American Cancer Society had begun with Lawrence Garfinkel, the society's vice president for epidemiology from the 1960s through the 1980s. Garfinkel was one of the advisors on my master's thesis (later published) on lung cancer in never-smokers, which I completed at the Columbia School of Public Health in the early 1980s.

From his work, I had a strong impression that Enstrom was a rigorous and capable scientist, who was asking important questions. Because I had been involved in a large case-control study of cancer, I welcomed the opportunity to work with data from the American Cancer Society’s prospective study, since such studies have certain methodologic advantages. In a case-control study, researchers enroll cases who have been diagnosed with the disease of interest and then compare the exposure of cases to that of controls—people of similar background, who do not have the disease of interest. In a prospective study, on the other hand, researchers enroll a cohort, which is then followed for a number of years. Since information on exposure is obtained prior to the onset of illness, possible bias due to cases reporting their exposure differently from controls is not an issue.

After several years of work, our paper was published by the BMJ on May 17, 2003. It addressed the same question Takeshi Hirayama had posed 22 years earlier in the same journal: whether living with a spouse who smokes increases the mortality risk of a spouse who never smoked. Based on our analysis of the American Cancer Society's data on over 100,000 California residents, we concluded that non-smokers who lived with a smoker did not have elevated mortality and, therefore, that the data did "not support a causal relation between environmental tobacco smoke and tobacco related mortality."

The publication caused an immediate outpouring of vitriol and indignation, even before the paper was available online. Some critics targeted us with ad hominem attacks because we had disclosed that we received partial funding from the tobacco industry. Others claimed that there were serious flaws in our study. But few critics actually engaged with the detailed data contained in the paper's 3,000 words and 10 tables. The focus was overwhelmingly on our conclusion, not on the data we analyzed or the methods we used. Neither of us had ever experienced anything like the response to this paper. It appeared that simply raising doubts about passive smoking was beyond the bounds of acceptable inquiry.

The response to the paper was so extreme and so unusual that it merits a fuller account, which I offer below. Only then can we examine the scientific issues on which the question of passive smoking hinges, issues that the brouhaha over the article served to obscure. Finally, I will discuss how passive smoking provides an instructive example of a common pattern in environmental epidemiology: how health risks are identified and assessed, and how public-health guidance is disseminated to the public.

II.

Unbeknownst to us, our paper was selected as the BMJ cover story for that week in May 2003. The cover photo showed a state of California sign warning about the dangers of breathing other people’s cigarette smoke posted at the entrance to a building. The provocative headline proclaimed, “Passive Smoking May Not Kill.”

The article had undergone a rigorous review by editors at the BMJ and outside reviewers. (The full peer review history is posted on the journal’s website.) We included a 208-word disclaimer stating that we had both grown up in non-smoking households and, as epidemiologists, we recognized the enormous toll of smoking on health—a topic that we ourselves had done work on. We were simply reporting our results.

But before the article even came out (i.e., while it was under a two-day embargo), it was attacked by the American Cancer Society, by many doctors, researchers, and public health officials, and by anti-smoking advocates, who believed that our results had to be wrong. The journal received more than 180 electronic letters ("rapid responses"), the majority of which attacked the study as "flawed" and "supported by the tobacco industry," while a smaller number defended the paper. There were calls for the journal to retract the paper. The British Medical Association, the journal's parent organization and publisher, added its voice to the chorus of denunciation.

It should also be mentioned that, at the time the paper appeared, in mid-May 2003, the city of London was about to vote on an ordinance outlawing smoking in public places, including restaurants and pubs; our paper with its sensational packaging was seen as a threat to these efforts. Critics resorted to personal attacks. No one mentioned our track record as scientists, our publications in the peer-reviewed medical literature, or the fact that each of us had worked for years with a giant in the field of epidemiology and smoking-related disease (in my case, Ernst Wynder; in Enstrom’s case, Lawrence Garfinkel). In addition, Enstrom had collaborated with the path-breaking epidemiologist Lester Breslow, who later became dean of the UCLA School of Public Health, as well as with Linus Pauling.

Particularly striking was that not one of our epidemiology colleagues wrote in to vouch for our integrity. A few letters from colleagues saying something like, "You ought to know that these guys are reputable scientists, with proven track records, and they should be able to present the findings from their study without being attacked because their results are unpopular," would have provided a counterpoint to what bordered on hysteria. But then again, when one sees how poisonous these incidents can become, one understands why people keep their heads down.

One who did not was the editor of the BMJ, Richard Smith, who defended publication of the paper on British television and in a commentary in the BMJ, arguing that when solid research has been done, it must be published, or else the scientific record is distorted. A year later, when he stepped down after 25 years at the journal, Smith wrote a column on editorial independence in which he said this:

> Everybody supports editorial independence in principle, although it sometimes feels to editors as if the deal is "you can have it so long as you don't use it." Problems arise when editors publish material that offends powerful individuals or groups, but that's exactly why editorial independence is needed. Journals should be on the side of the powerless not the powerful, the governed not the governors. If readers once hear that important, relevant, and well-argued articles are being suppressed or that articles are being published simply to fulfil hidden political agendas, then the credibility of the publication collapses—and everybody loses.

Twenty years after its publication, our findings remain well within the range of results from other studies conducted in the US, including those of the second American Cancer Society prospective study, Cancer Prevention Study II. But while hostile critics were unable to find serious errors in our analysis, they were quick to point to various factors that, they claimed, invalidated the paper.

For example, the new vice president for epidemiology at the American Cancer Society, Michael Thun, argued that the Cancer Prevention Study I (CPS-I) dataset, launched in 1959, which we had used, could not be used to examine the association of secondhand smoke exposure with mortality because in the decades prior to 1960 “everyone was exposed to cigarette smoke.” If everyone was exposed to secondhand smoke, as Thun asserted, clearly one couldn’t compare people exposed to smoke to a group that was not exposed.

Thun provided no citation in support of his sweeping claim, and in fact, it was untrue. The prevalence of smoking at its height in 1965 was about 42 percent (about 51 percent in men and 33 percent in women). It is true that, in the absence of smoking restrictions, people were exposed to more smoke in public places; in the home, however, roughly half of the population would have been unexposed. And since, for much of the study period, fewer than half of all women worked outside the home (1950: 34 percent; 1960: 38 percent; 1970: 43 percent; 1980: 52 percent), workplace smoke would have made a limited contribution to women's overall exposure over time. Moreover, Thun himself had included other studies from the 1950s and 1960s in a meta-analysis of the question, which is hard to reconcile with his claim that data from that era could not be used.

While these alleged flaws did not stand up to scrutiny, the claim that our paper was “supported by the tobacco industry” was undoubtedly effective in tainting the paper and its authors in the minds of many people. However, the actual facts were quite different. Enstrom had collaborated with the two heads of epidemiology at the American Cancer Society for decades (Lawrence Garfinkel and Clark Heath, Jr.) and had published previous papers using the CPS-I dataset. During these years, Enstrom’s research had been supported by the ACS and the state of California. But when it became clear to the new vice president for epidemiology at the society, Michael Thun, that Enstrom was skeptical about the passive smoking association, Thun withdrew his support for Enstrom’s proposed analysis of passive smoking and mortality in the entire CPS-I using extended follow-up of the cohort.

At that point, Enstrom could not obtain funding for his analysis from any of the sources that had previously supported his research. His only option was to accept funds from the Center for Indoor Air Research (CIAR), a body that funded research relating to indoor air quality, including secondhand cigarette smoke, with funds from the tobacco industry. These funds were awarded by an independent committee of scientists. As a result, seven years of the 39-year follow-up were funded by CIAR.

Contrary to the implication that the work was somehow suspect due to this support, as we stated in our authors’ declaration, no one other than Enstrom and myself saw or had any say in the analysis or the writing of the paper. It should also be noted that many researchers, whose integrity was never questioned, received funding from CIAR. The only differences were that (a) these researchers were, for the most part, measuring secondhand smoke exposure in various workplace settings, rather than carrying out epidemiologic studies relating exposure to health outcomes, and (b) more significantly, they had not expressed skepticism about the effects of passive smoke exposure.

Three months after publication, we published a detailed response and a letter in the BMJ addressing the criticisms.

III.

To evaluate a scientific paper objectively, one must examine both its strengths and its weaknesses. While identifying what it claimed were weaknesses, the ACS said nothing about the paper's considerable strengths. One of these is that we had gone to great lengths to address the Achilles' heel of passive-smoking studies, and of epidemiologic studies generally: what is referred to as "misclassification of exposure," which can distort results. Most studies assess exposure to secondhand smoke at only one point in time, but exposure can change (for example, when a smoking spouse quits or dies). To address this concern, we recontacted surviving participants in the California cohort in the late 1990s to ask about their exposure to other people's cigarette smoke, and we demonstrated that reports of exposure at different points in time showed good agreement.

Another major strength of the ACS dataset is that, in this prospective study, participants were recontacted during follow-up to ascertain their health status (whether they had developed cancer or another disease) as well as their smoking status. Verifying smoking status is essential. Without reliable information on it, one runs the risk of including former smokers in the never-smoker group; the effects of their own past smoking could then be mistaken for an effect of passive smoking, producing a spurious association.

Furthermore, we conducted a number of sensitivity analyses, that is, analyses designed to test whether the results held up under different assumptions and conditions. Because we presented such detailed data, the paper was longer than usual and included 10 tables. But few of the rapid responses referred to any of these details. Rather, they followed the lead of the American Cancer Society and the British Medical Association, expressing outrage at a conclusion they felt had to be wrong.

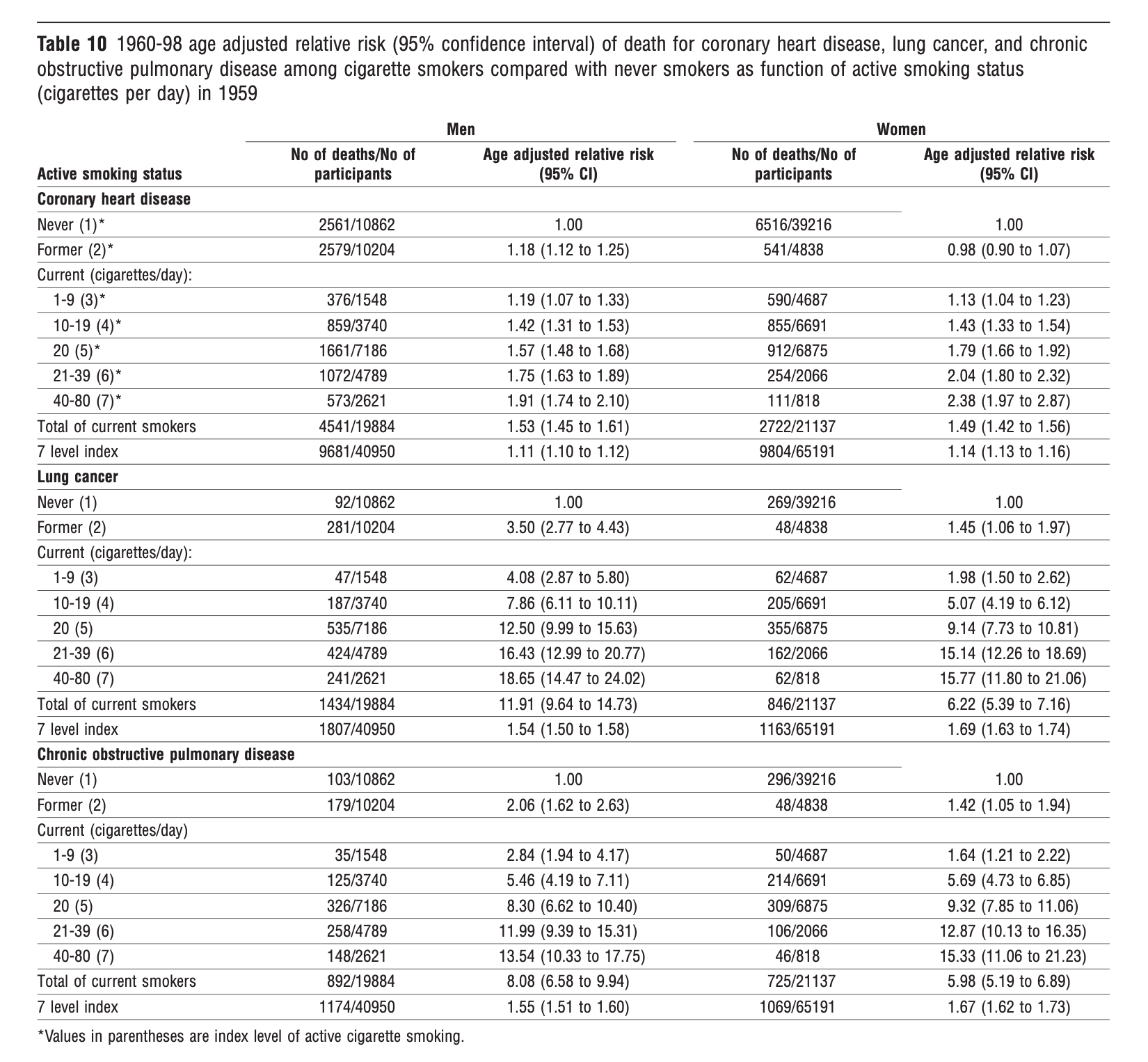

The way in which the journal managed the promotion of the paper (i.e., making it the cover story, with an eye-catching photograph and title) and its reception by the American Cancer Society succeeded in distracting attention from the crux of the matter, which we had been at pains to make clear. No reader acknowledged that (in Table 10 of the paper) we demonstrated a clear dose-response relationship between cigarette smoking and mortality from heart disease, lung cancer, and chronic obstructive lung disease (COPD) in both men and women who currently smoked. That is, among current smokers, as the intensity of smoking increased, the risk of dying increased. Even at the lowest level of smoking (one to nine cigarettes per day), we were able to detect a significantly elevated risk for all three types of mortality. And the risk increased, reaching nearly ten-fold in the heaviest smokers (40–80 cigarettes per day). This is the crucial background against which one needs to interpret our finding of no significant elevations in risk, and no trends indicating increasing risk with increasing exposure to secondhand cigarette smoke, in never-smokers.

The furor succeeded in drowning out the paper's careful focus on the dose of exposure to cigarette smoke, which could range from a non-smoker inhaling a passing whiff of a smoker's sidestream and exhaled smoke to what a five-pack-a-day smoker inhales into his lungs. In the 16th century, the Swiss physician and alchemist Paracelsus articulated the principle that is the cornerstone of modern toxicology: "the dose makes the poison." But instead of putting the passive smoking issue in the perspective we provided in Table 10, activists, many scientists, and even regulatory agencies treated passive smoking as a thing apart, known a priori to be fatal.

Since the new leadership of the ACS had attacked the paper, alleging “fatal flaws,” we responded that the ACS had the data for the entire cohort of a million people, who were enrolled in 1959 and had been followed for mortality to 1972. Thus, the ACS was in a position to do their own analysis to see whether the results in the total cohort agreed with our results in the California portion of the cohort. This happens all the time in science. Usually, scientists are eager to analyze their data on a question of great interest to advance the discussion. Enstrom challenged Thun to conduct such an analysis of the entire cohort and even sent him the blank tables so that he could fill them in, following the same analysis plan we had used. Thun never responded to this proposal.

Rather than documenting flaws which would invalidate the paper, the ACS used a variety of arguments from “everyone was exposed” to conflicts of interest, hoping something would stick. And how could one expect the public and health professionals not versed in these matters to see that the cancer society’s criticisms were motivated by the desire to discredit the paper rather than a commitment to honest inquiry?

The results of epidemiologic studies on the question of passive smoking were inconsistent, with some of the largest and most powerful studies, including some using the ACS data, failing to show a clear association. This allowed advocates to choose among the various results and give more weight to those that appeared to show an association. There is a well-known tendency among those who favor a particular hypothesis to "cherry-pick" the data, selecting the results that support their position. In a systematic review and meta-analysis published in 2006, Enstrom and I showed how this occurred in the passive-smoking literature. We laid out all the results of the major epidemiologic studies and highlighted which results were selected for inclusion in different meta-analyses and how the selection influenced the results. It is telling that this paper, which demonstrated a high degree of overlap among studies, got very little attention, in contrast to our BMJ paper.
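To make concrete how the choice of studies can move a pooled estimate, here is a minimal sketch of fixed-effect, inverse-variance meta-analysis on the log relative-risk scale. The five studies and their confidence intervals are invented purely for illustration; they are not drawn from the passive-smoking literature or from our 2006 review. The standard error of each log relative risk is approximated from its 95 percent confidence interval. This is a generic textbook pooling method, not the procedure of any particular published meta-analysis.

```python
import math

# HYPOTHETICAL (relative risk, 95% CI lower, 95% CI upper) values for illustration only.
# They are NOT results from any real passive-smoking study.
STUDIES = {
    "A": (1.60, 0.95, 2.69),
    "B": (1.30, 0.90, 1.88),
    "C": (0.95, 0.80, 1.13),
    "D": (1.00, 0.85, 1.18),
    "E": (1.45, 0.85, 2.47),
}


def pooled_rr(studies):
    """Fixed-effect inverse-variance pooling on the log relative-risk scale."""
    weighted_sum = 0.0
    total_weight = 0.0
    for rr, lo, hi in studies:
        log_rr = math.log(rr)
        # Approximate SE of log(RR) from the width of a 95% confidence interval.
        se = (math.log(hi) - math.log(lo)) / (2 * 1.96)
        weight = 1.0 / se ** 2  # more precise studies get more weight
        weighted_sum += weight * log_rr
        total_weight += weight
    return math.exp(weighted_sum / total_weight)


all_studies = pooled_rr(STUDIES.values())
# "Cherry-picked" subset: keep only the studies whose point estimates lean positive.
selected = pooled_rr(v for k, v in STUDIES.items() if k in {"A", "B", "E"})
print(f"all five studies: RR = {all_studies:.2f}; positive-leaning subset: RR = {selected:.2f}")
```

With these made-up inputs, pooling all five studies gives a relative risk close to 1.0, while pooling only the three positive-leaning studies gives a figure of about 1.4, even though no individual study result has changed. The large, precise null studies dominate the full pool, which is exactly why excluding them matters so much.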

To assess the magnitude of the risk associated with exposure to secondhand smoke, it was crucial to have, in addition to the relatively crude epidemiologic studies, reliable measurements of the population's actual exposure to other people's cigarette smoke. How did the average exposure of a non-smoker compare to that of a smoker, who actively drew cigarette smoke into his lungs? One of the things that got lost in the discussion of passive smoking generally, and in the uproar over our BMJ paper in particular, is that, by the mid-1990s, we had good measurements of the actual dose of particulate matter inhaled by a non-smoker exposed to other people's cigarette smoke.

Two careful studies—one in the US conducted by researchers at Oak Ridge National Laboratory; the other in the UK conducted by Covance Laboratories—basically came up with the same number. The average dose of particulate matter inhaled by a non-smoker exposed to other people’s cigarette smoke was about 8–10 cigarettes PER YEAR. Now, this is not nothing, but it is much less than the average exposure of a regular smoker. If one assumes that the average smoker smokes somewhere in the vicinity of a pack a day, this comes out to 7,300 cigarettes per year—a difference of about three orders of magnitude. When you take this difference in exposure into account, it’s hardly surprising that epidemiologic studies, which rely on fairly crude self-reported exposure information, don’t show a clear-cut association. But the two exposure studies were rarely cited, presumably because, by putting the passive smoking issue in perspective, they weakened the activists’ case.
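The three-orders-of-magnitude comparison above is simple arithmetic, sketched here under the assumptions stated in the text: a pack-a-day smoker and a passive dose of 8 to 10 cigarette-equivalents per year. The 20-cigarette pack size is an assumption, and the function names are illustrative only.

```python
import math

CIGARETTES_PER_PACK = 20  # assumption: standard US pack size
DAYS_PER_YEAR = 365


def smoker_annual_cigarettes(packs_per_day: float = 1.0) -> float:
    """Cigarettes smoked per year by an active smoker at the given rate."""
    return packs_per_day * CIGARETTES_PER_PACK * DAYS_PER_YEAR


def exposure_ratio(passive_equivalents_per_year: float, packs_per_day: float = 1.0) -> float:
    """How many times larger the active smoker's annual dose is than the passive dose."""
    return smoker_annual_cigarettes(packs_per_day) / passive_equivalents_per_year


for passive in (8, 10):
    ratio = exposure_ratio(passive)
    # log10 of the ratio is the difference in orders of magnitude.
    print(f"{passive} cigarette-equivalents/yr: ratio {ratio:.0f}x, log10 = {math.log10(ratio):.2f}")
```

Both ends of the measured range give a ratio in the high hundreds (log10 just under 3), which is what "about three orders of magnitude" means here.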

It took two sociologists to bring out the deeper significance of the responses to the paper. In a 2005 article titled "Silencing Science: Partisanship and the Career of a Publication Disputing the Dangers of Secondhand Smoke," Sheldon Ungar and Dennis Bray analyzed the rapid responses to the BMJ that appeared in the first two months after the article's publication, as well as international newspaper coverage. The authors started from the premise that the scientific enterprise presupposes openness to divergent ideas, even though contemporary science is increasingly subject to pressures stemming from "commercial exploitation of science, expanded media coverage of research, growing public concern with risks, and the greater harnessing of science to social and policy goals." Ungar and Bray used the term partisans to describe individuals who not only have an unreasoned allegiance to a particular cause but also engage in efforts to silence opposing points of view.

Among their findings was that the rapid responses can best be understood as an attempt by partisans to silence results that contradict the reigning consensus concerning the health effects of passive smoking. Drawing attention to the vehemence of many of the negative rapid responses, the authors commented that, "Silencing is based on intimidation, as partisans employ a strident tone full of sarcasm and moral indignation. There are elements of an authoritarian cult involved here: uphold the truth that secondhand smoke kills—or else!" They went on to examine the concern, voiced by many who attacked the paper, that it would attract extensive media coverage and set back progress toward bans on smoking.

But contrary to this expectation, they found that the study received relatively little media attention and that only one tobacco company referred to the study on its website. Their explanation for this surprising lack of media coverage, given the strength of public opinion regarding smoking and passive smoking, was that the media engaged in “self-silencing.” Their overall conclusion was that “the public consensus about the negative effects of passive smoke is so strong that it has become part of a truth regime that cannot be intelligibly questioned.”

IV.

When a study comes out reporting an “interesting” result—that is, a striking finding regarding a potential threat to health or health benefit—other researchers are spurred to try to replicate the results. Results from a subsequent study may appear to confirm the initial finding or may contradict it. However, it is important to realize that positive findings are more likely to be published by medical journals, and when published, will tend to receive more attention than null findings.

If the initial publication showed what appeared to be an impressive effect, a subsequent paper that fails to confirm the result may nevertheless report a result in a subgroup that appears to support the initial finding. In this way, a provocative initial result can give rise to a line of studies that keep the topic in the public consciousness, thereby producing a bandwagon effect or “availability cascade.” This is what happened with the question of the pesticide DDT and breast cancer, with exposure to electromagnetic fields (EMF) from power lines and leukemia, with cell phone use and brain cancer, and numerous other questions. In some cases, the succession of studies kept the potential risk in the public eye for decades.

But when one looks back after a line of research has run its course, one sees that, in some cases, the initial study showed a stronger association than any subsequent study. This was the pattern Lopez-Cervantes et al. found in 2004 when they examined 24 publications on DDT and breast cancer risk published from 1993 to 2001. The initial study, which received enormous attention in both medical journals and the media, showed a four-fold increased risk of breast cancer in women with a relatively high level of a DDT metabolite in their blood. In the second published study, the risk was only 1.3-fold; in the third, it was slightly above 1.0. In all 21 subsequent studies, there was no elevated risk, and therefore no association of DDT with breast cancer.

This phenomenon, in which a strong initial association is followed by weaker associations or no association at all, is known as the Proteus effect. It implies that many results that receive massive media attention are actually "false positives": results that are initially accepted as important but later prove to be false. In a highly cited 2005 paper, "Why Most Published Research Findings Are False," the statistician John Ioannidis argued that, due to factors ranging from basic study design features to sociological and psychological factors, most published research findings may be false.
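Ioannidis's argument rests on a simple calculation of the positive predictive value (PPV) of a "statistically significant" finding. In its simplest form, ignoring the bias term his paper also models, PPV = (1 − β)R / ((1 − β)R + α), where R is the pre-study odds that a tested relationship is real, 1 − β is statistical power, and α is the significance threshold. The sketch below evaluates that formula; the function name and the example inputs are illustrative assumptions, not estimates for any particular field.

```python
def positive_predictive_value(prior_odds: float, power: float = 0.8, alpha: float = 0.05) -> float:
    """Probability that a 'significant' finding reflects a true relationship.

    Simplified form of the Ioannidis (2005) formula with no bias term:
    PPV = power * R / (power * R + alpha), where R is the pre-study odds.
    """
    if prior_odds <= 0 or not (0 < power <= 1) or not (0 < alpha < 1):
        raise ValueError("prior_odds must be > 0; power in (0, 1]; alpha in (0, 1)")
    # Both terms are scaled by the number of false hypotheses tested.
    true_positives = power * prior_odds  # real relationships correctly detected
    false_positives = alpha              # null relationships that pass the threshold anyway
    return true_positives / (true_positives + false_positives)


# Illustrative scenarios (assumed inputs, not empirical estimates):
for label, odds, power in [
    ("well-powered test, 1:1 prior odds", 1.0, 0.8),
    ("exploratory search, 1:10 prior odds", 0.1, 0.8),
    ("underpowered search, 1:20 prior odds", 0.05, 0.2),
]:
    print(f"{label}: PPV = {positive_predictive_value(odds, power):.2f}")
```

Under these assumptions, the same p < 0.05 result is very likely to be true in the first scenario but more likely false than true in the last, which is the heart of Ioannidis's point: when plausible hypotheses are scarce and studies are underpowered, significant findings are often false positives.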

We have to bear in mind that scientists are human, and it is only human to want your results to be meaningful and to attract attention. Positive results are the currency of science and are essential to future grant support and career advancement. Furthermore, positive findings are simply more psychologically satisfying, to researchers and the general public alike, than findings of no association. The issue of passive smoking should have been examined in the context of all that is known about the well-studied topic of cigarette smoking. Instead, treating it as a thing apart led to all sorts of distortions. So how are we to guard against findings that appeal to our deepest fears but never seem to receive solid confirmation in spite of repeated attempts?

First, we need to realize that finding an association in a study does not by itself demonstrate a causal relationship in the real world. There are simply too many factors that, in spite of the researchers' best efforts, can distort or bias the results. And yet, in their papers, epidemiologists often write as if their findings reflected a real-world effect, and this message is then conveyed to the public at large. This is why skepticism remains a vital part of scientific literacy. Skepticism brought to bear on scientific findings in the interest of understanding a phenomenon should never be given a bad name.

In my 2016 book Getting Risk Right: Understanding the Science of Elusive Health Risks, I take a broader view. To calibrate our sense of what is important in public health, we should pay greater attention to progress in identifying factors that have a truly important effect on our health. Unlike the questionable risks that receive so much media attention, the work that leads to transformative achievements typically takes place over a long period of time and away from the spotlight. We should develop an appreciation for the arduous process of trial and error that has led to such triumphs as vaccines against human papillomavirus, antiretroviral drugs for the treatment of HIV/AIDS, and effective mRNA vaccines against SARS-CoV-2, which built on research conducted 15 years earlier. Armed with examples like these, we will be better able to resist the siren call of risks that never seem to reach the level of having a detectable effect.