On Pleasurable Beliefs

In November 2021, a few hundred people set up camp outside Dealey Plaza in Dallas, Texas, where John F. Kennedy was assassinated 58 years earlier. The people gathered there believed that the slain president’s son, John F. Kennedy Jr., who died in a plane crash in 1999, was about to return. He would become vice president under former president Donald Trump, who would then step down, making JFK Jr. the president. This would precipitate a chain of increasingly bizarre events that would turn US politics upside-down. This is a fringe belief even among the disciples of QAnon. What makes it intriguing, however, is that it is so preposterous that it strains the credulity of most reasonable people. Do QAnon believers really buy into this stuff, or is this just an elaborate prank of some kind?

Of course, this weird story also attracted attention because it helped to confirm what those on the Left like to think about their opponents on the Right: that they’re deranged lunatics clinging to increasingly absurd conspiracy theories born of bigotry. The radical Left believes that racism, sexism, homophobia, transphobia, and all 31 flavors of hateful prejudice are hiding in every corner, sitting in every corporate boardroom, and lurking in the hearts of anyone who disagrees with them. This eldritch threat is unseeable and unprovable, but the belief has nonetheless become mainstream over the last decade. Still, it is just a belief, and one that makes up in conviction what it lacks in evidence. Indeed, it spurns the very notion that evidence is required.

Right and Left agree that the Western world currently has a problem with misinformation. Some on the Right have declared the news media an enemy of the people, dismissing anything emerging from major news outlets (CNN, MSNBC, the New York Times, the Washington Post, NPR, etc.) as deceit intended to push a radical progressive agenda. Some on the Left, meanwhile, have taken aim at social media, advocating regulations and trust-busting, and calling for the vilification of anyone who deviates from their preferred narrative.

But don’t people want to know what is true? After all, we check the weather to find out whether or not to pick up an umbrella before leaving the house. Meteorologists are not always right, but a person is unlikely to conclude that it won’t rain just because they don’t want it to rain. We generally form beliefs about the world because we want to know how the world actually is. We also want what we believe to be true and what is true to align as seamlessly as possible. Someone who forms a belief in hope that it is untrue has surely taken leave of his senses.

This is known as the correspondence theory of truth, which holds that knowledge is produced when our beliefs correspond to what is true in the world as it exists independent of our desires. It is why humans invented the scientific method—the best process for discovering truths about objective reality and discarding falsehoods. But this presupposes belief in a testable hypothesis. I might believe, for example, that there are an odd number of stars in the universe, and I might even be correct. But I do not—cannot—know this to be true. The belief itself, right or wrong, is asinine. Aside from its unknowability, the answer to this question really makes no difference to our day-to-day lives, and the effort required to justify such a belief would vastly outweigh the utility of upgrading the belief into knowledge.

This is one of the problems with the correspondence view of belief formation. It does not explain why humans have come to care about whether their beliefs correspond to reality, or which beliefs are worth having or attempting to justify. It is teleological to claim that humans evolved the capacity to formulate beliefs in order to gain true knowledge about the world, and belief formation predates any meta-epistemic concern for accuracy. It took centuries to construct the scientific method precisely because humans did not evolve to think scientifically. The ideal scientist is objective, impartial, and willing to part ways with a hypothesis as soon as it is falsified, regardless of how elegant or intellectually taxing the hypothesis was. But humans are not like this. This is why a person committed to a cherished belief cares little about disconfirming evidence and, if confronted with any, will probably just dig in his heels.

Nevertheless, a person still needs some sort of justification for a belief. Evolutionary psychology tells us that humans evolved to formulate beliefs for the purpose of survival—to increase evolutionary fitness. A person formulates beliefs by abstracting from their experiences and applying a belief toward goal-oriented behavior. So, a belief is true when it can lead to successfully accomplishing goals. This is the epistemic theory of pragmatism.

From an evolutionary perspective, the pragmatic view of belief formation is persuasive. Those of our ancestors capable of forming beliefs that were useful for survival would have been more likely to reproduce and pass those useful-belief-forming faculties on to their offspring. Of course, forming beliefs that correspond to reality tends to be better at helping an individual survive. Believing that a plant is toxic or that a creature is dangerous confers positive fitness if (a) it helps avoid poisonous food and predators, and (b) the plant really is poisonous and the creature really is a predator. If, on the other hand, a person comes to believe that all plants are poisonous, that person will probably not survive long because some plants are helpful, or even necessary, to our survival.

Let us imagine a hypothetical person who holds an extreme belief. This person has formed this belief in the hope that it is true: he believes he is right and has formed his belief with the explicit aim of being right. He will therefore believe that the actions that follow from that belief are making the world a better place. Shouldn’t this person, when confronted with evidence that contradicts his beliefs, revise them? What justifies continued belief even when predictions fail and the supporting evidence falls apart?

Our hypothetical person now lives within conditions quite different from those faced by his evolutionary ancestors. Many modern beliefs rely on the testimony of others. In a highly complex society, it would be nearly impossible to navigate the world without trusting other people—their expertise, at least, if not their motives. Our ancestors trusted what they experienced directly and the testimony of a small group of dependable relatives and tribespeople who also valued their survival. But today, the world runs on trusting strangers: doctors, scientists, lawyers, politicians, administrators, bureaucrats, business executives, utilities suppliers, vehicle repairmen, engineers of all stripes, and on and on.

The problem is that trust in institutions is at an all-time low. Experts and politicians are increasingly seen as either incompetent, dishonest, or both. This grim view of society is not completely unfounded. In other words, our hypothetical person has at least some justification for refusing to accept what “elites” are telling him. The conflicts in Iraq and Afghanistan, the 2008 economic crisis and subsequent recession, Russiagate, and the COVID-19 pandemic have all offered reminders that experts can get things badly wrong. A pathological liar like Trump is elected president, and the Republican Party abandons its principles to join a cult of personality, while the Left concludes that bigotry is as ubiquitous now as it was during Jim Crow. Then our cultural institutions—universities, news media, the entertainment industry—all enjoin the rest of us to believe things that are dubious at best and sometimes demonstrably untrue. The loss of trust in institutions is regrettable but hardly incomprehensible.

This erosion of trust has created an epistemic crisis that makes it difficult to defend a position, because one’s sources of information can always be called into question. Even if the expertise of a source is unimpeachable, its motives may not be, and this makes the source suspect. The source may be trying to deceive others into believing untrue or harmful things to further an agenda. In such an environment, the rational thing to do would be to suspend judgement until more data can be gathered and better analyses carried out.

Our hypothetical ideologue, however, cannot occupy the liminal spaces between beliefs for very long. Unable to formulate a belief that leads to goal-oriented action, he will find himself paralyzed by indecision. Eventually, action must be taken, and therefore a position must be adopted. Unable to trust politicians and experts, he is willing to immerse himself instead in the silos and echo chambers of Twitter, YouTube, and Facebook, populated by self-confident amateurs and demagogues. Saturated with half-baked ad hoc theories and ideas, he credulously absorbs beliefs untethered from any sort of intellectual rigor or epistemic responsibility.

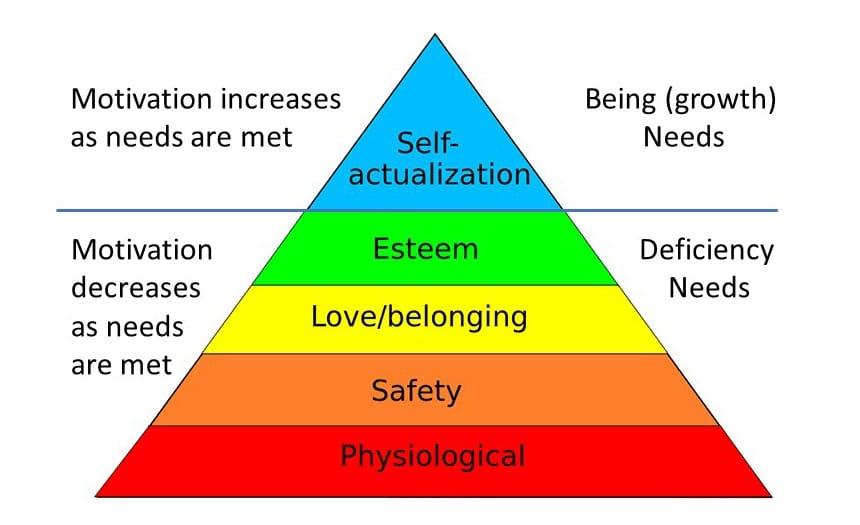

But how do humans justify these new non-expert beliefs in the absence of an impartial faculty for belief formation? And how do these beliefs help further goal-oriented behavior helpful to survival? Justification does not appear to arise from correspondence with reality, nor through basic survival utility. A possible answer is that, in the comfort of a post-survival world in which the two bottom categories of Maslow’s hierarchy of needs are largely satisfied at all times, beliefs become accessories.

That belief formulation is shaped primarily by evolution to help ensure survival does not restrict us to pure life-and-death survival. Belief formation also helped our ancestors survive an increasingly complex social environment—the next two categories in Maslow’s hierarchy of needs, love/belonging and esteem. Universal structures of our cognitive processes like groupthink, myside/confirmation bias, and our need to fit in with others (see the Asch Conformity Experiment) combine in a powerful evolutionary pressure to maintain social cohesion.

This strong desire for social cohesion, combined with our globalized environment and aggravated by social media and feelings of loneliness and isolation (an inability to fulfill the love/belonging category in Maslow’s hierarchy of needs), has led to belief formation and justification becoming a kind of fashion statement. Because we no longer need to make much effort to stay alive and safe, we formulate beliefs not because they correspond to reality, nor even because they confer survival fitness, but because they provide us with pleasure.

Beliefs can provide a feeling of belonging and allow induction into a tribe that accepts the adherent for believing the right things and esteems them for their conviction. In both cases the weight of the evidence against the beliefs is adequately countered by the discovery that believing such things feels good. These beliefs, though untrue, come to be justified by the mere fact that believing them brings a person pleasure.

Pleasure and suffering are powerful incentives and have been instrumental in our evolution. Pleasure informs us that something is good and ought to be pursued or repeated while pain informs us that something is bad and ought to be avoided. Our modern world has short-circuited pleasure acquisition. This has led to a great many problems, including obesity, addiction, and hollow consumerism. At the same time, we have elevated pleasure to the position of highest Good. Advertisements promise that their product will confer pleasure. Guidance counselors assure children that they can grow up to be whatever makes them happiest. Luxuries like a college education become a human right. The only thing that matters is how experiences feel from the inside. Society is to be styled after a sort of Nozickian experience machine. And so pleasure, not survival, is now what matters most when justifying any belief.

Our identity is the sum of our beliefs. This identity is largely shaped by our genetics, our culture, and our social interactions. Conversely, our social interactions are largely determined (particularly in online spaces) by the beliefs we hold. So, a positive feedback loop is created in which we adopt beliefs to construct an identity, then seek out others who share that identity, which then reinforces those beliefs through further justification by means of pleasure (echo chambers). Being agreed with feels good, and so having people agree with us becomes an end in itself. Conversely, any ideas that contradict our beliefs, thereby causing displeasure, are inflated to the level of a threat to safety and survival.

The degree of cognitive dissonance a person can endure attests to how important it is to hold onto the “right” beliefs. This mindset leads to “virtue signaling” and purity testing. Shouting our beliefs into social media and following those who espouse opinions that satisfy our confirmation bias lets everyone know the kind of person we are. Subjecting others to a purity test—questioning their own commitment to the beliefs—serves a similar function. It allows the truly committed to identify posers of dubious reliability and loyalty while reaffirming their own.

I experienced this when I immersed myself in libertarianism in the early twenty-teens. Among this crowd, the highest virtue was figuring out how any societal problem could be blamed on the government, which led in turn to purity testing. One might think that an ideology that values individualism above all else would be willing to tolerate differences in opinion, but this is not the case. Anyone unwise enough to suggest that a given problem might be caused by free actors exercising their freedom was no better than Stalin. And so, libertarianism comes saddled with a basket of other beliefs, such as the conviction that anthropogenic climate change isn’t real, or at least isn’t a problem. Climate change and pandemics are massive flies in the philosophically elegant ointment of libertarianism, because they remind us that our cherished freedoms may be causing harm to others, and that our own behavior might have to be adjusted.

The convenient thing about fashionable beliefs is that the actions they produce are easy. Our hypothetical person can join a social media pile-on, or retire to his echo chamber, and experience a rewarding sense of accomplishment. But this pleasure is fleeting. The ephemeral sense of belonging he gains from this sort of short-circuited desire for community becomes just like any other shallow consumerist drive. He needs to continue filling that hole, which leads to radicalization.

So what do we do about all this? Is the problem fixable? The unfortunate answer is: probably not. It does not result from some sort of misguided policy or unregulated industry; it is baked into human nature. Totalitarian regimes are predicated on the idea that policy can adjust for the incompatibility of human nature with the modern world we’ve created for ourselves. Indeed, radical progressive ideas currently finding their way into policy discussions are just that: an attempt to tweak the system to fix human nature and achieve “equity.”

There are three things that might be done, each as terrible as the last.

The first would be to restrict access to anything but a stringent orthodoxy in order to prevent people from acquiring “wrong” information that produces “wrong” beliefs. Cancel culture and the banning of certain figures from social media are doing this to a degree. It is how book burnings and state propaganda arise: radicals on the Left and Right wish to control the narrative and insist upon fidelity to their own orthodoxies. If we really want to prevent people from adopting pleasurable but pernicious beliefs, we would need to enforce an even stronger version of their totalitarianism.

The second solution would be some sort of return to nature so that we start caring about survival and safety again, thereby lessening concern with pleasure-justified beliefs. This is what ideas like anarcho-primitivism and rewilding advocate. Barring some cataclysm that ushers in a new dark age, this solution is very unlikely to be popular.

The third solution is to somehow alter human nature so that the pursuit of pleasure doesn’t rank so highly among our priorities. Organized religions have attempted to do this for centuries using doctrinal laws and taboos. A theme central to many religions, particularly the Abrahamic faiths, is the self-denial of pleasure. This project has been a colossal failure. Humans will continue to be human, even if they feel remorseful about it later. Secular variants on this idea, such as the Chinese social credit system, have also been attempted, but there are more benign examples. Weight Watchers attempts to get people to deny themselves the pleasure of overeating. The world of self-help is piled with abortive attempts to curb human nature. Some sort of brain implant that encourages rationality and rigor in belief formation over the pursuit of pleasure might achieve the same end, but it would have to be mandated and would be fiercely resisted by many (myself included).

The sad truth is that the solutions are even worse than the problem they are intended to solve. Really, the only thing a person can do is to follow Jordan Peterson’s advice and clean their own room. As individuals, we each have (some) control over our own belief formation that allows us to discard poorly formulated or unsupported beliefs. This advice is unlikely to be widely embraced, and where it is, commitment is likely to be weak and inconsistent. But it is better than the alternatives.