An Evolutionary Explanation for Unscientific Beliefs

“Another theory is that humans were created by God,” announced my tenth-grade biology student as she clicked past PowerPoint slides of Darwin’s finches and on to images of a catastrophic flood. After her presentation, I carefully avoided inane debate and simply reiterated the unique ways in which science helps us make accurate predictions. I then prepared for pushback from parents and administrators. Sure enough, the next day the superintendent of the school district came to my classroom with some creationist literature that he was confident would change my mind on the whole theory of evolution by natural selection thing. It didn’t, but it did lead me to pursue a PhD in educational psychology in my search to explain how such beliefs could be maintained in modern times, particularly in the face of such strong counterevidence.

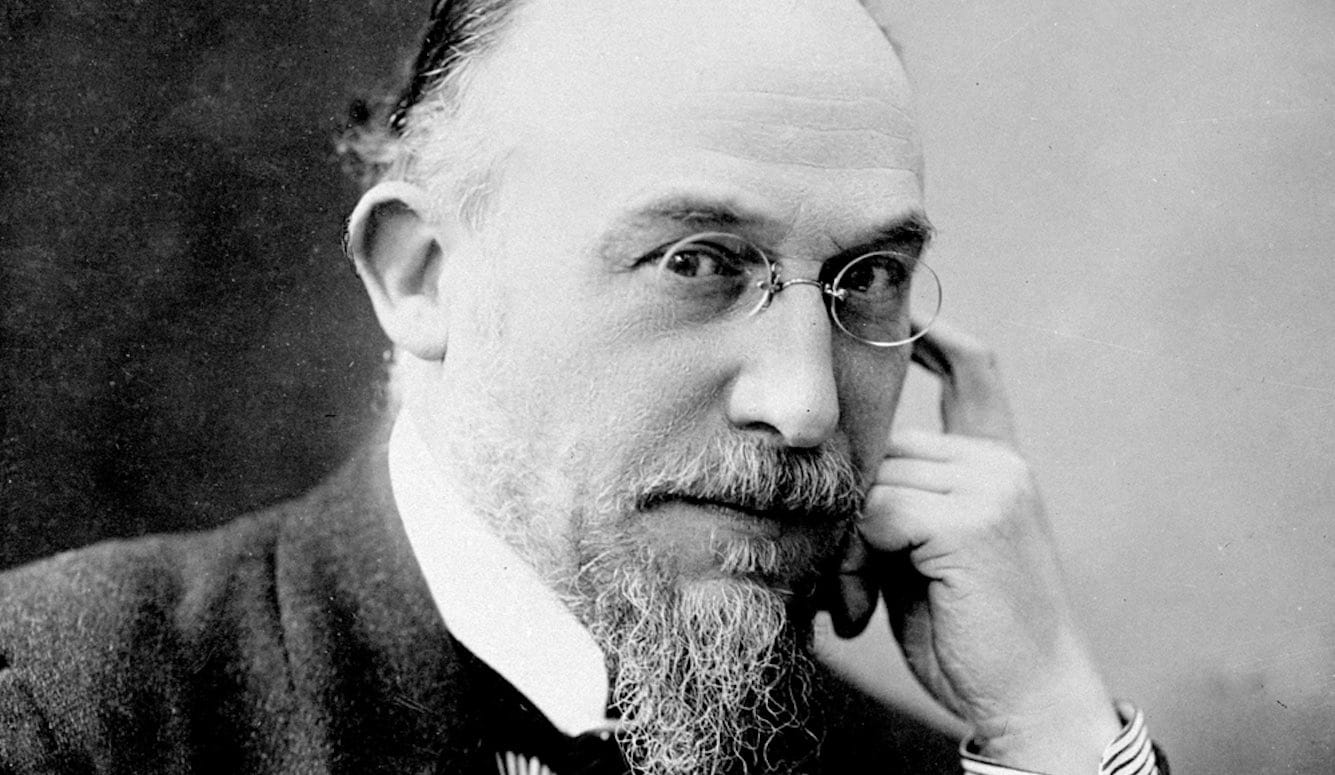

As it turns out, the theory of evolution by natural selection provides a strong explanation for how and why some people don’t believe evolution by natural selection has ever taken place. I initially thought the problem was a matter of knowledge and the standards people have for what constitutes knowledge, but eventually it became clear that holders of anti-scientific beliefs (from William Jennings Bryan of the Scopes Monkey Trial to modern-day conspiracy theorists) typically root their convictions in moral obligation.

To understand morality from an evolutionary point of view, one needs to realize that humans have always existed in groups. Often, these groups compete with one another, and this means group-level selection pressures have influenced individual traits, including psychological traits. For example, if two tribes come into conflict with one another, the tribe with members better able to cooperate will prevail. Thus, psychological phenomena such as empathy, concern for fairness and reciprocity, in-group loyalty, and respect for hierarchy have a selective advantage in contexts of group competition, and we immediately recognize the lack of these traits as psychopathy. In other words, most humans have innate tendencies that guide moral development: participating in fair exchanges and seeing moral violators punished are both inherently pleasurable, whereas witnessing injustice and suffering is inherently uncomfortable (just as sweet tastes are innately pleasurable and bitter tastes are innately aversive, even for infants).

Thus, what we consider moral and why we consider it moral are not arbitrary, nor are they solely guided by social learning. Our moral intuitions are rooted in natural selection’s answers to social problems that have consistently arisen throughout our evolutionary past. Nonetheless, what we readily recognize as moral is dependent on a wide range of conceptual abilities that must be flexible enough to adapt to cultural contexts and be utilized correctly in specific social circumstances (for instance, empathy for an in-group member’s loss but pleasure in an enemy’s loss), so a significant part of our moral intuitions is dependent on learning and social experience as well.

This dynamic interaction of genes, culture, and learning is often referred to as gene-culture coevolution, which posits that as humans began to rely on the transmission of cultural knowledge for survival, cultural learning became a factor that interacts with genes to guide evolutionary outcomes. For example, Inuit people living in the Arctic rely on complex strategies for obtaining food and shelter. The loss of even a small amount of this knowledge could be catastrophic, so the ability of an individual to learn (and of the group to teach) the necessary knowledge and skills is a selective pressure that has influenced Inuit genes and traits.

Scientists have a pretty good idea of when this cultural learning gained traction as a selective pressure. Paleontologists have found right-handed cutting and carving tools from about two million years ago. Handedness is a result of specialized functions for the left and right halves of the brain (brain lateralization), and brain lateralization is evidence of abstract language abilities, the foundation of cultural transmission. Thus, for about two million years, maybe more, gene-culture coevolution has influenced many of our learning biases, including providing psychological mechanisms by which cultural and social pressures can override firsthand experiences and rational thoughts.

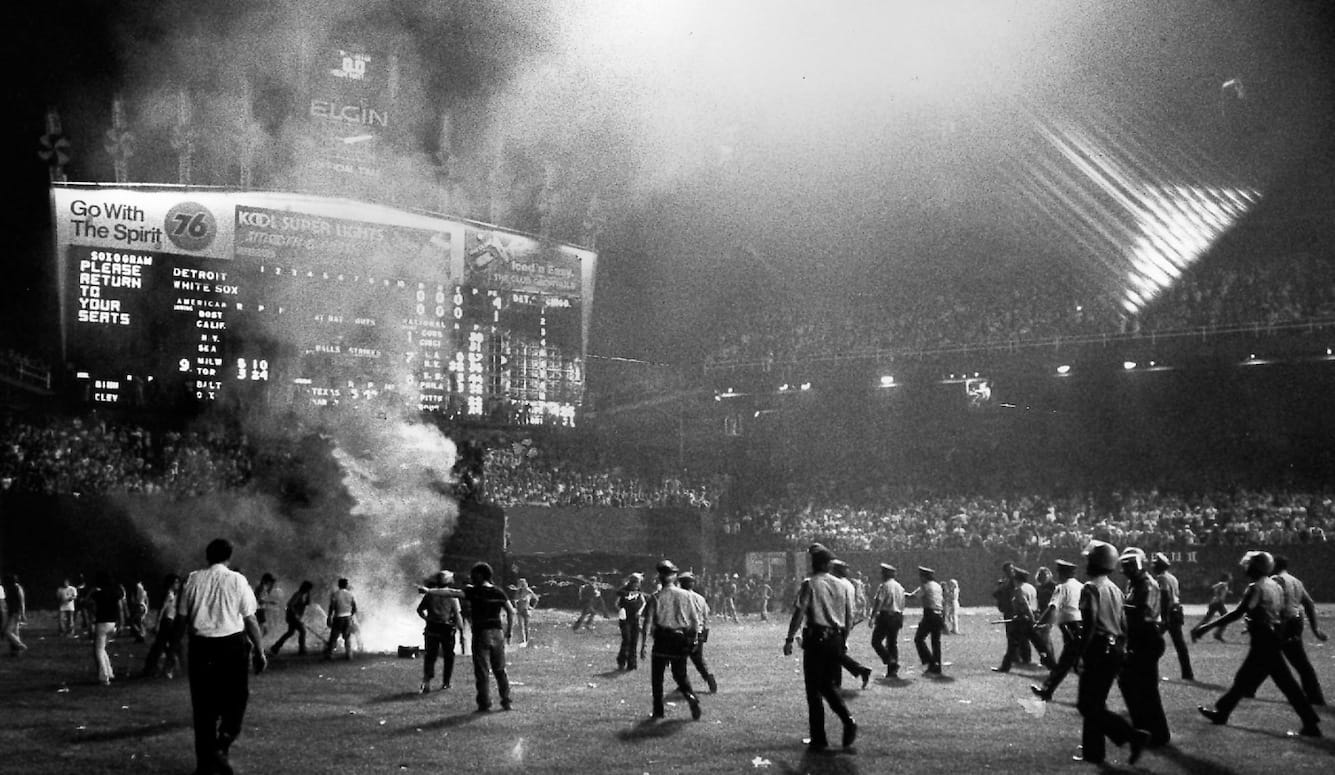

Prior to science and accurate causal models for natural phenomena, cultures themselves evolved through trial and error, relying on superstition, myth, and tradition to perpetuate survival-enhancing knowledge and skills. In such circumstances, an ability to override rational thoughts in favor of conformity could have a reproductive benefit. We can see evidence of such conformity biases in neuroimaging studies: when participants change their opinions to match those of others, the part of the brain involved in feelings of pleasure and happiness becomes more active. We’re also more likely to imitate and learn from high-prestige individuals, which is why high-prestige individuals are paid so much to market products (a phenomenon known as the “prestige effect”). Finally, in the most extreme cases, high emotional arousal can completely shut down a person’s rational faculties. Why would an academic discussion of sediment layers in the earth’s crust catalyze a high-intensity emotional response from someone? Because it threatens their membership in their tribe, and our brains are wired so that our emotional faculties can shut down our rational faculties in the face of such a threat.

Which brings us back to anti-scientific beliefs. If you are a member of a group in which membership is dependent on maintaining certain beliefs, there will be nothing more emotionally arousing than threats to those beliefs, which are in effect threats to the integrity of your group and your membership in it. For millions of years the greatest threat to our ancestors came not from lions or snakes but from ostracism, which would have left them vulnerable to all sorts of harms. If you doubt that these psychological mechanisms exist, try using evidence to convince a creationist that evolution by natural selection occurs, a climate change denier that human-induced climate change is real, or a die-hard Cowboys fan that the Green Bay Packers are a better football team. Most likely you have already tried something like this, and you know very well that it is futile, enraging, and sometimes even traumatic.

However, there is hope. Emotions themselves do not drive moral intuitions or these in-group biases, and neurological evidence shows that brains are sensitive to moral relevance prior to emotional relevance. The brain is not evolutionarily wired for the “purpose” of emotions. The brain is wired to accomplish complex social goals, and it uses emotions as another source of information to that end. This is relevant in science education and communication because when rational explanations and evidence for one’s position are lacking (for example, in the case of creationism), proponents will default to reframing the debate as a moral one. Appeals will be made to moral foundations such as care, harm, justice, respect for authority, in-group loyalty, cleanliness, purity, or sanctity, either implicitly or explicitly. It is this moral framing that stimulates the emotional response, not the other way around; and this moral framing is designed to take advantage of some of our most deeply evolved psychological traits.

In conclusion, science communication and science education are done by humans, and each human is the result of millions of years of social selective pressures that have come to prioritize groupishness. As societies, we build conceptual domains of morality on the foundations of our evolved moral traits, and we have designed cultural tools and institutions in support of this process. Certain facets of our moral world and our ability to learn are innate, but the ways in which these moral tools are deployed are culturally constructed. We are responsible for creating our moral world using the vestigial remnants of our tribal pasts and the accumulated wisdom of our evolved moral intuitions. Ignoring this leads to failed science education and communication efforts and baffled science teachers and communicators.

Finally, astute observers might have noticed a recent trend toward moralized rationality in which scientists are readily framing issues in science as moral issues (e.g., GMOs, climate change, and vaccinations), and group membership and social standing are contingent upon taking a particular stance. This seems to be an inevitability of humans doing science. But, given the power of human social groups and their ability to think and act irrationally when riled by moral concern, it is also risky.