When ‘Ethics Review’ Becomes Ideological Review: The Case of Peter Boghossian

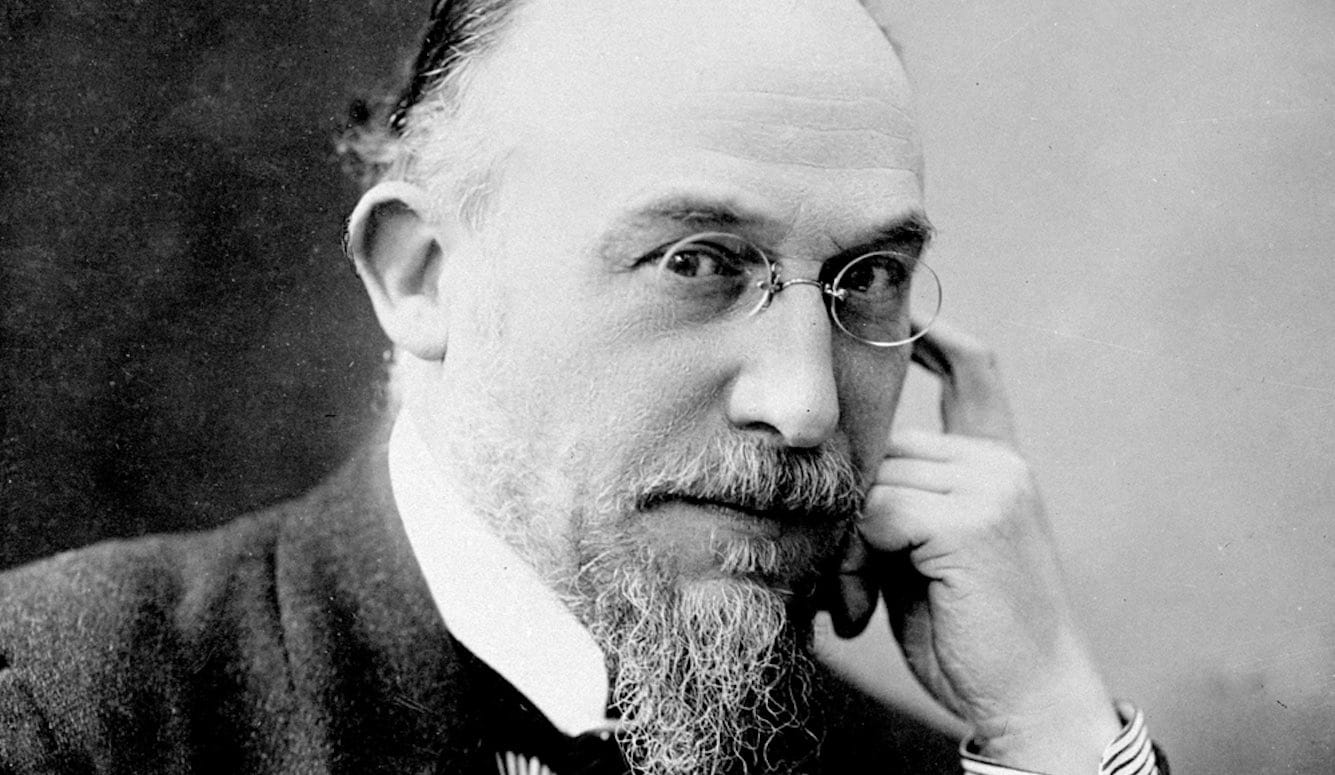

On Tuesday, Peter Boghossian, an assistant professor of philosophy at Portland State University (PSU) in Oregon, publicly shared a letter he’d received from his employer, outlining the results of an academic misconduct investigation into his now-famous 2018 “grievance-studies” investigation. As was widely reported in the Wall Street Journal, Quillette and elsewhere, Boghossian, researcher James Lindsay and Areo editor Helen Pluckrose submitted nonsensical faux-academic papers to journals in fields such as gender, race, queer and fat studies, some of which passed peer review—and were even published—despite their ludicrous premises. The project was defended by 1990s-era academic hoaxer Alan Sokal, who famously performed a somewhat similar send-up of fashionable academic culture two decades ago. While many cheered Boghossian’s exposé, some scholars within these fields were horrified, and it has long been known that Boghossian, by virtue of his PSU affiliation, would be vulnerable to blowback.

PSU’s Institutional Review Board decided that Boghossian has committed “violations of human subjects’ rights and protection”—the idea here being that the editors who operate academic journals, and their peer reviewers, are, in a broad sense, “human subjects”—and that his behavior “raises concerns regarding a lack of academic integrity, questionable ethical behavior and employee breach of rules.” As punishment, he is “forbidden to engage in any human-subjects-related [or sponsored] research as principal investigator, collaborator or contributor.”

This is a bit rich, of course, since the point of Boghossian’s project was to expose the lack of “academic integrity” in whole academic fields. Such a project would be impossible to conduct if editors were made aware of the research methods in advance, so the effect of the sanctions against Boghossian may be reasonably interpreted by Boghossian’s defenders (including me) as a means to punish him for exposing the rot in certain sectors of academia—and to deter others from following his example.

For those who are not steeped in the protocols of university life, all of this may seem confusing: How could the gatekeeping function of research-ethics oversight be used to achieve this result? The answer is that this field has drastically changed since its original conception seven decades ago. It once was about preventing harm. Now, it’s about protecting ideas.

Before the Second World War, there weren’t really any systematic rules in place governing what kind of research could be done by academics on human subjects. But the horrific experiments conducted by the Nazis raised awareness of the need to impose limits. Indeed, the basis for modern research ethics policy, the Nuremberg Code, emerged from the Nuremberg trials in the late 1940s.

The code, which begins with the sentence, “the voluntary consent of the human subject is absolutely essential,” outlines principles that medical scientists today take as fundamental truths: the risk of harm must be outweighed by the potential for benefit; testing on animals and other methods should be used to estimate risk of harm to humans; and experiments “should be so conducted as to avoid all unnecessary physical and mental suffering and injury.”

U.S. officials refined the principles in the Nuremberg Code and incorporated them into the 1974 National Research Act, which formalized the requirements for institutional review boards (IRBs), also called research ethics boards (REBs). (As names vary by region, I’ll use “IRB” here to mean a group responsible for ethics review at a research institution.) This was done both through statute and through the work of the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, a body created under the National Research Act. This commission produced a highly influential 1979 document called the Belmont Report, which instructed IRBs on three high-level principles: respect for persons, beneficence and justice.

The report acknowledges the sometimes difficult-to-define nature of “consent,” noting, by way of example, that “under prison conditions, [potential subjects] may be subtly coerced or unduly influenced to engage in research activities for which they would not otherwise volunteer.” It also notes that the act of participating in research presents an opportunity to be helpful to humankind, and so consent should not be unreasonably withheld.

In discussing the idea of “beneficence,” the authors direct researchers to ensure they “maximize possible benefits and minimize possible harms,” while acknowledging the difficulty of ascertaining when it is justifiable to seek certain benefits despite the risks involved. Under the heading of “justice” comes the principle that benefits and harms should be allocated fairly. The report discusses examples of how a minority group with little power could be more easily persuaded or even subtly coerced to participate in a study, perhaps for administrative convenience: “Social justice requires that distinctions be drawn between classes of subjects that ought, and ought not, to participate in any particular kind of research, based on the ability of members of that class to bear burdens and on the appropriateness of placing further burdens on already burdened persons.”

To a modern research scientist, all of this will seem like common sense, and such principles now are taught even in some undergraduate courses. But their universal adoption can mask real questions of interpretation, such as what the term “harm” means. In some cases—such as Nazi experiments aimed at determining a prisoner’s capacity for pain—the presence of harm is horrifyingly obvious. But in many modern contexts, the project of defining such terms is much more difficult, and subject to all sorts of political and ideological influences.

I am Canadian, and affiliated with the University of Alberta. But modern academic culture is little concerned with borders. And research ethics principles have become somewhat standardized internationally, at least in western countries.

In Canada, ethics principles are enforced in large part by universities themselves, and through the funding criteria applied by granting bodies. This, too, is typical of how other countries operate.

Canadian institutions are bound by the Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans, 2nd edition (TCPS2), a set of guidelines federal research funding agencies first published in 1998 that has evolved over time. Today the TCPS2 repeats familiar calls for researchers to procure informed consent and to be mindful of risk-reward ratios, and uses very similar language to that in the Belmont Report. But the document also takes considerable linguistic liberties with the word “harm.” The word now is taken to include not only actual physical and psychological harms, as the Nuremberg Code authors would have recognized them, but the causing of mere offence or reputational damage.

For example, the TCPS2’s chapter on genetics research warns against research that “may raise ethical concerns regarding stigmatization,” or cause “social disruption in communities or groups.” It goes on to direct researchers to discuss with group leaders “the risks and potential benefits of the research to the community or group.”

The TCPS2 also has a chapter on research involving Indigenous peoples. It notes that scientific research approaches “have not generally reflected Aboriginal world views,” and requires that Indigenous peoples’ “distinct world views” be represented in all aspects of research, including “analysis and dissemination of results.” An obvious problem here is that not all “world views” (whether they emerge from traditional Indigenous societies or from traditional Western societies) are consistent with the scientific method.

The policy directs researchers to plan their research such that it “should enhance [Indigenous peoples’] capacity to maintain their cultures, languages, and identities.” These may be worthy social and political goals, but embedding them explicitly in a document that purports to instruct academics on ethics has the effect of turning researchers into de facto activists. Over time, sound research and science generally will improve the human condition as an indirect result of the accumulation of knowledge. But recognizing this fact is very different from a policy of insisting that every act of research must be justified according to its role in directly promoting a specific social and political agenda regarding a specific demographic group.

The document warns that the requirement of “justice,” a fundamental principle listed in both the Belmont Report and the TCPS2, may be compromised by such actions as “misappropriation of sacred songs, stories and artefacts, devaluing Aboriginal peoples’ knowledge as primitive or superstitious…and dissemination of information that has misrepresented or stigmatized entire communities.” The problems here begin to multiply. The word “misappropriation,” for instance, now has a completely elastic definition that expands daily, with every new social-media outrage. Moreover, the very idea of the “sacred”—whether it is being applied in an Indigenous or non-Indigenous context—is in complete tension with the truth-seeking project that informs true research, which always should be aimed at dispelling dogma and superstition, “primitive” or otherwise.

Regarding research that may uncover “sacred knowledge,” the policy informs us that “determination of what information may be shared, and with whom, will depend on the culture of the community involved.” The policy also warns that “territorial or organizational communities or communities of interest engaged in collaborative research may consider that their review and approval of reports and academic publications is essential to validate findings, correct any cultural inaccuracies, and maintain respect for community knowledge (which may entail limitations on its disclosure).”

Though the authors seem to have been careful to craft this language in a way that doesn’t explicitly permit an outright publication veto, this seems to be what is effectively mandated. When a disagreement between researchers and Indigenous communities arises on the interpretation of data, the policy directs the researchers to either provide the offended community with an opportunity to make its views known, or report their side of the disagreement. This “opportunity to contextualize the findings,” in the euphemistic wording of the TCPS2, is analogous to forcing immunologists to reserve a few paragraphs at the end of their papers for a dissent penned by anti-vaccine activists.

Seen in light of the history of research ethics, the trend here is clear, and it helps us understand the real reasons why Peter Boghossian was sanctioned. Over the last 70 years or so, we’ve moved from (a) preventing actual harm to an individual, to (b) protecting a group’s reputation, stature or prestige. In effect, some groups in our society are to be protected from research that may cast into doubt preferred narratives, even if those narratives are completely at odds with what we learn about the natural world. Because these principles are couched in the language of ethics codes, violators may be decried not only as misguided or even ignorant, but literally “unethical.” And while those accused of poor scholarship often get a fair trial, so to speak, those accused of being unethical are seen differently.

Most of these disputes play out behind closed doors. But Boghossian, a rarity among academics, received a deluge of support from both the broad public and high-profile intellectuals, who recognized that, far from engaging in unethical behaviour, he was exposing a wider culture of incompetence and cultishness in the constellation of liberal-arts disciplines that he and his colleagues called grievance studies. He wasn’t accused of risking the infliction of injury or harm to anyone (as any ordinary person would understand those terms). He wasn’t even accused of poor scholarship.

I spoke to Boghossian on the phone while preparing this article. He wondered aloud whether an analogous sort of disciplinary process could unfold in other fields. If, for instance, the peer-reviewed engineering literature promoted an obviously unstable design for a bridge (for fear of offending the “sacred” architectural principles of some professional subculture or other), anyone who exposed the practice would be thanked for their efforts—as such exposure likely would serve to save many lives. In the case of grievance studies, however, there are no lives at stake. The purpose of scholars in these fields isn’t to improve human societies, but rather to find new ways of impugning them.

As we spoke, Boghossian laughed about the many demands he got from critics for proof that this research was approved by an IRB (which, of course, it wasn’t). I have seen the same process in regard to my own controversial research, as discussed recently in Quillette. When there’s nothing wrong with one’s research, but people don’t like the results or its implications, they can always fire off an ethics complaint. When you don’t have a coherent counterargument, a well-staffed ethics office often is the only body that will return your emails.

Moreover, since these offices often are staffed and served by academics who share the same cliquish views—and enforce the same no-go zones—the whole idea of reviewing ethics effectively can blur into enforcing ideology. I dare say that some might even call it unethical.