Facebook Has a Right to Block 'Hate Speech'—But Here’s Why It Shouldn’t

The evolution of our content policy not only risked the core of Facebook’s mission, but jeopardized my own alignment with the company.

The article that follows is the first instalment of “Who Controls the Platform?”—a multi-part Quillette series authored by social-media insiders. Our editors invite submissions to this series, which may be directed to [email protected].

In late August, I wrote a note to my then-colleagues at Facebook about the issues I saw with political diversity inside the company. You may have read it, because someone leaked the memo to the New York Times, and it spread outward rapidly from there. Since then, a lot has happened, including my departure from Facebook. I never intended my memos to leak publicly—they were written for an internal corporate audience. But now that I’ve left the company, there’s a lot more I can say about how I got involved, how Facebook’s draconian content policy evolved, and what I think should be done to fix it.

My job at Facebook never had anything to do with politics, speech, or content policy—not officially. I was hired as a software engineer, and I eventually led a number of product teams, most of which were focused on user experience. But issues related to politics and policy were central to why I had come to Facebook in the first place.

When I joined the Facebook team in 2012, the company’s mission was to “make the world more open and connected, and give people the power to share.” I joined because I began to recognize the central importance of the second half of the mission—give people the power to share—in particular. A hundred years from now, I think we’ll look back and recognize advances in communication technologies—technologies that make it faster and easier for one person to get an idea out of their head and in front of someone else (or the whole world)—as underpinning some of the most significant advances in human progress that humanity has ever witnessed. I still believe this. It’s why I joined Facebook.

And for about five years, we made headway. Both the company and I had our share of ups, downs, growth and setbacks. But, by and large, we aspired to be a transparent carrier of people’s stories and ideas. When my team was building the “Paper” Facebook app, and then the later redesigned News Feed, we metaphorically aspired for our designs to be like a drinking glass: invisible. Our goal was to get out of the way and let the content shine through. Facebook’s content policy reflected this, too. For a long time, the company was a vociferous (even if sometimes unprincipled) proponent of free speech.

As of 2013, this was essentially Facebook’s content policy: “We prohibit content deemed to be directly harmful, but allow content that is offensive or controversial. We define harmful content as anything organizing real world violence, theft, or property destruction, or that directly inflicts emotional distress on a specific private individual (e.g. bullying).”

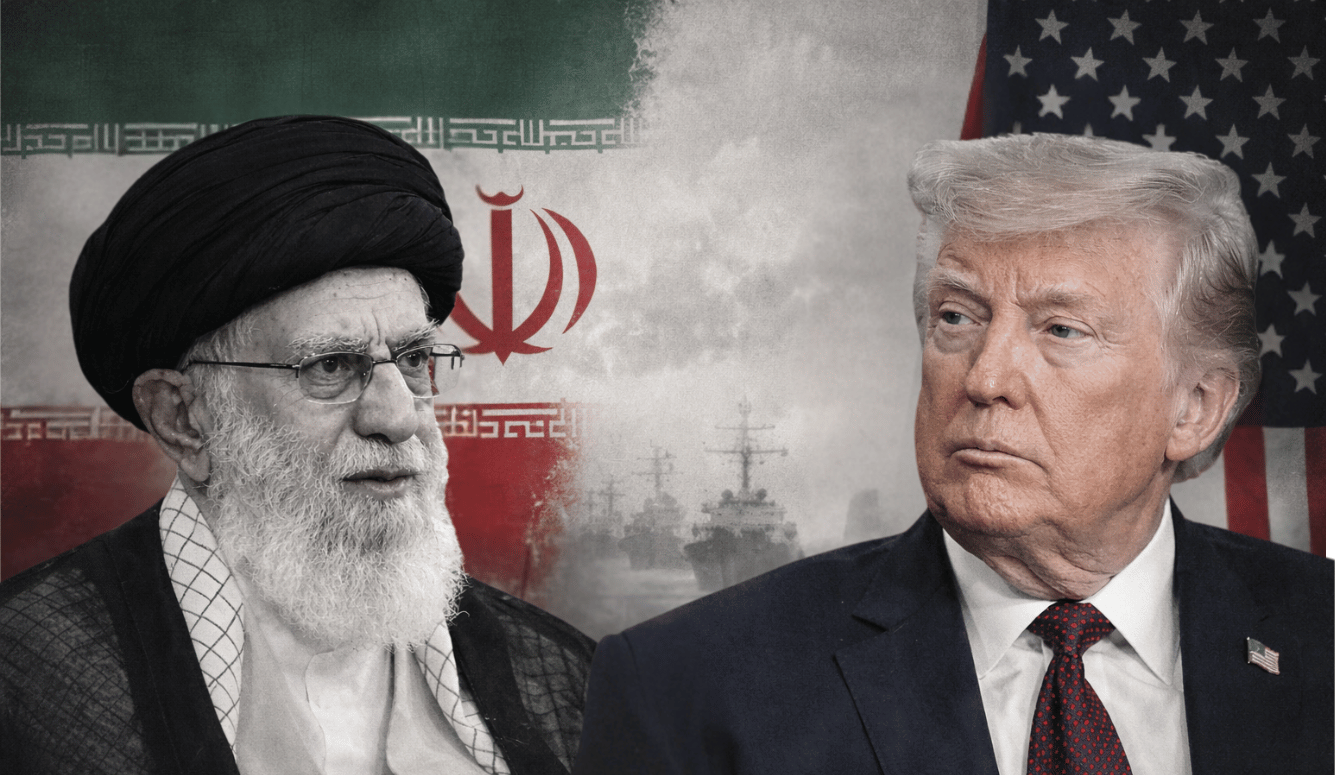

By the time the 2016 U.S. election craze began (particularly after Donald Trump secured the Republican nomination), however, things had changed. The combination of Facebook’s corporate encouragement to “bring your authentic self to work” along with the overwhelmingly left-leaning political demographics of my former colleagues meant that left-leaning politics had arrived on campus. Employees plastered up Barack Obama “HOPE” and “Black Lives Matter” posters. The official campus art program began to focus on left-leaning social issues. In Facebook’s Seattle office, there’s an entire wall that proudly features the hashtags of just about every left-wing cause you can imagine—from “#RESIST” to “#METOO.”

In our weekly Q&As with Mark Zuckerberg (known internally as “Zuck”), the questions reflected the politicization. I’m paraphrasing here, but questions such as “What are we doing about those affected by the Trump presidency?” and “Why is Peter Thiel, who supports Trump, still on our board?” became common. And to his credit, Zuck always handled these questions with grace and clarity. But while Mark supported political diversity, the constant badgering of Facebook’s leadership by indignant and often politically intolerant employees increasingly began to define the atmosphere.

As this culture developed inside the company, no one openly objected. This was perhaps because dissenting employees, having watched the broader culture embrace political correctness, anticipated what would happen if they stepped out of line on issues related to “equality,” “diversity,” or “social justice.” The question was put to rest when “Trump Supporters Welcome” posters appeared on campus—and were promptly torn down in a fit of vigilante moral outrage by other employees. Then Palmer Luckey, boy-genius Oculus VR founder, whose company we acquired for billions of dollars, was put through a witch hunt and subsequently fired because he gave $10,000 to fund anti-Hillary ads. Still feeling brave?

It’s not a coincidence that it was around this time that Facebook’s content policy evolved to more broadly define “hate speech.” The internal political monoculture and external calls from left-leaning interest groups for us to “do something” about hateful speech combined to create a sort of perfect storm.

As the content policy evolved to incorporate more expansive hate speech provisions, employees who objected privately remained silent in public. This was a grave mistake, and I wish I’d recognized the scope of the threat before these values became deeply rooted in our corporate culture. The evolution of our content policy not only risked the core of Facebook’s mission, but jeopardized my own alignment with the company. As a result, my primary intellectual focus became Facebook’s content policy.

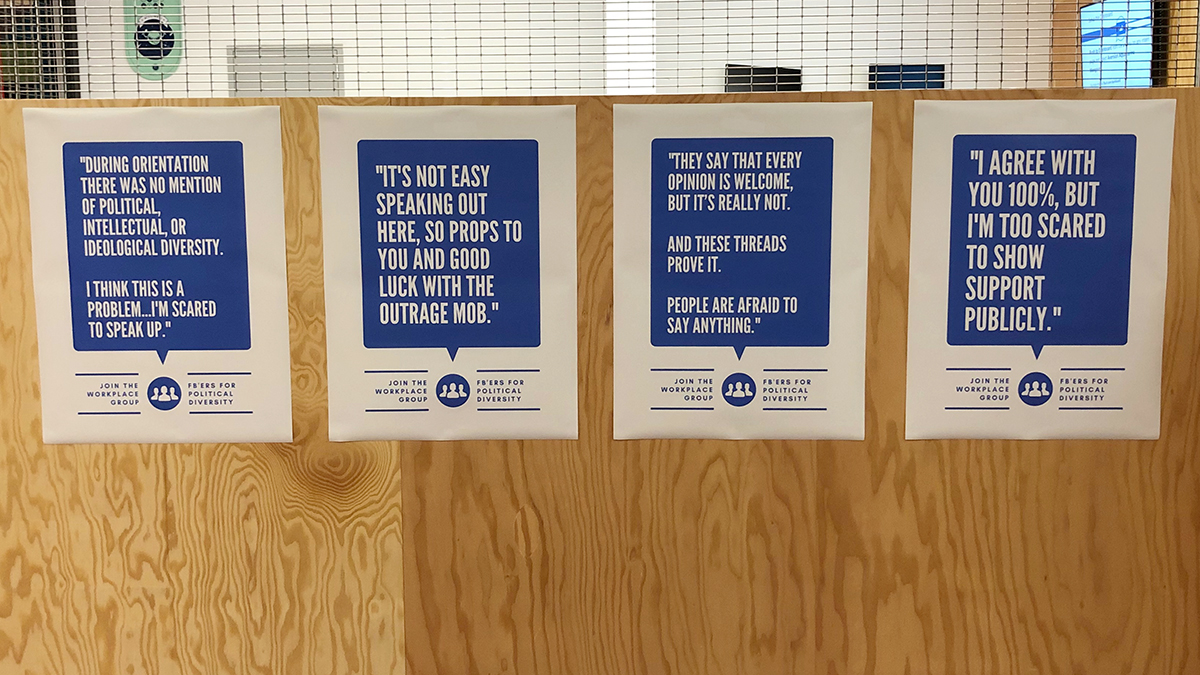

I quickly discovered that I couldn’t even talk about these issues without being called a “hatemonger” by colleagues. To counter this, I started a political diversity effort to create a culture in which employees could talk about these issues without risking their reputations and careers. Unfortunately, while the effort was well received by the 1,000 employees who joined it, and by most senior Facebook leaders, it became clear that those leaders were committed to sacrificing free expression in the name of “protecting” people. As a result, I left the company in October.

Let’s fast-forward to present day. This is Facebook’s summary of their current hate speech policy:

We define hate speech as a direct attack on people based on what we call protected characteristics—race, ethnicity, national origin, religious affiliation, sexual orientation, caste, sex, gender, gender identity, and serious disease or disability. We also provide some protections for immigration status. We define attack as violent or dehumanizing speech, statements of inferiority, or calls for exclusion or segregation.

The policy aims to protect people from seeing content they feel attacked by. It doesn’t just apply to direct attacks on specific individuals (unlike the 2013 policy), but also prohibits attacks on “groups of people who share one of the above-listed characteristics.”

If you think this is reasonable, then you probably haven’t looked closely at how Facebook defines “attack.” Simply saying you dislike someone with reference to a “protected characteristic” (e.g., “I dislike Muslims who believe in Sharia law”) or applying a form of moral judgment (e.g., “Islamic fundamentalists who forcibly perform genital mutilation on women are barbaric”) are both technically considered “Tier-2” hate speech attacks, and are prohibited on the platform.

This kind of social-media policy is dangerous, impractical, and unnecessary.

The trouble with hate speech policies begins with the fact that there are no principles that can be fairly and consistently applied to distinguish what speech is hateful from what speech is not. Hatred is a feeling, and trying to form a policy that hinges on whether a speaker feels hatred is impossible to do. As anyone who’s ever argued with a spouse or a friend has experienced, grokking someone’s intent is often very difficult.

As a result, hate speech policies rarely just leave it at that. Facebook’s policy goes on to list a series of “protected characteristics” that, if targeted, constitute supposedly hateful intent. But what makes attacking these characteristics uniquely hateful? And where do these protected characteristics even come from? In the United States, there are nine federally protected classes. California protects 12. The United Kingdom protects 10. Facebook has chosen 11 publicly, though internally they define 17. The truth is, any list of protected characteristics is essentially arbitrary. Absent a principled basis, these are lists that are only going to expand with time as interest and identity groups claim to be offended, and institutions cater to the most sensitive and easily offended among us.

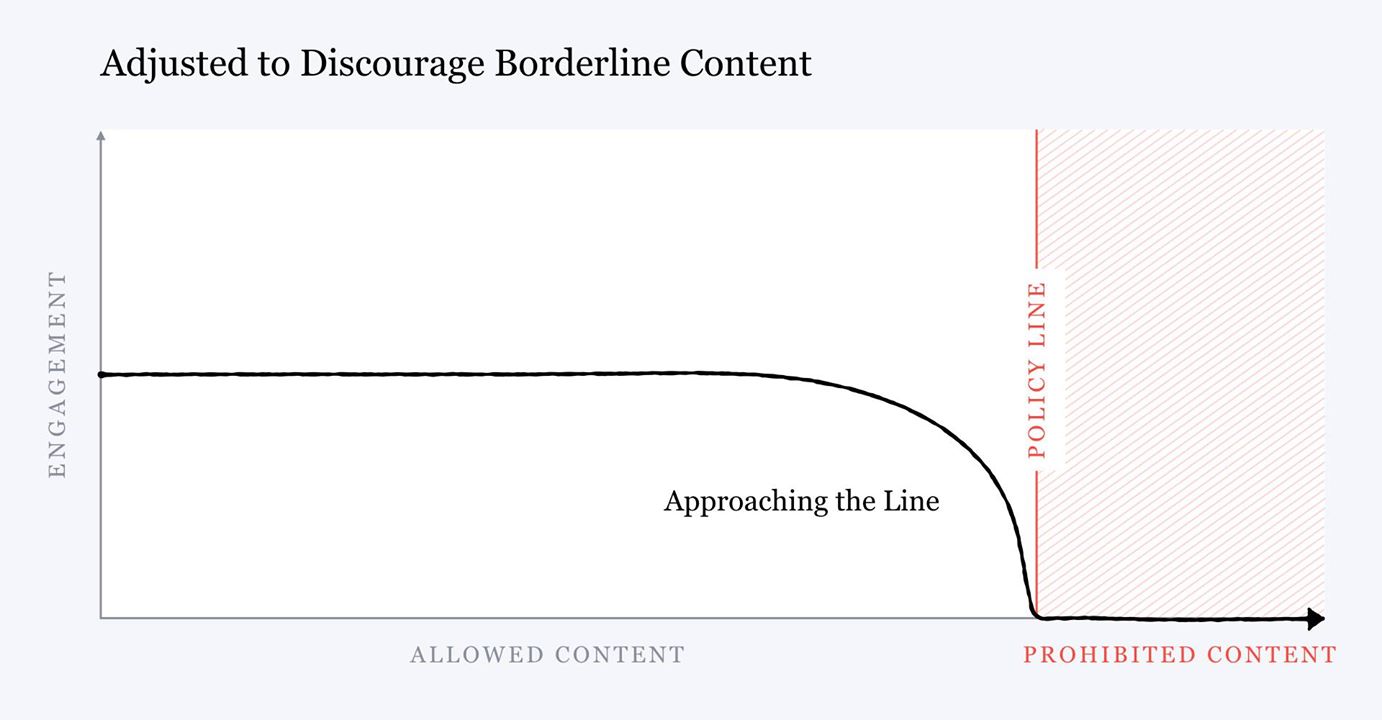

The inevitable result of this policy metastasis is that, eventually, anything that anyone finds remotely offensive will be prohibited. Mark Zuckerberg not only recently posted a note that seemed to acknowledge this, but included a handy graphic describing how they’re now beginning to down-rank content that isn’t prohibited, but is merely borderline.

Almost everything you can say is offensive to somebody. Offense isn’t a clear standard like imminent lawless action. It is subjective—left up to the offended to call it when they see it.

On one occasion, a colleague declared that I had offended them by criticizing a recently installed art piece in Facebook’s newest Menlo Park office. They explained that as a transgender woman, they felt the art represented their identity, told me they “didn’t care about my reasoning,” and that the fact they felt offended was enough to warrant an apology from me. Offense (or purported offense) can be wielded as a political weapon: An interest group (or a self-appointed representative of one) claims to be offended and demands an apology—and, implicitly with it, the moral and political upper hand. When I told my colleague that I meant what I said, that I didn’t think it was reasonable for them to be offended, and, therefore, that I wouldn’t apologize, they were left speechless—and powerless over me. This can be awkward and takes social confidence to do—I don’t want to offend anyone—but the alternative is far worse.

Consider Joel Kaplan, Facebook’s VP for Global Public Policy—and a close friend of recently confirmed U.S. Supreme Court Justice Brett Kavanaugh—who unnecessarily apologized to Facebook employees after attending Kavanaugh’s congressional hearing. Predictably, after securing an apology from him, the mob didn’t back down. Instead, it doubled down. Some demanded Kaplan be fired. Others suggested Facebook donate to #MeToo causes. Still others used the episode as an excuse to berate senior executives. During an internal town hall about the controversy, employees interrupted, barked and pointed at Zuck and Sheryl Sandberg with such hostility that several long-time employees walked away, concluding that the company “needed a cultural reset.” The lesson here is that while “offense” is certainly something to be avoided interpersonally, it is too subjective and ripe for abuse to be used as a policy standard.

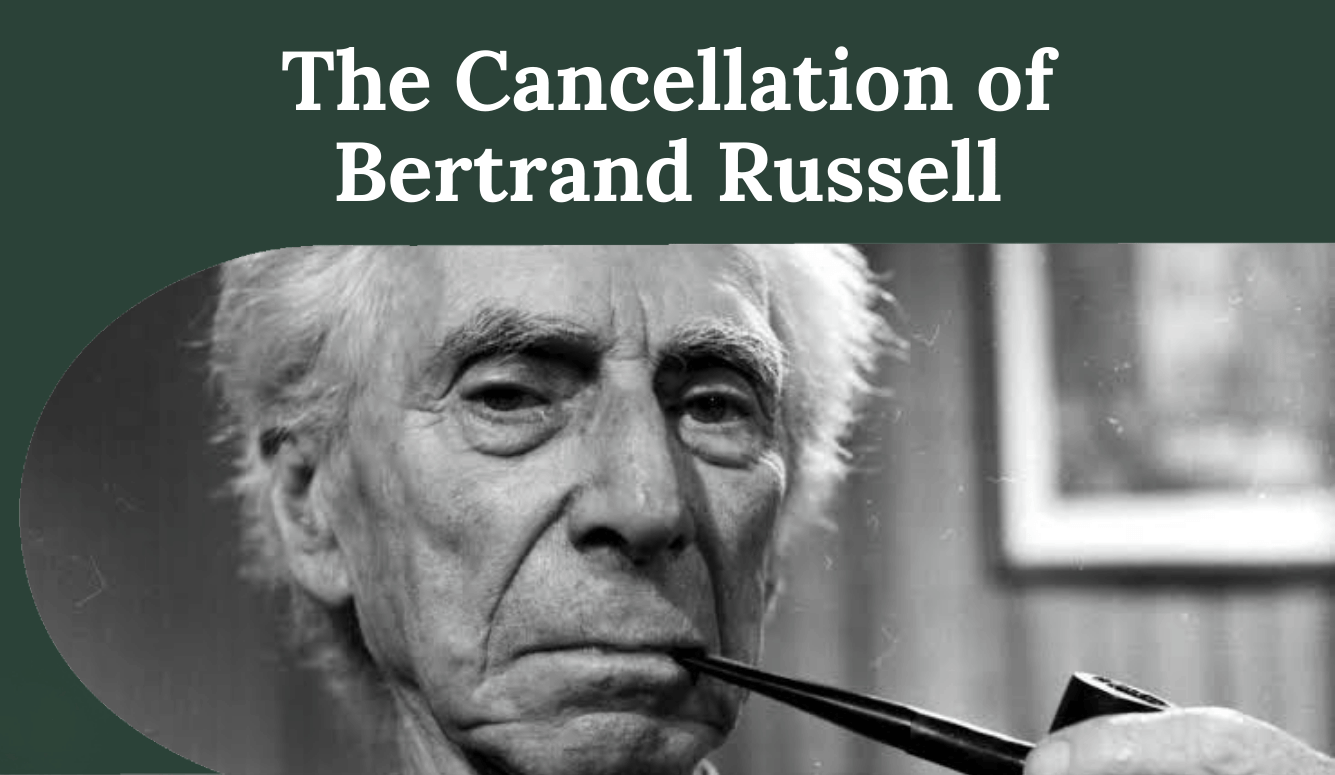

Perhaps even more importantly, you cannot prohibit controversy and offense without destroying the foundation needed to advance new ideas. History is full of important ideas, like heliocentrism and evolution, that despite later being shown to be true were seen as deeply controversial and offensive because they challenged strongly held beliefs. Risking being offended is the ante we all pay to advance our understanding of the world.

But let’s say you’re not concerned about the slippery slope of protected characteristics, and you’re also unconcerned with the controversy endemic to new ideas. How about the fact that the truths you’re already confident in—for example, that racism is abhorrent—are difficult to internalize if they are treated as holy writ in an environment where people aren’t allowed to be wrong or offend others? Members of each generation must re-learn important truths for themselves (“Really, why is racism bad?”). “Unassailable” truths turn brittle with age, leaving them open to popular suspicion. To maintain the strength of our values, we need to watch them sustain the weight of evidence, argument and refutation. Such a free exchange of ideas will not only create the conditions necessary for progress and individual understanding, but also cultivate the resilience that much of modern culture so sorely lacks.

But let’s now come down to ground level, and focus on how Facebook’s policies actually work.

When a post is reported as offensive on Facebook (or is flagged by Facebook’s automated systems), it goes into a queue of content requiring human moderation. That queue is processed by a team of about 8,000 (soon to be 15,000) contractors. These workers have little to no relevant experience or education, and often are staffed out of call centers around the world. Their primary training about Facebook’s Community Standards exists in the form of 1,400 pages of rules spread out across dozens of PowerPoint presentations and Excel spreadsheets. Many of these workers use Google Translate to make sense of these rules. And once trained, they typically have eight to 10 seconds to make a decision on each post. Clearly, they are not expected to have a deep understanding of the philosophical rationale behind Facebook’s policies.

As a result, they often make wrong decisions. And that means the experience of having content moderated on a day-to-day basis will be inconsistent for users. This is why your own experience with content moderation not only probably feels chaotic, but is (in fact) barely better than random. It’s not just you. This is true for everyone.

Inevitably, some of the moderation decisions will affect prominent users, or frustrate a critical mass of ordinary users to the point that they seek media attention. When this happens, the case gets escalated inside Facebook, and a more senior employee reviews the case to consider reversing the moderation decision. Sometimes, the rules are ignored to insulate Facebook from “PR Risk.” Other times, the rules are applied more stringently when governments that are more likely to fine or regulate Facebook might get involved. Given how inconsistent and slapdash the initial moderation decisions are, it’s no surprise that reversals are frequent. Week after week, despite additional training, I’ve watched content moderators take down posts that simply contained photos of guns—even though the policy only prohibits firearm sales. It’s hard to overstate how sloppy this whole process is.

There is no path for something like this to improve. Many at Facebook, with admirable Silicon Valley ambition, think they can iterate their way out of this problem. This is the fundamental impasse I came to with Facebook’s leadership: They think they’ll be able to clarify the policies sufficiently to enforce them consistently, or use artificial intelligence (AI) to eliminate human variance. Both of these approaches are hopeless.

Iteration works when you’ve got a solid foundation to build on and optimize. But the Facebook hate speech policy has no such solid foundation because “hate speech” is not a valid concept in the first place. It lacks a principled definition—necessarily, because “hateful” speech isn’t distinguishable from subjectively offensive speech—and no amount of iteration or willpower will change that.

Consequently, hate speech enforcement doesn’t have a human variance problem that AI can solve. Machine learning (the relevant form of AI) works when the data is clear, consistent, and doesn’t require human discretion or context. For example, a machine-learning algorithm could “learn” to recognize a human face by reference to millions of other correctly identified human-face images. But the hate speech policy and Facebook’s enforcement of it is anything but clear and consistent, and everything about it requires human discretion and context.

Case in point: When Facebook began the internal task of deciding whether to follow Apple’s lead in banning Alex Jones, even that one limited task required a team of (human) employees scouring months of Jones’ historical Facebook posts to find borderline content that might be used to justify a ban. In practice, the decision was made for political reasons, and the exercise was largely redundant. AI has no role in this sort of process.

No one likes hateful speech, and that certainly includes me. I don’t want you, your friends, or your loved ones to be attacked in any way. And I have a great deal of sympathy for anyone who does get attacked—especially for their immutable (meaning unimportant, as far as I’m concerned) characteristics. Such attacks are morally repugnant. I suspect we all agree on that.

But given all of the above, I think we’re losing the forest for the trees on this issue. “Hate speech” policies may be dangerous and impractical, but that’s not true of anti-harassment policies, which can be defined clearly and applied more consistently. The same is true of laws that prohibit threats, intimidation and incitement to imminent violence. Indeed, most forms of interpersonal abuse that people expect to be covered by hate speech policies—i.e., individual, targeted attacks—are already covered by anti-harassment policies and existing laws.

So the real question is: Does it still make sense to pursue hate speech policies at all? I think the answer is a resounding “no.” Platforms would be better served by scrapping these policies altogether. But since all signs point to platforms doubling down on existing policies, what’s a user to do?

First, it’s important to recognize that much of the content that violates Facebook’s content policy never gets taken down. I’d be surprised if moral criticism of religious groups, for example, resulted in enforcement by moderators today, despite being (as I noted above) technically prohibited by Facebook’s policy. This is a short-lived point, because Facebook is actively working on closing this gap, but in the meantime, I’d encourage you to not let the policies get in your way. Say what you think is right and true, and let the platforms deal with it. One great aspect of these platforms being private (despite some clamoring for them to be considered “public squares”) is that the worst they can do is kick you off. They can’t stop you from using an alternate platform, starting an offline movement, or complaining loudly to the press. And, most importantly, they generally can’t throw you in jail—at least not in the United States.

Second, we should be mindful of the full context—that social media can be both powerfully good and powerfully bad for our lives—when deciding how to use it. For most of us, it’s both good and bad. The truth is, social media is a new phenomenon and frankly no one—including me and my former colleagues at Facebook—has figured out how to perfect it. Facebook should acknowledge this and remind everyone to be mindful about how they use the platform.

That said, you shouldn’t wait for Facebook to figure out how to properly contextualize everything you see. You can and should take on that responsibility yourself. Specifically, you should recognize that what you find immediately engaging isn’t the same thing as what’s true, let alone what’s moral, kind, or just. Simply acknowledging this goes a long way toward correctly framing an intellectually and emotionally healthy strategy for using social media. The content you are drawn to—and that Facebook’s ranking promotes—may be immoral, unkind or wrong—or not. This kind of vigilant awareness builds resilience and thoughtfulness, rather than dependence on a potentially Orwellian institution to insulate us from thinking in the first place.

Whether that helps or not, we should recognize that none of us are entitled to have Facebook (or any other social media service) work these issues out to our satisfaction. Like Twitter and YouTube, Facebook is a private company that we interact with on a wholly voluntary basis—which should mean “to mutual benefit.” As customers, we should give them feedback when we think they’re screwing up. But they have a moral and legal right to ignore that feedback. And we have a right to leave, to find or build alternate platforms, or to decide that this whole social media thing isn’t worth it.

The fact that it would be hard to live without these platforms—which have been around for barely more than a decade—shows how enormously beneficial they’ve become to our lives and the way we live. But the fact that something is beneficial and important does not entitle us to possess it. Nor do such benefits entitle us to demand that governments forcibly impose our will upon those who own and operate such services. Facebook could close up shop tomorrow, and that’d be that.

By all means, Facebook deserves much of the criticism it gets. But don’t forget: we’re asking them to improve. It’s a request, not a demand. So let’s keep the sense of entitlement in check.

Governments, likewise, should respect the fact that these are private companies and that their platforms are their property. Governments have no moral or legal right to tell them how to operate as long as they aren’t violating our rights—and they aren’t. Per the above, regardless of how much we benefit from these platforms or how important we might conclude they’ve become, we do not have a right to have access to them, or have them operate the way we’d like. So as far as the government ought to be concerned, there are no rights violations happening here, and that’s that.

Many argue that what Facebook and other platforms are doing amounts to “censorship.” I disagree. It comes down to the fundamental difference between a private platform refusing to carry your ideas on their property, and a government prohibiting you from speaking your ideas, anywhere, with the threat of prosecution. These are categorically different. The former is distasteful, unwise, and yes, perhaps even a tragic loss of opportunity; the latter infringes on our right to free speech. What’s more, a system of government oversight wouldn’t work, anyway: The entire issue with speech policies is that having anyone decide for you what speech is acceptable is a dangerous idea. Asking a government to do this rather than Facebook is trading a bad idea for a truly Orwellian idea. Such a move would be a far more serious threat to free speech than anything we’ve seen in the United States to date.

Unfortunately, executives at Facebook and Twitter have both been very clear that they think regulation is “inevitable.” They’ve even offered to help draft the rules. But such statements don’t confer upon the government a moral right to regulate these platforms. Whether a company or a person invites a violation of their rights is immaterial to the legitimacy (morally and legally) of such a rights violation. Rights of this type cannot be forfeited.

Moreover, the fact that these huge platforms are open to regulation shouldn’t come as a surprise. Facebook and Twitter are market incumbents, and further regulation will only serve to cement that status. Imposing government-mandated standards would weaken or prohibit competition, effectively making them monopolies, in the legitimate sense, for the first time. Unlike potential new platforms, Facebook and Twitter have the capital and staff to handle onerous, complicated, and expensive new regulations. They can hire thousands of people to review content, and already have top-flight legal teams to handle the challenge of compliance. The best thing governments can do here is nothing. If this is a serious enough issue—and I think it is—competition will emerge if it’s able to do so.

We are the first human beings to witness the creation and growth of a platform that has more users than any country on the planet has people. And with that comes both triumph and failure at mind-bending scale. I’ve had the privilege of witnessing much of this from the inside at Facebook, and the biggest lesson I learned is this: When incredible circumstances create nuanced problems, that is precisely when we need principled thinking the most—not hot takes, not pragmatic, range-of-the-moment action. Principles help us think and act consistently and correctly when dealing with complex situations beyond the scope of our typical intuitive experience.

That means that platforms, users, and governments need to go back to their fundamental principles, and ask: What is a platform’s role in supporting free expression? What responsibility must users take for our own knowledge and resilience? What does it mean for our government to protect our rights and not just “ban the bad”? These are the questions that I think should guide a principled approach toward platform speech.