The Discomforts of Being a Utilitarian

I recently answered the nine questions that make up The Oxford Utilitarianism Scale. My result: “You are very utilitarian! You might be Peter Singer.”

This provoked a complacent smile followed by a quick look around to ensure that nobody else had seen this result on my monitor. After all, outright utilitarians still risk being thought of as profoundly disturbed, or at least deeply misguided. It’s easy to see why: according to my answers, there are at least some (highly unusual) circumstances where I would support the torture of an innocent person or the mass deployment of political oppression.

Choosing the most utilitarian responses to these scenarios involves great discomfort. It is like being placed on a debating team and asked to defend a position you abhor. The idea of actually torturing individuals or oppressing dissent evokes a sense of disgust in me – and yet the scenarios in these dilemmas compel me to say not only that such acts are permissible, but that they’re obligatory. Biting bullets is almost always uncomfortable, which goes a long way toward explaining utilitarianism’s unpopularity. But this discomfort largely melts away once we recognize three caveats relevant to the Oxford Utilitarianism Scale and to moral dilemmas more generally.

The first of these relates to the somewhat misleading nature of these dilemmas. They are set up to appear as though you are being asked to imagine just one thing, like torturing someone to prevent a bomb going off, or killing a healthy patient to save five others. In reality, they are asking two things of you: imagining the scenario at hand, and imagining yourself to be a fundamentally different being – specifically, a being that can know with certainty the consequences of its actions.

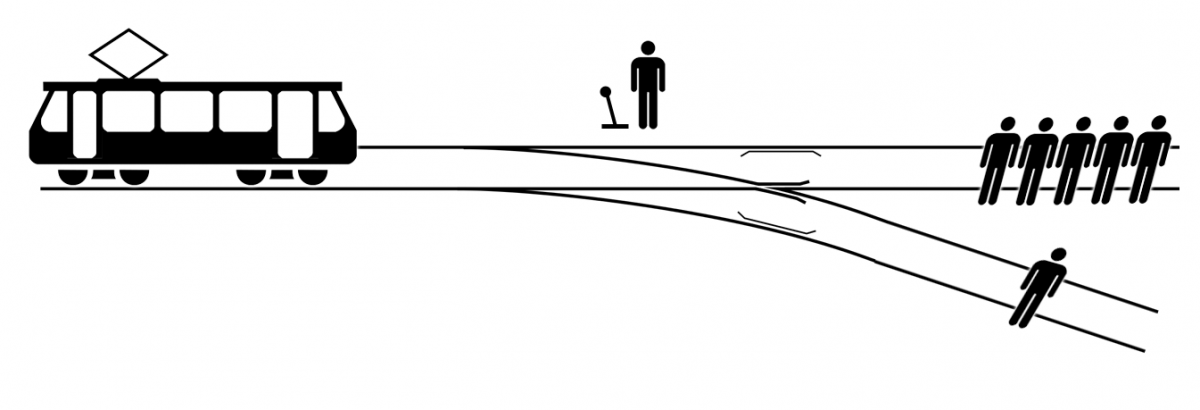

That is, in addition to imagining, say, having a captive who knows where a nuclear device has been hidden, or being in a position to push a fat man in front of a trolley, you also have to imagine knowing that torturing the captive will work, or that the fat man really is heavy enough to derail the trolley. But we are, of course, not clairvoyant beings. Every intuition we have about right and wrong, fair and unfair, evolved or was instilled in creatures that cannot know the future as we know the present.

So what intuitions of right and wrong, fair and unfair, would we have if we were clairvoyant beings? What if we had access to knowledge about the consequences of our actions in the same way that we have access to knowledge about our current surroundings or posture? It’s difficult to imagine, but it certainly seems reasonable to assume we’d have evolved quite different moral intuitions. I’d say clairvoyant beings would probably have few (or at least fewer) qualms about utilitarianism.

On the other hand, the fact that we aren’t clairvoyant is not an argument against utilitarianism; it’s an argument for why human utilitarians, lacking foreknowledge, probably should not push the fat man or support political oppression. Not in the real world, anyway. As for hypothetical worlds where we are also clairvoyant beings, it should be no surprise that our non-clairvoyant intuitions fail us there.

There is a second way in which these scenarios can be misleading: they ask us to assume that their stipulations – the blunt rules and conditions of the world they require us to imagine – are worth taking seriously. The Oxford Utilitarianism Scale is not particularly relevant here, but we can see this issue arise in other scenarios where we are asked, say, to imagine a world where slavery is the only way to maximize overall well-being. The implicit premise is that having a slave (i.e. a highly oppressed person leading an absolutely terrible life) could conceivably create more well-being than the slave loses. Add up all the well-being slave owners gain from having slaves, the thought goes, and it could exceed all the well-being lost by those who are enslaved.

Is this plausible? It is, but only if you picture humans with a psychology fundamentally different from our own – one where being oppressed is not as bad as being an oppressor can be good. Applied to people as we know them, it simply makes no sense. (If you doubt this, see Greene and Baron’s experiments showing how bad we all are, philosophers included, at reasoning about declining marginal utility.)

We are being asked to apply our intuitions about well-being and suffering to hypothetical people who are wired up with a fundamentally different relationship to well-being and suffering. In other words, some of these scenarios don’t merely ask us to envision a situation; their stipulations often also implicitly ask us to imagine people who experience suffering and flourishing in critically different ways than we do. It should come as no surprise, then, that our moral intuitions fail us in these hypothetical worlds. The good news is that we don’t need to take these scenarios seriously. Some are just silly, failing even to tell us anything relevant about our own implicit beliefs or intuitions.

Finally, there is at least one more reason why utilitarian answers to these scenarios create discomfort: they typically imply that you are a failure. In fact, to be a utilitarian is, to some extent, to lead your life as a failure – and perhaps the worst kind of failure: a moral failure. This becomes self-evident when you answer “yes” to scenarios asking whether you would sacrifice your own leg to save another person, or give a kidney to a stranger who needs it. You say you would, but you probably won’t be donating your kidney any time soon. You are a moral failure by your own standards.

We could probably convince our consciences that these extreme actions would ultimately fail to maximize well-being, if only because of the horror toward utilitarianism they would create in others. Overall well-being would be better served if we took our psychological limitations into account and didn’t prescribe the sorts of actions that are likely to backfire by making everyone else terrified of the very idea of striving to maximize well-being. Maximization through moderation seems, paradoxically, the way to go.

But even the demands imposed by this curbed utilitarianism are quite burdensome: it still entails radical and uncomfortable changes to our lives – at least for many of those reading this – and most of us consequently won’t make those changes. Yet most of us also feel like we are good people, or at least not particularly bad ones. This self-perception is difficult to reconcile with the moral failure that utilitarianism insists you are. Accepting such a label is a bitter pill to swallow, perhaps most of all for moral philosophers, who may find it more insulting than anyone else.

Perhaps for this reason more than any other, utilitarianism will probably remain a minority view. And yet the discomfort of this label can also become uplifting if we change our relationship to what it means to be a moral failure. A moral failure need not be a bad person. They could merely be a person who acknowledges their limitations and strives to fail a little less each day. And hopefully, lab-grown kidneys will soon enough help them rationalize away their greedy desire to keep their extra one all to themselves.