AI Debate

Machine Learning, Deep Fakes, and the Threat of an Artificially Intelligent Hate-Bot

Fake news isn’t new. More than a century ago, newspapers owned by William Randolph Hearst and Joseph Pulitzer helped stir up enthusiasm for war against Spain by hyping the dubious claim that Spanish agents had used explosives to sink the USS Maine in Havana Harbor. The cry of “Remember the Maine! To hell with Spain!” even became a battle slogan.

But social media and other new technologies make the spread of fake news easier. Now everyone, not just government propagandists and major mass-media magnates, can get in on the action. That includes hostile foreign powers. And it remains an open question whether tech-supercharged fake news will make democracy seem incompatible with a free and open media environment.

One might hope that our elected leaders would discourage, or at least ignore, voter opinions that emerge from false information. But politics is no different from any other business: The most successful suppliers are the ones who realize that the customer is always right. As we’ve learned during the COVID pandemic, this applies to matters of life and death. If a self-selected media diet causes US Democratic voters to favor mandatory masking in kindergartens, and Republicans to oppose all vaccine mandates, then politicians on either side who buck their party members’ majority views can be expected to perform poorly in primaries.

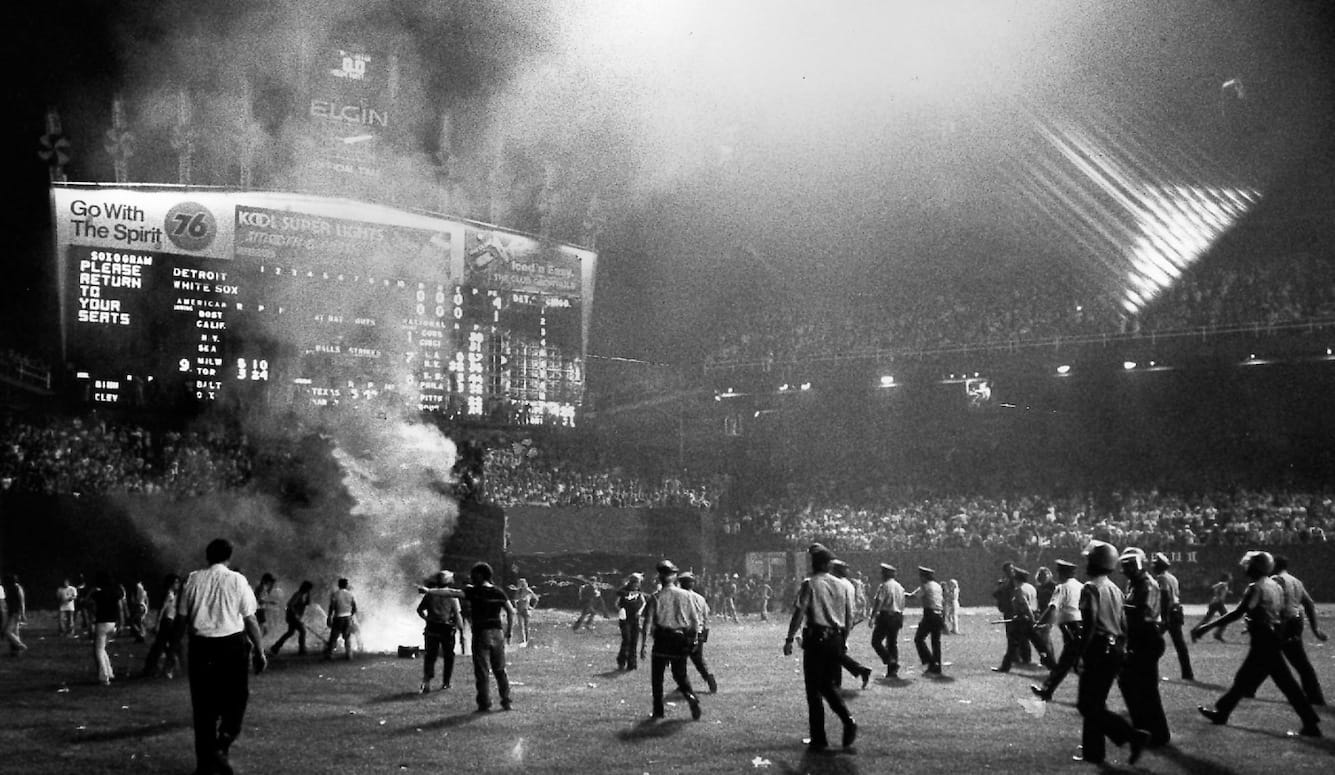

We have not yet begun to scratch the surface of how fake news can be weaponized by unscrupulous actors. Imagine that China has secretly decided to invade Taiwan, and wants to complicate the US response by propelling Americans into another bout of internecine culture wars. Chinese propagandists would be eager to find and disseminate a video similar to that of police abusing George Floyd in 2020. But if they couldn’t find such material, why wouldn’t they manufacture it?

Computer algorithms can already create bogus but convincing “deepfake” videos of real people saying and doing anything the programmers want. (See, for example, these deepfakes of Tom Cruise.) This technology will soon spread, allowing pretty much anyone to create Hollywood-quality visual effects.

Through a combination of deepfake technology and coordinated post-release signal-boosting, China could inflame American society overnight. After a Floyd-style police-brutality video is released into social media, Chinese-controlled accounts could purport to offer bogus authentication in the form of “this is true, I was there.” Real human agents in the United States could even make themselves available for interviews in which they’d testify to a video’s veracity.

My example has focused on China. But Russia might use a similar tactic if it wished to preoccupy America before embarking on some new military adventure in, say, Ukraine. In both cases, these governments’ control over much of their respective national media and internet services would prevent the West from effectively retaliating in kind. Thus does the asymmetric nature of these information weapons favor dictatorships.

On the other hand, even as the spread of deepfake technology might help convince millions of people that fictitious events are real, its prevalence may also make it easier to convince people that real events are fake. There must be millions of embarrassing videos stored in cellphones all over the world, each of which could destroy a career (or marriage). Deepfakes will give all of us some degree of plausible deniability if such videos are made public.

The best defense against the spread of deepfakes would be to train computers to identify them, a task well suited to the branch of Artificial Intelligence known as adversarial machine learning. Through machine learning, a program refines a set of algorithmic parameters so as to align the algorithm’s output with some real-life, human-collated data set. For example, a program designed to recognize handwritten numbers would iteratively self-correct in such a way that its evolved code could correctly analyze the handwritten samples that (human) programmers had fed it as training stock. Assuming such a sample were large enough, the final code would be able to correctly analyze new input that hadn’t been pre-categorized by humans.

Under adversarial machine learning, two such programs compete against each other. In the deepfake example, one program would find the parameters associated with the best deepfakes. A second program would be fed the deepfakes created by the first, along with various real videos, with the goal of developing an algorithm that serves to distinguish the two. The first program, in turn, would self-refine in order to make even better deepfakes to fool the second program. And so on and so on. The key advantage of adversarial machine learning in this context is that both programs get better through competing with the other.
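To make the forger-versus-detector loop concrete, here is a minimal sketch in Python. It is a toy, not a deepfake system: "real videos" are stood in for by single numbers drawn around an assumed center (`REAL_MEAN`), the forger has one adjustable parameter (`shift`), and the detector is a simple logistic classifier with two parameters (`w`, `b`). All of these names and settings are illustrative assumptions. In the machine-learning literature, this kind of two-program contest is usually discussed under the name generative adversarial networks.

```python
import math
import random

REAL_MEAN = 0.0  # assumption: "real" samples are numbers centered here


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_adversarial(steps=2000, batch=64, lr=0.1, seed=0):
    """Alternate detector and forger updates; return (shift, w, b)."""
    rng = random.Random(seed)
    shift = -3.0     # forger's only parameter; its fakes start far from real
    w, b = 0.0, 0.0  # detector: P(real | x) = sigmoid(w * x + b)

    for _ in range(steps):
        real = [rng.gauss(REAL_MEAN, 1.0) for _ in range(batch)]
        fake = [shift + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Detector step: raise log D(real) + log(1 - D(fake)).
        grad_w = grad_b = 0.0
        for x in real:
            err = 1.0 - sigmoid(w * x + b)
            grad_w += err * x
            grad_b += err
        for x in fake:
            err = -sigmoid(w * x + b)
            grad_w += err * x
            grad_b += err
        w += lr * grad_w / batch
        b += lr * grad_b / batch

        # Forger step: raise log D(fake), i.e. make fakes look more real.
        grad_shift = sum((1.0 - sigmoid(w * x + b)) * w for x in fake) / batch
        shift += lr * grad_shift

    return shift, w, b


if __name__ == "__main__":
    shift, w, b = train_adversarial()
    print(f"forger's shift after training: {shift:.2f} (real center: {REAL_MEAN})")
```

In this toy setting, the forger's output drifts toward the real data because the detector keeps pointing out the difference, which is the same dynamic the paragraph above describes: each side improves only by competing with the other.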

To the extent that deepfakes are indeed a national security threat, as I believe they are, expect the US government and perhaps even Big Tech (led by Apple, Google, Amazon, Microsoft, Facebook, and Twitter) to assign resources to this kind of detection technology. Unfortunately, to the extent that adversarial machine learning is the approach used to create deepfake-detection ability, becoming good at identifying deepfakes will necessarily mean becoming good at creating them. Americans would have to hope that neither the government nor Silicon Valley ever exploits this technology to discredit opponents or otherwise advance their own interests.

While a deepfake George Floyd-like video could do temporary harm, the real danger is the long-term damage caused by exacerbating existing divisions. The Protestant Reformation of the 16th century was enabled by the then-new information technology of the printing press, which allowed dissenting thinkers such as Martin Luther to publicize their grievances against the Catholic Church. Had European society been unanimous in endorsing these grievances, the resulting reforms might have been peaceful. Unfortunately, that was not the case, and a lengthy period of religious war ensued. New advances in technology, analogous in some ways to the printing press, may make such centuries-old disagreements seem mild by comparison.

Consider the unsettling possibilities of an artificially intelligent printing press. GPT-3 is a text-generating program trained on the Internet. It was developed by setting a machine-learning program the task of predicting what comes next in a written paragraph. The program was trained by being presented with the first part of many texts, and then instructed to adjust its parameters so as to correctly predict what text would follow. GPT-3, consequently, can respond to prompts in a way that comes close to meeting human expectations. (Here is an example of GPT-3 responding to prompts that question whether a program such as GPT-3 can ever really understand what it is saying.)
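The "predict what comes next" training task can be illustrated with a far cruder stand-in. The sketch below simply counts which word follows which in a tiny, made-up corpus (`TOY_CORPUS`, an assumption for illustration) and predicts the most frequent follower. GPT-3 itself is a very large neural network whose parameters are adjusted numerically rather than by counting, so this shows only the shape of the task, not the method.

```python
from collections import Counter, defaultdict

# Hypothetical training text; GPT-3 was trained on vastly more data.
TOY_CORPUS = [
    "fake news is not new",
    "fake news spreads fast",
    "fake videos spread fast",
]


def train_next_word(corpus):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    for text in corpus:
        words = text.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_next_word(TOY_CORPUS)
print(predict_next(model, "fake"))  # news
```

Here "news" wins because it follows "fake" twice in the toy corpus while "videos" follows it only once. A program like GPT-3 plays the same guessing game, but over long passages and with enough parameters to capture far subtler patterns.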

Now imagine a future text-generating program, released by a hostile power, that’s designed not to respond as a human would, but to maximize anger. Specifically, the program sets its parameters so as to create as much controversy as possible with tweets and Facebook posts.

Machine-learning programs have achieved super-human capacity in several domains, including chess and the prediction of protein folding (an obscure-sounding field that is, in fact, vitally important to the development of new medical therapies). A program with a super-human ability to stoke anger and create divisions—call it AIMaxAnger—could greatly weaken society by giving us all new reasons to hate each other. (SlateStarCodex has a fictional story exploring this possibility.)

If AIMaxAnger would be profitable to deploy, then it’s only a matter of time before some domestic firm implements it. Lots of popular Facebook troll farms are run from Eastern Europe. But the lure of money is universal. And even if these troll farms didn’t exist, American-made ones would eventually pop up.

It might turn out that AIMaxAnger isn’t profitable, of course: Just as you might stop watching a horror film that’s excessively scary, people might sign off from social media if sufficiently enraged. Alternatively, even if AIMaxAnger attracted users, it could be unprofitable because the hostility the program generated made users angry at any advertisers they saw while interacting with the AI’s posts. An unprofitable AIMaxAnger might still be implemented by those hoping to weaken society, of course. But, fortunately, social media companies would have an incentive to block such programs. In this context, we could trust profit-seeking market actors to protect us from such malevolence.

Big Tech, as we now know it, is so dominated by American companies that the United States, and probably the rest of the West, likely won’t have to fear hostile foreigners attacking them via social media with the kind of ambitious AI-based strategy I’ve discussed. The real danger is that one of these companies would find out that there is no natural limit to the level of anger and division that can be monetized through artificially intelligent means. Sadly, it’s a hypothesis that most of us have done little to discourage.