
Truth, Polarization, and the Nature of Our Beliefs

Like other Americans, I’m depressed by the growing level of political partisanship. There seem to be a lot more people with extreme beliefs yelling at us. The ends of the belief spectrum are engorged, the center hollowed out.

It’s frequently alleged that extremists don’t care about truth, that they don’t even believe there’s a distinction between truth and falsehood. But that’s an unhelpful, and philosophically suspect, way of stating what’s wrong with extremism.

Most of us think of philosophy as an arcane subject remote from our day-to-day concerns, the playground of navel-gazers and other impractical people. But philosophy has a good deal to say about the nature of our beliefs, why some beliefs seem true and others false, why we find it so difficult to convince people to give up deeply held beliefs, and the real problem with extremism.

Beliefs as rules of action

From René Descartes in the 17th century through Immanuel Kant in the late 18th, philosophers tended to think of beliefs as pictures in the mind; if you believe it’s raining, for example, then you must have some sort of mental image of raindrops falling. But later in the 19th century, the American philosopher Charles Sanders Peirce, the founder of philosophical pragmatism, took a different tack: The important thing about beliefs was not that they were mental images, but that they were rules of action.

The essence of belief is the establishment of a habit; and different beliefs are distinguished by the different modes of action to which they give rise. If beliefs do not differ in this respect, if they appease the same doubt by producing the same rule of action, then no mere differences in the manner of consciousness of them can make them different beliefs, any more than playing a tune in different keys is playing different tunes. [Peirce quotations here and below are from his essays “The Fixation of Belief” (1878) and “How to Make Our Ideas Clear” (1879).]

The early pragmatists viewed each belief as embodying a distinct rule for specific actions; the belief that it’s raining, for example, would be a rule of action on the order of “Take an umbrella.” Contemporary pragmatists think in terms of systems of rules of action that cause us to take certain actions to achieve our desires: If I believe it’s raining and I don’t want to get wet, then I may take my umbrella, but whether I do will depend on my other beliefs—that my wife may need the umbrella, that a raincoat will be less bother, that I’ll only be outside briefly, that I’m likely to leave the umbrella in the restaurant, and so on. Belief systems plus desires yield actions. It’s a simple model that everyone uses.

If beliefs plus desires yield actions, people in different situations with different desires can be expected to differ in their beliefs and, consequently, their actions. Even though we might all want the same large things (a happy life, say), we can differ on less central desires; some will prefer quiet, others commotion. So there are good reasons why our beliefs differ. But within any single community, we might expect our beliefs about the world to trend toward uniformity. That doesn’t seem to be what’s happening.

Truth and correspondence

Most people assume that a belief is true only if it mirrors, or corresponds to, reality. But pragmatists reject this “correspondence” theory of truth. For them, beliefs are true to the extent they guide us to actions that help us satisfy our wants. If a belief is just a rule for action, then the proof of a belief is that it works—it helps us get to where we want to go. As Peirce put it,

It is certainly best for us that our beliefs should be such as may truly guide our actions so as to satisfy our desires; and this reflection will make us reject every belief which does not seem to have been so formed as to insure this result. … We may fancy that this is not enough for us, and that we seek, not merely an opinion, but a true opinion. But put this fancy to the test, and it proves groundless; for as soon as a firm belief is reached we are entirely satisfied, whether the belief be true or false. … The most that can be maintained is, that we seek for a belief that we shall think to be true. But we think each one of our beliefs to be true, and, indeed, it is mere tautology to say so.

While Peirce says that a belief must “truly guide our actions,” it’s clear that satisfaction of our desires is the test, not some notion of truth as correspondence to reality.

Ultimate agreement?

Peirce holds that true beliefs—true rules of action—are the ones you have at the end of the process of investigation. More precisely, they are the beliefs all investigators will hold if the process of investigation continues without limit.

The opinion which is fated to be ultimately agreed to by all who investigate, is what we mean by the truth, and the object represented in this opinion is the real. That is the way I would explain reality.

Just to be clear, neither Peirce nor I would deny that there is the same external reality for each of us, and that this external reality constrains our beliefs cum rules of action: We can’t just believe anything we want yet hope to satisfy all our wants. Eventually we’ll stub our toes, burn our fingers, or otherwise suffer the pains inflicted by an obdurate reality. But while the outside world may constrain our beliefs, it can’t determine them: There are many ways of conceptualizing our various portions of a single reality.

Peirce believed that our ongoing contacts with reality will “ultimately” cause us to agree. However, ultimate agreement—Peirce’s truth—is a long way off. So pragmatism leaves us with this problem: How can we reject extreme beliefs without falling back on the claim that they’re not “true”? Solving this problem will require a deeper dive into the dynamics of belief.

Beliefs from others

It’s hard to know what to do in this complicated world. Luckily, we don’t develop beliefs entirely on our own. Early in life we absorbed beliefs from our parents and teachers, and many of our beliefs still come from other people. When you run into one of life’s many perplexities, you’ll want to take your advice from someone familiar with that type of problem—a physician to mend your broken arm, an auto mechanic to make your car go, a local resident to point you toward Elm Street. We are all part of a social belief network.

Example: I’m not a climate scientist, so I lack first-hand acquaintance with the evidence for climate change. Few of my actions depend on my climate-change beliefs, and I have no way of testing the theory in my personal life. But as more and more of the sources I trusted were won over to the climate-change view, I came around. Not because I reviewed their evidence, but because I trusted their expertise.

We don’t always like the advice the experts give us: Does my car really need a new engine, my body a hip replacement? Must I give up drinking? But however we resist, we know from experience that we’re usually better off relying on expert advice. When I fly cross-country, I trust the expertise of the pilots, the aircraft mechanics, the air traffic controllers, and a host of others to get me there safely. We all depend on a vast network of experts, few of whom we know personally or whose jobs we could easily step into. Most of us have our own roles to play, and our playing them well benefits us all.

Our reliance on experts doesn’t always work perfectly: The chef may overcook the steak, the doctor prescribe the wrong medicine, the statesman blunder. People posing as experts may lack adequate experience, yet persuade us to hold beliefs that make it harder to satisfy our wants. But our social belief networks generally work, and the more extensive the networks, the greater the chances of satisfaction.

A role for belief conflict

Our belief systems aren’t adequate to give us all the things we want, so the systems are in constant flux. But belief systems won’t improve unless beliefs are constantly questioned, and effective questioning requires non-believers. Disagreement is therefore essential to the functioning of a belief community. When I get together with my friends, there are always matters on which we don’t see eye to eye. Indeed, the main reason for our getting together is to profit from exchanging, questioning, and defending our beliefs. Despite our disagreements, we remain friends, receptive to each other’s opinions.

Our belief communities aren’t monolithic. They encompass people who interact regularly but may have widely differing beliefs. While most community members take most of their beliefs from the mainstream parts of the network, there will be many disagreements within that mainstream: Mainstream beliefs aren’t unchallenged beliefs. But at some point a person’s beliefs may take them far from the mainstream. Flat-Earthers, anti-vaxxers, Holocaust deniers, and conspiracy theorists of various stripes have drifted into the stagnant backwaters of the belief community.

Beliefs can move in and out of the mainstream. For example, Kennedy assassination conspiracy theories began as mainstream beliefs, but are no longer. In contrast, continental drift theories eventually made their way from backwater to mainstream.

Beliefs are hard to change

People deeply committed to political, religious, or social views often seem immune to evidence-based counter-arguments. But empirical evidence never points unambiguously to a single conclusion. You can always hang on to a deeply held belief in the face of seemingly contrary evidence; you simply give up some less firmly held beliefs. As the philosopher W.V. Quine pointed out (in his influential 1951 essay, “Two Dogmas of Empiricism”):

The totality of our so-called knowledge or beliefs, from the most casual matters of geography and history to the profoundest laws of atomic physics or even of pure mathematics and logic, is a man-made fabric which impinges on experience only along the edges. … A conflict with experience at the periphery occasions readjustments in the interior of the field. Truth values have to be redistributed over some of our statements. … But the total field is so underdetermined by its boundary conditions, experience, that there is much latitude of choice as to what statements to re-evaluate in the light of any single contrary experience. … [A]ny statement can be held true come what may, if we make drastic enough adjustments elsewhere in the system. Even a statement very close to the periphery can be held true in the face of recalcitrant experience by pleading hallucination or by amending certain statements of the kind called logical laws. [emphasis added]

People occasionally change their core beliefs, but seldom as the result of rational argument. People may construct, or uncritically accept, a formidable set of reasons for holding their preferred beliefs. It can take a major psychic disturbance—often painful, occasionally joyous—to shift a core belief.

Developing a belief system adequate to our wants and situation is the main business of growing up. The psychic investment is large, and radical alterations are psychically expensive. Abandoning a belief may require discomfiting changes in conduct. Even beliefs only loosely connected to action may be firmly held within one’s social circle, so belief change can strain interpersonal relations. At the limit, adopting too many non-conforming beliefs may force you to hang out with different people, to become in effect a different person.

The power of groupthink

We often cut and trim our beliefs to conform to our group. As the political commentator Ezra Klein puts it (in Why We’re Polarized):

[R]easoning is something we often do in groups, in order to serve group ends. This is not a wrinkle of human irrationality, but rather a rational response to the complexity and danger of the world around us. Collectively, a group can know more and reason better than an individual, and thus human beings with the social and intellectual skills to pool knowledge had a survival advantage over those who didn’t. We are their descendants. Once you understand that, the ease with which individuals, even informed individuals, flip their positions to fit the group’s needs make a lot more sense.

While groupthink can be problematic, it’s more often a strength.

Extremism

As new forms of transportation and communication have spread, belief communities that had existed in relative ignorance of each other have begun to be integrated into a global belief community, a community still only partially realized. One consequence of this globalization of belief is that many people have been brought into contact with new and disturbing modes of thought. Beliefs turned out to be more diverse than we had imagined. We became aware that there were significant numbers of evangelicals and atheists, teetotalers and drunkards, medical doctors and anti-vaxxers, puritans and sluts. Polarization often starts here: Having our fundamental beliefs questioned by new foreign ideas makes some of us buttress our previous opinions by adopting fantastical non-mainstream beliefs. And as people accept more non-mainstream beliefs, they begin to isolate themselves from the rest of the belief community; today’s extreme political conservatives, for example, have retreated to partisan bunkers, refusing to engage with more moderate sources of information (as noted in Yochai Benkler et al., Network Propaganda).

Globalism

A belief network works best when there are lots of people developing beliefs on lots of subjects. For example, there are a host of human diseases, with new pathogens arriving regularly. Combatting them requires armies of researchers, doctors, nurses, and other medical professionals. Similarly for other human problems: The more people developing beliefs about them, the better off we’ll be. It’s astonishing how specialized we’ve become: The credits of a minor Australian independent movie I recently watched listed 147 job titles for 180 individuals and 29 organizations, plus dozens of individuals and organizations that were merely thanked.

Another characteristic of successful belief communities is the large role given to institutions that encourage the development and testing of opposing theories, the issuance of corrections and retractions, and the modification or abandonment of beliefs in the face of discordant evidence. A news organization, for example, may have a political orientation, but if it allows a range of views and corrects its obvious mistakes, what it publishes may sometimes be wrong, but it is not “fake.” Peirce may have been too optimistic about “ultimate” unanimity, but he was right to emphasize investigation and agreement; his truths are determined by groups that are open to modifying their beliefs in light of experience.

All sorts of problems are best approached on a global scale, and walling off beliefs advanced by people of other nations, races, religions, or political persuasions limits the extent of our informed action. Even if we have different beliefs about lots of things, there will still be many opportunities for fruitful cooperation. Within the global belief community, most of us, with our differing wants, are likely to find beliefs that work for us.

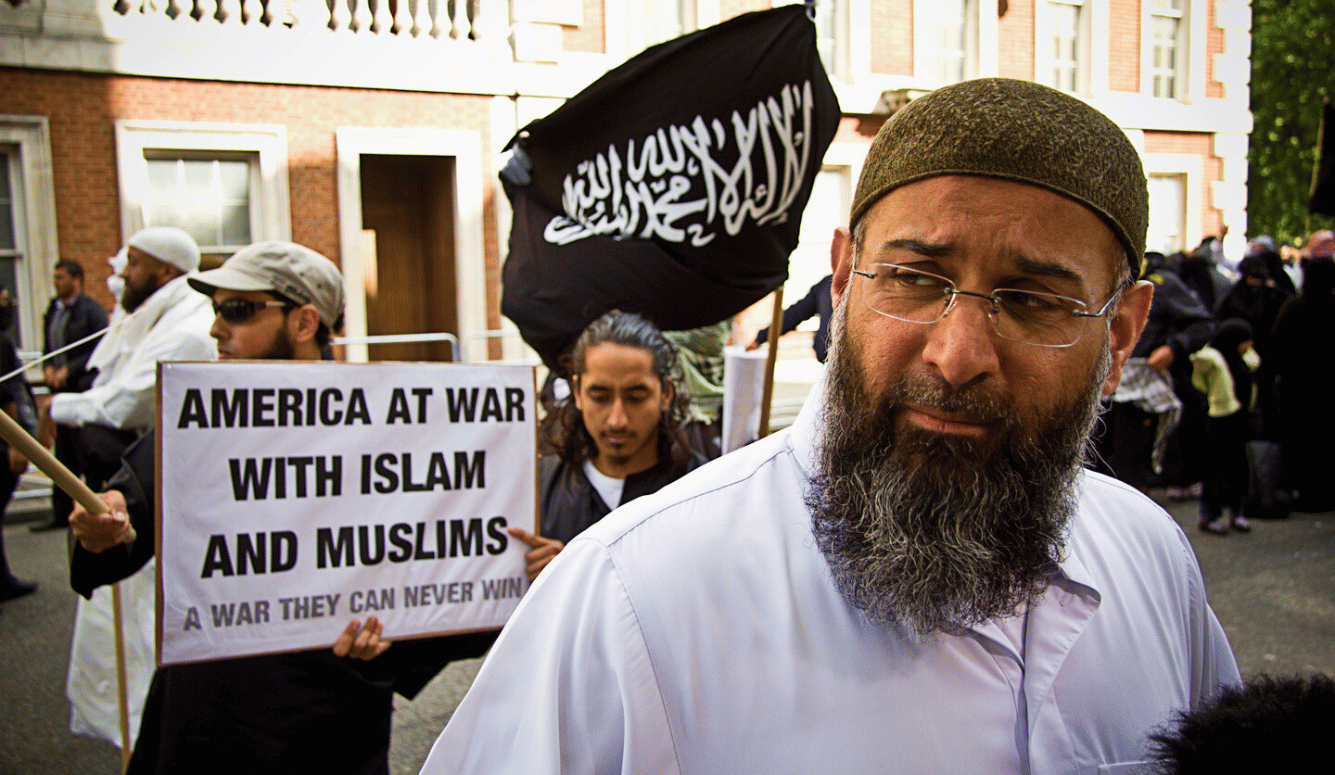

Since we don’t know how to satisfy all our wants, we’re better off living in a belief community that can generate diverse beliefs that are constantly questioned and tested. It’s the kind of community that makes extremists uncomfortable. And here we come to the fundamental problem with extremism: It’s not the extremists’ outlandish views that are the problem—it’s not unusual for people to cluster around opposing theories—but the extremists’ refusal to engage with the rest of the belief community. We all prefer that others agree with us rather than disagree—but extremists tend to extremes in this regard: They refuse to engage with people who disagree with them, to admit that they could be wrong. They want to destroy the global belief community, not simply be a discordant part of it. Their willed isolation bars them from the progress in beliefs and actions that we (and they) want. We may see the Amish and other self-isolating groups as quaintly interesting, but we’re happy there aren’t more of them, and that they’re not yelling at us.

So the problem is not that the beliefs peddled by extremists aren’t true (what could that mean?), but that the extremists have walled themselves off from the rest of the belief community. We are, most of us, engaged in a grand cooperative project to give us useful beliefs. We accept disagreement as an inevitable, even helpful, part of the project. Extremists, however, want no part of that project: They would destroy the project entirely rather than have their beliefs questioned.

Why the anger?

We can explain why there’s a broad range of beliefs and why beliefs are so hard to change. But how do we explain the anger that can accompany polarization?

Part of the answer is that the potential for anger is built into our modes of explanation. We have two different ways of explaining events. One way relies on material factors and physical laws: “The water boiled (or the meat cooked) because the flame heated it.” The second way relies on beliefs, wants, and other psychological traits: “He crossed the road because he wanted to get to the other side.”

We generally favor the psychological mode to explain human actions, and the material mode to explain inanimate events. It’s usually problematic to propose a psychological explanation for a material event, as when pre-scientific peoples explain a violent storm as caused by an angry god. It’s an error we can still be prone to: I stub my toe on a table leg, and for a moment I’m angry at the table. A more insidious misuse of psychological explanation is to see events that result from the uncoordinated actions of many individuals on the model of the consciously planned actions of a single person.

People prone to conspiracy theories often see important events as not just happening, but as the outcomes of conscious plans. For example, some Americans tried to explain the COVID-19 pandemic as deliberately caused by Chinese scientists, while some Chinese media sources claimed that the pandemic was engineered by America’s Central Intelligence Agency.

Many Americans have been upset by events over the last few decades: The United States has turned out to be less white, less Christian, less prosperous, more promiscuous, and less of a world leader than they had anticipated. Candidate Trump’s 2016 campaign slogan, “Make America Great Again,” capitalized on these thwarted expectations.

Many who had looked forward to a more comforting country didn’t just feel that their future had been lost: Rather, they believed it had been stolen by a sinister cabal. Applying psychological explanations to uncoordinated and distressing events left many people feeling betrayed and receptive to conspiracy theories.

Anger helps explain why extremists isolate themselves from mainstream beliefs: They feel that mainstream believers are immoral. Just as we don’t want to listen to neo-Nazis, today’s extremists don’t want to listen to immoral (to them) moderates. From The Protocols of the Elders of Zion to the Pizzagate follies, extremists have often accused their enemies of being monsters who prey on children—that is, people you shouldn’t talk to. It’s a sadly familiar trope.

Evolving belief

Heavily influenced by Darwin, the pragmatists sought to show how, in keeping with evolutionary theory, successful beliefs would push out unsuccessful ones. But government leaders who hold particular beliefs may try to outlaw criticism of them. Controlling belief by barring competing ideas has become more difficult, however, as we’ve become more interconnected. The early Catholic worldview remained unquestioned for the best part of a millennium, but post-World War II communist ideology survived less than 50 years, despite massive Soviet repression.

Globalization isn’t going away, and neither are the advantages of a global belief community. Humans have wants, and a belief system’s failure to satisfy those wants will eventually lead to its replacement by a more successful belief system. Things won’t change overnight, but they will change. Just give it time.