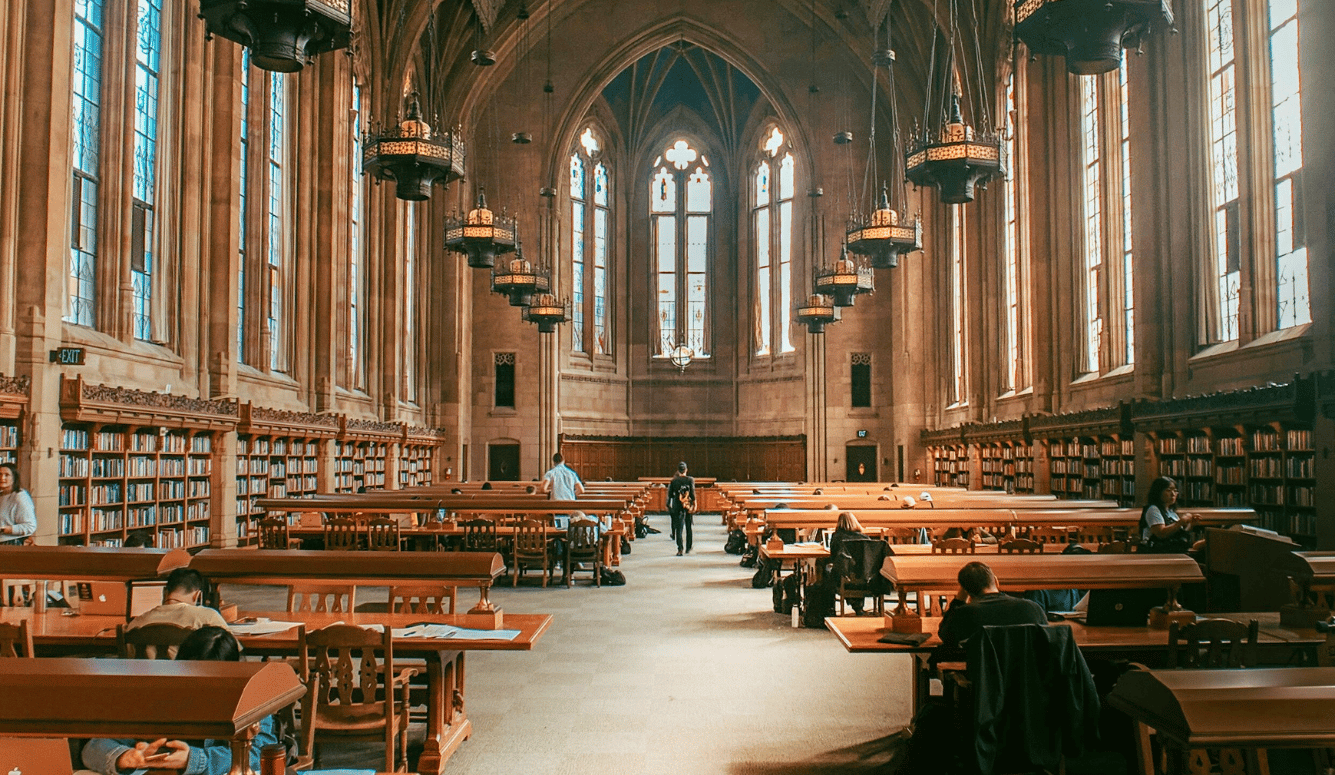


Against Research Ethics Committees

The guidelines ethics committees follow present themselves as universal rules derived from reason.

Author Note: This article is based on a presentation at the Economic Society of Australia Annual Conference and draws on an earlier discussion of an incident with an Australian university ethics committee (“Why Ethics Committees Are Unethical,” Agenda 10/2, 2002). The views expressed here are personal and should not be attributed to the organisations with which I am affiliated.

Ethics committees have been part of the life of medical researchers for some decades, based on guidelines that flow from the World Medical Association’s 1964 “Declaration of Helsinki.” This declaration was aimed at physicians and draws heavily on the Nuremberg Code developed during the trials of Nazi doctors after WWII. It has been joined by more recent guidelines, such as the “International Ethical Guidelines for Biomedical Research Involving Human Subjects” prepared by the Council for International Organizations of Medical Sciences (CIOMS) in collaboration with the World Health Organization (WHO), and many others.

In Australia, the National Health and Medical Research Council (NHMRC) issued its first Statement on Human Experimentation in 1966. The current set of NHMRC guidelines, now issued jointly with the Australian Research Council (ARC), is the National Statement on Ethical Conduct in Research Involving Humans, issued in 2007 and currently under review. Most English-speaking countries have similar procedures. The US has had Institutional Review Boards (IRBs) since the early 1970s. European countries often have even stricter rules.

It is interesting to read the brief histories in these documents and the supporting literature they have generated, where we can learn of the growth of ethics guidelines and approval procedures as a march from barbarism to civilisation, driven by lurid tales of ethical scandal. CA Heimer and J Petty’s work suggests these are classic Whig histories (that is, stories celebrating the inevitability and rationality of progress) and that such histories, along with tales of ethics committees as a response to harms caused by researchers, fail to consider the power of the “ethics industry” or “Big Ethics” and the alliance between ethics professionals and managerialism in universities.

We read of the 1932–1972 Tuskegee study in the US of untreated syphilis among poor Black men; the Willowbrook State School study in which intellectually disabled children were deliberately infected with hepatitis; Stanley Milgram’s experiment on obedience to authority, in which volunteers were instructed to deliver what they thought were electric shocks to another participant; the Tearoom Trade study, in which the experimenter obtained information under false pretences from men seeking casual sex with other men; and the since-discredited Stanford prison experiment, in which volunteers were assigned warder and prisoner roles and seemed to lose something of their humanity in the process. Milgram’s experiment was conducted in the early 1960s, the last two around 1970, and the others came to light in the US at around the same time.

An obvious problem with these histories of ethics committees as a prudent response to harms is that these and other scandals are concentrated after the growth of ethics regulation in the US, so their causal role is questionable. Another is that there is no mention in the histories of the internal dynamics of the growth of the ethics bureaucracy—of the agency of regulators and committee functionaries whose livelihoods and advancement depended on that growth.

An Inappropriate Medical Model for Social Science Research

What is new is not the rules, but the attempt to expand the reach of the rules to non-medical researchers, including economists. The argument seems to be that non-medical research also involves humans, or at least affects humans, and so should be subject to procedures similar to those for medical research. The recent Oxford Handbook of Professional Economic Ethics, edited by George DeMartino and Deirdre McCloskey, reflects the growing concern among economists and other social scientists with ethics regulation.

There is prima facie evidence that the medical model is inappropriate to economic studies. One example is the treatment of monetary incentives. Experimental economics, for which Vernon Smith was awarded a Nobel Prize, accounts for a significant and growing share of economics research. The ARC/NHMRC rules take a dim view of offering money to subjects participating in experiments, for reasons one can understand in a medical context. However, experimental economics often and properly investigates how subjects respond to monetary incentives of various kinds in different settings. Common sense often prevails eventually in the oversight of such experiments, but this is just one example of the problems created when medical rules are applied outside their original context.

Another example is randomised trials, now supposedly the gold standard for social policy research. In a randomised social policy trial, some subjects are assigned the treatment (for example, a particular job search assistance program) while a control group is not, and the researcher compares the outcomes of the two groups. Randomised trials of medical treatments have a long history and, unlike social policy trials, often carry some risk of harm, caused either by administering or by withholding medical treatment. Ethics committees following ARC/NHMRC procedures designed for medical trials apply excessive and misplaced scrutiny to social policy trials.

Furthermore, economics and the other social sciences that receive attention from human research ethics committees are characterised by competing theoretical approaches and controversy, much more so than the relatively methodologically unified medical sciences. It is difficult to see how ethics committees can make informed judgements about competing theoretical approaches in economics.

Another potential problem comes from an ethics committee’s requirement to scrutinise the content of the research and ensure benefit to the communities in which research is undertaken. One can imagine a zealous ethics committee member objecting to anything that has a whiff of the evil neoliberal economic order in which economists and their models are supposedly complicit.

A Particular (and Poorly Defended) Approach to Moral Philosophy

The guidelines ethics committees follow present themselves as universal rules derived from reason. This approach to ethics is presented as obvious and indisputable, but it is not clearly or fully described, let alone compared to alternatives or adequately defended in any set of human research ethics guidelines I have seen.

For instance, the Australian ARC/NHMRC guidelines begin with some trite musings, which are worth quoting in full:

Since earliest times, human societies have pondered the nature of ethics and its requirements and have sought illumination on ethical questions in the writings of philosophers, novelists, poets, and sages, in the teaching of religions, and in everyday individual thinking. Reflection on the ethical dimensions of medical research, in particular, has a long history, reaching back to classical Greece and beyond. Practitioners of human research in many other fields have also long reflected upon the ethical questions raised by what they do. There has, however, been increased attention to ethical reflection about human research since the Second World War.

They then ask, “But what is the justification for ethical research guidelines as extensive as this National Statement, and for its wide-reaching practical authority?” Unfortunately, the answer involves no actual ethical argument, offering instead an enthusiastic defence of the range of issues and level of detail in the new guidelines.

The philosophical approach has to be inferred from the detailed guidelines, and to the extent that it is coherent, it is a mixture of utilitarian reasoning and absolute rules of Kantian origin, derived from some unarticulated basis of human rights. Absolute rules do not sit easily with consequentialism (the view that an action is acceptable if its good consequences outweigh the bad), of which utilitarianism, economists’ favourite moral philosophy, is a special case.

The common threads in the ARC/NHMRC guidelines are universality and rules. However, formulating universal rules is only one approach to ethics among many, and certainly not an uncontroversial one. For instance, it is sharply inconsistent with the virtue ethics advocated by Alasdair MacIntyre, among others.

My criticism of rule-based systems of ethics does not imply that rules and professional sanctions are unnecessary. They are of practical value in providing a focus for ethical reflection, in guiding economists towards the good, and in sustaining ethical culture. It is good to see economics journals insisting on conflict of interest and funding disclosures, and better still to see breaches treated as a mark against one’s professional reputation. Esteem is a powerful motivator in academic and professional life. Perhaps even a code of ethics for economists, as discussed by George DeMartino, has a place.

If the philosophical arguments for the committees’ particular approach to ethics are absent or deficient, then what explains their approach? Ethics guidelines like those of the ARC/NHMRC emerged from a bureaucratic process, and bureaucracy needs stable universal rules to function efficiently. Precedent and verifiability of decisions are essential to the proper functioning of committees and to minimising risk for the participants, that is, the people involved in ethics approval processes rather than the research subjects. Those who run the approval system seem to have given themselves a set of rules that suits the growth of the ethics industry. It is hard to see how they could operate with some of the ethical alternatives available.

Perverse Incentives

Committees are not very good at ethical deliberation, especially those operating in a quasi-legal manner. It is even worse when committee members are subject to the reward structures of contemporary universities.

One problem is the asymmetry between the consequences of wrongly approving a proposal and those of wrongly rejecting one. A committee that accepts a proposal which turns out badly (for example, one that produces a scandal reported in the press) will suffer serious consequences. However, being over-cautious and rejecting a proposal that should have been accepted is likely to have minimal consequences for the committee, and occasional complaints from researchers may even reassure senior university administrators that the committee is doing a good job. This asymmetric reward structure suggests that committees will systematically err on the side of caution.

Another problem is ratchet effects. In a rule-based system, it is far easier to invent new rules (scandals lead to a flurry of this sort of activity) than to delete or ignore bad ones. The dynamics of the system will tighten ethical requirements on researchers over time and extend their reach, regardless of whether this is appropriate.

Self-righteousness is another danger. Ethics committee members may enjoy the warm inner glow that comes from their adherence to a set of ethical rules while the costs of this adherence are imposed on researchers and the general public. Still another is assertiveness—the understandable desire of the committee to avoid being seen as a rubber stamp. This, like the other tendencies, will lead to excessively tight rules and over-zealous committees.

Evasion, Substitution, and Jurisdiction Shopping

It is possible that increasing the strictness of research ethics rules could actually lower the average ethical standard of economics research. If regulation is sufficiently burdensome and distasteful to researchers, they will seek to evade it.

Moreover, the increased cost of so-called ethical research will lead researchers to substitute research that is not, thereby reducing the average ethical standard of the research conducted. The strength of this effect will depend on how well universities can monitor research for ethical compliance. Another possibility is that research will move from institutions with strict ethical approval procedures to those with lax procedures or lax monitoring of compliance, and this too will drive down ethical standards.

Research may also relocate overseas to jurisdictions with weaker procedures or monitoring. We are already seeing this with some types of medical research, either because they do not fit the ethical predilections of Australian committees or because the conditions the committees impose make the research much more costly to conduct in Australia.

As well as affecting the location of research, the activities of ethics committees distort the selection of research topics and methods. Researchers today are loath to undertake projects that are at risk of being disallowed or gutted by ethics committees (especially risky projects that are likely to require a large investment of time before this is known). In economics, such projects might include experimental work, direct interaction with market participants to ascertain their motivations, attention to the informal sector, and so on. This is the kind of research that many leading economists consider more valuable than blackboard theory or sitting at a computer massaging traditional data sets. Committees have no taste for the risk and innovation that push the economics profession forward and generate breakthroughs in our understanding.

Undermining Virtue, Trust, and Responsibility

My most fundamental concern about ethics committees is that they undermine ethical culture and weaken virtue by encouraging economists to treat ethics as someone else’s problem. Passing ethics on to others means economists lose the habit of ethical reflection, except for the most minimal calculation of what will get past the committee. Ethics becomes trivialised as a bothersome constraint on research. Ethical communities disintegrate as they are made redundant by a coercive, centralising committee process. A division of labour develops in which the researcher does the work and a distant committee ensures adherence to certain ethical rules.

Trust between researchers and their institutions is undermined by the message the procedures send to researchers: that they are neither competent nor responsible enough to make ethical judgements. Perhaps ethics committees will have the same effect on researchers’ ethical capacities as long-term welfare dependency has had on the work capacities of its recipients.

Weakness in the Face of Power

Is it just the ethics committee that stands between us and the horrors of Nazi medical experimentation and the collapse of civilisation as we know it, as some in the ethics industry suggest?

Suppose the Nazis had a Human Research Ethics Committee, and that all research had to be duly approved. It is unlikely that there would have been much difficulty in Nazi Germany in finding enough people of the required types (that is, at least eight members, including a lawyer, a doctor, a non-scientist, and a minister of religion) to sit on a properly constituted Human Research Ethics Committee under the ARC/NHMRC guidelines and approve Nazi experiments. A culture that generates those acts would have no difficulty generating committee members prepared to endorse them and construct justifications under almost any set of general principles. “Informed consent” could be obtained, harm is of course minimal as the experiments are not dealing with people, benefits for the state are great, and so on. In fact, Mengele’s notorious twin experiments at Auschwitz were approved by the Research Review Committee of the Reich Research Council. Ethics approval procedures and guidelines would seem to provide little real protection if the ethical culture is compromised or suppressed by power, as it was in Nazi Germany.

As well as providing evidence that the existence of ethics committees and approvals means little, the Nazi era cautions that well-intentioned principles implemented by a bureaucratic state are not just meaningless but potentially dangerous. The Nazi Doctors and the Nuremberg Code: Human Rights in Human Experimentation, edited by George Annas and Michael Grodin, documents the rise of the racial hygiene movement in pre-WWII Germany, suggesting that it was developed by well-meaning doctors and became “orthodox” in the German medical community before the Nazis came to power. Such an orthodoxy in the medical community could only become deadly when it gained the support of the Nazi state and was implemented with the help of the state’s efficient bureaucracy and coercive power. Annas and Grodin’s book also discusses the way “mercy killing” evolved out of its original medical context into a social doctrine that justified the gassing of Gypsies, Jews, homosexuals, and other “undesirables.” Particularly interesting is the way ethical debate and individual dissent were crushed as these principles were appropriated, twisted, and absolutised by a bureaucratic state. Committees functioned to co-opt doctors and spread Nazi ideology rather than to restrain evil.

It is hard for the sort of bureaucratic procedures we have in ethics committees to do anything other than enforce and reinforce the ethics of the currently powerful. The Nazi experience is not the only example: as Heimer and Petty point out, the Stanford prison experiment and other scandals were approved by ethics committees. The problem was not that norms were violated but that norms change.

Contemporary examples of weakness in the face of power are readily available. Approvals from Australian committees seem quicker and less troublesome when projects are supported by large amounts of competitive research income, which is vital for contemporary Australian universities. Here, the risk-minimisation imperative of the ethics committee member is to avoid awkward and perhaps career-limiting conversations with their university’s research managers.

Another risk for the ethics committee is research about the university itself, which may embarrass senior managers on whom the committee member’s future prospects depend. Recently, in Australia, we have seen the extraordinary tale of the University of New England debauching ethics approval processes to block research into bullying at that university, and the sordid story of the University of Queensland’s treatment of economist Paul Frijters.

Conclusions

Ethics committees are not about ethics at all. They are about university managers insuring themselves against risk, since committee approval gives managers a defence should things go wrong, and about augmenting the incomes and careers of those in the ethics industry. This is what we are buying with large amounts of public money and with the opportunity costs of research delays and distortions.

It is interesting to ask whether the activities of ethics committees would be allowed under their own criteria. They certainly have not sought the informed consent of researchers; what researchers face instead is fairly heavy-handed coercion. And it is hard to see how their activities would pass the utilitarian cost-benefit test.

Abolishing the ethics approval processes or making them voluntary should be on the agenda of policymakers looking to improve the value for money we get from higher education, though such a suggestion would no doubt elicit protest from the ethics industry. A more realistic possibility would be restricting ethics approval processes to the original medical context. Most of the issues in non-medical research can be dealt with by existing privacy legislation and normal professional processes.

As an economist, I can’t help thinking that competitive markets could be used to improve the efficiency with which ethics applications are dealt with, and to reduce the scope for abuse of process by researchers’ institutional managers. Consider allowing researchers to submit to any properly constituted ethics committee, not necessarily one at their own institution. Committees could charge for applications for ethical clearance; for a market to operate well, the charges would have to apply equally to applications from insiders. Ethics committees that impose requirements which cannot be defended according to the guidelines, process applications too slowly, or charge too much would quickly go out of business. All committees would, of course, need to be monitored for compliance with rules on committee composition, proper consideration of applications according to the guidelines, and conflicts of interest, just as they must be at the moment. I see no reason why the market could not be extended to non-university committees, and even to for-profit providers of ethical clearance.

It would be good to see more discussion of the university as an ethical community and better ethical leadership from senior academics, university administrators, and senior members of the economics profession. Such ethical leadership is not going to be generated from ever more zealous ethics committees.

Ethical education is important, provided it is serious rather than just giving social scientists the philosophical tools to justify dubious actions, or instruction on how to get through the committee. Ethical formation is perhaps a better way of putting it than ethics education. Formation involves not just stocking the intellect but developing the personal and communal disciplines that sustain a good life. It works better if we are reaching for a good rather than being motivated by fear and seeking to clear a fairly low bar (low in its substantive demands, if not in its bureaucratic ones). It is more powerful if it is integrated into the professional life of economists.

More discussion is needed of ethical leadership and education among social scientists, as we attempt to reclaim ethics from the bureaucratic ethics industry.