The Other Crisis in Psychology

Psychological scientists recognize unwarranted causal inferences when evaluating others’ research but miss them in their own, perhaps because of ideological and self-serving biases.

In July 2019, Christopher Ferguson published an article in Quillette on the replication crisis in psychology. As an academic psychologist, I appreciated his clear and concise discussion of some of the difficult issues facing psychology’s growth as a science, including publication bias and the sensationalizing of weak effects. I believe a related, but perhaps less-recognized, illness plagues psychology and related disciplines (including the health sciences, family studies, sociology, and education). That illness is the conflation of correlation with causation, and the latest research suggests that scientists, and not lay people and the media, are the underlying culprits.

Correlation and Causation

We have probably all heard the cliché “Correlation is not causation.” Of the criteria for documenting that one variable causes a change in another variable, correlation is just the first of three.

That is, the first criterion for documenting that one variable causes a change in another variable is evidence that the two variables covary: as one goes up, the other tends to go up as well (a positive correlation; for example, students who score high on the SAT tend to also have a higher GPA in college),1 or as one variable goes up, the other tends to go down (a negative correlation; for example, people who have a stronger interest in working with people vs. things are less likely to major in inorganic disciplines such as computer science and physics).

The second criterion is that of temporal precedence: the presumed cause must come before the presumed effect. For example, people who are spanked during childhood tend to score lower on IQ tests during adolescence.2 Descriptions of temporal precedence tend to evoke cause and effect interpretations. For example, in the context of spanking and IQ, it is tempting to infer that spanking causes lower IQ. However, temporal precedence is necessary but not sufficient for inferring causality. As Steven Pinker described in The Blank Slate, if you set two alarms when you go to bed, one for 6:00am and the other for 6:15am, and the first alarm reliably goes off before the second alarm, you will have evidence of systematic covariance and temporal precedence, but that doesn’t mean that the first alarm caused the second alarm to go off. Likewise, spanking in childhood occurs before the measurement of IQ in adolescence, but that doesn’t provide evidence that spanking causes lower IQ. The tendency to infer causality from temporal precedence appears to underlie belief in the well-refuted myth that vaccines cause autism3: Because vaccines are given before symptoms of autism reveal themselves, people are quick to mistakenly assume that the vaccines cause autism. By this logic, everything from crawling to walking is a cause of autism.

The third criterion is of utmost importance. To infer causality, researchers must address potential confounding variables—variables other than the presumed cause that could account for the association between the presumed cause and effect. In the case of spanking and IQ, for example, one can entertain all kinds of potential (and non-mutually exclusive) confounds: living in a high-stress, poverty-stricken environment could lead to both being spanked and suboptimal development of cognitive ability; lower parental IQ could be accounting for both the use of corporal punishment and children’s lower IQ scores; pre-existing low IQ scores in children could lead to both being spanked and continued lower IQ scores into adolescence; etc. To make the case for a specific cause (such as spanking), the cause must be isolated and then, via random assignment, imposed upon some individuals and not others (or varying levels of the cause must be imposed on different groups of individuals). Generally, this is accomplished through experimental design that includes manipulation of the presumed cause followed by measurement of the variable that is predicted to be affected by the manipulation.
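To make the logic of a confound concrete, here is a minimal simulation sketch in Python. Everything in it is invented for illustration: the variable names (`stress`, `spanking`, `iq`), the standardized scales, and the 0.7 weights are assumptions, not estimates from any study. In this toy world the confound drives both variables, and spanking has no effect on IQ at all.

```python
import random
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def simulate_confounded(n=5000, seed=0):
    """Return (confound, presumed_cause, presumed_effect) for n simulated children."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        stress = rng.gauss(0, 1)                   # hypothetical confound
        spanking = 0.7 * stress + rng.gauss(0, 1)  # driven by the confound
        iq = -0.7 * stress + rng.gauss(0, 1)       # driven by the confound, NOT by spanking
        rows.append((stress, spanking, iq))
    return rows

rows = simulate_confounded()
r_all = pearson([r[1] for r in rows], [r[2] for r in rows])

# Hold the confound (nearly) constant: keep only children with similar stress levels.
similar = [r for r in rows if abs(r[0]) < 0.1]
r_within = pearson([r[1] for r in similar], [r[2] for r in similar])

print(f"overall r = {r_all:.2f}; r among similar-stress children = {r_within:.2f}")
```

Across the whole simulated sample, spanking and IQ are negatively correlated even though neither influences the other; among children with nearly identical stress levels, the association largely disappears. Real data rarely offer a single, perfectly measured confound, which is why random assignment matters.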

No ethical researcher plans on randomly assigning parents to engage in varying degrees of corporal punishment to assess its isolated effects on children’s IQ. But other questions about humans can be tackled with experiments. For example, researchers who want to test the hypothesis that playing violent games increases aggression have used experimental designs4 in which some individuals are randomly assigned to play a violent video game for a specified period of time and others are assigned to play a similarly arousing but non-violent video game; after imposing the manipulation, individuals’ aggression is measured.

A controlled experiment—in which a specific causal variable is manipulated by the researcher, participants are randomly assigned to experience different levels of that manipulated variable, everything else is held constant, and the effects of that manipulation are then measured objectively—is the “gold standard” for documenting causality. Notably, documenting that a variable has a causal impact on another variable does not mean that it determines that other variable. In the case of violent video games and aggression, there may be evidence that exposure to violent video games has short-term influence on aggressive thoughts,5 but exposure to violent video games doesn’t determine how aggressive people are; it is just one of many variables that influence aggression.
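The value of random assignment can also be sketched with a small simulation. The setup is hypothetical: `trait` stands in for any pre-existing characteristic that affects both who chooses to play violent games and how aggressive people are, and the `true_effect` of 0.3 is an arbitrary number chosen for the example, not a published effect size.

```python
import random

def mean(values):
    return sum(values) / len(values)

def group_difference(assign, n=20000, true_effect=0.3, seed=1):
    """Mean aggression of exposed participants minus that of unexposed participants."""
    rng = random.Random(seed)
    exposed_scores, unexposed_scores = [], []
    for _ in range(n):
        trait = rng.gauss(0, 1)        # hypothetical pre-existing aggressiveness
        exposed = assign(trait, rng)   # True if the person plays the violent game
        aggression = true_effect * exposed + trait + rng.gauss(0, 1)
        (exposed_scores if exposed else unexposed_scores).append(aggression)
    return mean(exposed_scores) - mean(unexposed_scores)

def self_selected(trait, rng):
    # Observational world: more aggressive people are more likely to choose the game.
    return trait + rng.gauss(0, 1) > 0

def randomized(trait, rng):
    # Experiment: a coin flip decides exposure, so exposure cannot depend on the trait.
    return rng.random() < 0.5

observational = group_difference(self_selected)
experimental = group_difference(randomized)
print(f"observational difference = {observational:.2f}")
print(f"experimental difference  = {experimental:.2f} (true effect is 0.3)")
```

When people select their own exposure, the group difference mixes the effect of the game with the pre-existing trait and overstates the effect several times over. When a coin flip decides exposure, the group difference lands close to the effect that was built into the simulation.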

Perhaps the distinction between correlation and causation makes perfect sense to you. Lucky you, because you are not in the majority. The tendency to conflate correlation and causation is well-known and discussed widely in books on logical thinking (such as Keith Stanovich’s What Intelligence Tests Miss: The Psychology of Rational Thought) and biases in thinking (such as Michael Shermer’s Why People Believe Weird Things).

Several years ago, my students and I published systematic evidence that the tendency to conflate correlation with causation occurs regardless of how educated people are. In one study, for example, we gave a group of community adults a hypothetical research vignette that described a correlational study of students’ self-esteem and academic performance, in which both variables were measured (observed) and neither was manipulated. To another group of participants, we gave a hypothetical research vignette that described an experimental study in which students’ self-esteem was manipulated (that is, by random assignment some students received self-esteem promoting messages and some students did not) and then the students’ academic performance was measured. For both groups of participants, the research vignette concluded with a statement that the study revealed a positive correlation between self-esteem and academic performance. Then, we asked the participants what inferences they could draw from the finding.

The participants in the two groups were equally likely to conclude that self-esteem leads to academic success, even though participants who read about the correlational study should not have drawn that conclusion. Moreover, participants who read about the correlational study were similarly likely to draw an erroneous causal inference regardless of how educated they were! (The inference that self-esteem enhances academic performance, by the way, actually goes against the latest science, which shows quite clearly that if self-esteem and academic success are causally connected, it is academic success that precedes self-esteem, not the reverse!)6

The Language of Causality

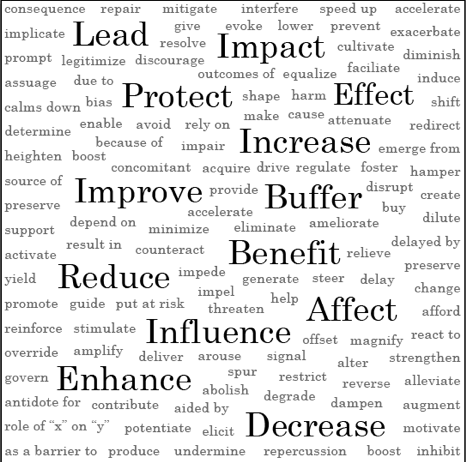

As a likely manifestation of the human bias toward inferring cause and effect, there are far more ways to describe cause and effect associations than there are ways to describe non-causal associations. When my colleagues and I pored over several hundred journal articles in psychology, we found more than 100 different words and phrases that were used to denote cause-and-effect relationships. These are shown in the accompanying word cloud, with the most commonly used words in the largest font.

There are probably hundreds of ways of denoting cause and effect relationships, and the reason this is important is that people don’t really know what does and does not qualify as causal language,7 nor (as I described above) do they recognize the conditions under which causal language is warranted. So, if a description of research findings uses causal language without justification, the reader is unlikely to realize it, and hence they will be misled without having a clue they are being misled.

Scholars have repeatedly blamed the media for inappropriate use of causal language. In 2016, when Brian Resnick of Vox asked famous psychologists and social scientists what journalists get wrong when writing about research, conflating correlation and causation topped the list. Indeed, unwarranted causal inferences abound in the media. A quick search on nearly any news site will reveal headlines like “How Student Alcohol Consumption Affects GPA” and “Sincere Smiling Promotes Longevity” and “For Teens, Online Bullying Worsens Sleep and Depression,” all of which are causal claims made on the basis of non-causal (correlational) research with measured variables.

Recently, though, several studies have shown that unwarranted causal language begins with scientists themselves. For example, in medicine, one extensive review showed that over half of articles about correlational studies included cause and effect interpretations of the findings.8 And in education, a review of articles published in teaching and learning journals found that over a third of articles about correlational studies included causal statements.9 In psychology, my colleagues and I conducted two studies that reinforced the ubiquity of the problem. First, we reviewed a random sample of poster abstracts that had been accepted for presentation at an annual convention of the premier professional organization in psychology, the Association for Psychological Science. We were disappointed to find that over half of the abstracts that included cause and effect language did so without warrant (i.e., the research was correlational). Of course, poster presentations are held to a less rigorous standard than are formal talks or published journal articles, so in a follow-up study, we reviewed 660 articles from 11 different well-known journals in the discipline. Our findings replicated: over half of the articles with cause and effect language described studies that were actually correlational; in other words, the causal language was not warranted.

When I submitted our analysis of unwarranted causal language to a journal published by the Association for Psychological Science, the journal editor dismissed the submission, saying the human tendency to conflate correlation with causation is already well-known. Well, it may be a well-known bias, but it is obviously not easy to address if it is rampant in the poster presentations of one of psychology’s most popular professional conventions and just as prevalent in highly respected journals in the discipline. (We did proceed to publish our findings in a different journal whose editor asked us to submit to them.)

Failing to Consider Confounds

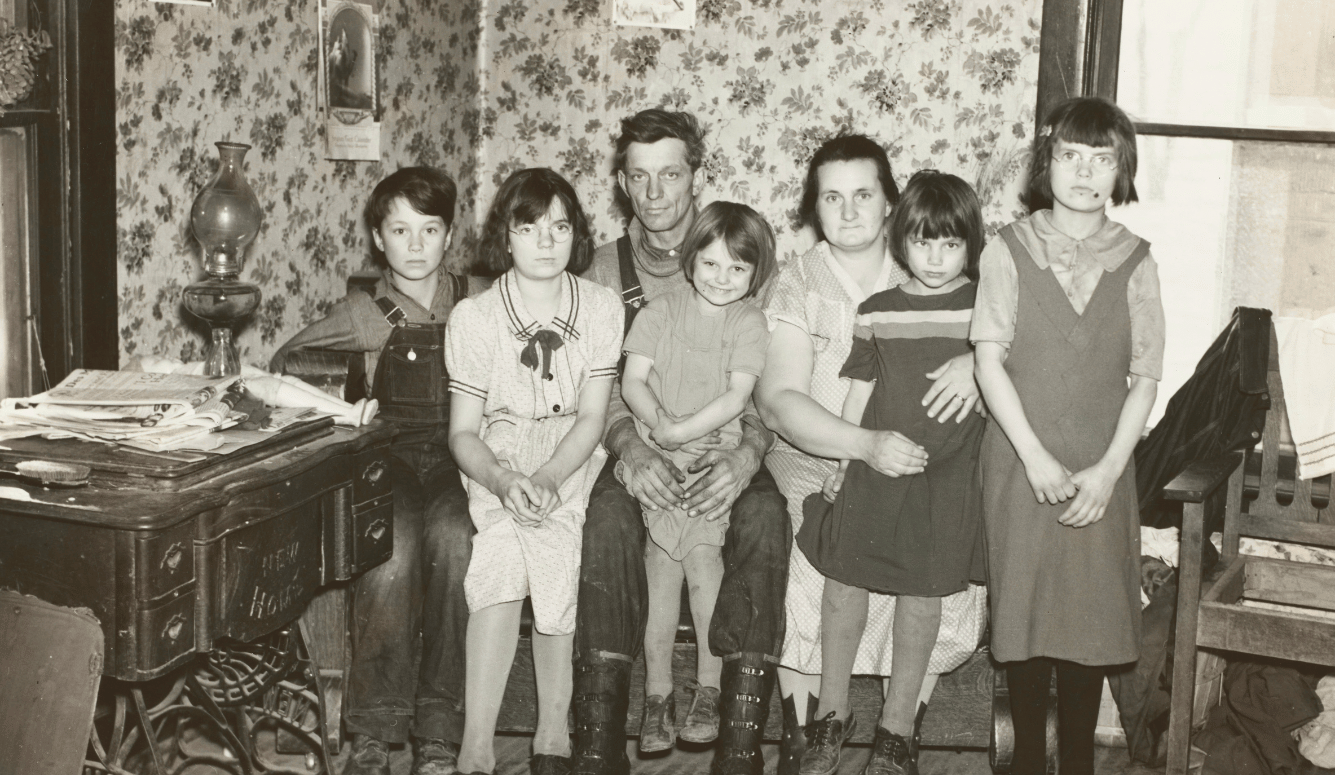

The failure to consider confounds, and the resulting tendency to infer causality from correlational data, inhibits us from developing optimally effective solutions to the problems we face in society. Consider, for example, the massive variation among young children in their early language acquisition and subsequent school achievement. One of the most commonly referenced studies in early childhood development and education is Hart & Risley’s 1995 longitudinal study that demonstrated that children raised in low socioeconomic status homes had parents who spoke far fewer words to them than did children raised in high socioeconomic status homes, and these early differences in language experience predicted subsequent disparities between children in their vocabularies and school achievement.10 This link was interpreted as causal (that is, the verbal environment parents provide to their children is a key influence on their children’s verbal development), and it spurred many intensive and expensive programs that teach and support verbal interaction between parents and infants. However, Hart and Risley’s data were correlational. That is, the researchers did not manipulate the quantity and quality of verbal interactions that parents had with their young children; they did not randomly assign some parents to provide one form of language experience and other parents to provide another and then measure any change in children’s development as a result of the manipulation. To suggest that differences in early language experiences cause differences in children’s vocabularies and school achievement requires the elimination of confounds, that is, variables that could account for the correlation because they lead to both strong verbal interaction from parents and strong verbal ability in children.

Shared genetics is one potential confound. Parents of higher socioeconomic status tend to have higher cognitive ability than parents of lower socioeconomic status, and socioeconomic status and cognitive ability are both heritable.11 So, shared genes could be a third variable that influences both the quality of language experiences that parents provide and children’s verbal ability. To test this possibility, behavioral geneticists have taken advantage of “experiments of nature” in which some children are raised by their biological parents (sharing both genes and environment) and some children are raised by adoptive parents (sharing only environment). In typical families (like those in Hart and Risley’s study), how similar are children to their parents, with whom they share both genes and a rearing environment? In adoptive families, how similar are children to their parents, with whom they share only a rearing environment?

In fact, the answers to these questions were first documented in the 1920s12 and have been replicated on multiple occasions by myriad researchers13: In biological families, children resemble their parents in vocabulary and verbal ability; in adoptive families, they do not. The key implication is that Hart and Risley’s finding of a link between parents’ verbal behavior and their children’s verbal ability does not warrant an inference that parents’ verbal behavior influences their children’s verbal ability. The link is better explained by shared genes, because the association only reveals itself when parents and children are genetic relatives. Stated another way, the findings imply that the type of parents who provide high-quality language experiences to their children differ systematically from those who provide lower-quality experiences; and children who evoke high-quality verbal reactions from their parents differ systematically from those who do not. Because developmental psychologists and educators continue to interpret correlational data like Hart and Risley’s as evidence of the causal impact of early language experiences on verbal ability, they continue to push interventions that, in the end, are likely to be relatively less effective than interventions that acknowledge and address both environmental and genetic differences between individuals and families.
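The logic of the adoption comparison can be sketched in a toy simulation. This is an illustration of the design, not a reanalysis of any dataset: the function name `parent_child_r`, the additive model, and every coefficient are assumptions. In particular, `env_effect` (the causal influence of parental talk on child ability) is set to zero by default to show what a purely genetic confound would look like.

```python
import random
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def parent_child_r(biological, n=5000, env_effect=0.0, seed=2):
    """Correlation between parental talk and child verbal ability in simulated families."""
    rng = random.Random(seed)
    talk, ability = [], []
    for _ in range(n):
        parent_genes = rng.gauss(0, 1)
        parent_talk = parent_genes + rng.gauss(0, 1)  # parents' genes shape how they talk
        if biological:
            # Child inherits half of the parent's genetic value, plus independent variation.
            child_genes = 0.5 * parent_genes + rng.gauss(0, sqrt(0.75))
        else:
            # Adopted child: genes are unrelated to the rearing parent's genes.
            child_genes = rng.gauss(0, 1)
        child_ability = child_genes + env_effect * parent_talk + rng.gauss(0, 1)
        talk.append(parent_talk)
        ability.append(child_ability)
    return pearson(talk, ability)

r_biological = parent_child_r(biological=True)
r_adoptive = parent_child_r(biological=False)
print(f"biological families r = {r_biological:.2f}; adoptive families r = {r_adoptive:.2f}")
```

With `env_effect` at zero, parental talk still correlates with child ability in biological families but not in adoptive ones. Raising `env_effect` above zero produces a positive correlation in adoptive families as well, so the two kinds of families together can separate the explanations in a way that biological families alone cannot.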

Another domain in which conflation of correlation with causation may be leading us astray is with microaggressions. In the article that popularized this term, microaggressions were defined as “brief and commonplace daily verbal, behavioral, or environmental indignities, whether intentional or unintentional, that communicate hostile, derogatory, or negative racial slights and insults toward people of color.”14 The term was initially applied in the context of race and ethnicity but is now applied much more broadly. One key finding from correlational research on microaggressions is that individuals who self-report being microaggressed against are more likely than others to struggle with mental health issues.15 The data are correlational, yet have been interpreted as causal: that is, that being microaggressed against causes mental health issues.16 As such, it is now common in both the academic and corporate world to offer or require employee training on the various phrases, words, and actions that might qualify as microaggressions. I am not suggesting that being microaggressed against does not actually have a negative effect on individuals’ well-being; the causal path is certainly plausible. However, the causal inference is not valid in the absence of true experimental research that imposes microaggressions on some individuals and not others, with subsequent measurement of pre-specified outcomes. To say otherwise is telling more than we know.

As Scott Lilienfeld pointed out in his article17 calling for more rigorous research on microaggressions, a glaring confound is the personality trait of negative emotionality (neuroticism): Individuals who are high in negative emotionality are particularly likely to perceive themselves as microaggressed against and individuals who are high in negative emotionality are susceptible to mental health issues. The possibility that negative emotionality underlies both experiencing microaggressions and mental health concerns is quite reasonable given that microaggressions have no precise definition but rather are defined entirely in terms of the listener’s interpretation. I propose that microaggression workshops, to the degree that they are motivated by unwarranted assumptions of the causal impact of microaggressions on mental health, might actually backfire by making at-risk individuals more likely to perceive themselves as microaggressed against.
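One standard way to state this concern quantitatively is the first-order partial correlation. The labels here are mine, chosen for illustration: let X be self-reported microaggressions, Y be mental health difficulties, and Z be negative emotionality.

```latex
r_{XY \cdot Z} = \frac{r_{XY} - r_{XZ}\, r_{YZ}}{\sqrt{\left(1 - r_{XZ}^{2}\right)\left(1 - r_{YZ}^{2}\right)}}
```

If the observed correlation between X and Y is no larger than the product of each variable's correlation with Z, the partial correlation is zero or negative, meaning that nothing is left of the original association once Z is taken into account. Statistical control of this kind addresses only the confounds a researcher has thought to measure, so it supplements rather than replaces experimental evidence.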

Indeed, in research that my colleagues and I presented last year, when we primed college students with the note that “people say all kinds of things, and sometimes they say things that can be harmful without even realizing it,” the students who scored higher in negative emotionality subsequently rated ambiguous statements like “You should take up running” to be more harmful than did students who scored low in negative emotionality. As Lukianoff and Haidt argued in The Coddling of the American Mind, microaggression training may not be preparing people to engage with each other respectfully (as it presumably aspires to), but rather to look for opportunities to take offense in others’ words.

Psychology Can Do Better

In the same way that psychological scientists have responded to the replication crisis by holding ourselves accountable for engaging in more responsible research and data analysis practices, I hope that psychological scientists can work together to overcome our tendency to infer causality from correlational data. How we overcome this tendency may depend on why, how, when, and to whom it happens. It is possible that, just like anyone else, psychologists have a difficult time distinguishing between correlation and causation; if that is the case, we need to supplement our scientific training to include more pointed practice with causal language and criteria for demonstrating causality.

Another possibility is that psychological scientists recognize unwarranted causal inferences when evaluating others’ research but miss them in their own, perhaps because of ideological and self-serving biases. If that is the case, we need to encourage individuals with competing viewpoints to provide constructive review of each other’s research, with correlation versus causation front of mind. It is also possible that scientists use unwarranted causal language intentionally, in an effort to draw more attention to their work. Luckily, recent research suggests that engaging in such causal “spin” is unnecessary, because press releases that are crafted with causal language and press releases that are crafted with non-causal language are picked up by news outlets at similar rates.

Regardless, it is up to psychological scientists to hold one another—and themselves—to a higher standard of (1) recognizing a causal statement when they see it, and (2) identifying whether or not the three criteria have been met for making that causal statement. In the scientific pursuit of truth, psychology must do better.

April L. Bleske-Rechek earned her BA in Psychology and Spanish from the University of Wisconsin-Madison (1996) and her PhD in Individual Differences and Evolutionary Psychology from the University of Texas at Austin (2001). She is currently a Psychology professor at the University of Wisconsin-Eau Claire.

References:

1 Sackett, P. R., Borneman, M. J., & Connelly, B. S. (2008). High-stakes testing in higher education and employment: Appraising the evidence for validity and fairness. American Psychologist, 63, 215-227. doi:10.1037/0003-066X.63.4.215

2 Straus, M. A., & Paschall, M. J. (2009). Corporal punishment by mothers and development of children’s cognitive ability: A longitudinal study of two nationally representative age cohorts. Journal of Aggression, Maltreatment & Trauma, 18, 459-483. doi:10.1080/10926770903035168

3 Madsen, K. M., Hviid, A., Vestergaard, M., Schendel, D., Wohlfahrt, J., et al. (2002). A population-based study of measles, mumps, and rubella vaccination and autism. The New England Journal of Medicine, 347, 1477-1482. doi:10.1056/NEJMoa021134

Honda, H., Shimizu, Y., & Rutter, M. (2005). No effect of MMR withdrawal on the incidence of autism: A total population study. Journal of Child Psychology and Psychiatry, 46, 572-579. doi:10.1111/j.1469-7610.2005.01425.x

4 Anderson, C. A., & Bushman, B. J. (2001). Effects of violent video games on aggressive behavior, aggressive cognition, aggressive affect, physiological arousal, and prosocial behavior: A meta-analytic review of the scientific literature. Psychological Science, 12, 353-359. doi:10.1111/1467-9280.00366

5 Anderson, C. A., & Bushman, B. J. (2001). Effects of violent video games on aggressive behavior, aggressive cognition, aggressive affect, physiological arousal, and prosocial behavior: A meta-analytic review of the scientific literature. Psychological Science, 12, 353-359. doi:10.1111/1467-9280.00366

6 Baumeister, R. F., Campbell, J. D., Krueger, J. J., & Vohs, K. D. (2008). Exploding the self-esteem myth. In S. O. Lilienfeld, J. Ruscio, & S. J. Lynn (eds.), Navigating the mindfield: A user’s guide to distinguishing science from pseudoscience in mental health, pp. 575-587. Amherst, NY, US: Prometheus Books.

7 Adams, R. C., Sumner, P., Vivian-Griffiths, S., Barrington, A., Williams, A., Boivin, J., Chambers, C. D., & Bott, L. (2017). How readers understand causal and correlational expressions used in news headlines. Journal of Experimental Psychology: Applied, 23, 1-14. doi:10.1037/xap0000100

Mueller, J. F., & Coon, H. M. (2013). Undergraduates’ ability to recognize correlational and causal language before and after explicit instruction. Teaching of Psychology, 40, 288-293. doi:10.1177/0098628313501038

8 Lazarus, C., Haneef, R., Ravaud, P., & Boutron, I. (2015). Classification and prevalence of spin in abstracts of non-randomized studies evaluating an intervention. BMC Medical Research Methodology, 15, 85. doi:10.1186/s12874-015-0079-x

9 Robinson, D. H., Levin, J. R., Thomas, G. D., Pituch, K. A., & Vaughn, S. (2007). The incidence of ‘causal’ statements in teaching-and-learning research journals. American Educational Research Journal, 44, 400-413. doi:10.3102/0002831207302174

10 Hart, B., & Risley, T. R. (1995). Meaningful differences in the everyday experience of young American children. Paul H. Brookes Publishing Co.

11 Marioni, R. E., Davies, G., Hayward, C., Liewald, D., Kerr, S. M., Campbell, A., … Deary, I. J. (2014). Molecular genetic contributions to socioeconomic status and intelligence. Intelligence, 44, 26-32. doi:10.1016/j.intell.2014.02.006

Trzaskowski, M., Harlaar, N., Arden, R., Krapohl, E., Rimfeld, K., McMillan, A., … Plomin, R. (2014). Genetic influence on family socioeconomic status and children’s intelligence. Intelligence, 42, 83-88. doi:10.1016/j.intell.2013.11.002

12 Burks, B. S. (1928). The relative influence of nature and nurture upon mental development; a comparative study of foster parent-foster child resemblance and true parent-true child resemblance. Yearbook of the National Society for the Study of Education, Pt. I, 219-316.

13 Leahy, A. M. (1935). A study of adopted children as a method of investigating nature-nurture. Journal of the American Statistical Association, 30, 281-287. doi:10.1080/01621459.1935.10504170

Neiss, M., & Rowe, D. C. (2000). Parental education and child’s verbal IQ in adoptive and biological families in the National Longitudinal Study of Adolescent Health. Behavior Genetics, 30, 487-495.

Wadsworth, S. J., Corley, R. P., Hewitt, J. K., Plomin, R., & DeFries, J. C. (2002). Parent-offspring resemblance for reading performance at 7, 12 and 16 years of age in the Colorado Adoption Project. Journal of Child Psychology and Psychiatry, 43, 769-774. doi:10.1111/1469-7610.00085

14 Sue, D. W., Capodilupo, C. M., Torino, G. C., Bucceri, J. M., Holder, A. M. B., Nadal, K. L., & Esquilin, M. (2007). Racial microaggressions in everyday life: Implications for clinical practice. American Psychologist, 62, 271-286. doi:10.1037/0003-066X.62.4.271

15 Nadal, K. L., Griffin, K. E., Wong, Y., Hamit, S., & Rasmus, M. (2014). The impact of racial microaggressions on mental health: Counseling implications for clients of color. Journal of Counseling & Development, 92, 57-66. doi:10.1002/j.1556-6676.2014.00130.x

16 Note the causal language in the title of the article: Nadal, K. L., Griffin, K. E., Wong, Y., Hamit, S., & Rasmus, M. (2014). The impact of racial microaggressions on mental health: Counseling implications for clients of color. Journal of Counseling & Development, 92, 57-66. doi:10.1002/j.1556-6676.2014.00130.x

17 Lilienfeld, S. (2017). Microaggressions: Strong claims, inadequate evidence. Perspectives on Psychological Science, 12, 138–169. doi:10.1177/1745691616659391