Deepfakes and the Threat to Privacy and Truth

You just crossed into the twilight zone.

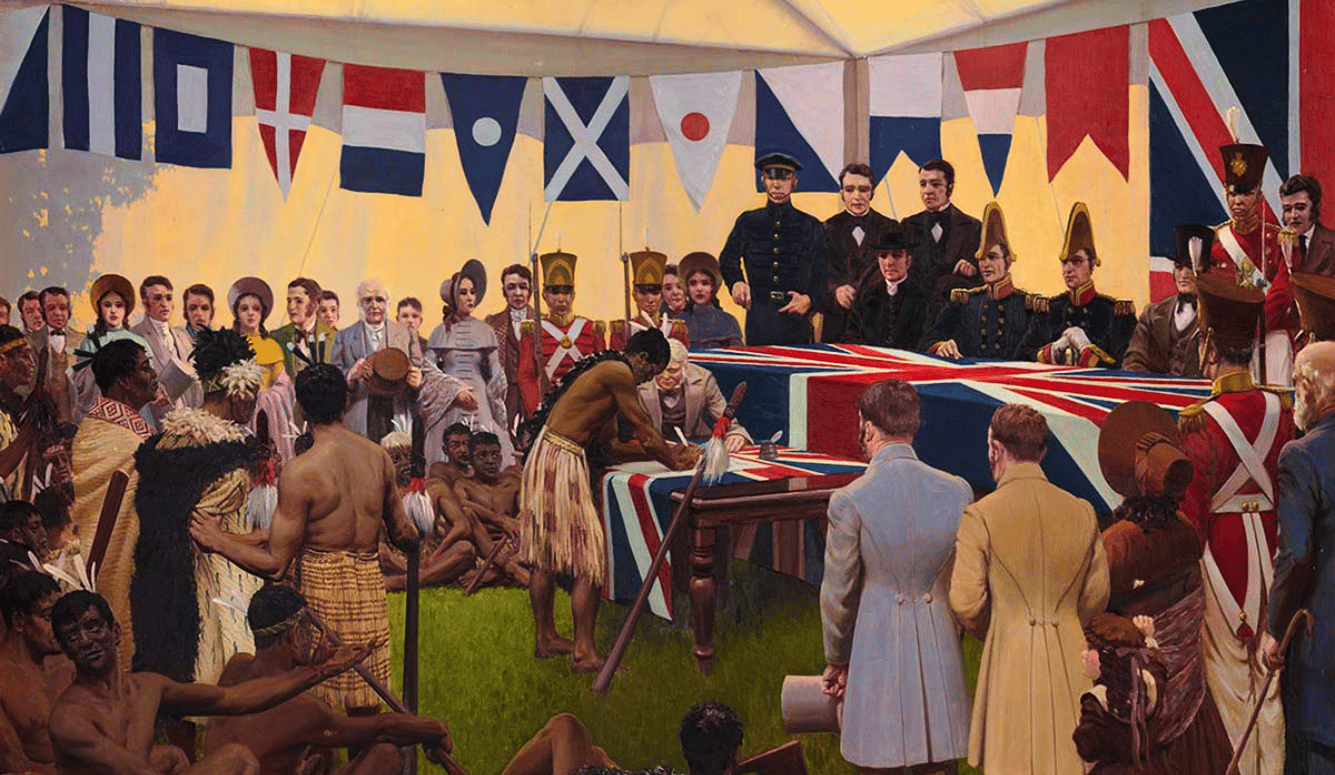

“Photographs furnish evidence,” wrote Susan Sontag in On Photography. “A photograph passes for incontrovertible proof that a given thing happened.” Sontag went on to write of how photographs can misrepresent situations. But do they even have to show real objects?

When you open the website “This Person Does Not Exist,” you are met with the face of a man or woman. He or she looks normal, like the average person you would brush past on the way to work, but he or she does not exist. The website uses generative adversarial networks, which learn from sets of training data to produce new, original data. By analyzing vast numbers of real faces, the website can generate new ones.

True, there are some glitches. The first man I saw—a cheerful, bald, middle-aged man who could have been a television evangelist or a salesman at a training seminar—had an inexplicable hole beneath his ear, which, once seen, gave him an unnerving reptilian appearance. More often than not, though, the faces are indistinguishable from the real thing.

You just crossed into the twilight zone.

Scientists at OpenAI, a research organization dedicated to investigating ways of developing “safe” artificial intelligence, have decided not to release the full version of their new machine learning system, which generates text from writing prompts, for fear that it could be used to “generate deceptive, biased, or abusive language at scale.”

The system generates text by learning to replicate the linguistic and rhetorical patterns of prose. To be sure, the small samples OpenAI has released (in which, for example, the model argues that recycling is bad and reports that Miley Cyrus has been caught shoplifting) contain some bizarre assertions, weird repetition, and ungrammatical phrases, but one could say the same of a lot of the prose that people write.

You just crossed into the twilight zone.

“Deepfakes”—a portmanteau of “deep learning,” which essentially entails machines learning by example, and “fake”—are used not just to create non-existent people but to misrepresent real ones. One can, for example, take someone’s face and put it onto someone else’s body.

In practice, in a grim reflection on our species, this tends to involve anonymous netizens creating videos in which the faces of celebrities have been grafted onto the bodies of porn stars. Until it was banned in 2018, the subreddit “deepfakes” was alive with users busily cooperating in efforts to superimpose the faces of Gal Gadot or Emma Watson onto hard-core porn.

In a recent interview with the Washington Post, Scarlett Johansson spoke of how dozens of videos online portray her in explicit sex scenes. “The fact is that trying to protect yourself from the Internet and its depravity is basically a lost cause,” Johansson said. “The Internet is a vast wormhole of darkness that eats itself.”

You are now crossing into the twilight zone.

Of course, people have been able to manipulate text, sound, and images for almost as long as man has been able to record them. The better part of a hundred years ago, Nikolai Yezhov was scrubbed out of a photograph with Joseph Stalin after the man he had served with savage loyalty had him executed.

Still, even as “photoshopping” technology has advanced, it has had little impact on our impression of the world. “Airbrushing” has allowed the bodies of celebrities to seem eerily flawless, and occasional “fake” images have been accepted as real. In 2004, for example, a composite photo of John Kerry and Jane Fonda briefly allowed Republican activists to claim that Kerry was associated with the controversial anti-war and formerly pro-communist actress.

Photoshopping, though, is less open to abuse by bad actors than deepfakes are. When an image has been photoshopped, as in the case of the Kerry/Fonda photo, one can generally find the original. A deepfake draws on a whole mess of originals, which are far more difficult to trace. Video is also more convincing, and more compelling, than static images, and thus has far greater potential to be used for licentious or cynical purposes.

As well as humiliating celebrities, deepfakes can be used to harass and harm ordinary people by portraying them in compromising situations. This is inherently hurtful, damaging their sense of themselves and their control over their lives, and it also threatens their relationships and careers by dishonestly associating them with vice or crime. A sinister quality of deepfakes is how easy it has been for people to learn to create them. Some Australian journalists easily created a simple deepfake of Malcolm Turnbull speaking (thus cleverly preempting suggestions that they learn to code).

This also raises concerns about policing. Today, video of someone committing a crime is all but irrefutable evidence of their guilt. As the technology for creating deepfakes develops, the innocent could be framed and the guilty could have a clever new excuse.

In advanced nations this trend will be damaging, and in developing nations it could be deadly. In India, dozens of people have been lynched after dark rumors about them were spread on WhatsApp. One can only imagine the furore that could be whipped up if a rumor were supported by a video.

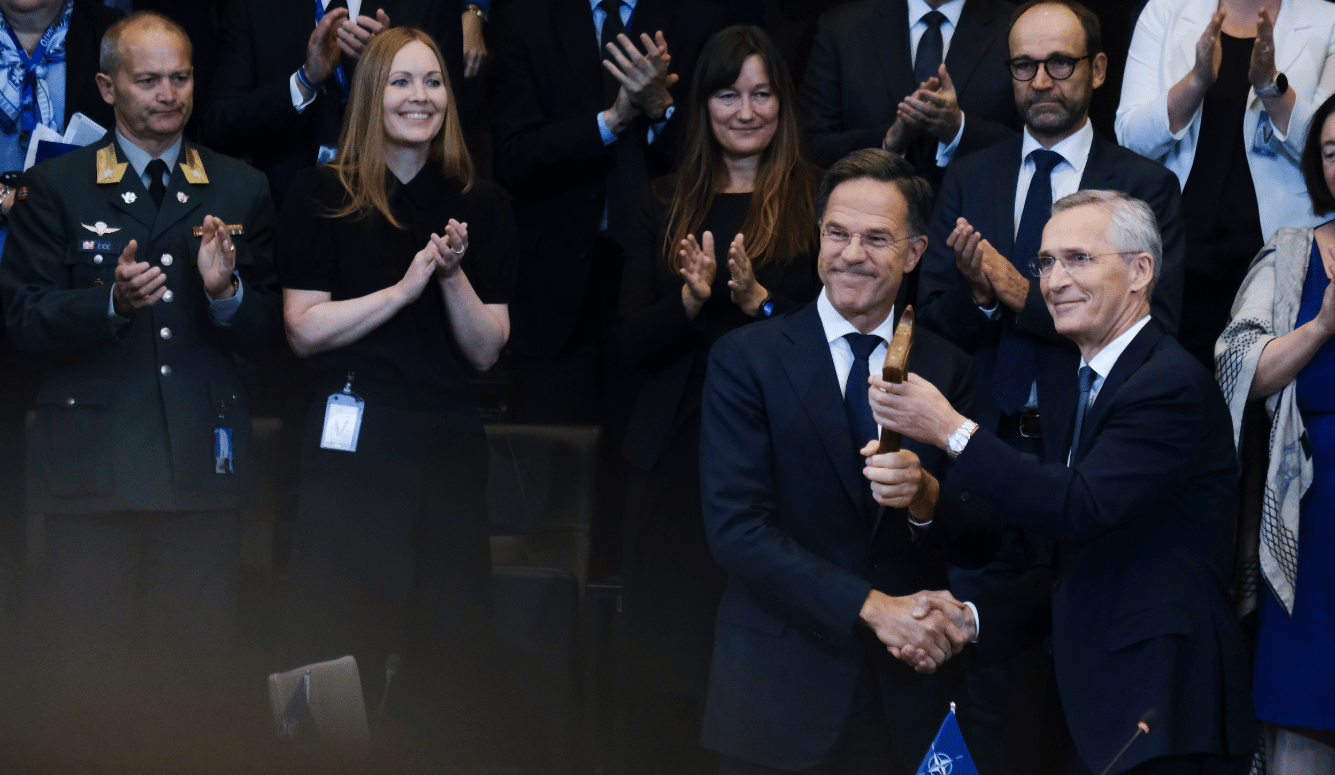

Political scandals could be manufactured as well. In an age of heated polarization, it will be difficult for politicians to convince their opponents that damaging videos are in fact deepfakes. On the flipside, if real videos of their sins and crimes emerge, they will be able to suggest that these have been invented. Some, like the political scientist Thomas Rid, have said they “do not understand the hype” when “the age of conspiracy is doing fine already.” To that I say, things can always get worse.

Platforms have hurried to contain the proliferation of deepfakes. Reddit banned /r/deepfakes, forcing its users to migrate to 4chan, while Twitter, Gfycat, and Discord have banned deepfake content and creators. I expect some contrarians to insist that deepfakes are examples of free expression, but if the law offers any defense of our reputations and our privacy, malicious deepfakes should be banned not just on social media platforms but by law.

An important study of the potential for AI to be misused maliciously has also recommended restricting the availability of AI code to block “less capable actors” from adding it to their arsenals. “Efforts to prevent malicious uses solely through limiting AI code proliferation,” the authors go on, however, “are unlikely to succeed fully, both due to less-than-perfect compliance and because sufficiently motivated and well-resourced actors can use espionage to obtain such code.”

As deep learning technology advances, methods of exposing fakes advance as well. Siwei Lyu of the University at Albany, for example, developed a method of detecting deepfakes by analyzing how often people in videos blink. Recreating natural blinking habits in deepfakes has been hard, he explained, because it is much harder to find pictures of people with their eyes closed than with their eyes open, so the faces the software learns from rarely show closed eyes.

Still, there is no guarantee that methods of detecting fake images, audio, and text will advance as rapidly as methods of producing them. Moreover, in an age of declining social trust it is increasingly difficult to convince people that something is true or false even if one has proof. The heat and pace of the news cycle appeal to our biases, not our rationality. As the scandals involving the Covington students and Jussie Smollett have demonstrated, people make snap judgments based on scant information, and once those judgments are made, changing them is always difficult.

In a world where data can be so terribly unreliable—where our eyes can indeed be lying to us—we have to restrain those aggressive impulses that lead us to draw bold conclusions about people and events. We have to collectively acknowledge the importance of accurate data, and the possibility that it might defy our prejudices, even if we disagree on broader theories and ambitions.

When we take a photograph, Sontag wrote, we are “creating a tiny element of another world: the image-world that bids to outlast us all.” One of our important responsibilities is to struggle to align the image-world with our own.