Most writing experts agree that “direct, declarative sentences with simple common words are usually best.”1 However, most undergraduates admit to intentionally using complex vocabulary to give the impression of intelligence to their readers. Does using complex vocabulary in writing actually increase the perception of higher author intelligence among readers? According to Carnegie Mellon University professor Daniel M. Oppenheimer, the answer is No.2 Oppenheimer’s 2006 article published in the journal Applied Cognitive Psychology, “Consequences of Erudite Vernacular Utilized Irrespective of Necessity: Problems with Using Long Words Needlessly,” effectively makes the case against needless complexity in writing. The paper includes five experiments: the first three show the negative influence of increased complexity on perceived author intelligence, and the latter two investigate the role of fluency (ease of reading) more generally on judgments about author intelligence.

Oppenheimer’s work provides valuable information on how to avoid the common pitfalls of trying to sound smart when writing. The research also provides an insightful framework to explore how needless complexity may be harming public perception at larger scales, such as the perception of academic journals and disciplines. In what follows, I will briefly outline the experiments and findings in Oppenheimer’s paper and apply them to academia to show how disciplines vary in the degree of unnecessary complexity. This complexity comes at a cost, and fields like gender studies are paying the price.

* * *

Experiment 1: Does Increasing the Complexity of Text Make the Author Appear More Intelligent?

In the first experiment, Oppenheimer showed that using longer words causes readers to view the text and its author more negatively. Six personal statements for graduate admission in English Literature, varying greatly in quality and content, were taken from writing improvement websites. An excerpt was taken from each essay, and three versions of each excerpt were prepared. Highly complex versions were made by replacing every noun, verb, and adjective with its longest entry in the Microsoft Office thesaurus. Moderately complex versions were made by altering only every third eligible word, and the original versions were left unchanged. Each of the 71 participants read one excerpt, decided whether or not to accept the applicant, and rated both their confidence in the decision and how difficult the passage was to understand.
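The word replacement procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `TOY_THESAURUS` is a hypothetical stand-in for the Microsoft Office thesaurus, a word counts as "eligible" simply because it appears in that dictionary (the study selected nouns, verbs, and adjectives), and the function name `complexify` is my own.

```python
import re

# Hypothetical toy thesaurus; the study used the Microsoft Office thesaurus.
TOY_THESAURUS = {
    "use": ["employ", "utilize"],
    "show": ["display", "demonstrate"],
    "big": ["large", "substantial"],
    "help": ["aid", "assist", "facilitate"],
}


def complexify(text, thesaurus, every=1):
    """Replace every `every`-th eligible word with its longest synonym.

    every=1 mimics the highly complex condition; every=3 mimics the
    moderately complex condition. Capitalization is not preserved.
    """
    eligible_seen = 0

    def swap(match):
        nonlocal eligible_seen
        word = match.group(0)
        synonyms = thesaurus.get(word.lower())
        if not synonyms:
            return word  # not in the toy thesaurus, so not eligible
        eligible_seen += 1
        if eligible_seen % every != 0:
            return word  # eligible, but skipped in this condition
        return max(synonyms, key=len)

    return re.sub(r"[A-Za-z]+", swap, text)


sample = "I use big words to show that I help"
highly = complexify(sample, TOY_THESAURUS, every=1)
moderately = complexify(sample, TOY_THESAURUS, every=3)
print(highly)
print(moderately)
```

Even on a toy sentence, the highly complex version reads noticeably worse than the original, which is the effect the experiment set out to measure.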

The more complex an essay was, the less likely students were to accept the applicant, regardless of the quality of the original. Interestingly, controlling for difficulty of reading nearly eliminated the relationship between complexity and acceptance. The reverse was not true, however: controlling for complexity did not eliminate the relationship between difficulty and acceptance. This suggests that differences in fluency are what cause the negative relationship between complexity and acceptance. Complex texts are rated poorly because they are hard to read.

Experiment 2: Is the Perceived Intelligence of the Author Affected by the Complexity of the Translation Used?

Even geniuses sound unintelligent when they use big words. Concerns that the word replacement algorithm in experiment 1 had hurt the quality of the text through imperfectly matched meanings led the author to a second, more natural test. One of two different translations of the first paragraph of René Descartes’s Meditation IV was given to each of 39 participants. Two independent raters agreed that one of the translations was considerably more complex despite comparable word counts. Participants read the text and rated both the intelligence of the author and how difficult the passage was to understand. To investigate the effects of a prior expectation of intelligence, half were told the author was Descartes and half were told the author was anonymous.

Readers rated the author’s intelligence higher for the simple translation, whether or not they knew the author was Descartes. As in experiment 1, loss of fluency appeared to be the cause of poor ratings for the complex translation, but its mediating influence did not reach statistical significance in this case.

Experiment 3: Does Decreasing the Complexity of Text Make the Author Appear More Intelligent?

Have a big vocabulary? Dumbing it down with this method might improve your writing. A dissertation abstract from the Stanford sociology department with an unusual number of long words (nine or more letters) was selected for the experiment. A simplified version of the text was made by replacing every long word with its second shortest entry in the thesaurus. The 85 participants were instructed to read one of the abstracts and rate both the intelligence of the author and how difficult the passage was to understand.

Readers rated the author’s intelligence higher and the difficulty of understanding lower for the simplified version. Again, fluency had the predicted effect, but failed to reach statistical significance. This supports experiment 1 by showing that a word replacement algorithm does not necessarily impair the quality of work and make it harder to understand. The message is clear: complex texts are harder to read and get rated more poorly.

Experiment 4: Does Any Manipulation that Reduces Fluency Reduce Intelligence Ratings?

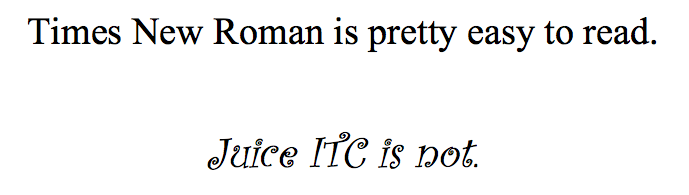

This experiment is a compelling argument against using silly fonts (I’m looking at you, Comic Sans). Presenting text in a hard to read font is an established way of reducing fluency. The unedited version of the highest quality essay from the first experiment was given to 51 participants in one of two fonts: italicized “Juice ITC” or normal “Times New Roman.” See below for a comparison:

The participants were instructed to read the text and rate the author’s intelligence. All of the instructions and rating scales were written in the corresponding font to prevent readers from assuming the author had chosen the font, which could be taken as a hint about the author’s intelligence.

Readers who received the “Juice ITC” version rated the author as less intelligent than those who read it in “Times New Roman.” This establishes the effect of fluency independent of complexity, and supports the idea that complex texts are rated poorly because they reduce fluency.

Experiment 5: Do Manipulations of Fluency Have the Same Effect if the Source of the Decreased Fluency is Obvious?

People tend to discount the role of fluency when it has an obvious source. For example, Tversky and Kahneman found that although people typically use fluency as a cue for estimating surname frequency, if they are in the presence of obvious causes for fluency (personal relevance, famous name) they stop using fluency and even overcompensate.3 To test this, 27 participants were given an unedited essay from experiment 1 that was either printed normally or printed with low toner, which made it light, streaky, and hard to read. Readers were asked to decide whether they would accept the applicant and to rate both their confidence in the decision and the author’s intelligence.

Participants who read the low toner text were more likely to recommend acceptance than those who read the normal version. They also rated the author’s intelligence more highly in the low toner condition. When the source of reduced fluency is obvious, people become aware of their bias and overcorrect.

* * *

The implications of the first four experiments can be neatly summarized: “Keep it simple, stupid.” As experts have long recommended, you should use short, common words and easy-to-read fonts. Someone who makes good points, but relies on complex words and jargon to do so, likely pays a price in terms of the perceived quality of their work and mind. Reading Oppenheimer’s paper made me wonder whether the same rules hold true on the broader scale of academic disciplines. If a field tends to use needlessly complex language more than other fields, is it viewed as less credible?

To test this hypothesis I set out to compare the complexity of the top journals from various fields to their perceived credibility using average word length as a proxy for complexity and SCImago Journal Rank (SJR) as a proxy for credibility. Specifically, I chose to compare the top journal in astrophysics, biochemistry, sociology, and gender studies. To estimate word length I sampled the first five full articles from the latest issue of each journal and divided the number of characters by the word count. Each journal’s complexity score reflects the average word length of the five selected papers.
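For readers who want to reproduce this kind of score, here is a minimal Python sketch of the calculation described above. It is not the exact procedure used for this article: the function names are my own, and it assumes that "characters" means letters only, so spaces, digits, and punctuation are ignored and hyphenated words are split into their parts.

```python
import re
from statistics import mean


def average_word_length(text):
    """Letters per word, counting only alphabetic runs as words (an assumption)."""
    words = re.findall(r"[A-Za-z]+", text)
    if not words:
        return 0.0
    return sum(len(word) for word in words) / len(words)


def complexity_score(articles):
    """Average of the per-article average word lengths."""
    return mean(average_word_length(article) for article in articles)


# Two tiny stand-ins for full article texts.
papers = ["Keep it simple", "Use short common words"]
score = complexity_score(papers)
print(round(score, 3))
```

Counting characters differently (for example, including punctuation or digits) would shift every score slightly, so the numbers are only comparable when the same counting rule is applied to every journal.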

There was no relationship between SJR and complexity score (names of the journals and papers used for the analysis, as well as results, can be found here.) This could be for a number of reasons: the top journal of a field is not necessarily reflective of the field as a whole, average word length could be a bad proxy for complexity, SJR could be a bad proxy for credibility, small sample size, etc.

Or, could it be that I was confusing complexity with needless complexity?

The complexity score is similar for Gender and Society (5.5) and Annual Review of Astronomy and Astrophysics (5.3), but is the difficulty of understanding for the underlying content the same? Almost certainly not. Previous research has developed a Hierarchy of the Sciences that uses objective bibliometric criteria to assess the “softness” of an academic field.4 Unsurprisingly, the ranks are as follows, from hardest to softest science: physical (physics, mathematics), biological-hard (molecular biology, biochemistry), biological-soft (plant and animal sciences, ecology), social (psychology, economics), and humanities (archaeology, gender studies). The authors state that as you progress from mathematics to humanities there is “a proportional loss of cognitive structure and coherence in their literature background.” In other words, Gender and Society uses equally complex language to describe “softer” phenomena. Therefore, the degree of needless complexity is higher than in similarly complex texts where the underlying difficulty of the concepts is greater.

The point is clarified by the following examples taken from papers analyzed for this article. Excerpt 1 comes from an abstract in the Annual Review of Astronomy and Astrophysics;5 excerpt 2 comes from an abstract in Gender and Society.6

- In our modern understanding of galaxy formation, every galaxy forms within a dark matter halo. The formation and growth of galaxies over time is connected to the growth of the halos in which they form. The advent of large galaxy surveys as well as high-resolution cosmological simulations has provided a new window into the statistical relationship between galaxies and halos and its evolution.

- In Hegemonic Masculinity: Formulation, Reformulation, and Amplification, James Messerschmidt provides a comprehensive and nuanced explanation of hegemonic masculinity, including the sociohistorical context of its development, and an incisive analysis of research that has utilized the tricky concept.

I applied the methodology of experiment 3 from Oppenheimer’s paper to both (simplified long words). Let’s see how they change.

- In our modern understanding of galaxy creation, every galaxy forms within a dark matter halo. The creation and growth of galaxies over time is linked to the growth of the halos in which they form. The advent of large galaxy surveys as well as high-resolution cosmological models has provided a new window into the statistical link between galaxies and halos and its evolution.

- In Powerful Maleness: Design, Redesign, and Expansion, James Messerschmidt provides a full and nuanced account of powerful maleness, including the sociohistorical context of its development, and an incisive analysis of research that has utilized the tricky concept.

In the first excerpt there were nine words that had nine or more letters, and four could be replaced with a shorter word without distorting the meaning. In the second excerpt there were more long words, despite the fact that the passage is much shorter (38 vs. 64 words). Of the 12 words that met the criteria in excerpt 2, nine of them could be replaced without altering the meaning. Therefore, the density of both long words and needlessly long words is higher in the second excerpt.
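The long-word counts above were made by hand, but the density measure itself is easy to sketch in Python. The function below is a hypothetical helper, not the tool used for this article: it treats any run of nine or more letters as a long word and splits hyphenated words, so its counts may differ slightly from a manual tally. Deciding which long words are needlessly long still requires human judgment.

```python
import re


def long_word_density(text, min_letters=9):
    """Return the long words in `text` and their share of all words."""
    words = re.findall(r"[A-Za-z]+", text)
    long_words = [word for word in words if len(word) >= min_letters]
    density = len(long_words) / len(words) if words else 0.0
    return long_words, density


# One sentence from excerpt 1, used as a small worked example.
sentence = (
    "The formation and growth of galaxies over time is connected "
    "to the growth of the halos in which they form."
)
long_words, density = long_word_density(sentence)
print(long_words, density)
```

In this sentence, two of the twenty words ("formation" and "connected") have nine or more letters, and both are among the words replaced in the simplified version.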

Does this needless complexity harm the public perception of gender studies? Yes. Good luck convincing laymen about the oppression of women using terms like “subalterneity” and “phallogocentrism.” It will be difficult to understand, and therefore judged negatively. Take, for example, a passage from one of the most influential works from arguably the most influential gender theorist, “Performative Acts and Gender Constitution: An Essay in Phenomenology and Feminist Theory” by Judith Butler.7

When Beauvoir claims that ‘woman’ is a historical idea and not a natural fact, she clearly underscores the distinction between sex, as biological facticity, and gender, as the cultural interpretation or signification of that facticity. To be female is, according to that distinction, a facticity which has no meaning, but to be a woman is to have become a woman, to compel the body to conform to an historical idea of ‘woman,’ to induce the body to become a cultural sign, to materialize oneself in obedience to an historically delimited possibility, and to do this as a sustained and repeated corporeal project.

The above is a reasonable argument for separate definitions of sex and gender that is totally obscured by jargon and wordiness. It could be reworded as follows without losing any meaning:

According to Beauvoir, to be a woman is more than to be biologically female. It also involves behaving in the way women are expected to based on historical precedent.

* * *

In summary, a lack of fluency (often caused by needlessly complex words) causes readers to negatively judge a text and its author. The degree of needless complexity varies across academic fields and this needless complexity likely harms public perceptions of the fields where it is most pronounced. All of this raises one last question: why do disciplines like gender studies insist on needless jargon? Three hypotheses seem most likely: historical coincidence, physics envy, and conscious deception.

The first two hypotheses suggest well-meaning authors. It is possible that the early writers of certain fields like gender studies set a bad precedent by coining long, unintuitive words that are now important to the field, while other disciplines were fortunate to have such tractable terms as black holes and dark matter. Alternatively, given the widespread attitude (at least in some circles) that the humanities and social sciences are softer, less challenging, or less valuable than other fields of inquiry, one could see academics in those fields internalizing a sense of inferiority. This attitude is known as physics envy. In order to prove their intelligence, to themselves and to others, they might be tempted to complicate simple ideas and use complex vocabulary to describe them—just like the Stanford undergraduates interviewed by Oppenheimer.

The third hypothesis, that they are consciously deceiving readers, brings us back to Oppenheimer’s fifth experiment. Recall that participants in that experiment were more likely to accept the applicant, and rated the author’s intelligence more highly, when the text was printed with low toner. It was obvious to them that the low toner was biasing their judgements in a negative way, so they overcompensated. The question remains: what sources of poor fluency are obvious enough to hit the inflection point where, instead of hurting perception, they begin to help it? Does heavy use of complex academic jargon count as an obvious enough source and, if so, are writers incentivized to use it? If laymen are aware that jargon is reducing fluency, they may give the text and the author the benefit of the doubt when it is undeserved.

Ultimately, this is an empirical question waiting to be answered. In the meantime, if the writers who are producing needlessly complex pieces are doing so to deceive the public, we can take solace in the fact that it isn’t working very well: there is only one sociology journal in the top 100 (Administrative Science Quarterly, #78), and one gender studies journal in the top 1,000 (Gender and Society, #975) of journals ranked by SJR.

Andrew Bade is a PhD candidate studying Evolution, Ecology, and Organismal Biology at The Ohio State University.

1 Publication Manual of the American Psychological Association (4th ed.). 1996. Washington, D.C.: American Psychological Association.

2 Oppenheimer, D. M. 2006. Consequences of erudite vernacular utilized irrespective of necessity: problems with using long words needlessly. Applied Cognitive Psychology, 20: 139-156.

3 Tversky, A. and Kahneman, D. 1973. Availability: a heuristic for judging frequency and probability. Cognitive Psychology, 5(2), 207–232.

4 Fanelli, D. and Glänzel, W. 2013. Bibliometric Evidence for a Hierarchy of the Sciences. PLoS ONE 8(6): e66938.

5 Wechsler, R. H. and Tinker, J. L. 2018. The Connection Between Galaxies and Their Dark Matter Halos. Annual Review of Astronomy and Astrophysics, 56(1), 435-487.

6 Carian, E. K. 2018. Book Review: Hegemonic Masculinity: Formulation, Reformulation, and Amplification by James W. Messerschmidt. Gender and Society. https://doi.org/10.1177/0891243218809597

7 Butler, J. 1988. Performative Acts and Gender Constitution: An Essay in Phenomenology and Feminist Theory. Theatre Journal, 40(4), 519-531. doi:10.2307/3207893