Reason and Reality in an Era of Conspiracy

Did Nelson Mandela die in prison or did he die years later? Many people reading this probably lived through such recent history, and know perfectly well that he died decades after his release. Many of our students, however, do not know this because they didn’t live through it, and they haven’t been taught it. That is trivially true, and not particularly worrisome. But in 2010 a quirky blogger named Fiona Broome noticed that many people she met – people who should know better – incorrectly believed that Mandela had died in prison. She dubbed this kind of widespread collective false memory the “Mandela Effect” and began gathering more cases, inspiring others to contribute examples to a growing online database. Ask your students if they’ve heard of the Mandela Effect and you will find that 95 percent are familiar with it. They are fascinated by it because it is a highly successful topic of countless YouTube videos and memes.

Examples of the Mandela Effect are amusing and easy to find online. Everyone thinks that Darth Vader said “Luke, I am your father” but in fact he said, “No, I am your father.” Everyone thinks Forrest Gump said “Life is like a box of chocolates” but he actually said, “Life was like a box of chocolates.” The Queen in Snow White never said “Mirror, mirror on the wall,” but actually said, “Magic mirror on the wall.” The popular children’s book and show “The Berenstein Bears” is actually “The Berenstain Bears.” Many people think evangelist Billy Graham died years ago but – at the time of this writing, at least – he lives on.

There’s nothing particularly surprising or even interesting about such failures of memory. Our memories are deeply fallible and highly suggestible. A recent study revealed that psychologists can easily coax subjects to ‘remember’ committing a crime they never actually committed (see Dr. Julia Shaw’s recent book The Memory Illusion). With the right coaching I can start to remember the time I assaulted a stranger, even if I never did. Moreover, cultural memes – like famous movie dialogue lines – naturally glitch, vary, and distort in the replication process, especially in mass media replication. The version an actor misstates on a late-night talk show becomes the new urtext and rapidly replicates through the wider culture, replacing the original. All cultural transmission is a giant “telephone game.”

But here’s where it gets weird. Students – yes, current undergrads – think the explanation for these strange false memories is that a parallel universe is occasionally spilling into ours. In that parallel universe, apparently, Mandela did die in prison, and the Snow White mirror phrase is how we remember it. Alternatively, a number of students believe that we humans are moving between these parallel universes unknowingly, and also time-traveling, so that our memories are distorted via the shifting timeline.

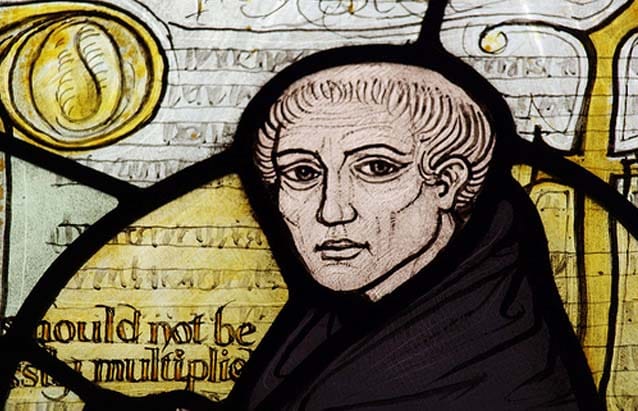

When I pressed my students, suggesting they were not really serious, they grew indignant. Like a gnostic elite, they frowned upon my failure to grasp the genius of their metaphysical conspiracy theory. As an antidote, I introduced Ockham’s Razor – a principle originally set forth by William of Ockham (c.1287-1347), that simpler explanations are preferable to metaphysically complex explanations of phenomena. When explaining an event, Ockham suggested that entities should not be multiplied unnecessarily. As he puts it in Summa Totius Logicae, “It is futile to do with more things that which can be done with fewer.” (i. 12) When you have two competing theories that make the same predictions, the simpler one is usually better. I don’t need a world of demonic possession, for example, to explain why the delusional person on my bus this morning was talking to his hand.

I turned to a student in the front row to illustrate the obviousness of Ockham’s Razor. “Imagine,” I said to him, “a series of weird bright lights appear over your neighborhood tonight around midnight. Now, which is easier to believe: that an alien invasion is happening, or that the military is testing a new technology?” Without blinking, the young man told me that the military would never be in his neighborhood, so aliens seemed more reasonable.

“But…but…” I stammered, “you have to assume a whole bunch of stuff to make the alien explanation work – like, aliens exist, aliens are intelligent, aliens have incredible technology, aliens have travelled across the solar system, aliens have evaded scientific corroboration, and also that aliens would come to your neighborhood while the military would not. Whereas, the list of assumptions for the military testing explanation is relatively small by comparison.” He just blinked in response.

Thus reproached, I switched to another example, asking students whether it was easier to believe a ghost slammed their door shut, or the wind did it. Some conceded that the wind was a leaner or more parsimonious explanation, but a great many students already believe strongly in the reality and ubiquity of ghosts, so referring to spirits did not seem to them like a violation of Ockham’s razor. Many ghost stories from family and friends, along with YouTube videos, had already served as a sort of ‘corroboration’ for their theory, so ghosts and wind had a comparable metaphysical status in their world.

I had arrived at the death of reductio ad absurdum arguments – where you demonstrate the absurdity of your opponent’s view, by showing that it has obviously ridiculous implications which your opponent has not yet appreciated. But one cannot offer such a refutation if every ridiculous implication seems equally reasonable to your opponent. You never get to an absurdity that both parties accept, and it’s just ‘beliefs’ all the way down. It is the bottomless pit of the undiscriminating mind. It is preferable to my students to think that alternate universes are colliding to create alternate Mandela histories and Darth Vader dialogue, than to think we simply remember stuff badly. Adiuva nos Deus.

Intense handwringing has attended the rise of fake news. The worries are justified, since the media has never been more unreliable, biased, and embattled. But the proposed solutions tend to focus on fixing the media, and no one dares suggest that we should be fixing the human mind. The human mind, however, is arguably broken, and educators must implement a rigorous curriculum of informal logic before our gathering gloom of fallacies, magical thinking, conspiracy theories, and dogma makes the Dark Ages look sunny by comparison. Obviously, it would be nice if our politicians and pundits were reliable purveyors of truth, but since that isn’t about to happen, we should instead be striving to create citizens who see through these charlatans. Kids who believe their mistaken memories of movie lines are proof we’re all time-traveling or jumping between parallel realities are not those needed citizens.

Harvard legal scholars Cass R. Sunstein and Adrian Vermeule suggest that contemporary Americans are more credulous and committed to conspiracy theories because they have a “crippled epistemology.” When a person or community comes to believe that the twin towers fell because of a U.S. government plot, or that the 1969 moon landing never happened, or that AIDS was a manmade weapon, they reveal a crippled epistemology. And, according to Sunstein and Vermeule, as well as Cambridge political theorist David Runciman, the “crippling” results from a reduced number of informational sources or streams.1 When information enters a community through only a few restricted channels, the group becomes isolated and its acceptance of ‘weird’ ideas doesn’t seem irrational or weird to those inside the group. We see lots of evidence of this, as when people get all their news on Facebook but the news is trickling through a single ideological pipeline.

On this view, increasing the number of informational sources reduces conspiracy gullibility. Closed societies, like North Korea and to a lesser degree China, are more susceptible to fake news, conspiracy, and collective delusion. Belief systems in these closed environments are extremely resistant to correction, because alternative perspectives are unavailable. Online information bubbles produce some of the same results as political media censorship.

My own experience in the Creation Museum in Kentucky confirms the idea that informational isolation is highly distorting. I spent time touring Noah’s Ark, watching animatronic dinosaurs frolic with Adam and Eve, and talking to the director Ken Ham. The Evangelical isolation became obvious, and was even worn as a badge of honor by Ham and others, as if to say, “We are uncontaminated by secular information.” The Bible-belt audience for Creation Science gets all its news about the outside world from Evangelical cable TV shows, Christian radio programs, blogs, podcasts, and of course church. An Evangelical museum, claiming the earth is about 6,000 years old, is just icing on the mono-flavored informational cake.

However, this kind of information isolation cannot explain our students’ gullibility. Yes, the average undergrad has a silo of narrow interests, but they are not deficient in informational streams. In fact, I want to argue they have the opposite problem. We now have almost unlimited information streams ready to hand on our laptops, tablets, smartphones, and other mass media. If I Google the 9/11 atrocity, it will be approximately 2 or 3 clicks to a guy in his mother’s basement, explaining in compelling detail how the Bush administration knew the attack was coming and engineered the collapse of the twin towers, how the U.S. government is a puppet for the Illuminati, and so on. If you don’t already have a logical method or even an intuitive sense for parsing the digital spray of theories, claims, images, videos, and so-called facts coming at you, then you are quickly adrift in what seem like equally reasonable theories.

During the Renaissance, Europe had a similar credulity problem. So much crazy stuff was coming back from the New World – animals, foods, peoples, etc. – that Europeans didn’t quite know what to believe. The most they could do was collect all this stuff into wunderkammern or curiosity cabinets, and hope that systemic knowledge would make sense of it eventually. In a way, the current undergraduate mind is like the pre-Modern mind, chasing after weirdness, shiny objects, and connections that seem more like hermetic and alchemical systems. According to a recent survey by the National Science Foundation, for example, over half of young people today (aged between 18 and 24) believe that astrology is a science, providing real knowledge.2

During the last three years, I have surveyed around 600 students and found some depressing trends. Approximately half of these students believe they have dreams that predict the future. Half believe in ghosts. A third of them believe aliens already visit our planet. A third believe that AIDS is a man-made disease created to destroy specific social groups. A third believe that the 1969 moon landing never happened. And a third believe that Princess Diana was assassinated by the royal family. Importantly, it’s not the same third that believes all these things. There is not a consistently gullible group that believes every wacky thing. Rather, the same student will be utterly dogmatic about one strange theory, but dismissive and disdainful about another.

There appears to be a two-step breakdown in critical thinking. Unlimited information, without logical training, leads to a crude form of skepticism in students. Everything is doubtful and everything is possible. Since that state of suspended commitment is not tenable, it is usually followed by an almost arbitrary dogmatism.

From Socrates through Descartes to Michael Shermer today, doubting is usually thought to be an emancipatory step in critical thinking. Moderate skepticism keeps your mind open and pushes you to find the evidence or principles supporting controversial claims and theories. Philosopher David Hume, however, described a crude form of skepticism that leads to paranoia and then gullibility. He saw a kind of breakdown in critical thinking that presages our own.

“There is indeed a kind of brutish and ignorant skepticism,” Hume wrote, “which gives the vulgar a general prejudice against what they do not easily understand, and makes them reject every principle, which requires elaborate reasoning to prove and establish it.” Paranoid people, Hume explained, give their assent “to the most absurd tenets, which a traditional superstition has recommended to them. They firmly believe in witches; though they will not believe nor attend to the most simple proposition of Euclid.”3

Absent the smug tone, Hume is onto something. Creationists have just enough skepticism to doubt evolution, climate deniers have just enough skepticism to doubt global warming, and millennial students have just enough to believe in the latest conspiracy theory, as well as ghosts, fortune telling, astrology, and so on. In the Creationism case, the crippled epistemology results from too little information but in my Chicago undergrads the gullibility results from a tsunami of competing informational options. For our students, settling on a conspiracy closes an otherwise open confusion loop, converting distressing complexity and uncertainty into a reassuring answer – even if that answer is “time travel” or “aliens” or “the Illuminati.”

Another motive seems to be lurking in the background too, and it is insidious. The millennial generation does not like being wrong. They are unaccustomed to it. Their education – a unique blend of No Child Left Behind, helicopter parenting, and oppression olympics – has made them uncomfortable with Socratic criticism. When my colleague recently corrected the grammar on a student’s essay, the student scolded him for enacting “microaggressions” against her syntax. So, conspiracies no doubt seem especially attractive when they help to reinforce a student’s infallibility. Having an alternate dimension of Mandela Effect realities, for example, means the student is never wrong. I’m always right, it’s just reality that keeps changing.

There are two cures for all this bad thinking. One of them is out of our hands as educators, and involves growing up. While the demands of adult life are sometimes delayed – as graduates return to live at home and postpone starting families – there is an inevitable diminution of conspiracy indulgence when one is struggling to pay a mortgage, raise children, and otherwise succeed in the quotidian challenges of middle-class life. Interestingly, however, this reduction in wacky thinking is not provided by the light of reason and educational attainment, but rather by the inevitable suppression or inhibition resulting from the demands of the workaday world. Our students at 30-something and 40-something are not converts to rationality, but merely distracted from their old adventures in gullibility.

The other cure for conspiracy thinking, and the problems of too little and too much information, is something we educators can provide. And it’s relatively cheap. We could require an informal logic course for every undergraduate, preferably in their freshman or sophomore year. The course should be taught by philosophers or those explicitly trained in logic. I’m not talking about some vague ‘critical thinking’ course that has been robbed of its logic component. I’m talking about learning to understand syllogisms, fallacies, criteria for argument evaluation, deduction, induction, burden of proof, cognitive biases, and so on. The informal logic course (as opposed to formal symbolic logic) uses real life arguments as instances of these fundamentals, focusing on reasoning skills in social and political debate, news, editorials, advertising, blogs, podcasts, institutional communications, and so on. The students are not acquiring these skills by osmosis through other courses. The skills need to be taught explicitly in the curriculum.

Studying logic gives students a way to weight the information that is coming at them. It gives them the tools to discriminate between competing claims and theories. In the currently frantic ‘attention economy,’ logic also teaches the patience and grit needed to follow complex explanations through their legitimate levels of depth. It won’t matter how sensitive to diversity our students become, or how good their self-esteem is, if a lack of logic renders them profoundly gullible. More than just a curative to lazy conspiracy thinking, logic is a great bulwark against totalitarianism and manipulation. The best cure for fake news is smarter citizens.

References:

1 Sunstein, C. R. and Vermeule, A. (2009), Conspiracy Theories: Causes and Cures. Journal of Political Philosophy, 17: 202–227. And see Runciman’s work on a 5-year funded research project called “Conspiracy and Democracy” at http://www.conspiracyanddemocracy.org

2 National Science Foundation, https://www.nsf.gov/statistics/seind14/index.cfm/chapter-7/c7h.htm

3 See David Hume’s Dialogues Concerning Natural Religion (Hackett, 2nd Ed. 1998), Part I.