
Gender Bias in STEM—An Example of Biased Research?

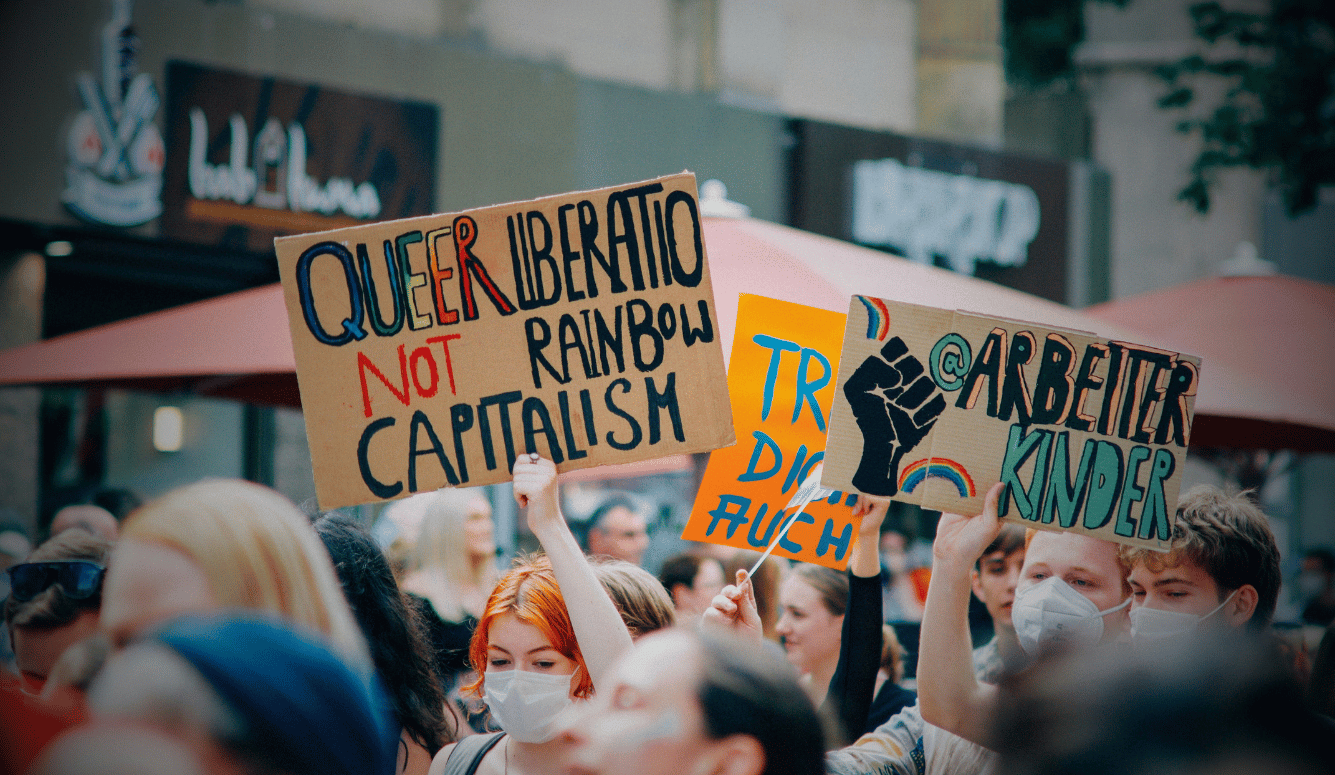


I don’t agree with everything in the infamous “Google Memo” written by James Damore, but I can understand why one might write such a memo after sitting through one too many training sessions on unconscious bias. I’m a professor in a STEM discipline, and like many STEM fields mine has substantially fewer women than men. Like every STEM professor that I know, I want my talented female students to have fair chances at advancing in the field. I’ve served on (and chaired!) hiring committees that produced “short lists” of finalists that were 50 percent women, I’ve recommended the hiring of female job applicants, I’ve written strong reference letters for female job applicants and tenure candidates, and I’ve published peer-reviewed journal articles with female student co-authors. At the same time, I’ve become increasingly frustrated by the official narratives promulgated about gender inequities in my profession arising from our unconscious biases. These narratives are, at best, awkward fits to the evidence, and sit in stark contradiction to first-hand observations.

My field is smaller than many other STEM fields, so for the sake of anonymity I will not name it, but all available data shows that the proportion of women in my discipline remains stable from the start of undergraduate studies and on through undergraduate degree completion, admission to graduate school, completion of the PhD, hiring as an assistant professor, and conferral of tenure. There have even been statistical studies (conducted by female investigators, FYI) showing that the number of departments with below-average proportions of women is wholly consistent with the normal statistical fluctuations expected from random chance in unbiased hiring processes. I cannot say that everyone in my field is perfectly equitable in all of their actions, but I can at least say that available evidence strongly suggests that the sexist actions of certain individuals do not leave substantial marks on the composition of our field. This should be a point of pride for us: Whatever sins might be committed by some individuals, as a community we have largely acted fairly and equitably in matters with tangible stakes for people’s careers.
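To make the "normal statistical fluctuations" point concrete, here is a minimal sketch of the underlying arithmetic. It is not the method used in the studies mentioned above. It assumes each hire is an independent draw from a pool with a fixed share of women, and both the 20 percent share and the department size of 10 are hypothetical numbers chosen purely for illustration.

```python
from math import comb


def prob_at_most(k, n, p):
    """Return P(X <= k) for X ~ Binomial(n, p).

    n: number of faculty in a department (hypothetical)
    p: field-wide proportion of women (hypothetical)
    k: number of women in the department
    """
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


# Hypothetical inputs, NOT data from any real field.
FIELD_SHARE = 0.20   # assumed field-wide proportion of women
DEPT_SIZE = 10       # assumed number of faculty in one department

# Chance that a perfectly unbiased process yields a department with no
# women at all, or with at most one woman (below the expected two).
p_none = prob_at_most(0, DEPT_SIZE, FIELD_SHARE)
p_at_most_one = prob_at_most(1, DEPT_SIZE, FIELD_SHARE)

print(f"P(no women)          = {p_none:.3f}")         # about 0.107
print(f"P(at most one woman) = {p_at_most_one:.3f}")  # about 0.376
```

Under these assumed numbers, roughly one department in nine would have no women at all even if every hiring decision were unbiased, which is why counting below-average departments says little on its own.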

Nor is my field unusual. In 2015, Professors Wendy Williams and Steven Ceci of Cornell University published a series of experimental findings in the Proceedings of the National Academy of Sciences (PNAS), and in their experiments they found that faculty reviewing hypothetical faculty candidates consistently preferred female candidates to male candidates. Moreover, Williams and Ceci cited literature showing that in real-world hiring women have an advantage over men.

My guess is that many readers will be surprised to hear me describe such findings. (After all, we’ve all sat through training sessions on purported biases in hiring processes.) Not being a social scientist myself, I cannot offer an in-depth defense of the work of Williams and Ceci, but I have searched in vain for informed critiques by experts. Alas, every critical summary that I’ve found reveals that the author did not actually read the paper. For instance, many people express incredulity at the assertion that real-world hiring data supports the finding of an advantage for female scientists in academic hiring. However, references 16 and 30-34 of the Williams and Ceci article make exactly that case. Are these references representative of the wider literature? Do they show data that was collected and analyzed via sound methods? I have yet to see a critic make that case, but if an informed expert can point to flaws in those references I would gratefully read their analysis.

Another common criticism is that Williams and Ceci ignored the famous “Lab Manager Study” of Corinne Moss-Racusin et al., also published in PNAS in 2012, which found that faculty were willing to offer higher salaries to hypothetical applicants for a lab manager position if the name on the resume was male rather than female. However, Williams and Ceci did not ignore this study; they actually cited it in the main text of the article (reference 6) and then discussed it at length on page 25 of the supplemental materials. The response of Williams and Ceci is that faculty hiring involves highly-accomplished applicants for high-status jobs, not less-accomplished new college graduates applying for lower-status jobs, and so different psychological factors may come into play when people are evaluating the prospective hires. Are they right? I don’t know enough about the relevant psychological literature to venture an informed opinion, but I’d love to read a response by a critic who acknowledges that Williams and Ceci actually discussed these findings, rather than one who dismisses them by asserting that they ignored the work of Moss-Racusin.

So, although I cannot assert with complete confidence that STEM fields are wholly free of sexism, I can point to strong evidence that disparities in STEM are not driven by hiring bias, and I must regretfully note that there has been little informed engagement with such findings. It is not intellectually healthy to have so little informed, critical dialogue around work with potentially high significance for such an important issue. Meanwhile, for those of us working in STEM, it is demoralizing to see that when researchers find evidence that we are working actively and fruitfully to remedy gender gaps in our profession, the response is not to celebrate our success but rather to offer uninformed critiques. It seems to be impermissible to question whether our purported sexism continues to drive inequality in our community.

If this were just about one study then we could (and should) react with stiff upper lips, and not let it colour our perception of the debate around gender in the STEM disciplines. Alas, there is a pattern (bias?) in research on bias in academic science. For instance, in the same year that PNAS published the work of Williams and Ceci, the journal also published a study of gender bias in science by van der Lee and Ellemers, purportedly showing that female scientists in the Netherlands are more likely than male peers to have their grant proposals rejected. However, the numbers provided in the article clearly show that the disparities in funding success result from how women are distributed among disciplines, not from differential treatment of men and women in the review process: Women in the Netherlands are more likely to be in fields like biology (with low funding success rates) than physics (with comparatively higher funding success rates), but within each field women and men have similar success rates for their grant proposals. This point was quickly noted by a reader, and the editors of PNAS published a critical comment within two months of the original article’s publication.
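The statistical effect described above (equal success rates within each field, yet unequal rates once the fields are pooled) is often called Simpson's paradox. The sketch below shows how it can arise. The counts are invented for illustration and are not the figures from van der Lee and Ellemers or from any funding agency; the field names simply echo the example in the text.

```python
# Hypothetical counts only: (applications submitted, applications funded).
applications = {
    "physics": {"women": (20, 6), "men": (80, 24)},   # higher-success field
    "biology": {"women": (80, 12), "men": (20, 3)},   # lower-success field
}


def success_rate(applied, funded):
    """Fraction of applications that were funded."""
    return funded / applied


def within_field_rates(data):
    """Success rate for each group, computed separately inside each field."""
    return {
        field: {group: success_rate(*counts) for group, counts in groups.items()}
        for field, groups in data.items()
    }


def pooled_rates(data):
    """Success rate for each group after lumping all fields together."""
    totals = {}
    for groups in data.values():
        for group, (applied, funded) in groups.items():
            prev_applied, prev_funded = totals.get(group, (0, 0))
            totals[group] = (prev_applied + applied, prev_funded + funded)
    return {g: success_rate(a, f) for g, (a, f) in totals.items()}


print(within_field_rates(applications))
print(pooled_rates(applications))
```

With these made-up counts, women and men succeed at identical rates inside each field (30 percent in physics, 15 percent in biology), yet the pooled rates come out to 18 percent for women and 27 percent for men, purely because more of the hypothetical women applied in the lower-success field.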

Perhaps it is a sign of healthy scientific communication when published work sparks informed discussion of alternative explanations and the journal editors make room for that discussion, but it is worrisome that such a basic error was allowed to slip through the initial review process. It’s even more worrisome when one examines the “Acknowledgments” section of the Williams and Ceci article, which I will quote in part: “We thank [names of colleagues who provided advice], seven anonymous reviewers, one anonymous statistician who replicated our findings, and the editor.” It is very unusual for an article to be reviewed by seven separate peer reviewers before publication (the most I’ve ever had was four, and I’ve published in some rather high-impact journals), and even more unusual for a journal to insist that the raw data be sent to an anonymous statistical consultant for independent verification of the results. One cannot help but wonder if Williams and Ceci were held to a higher standard than van der Lee and Ellemers because Williams and Ceci offered work that contradicted a common narrative while van der Lee and Ellemers offered work that allegedly affirmed the conventional wisdom.

To put these articles in context, keep in mind the place that PNAS occupies in the hierarchy of academic journals. PNAS is not merely a high-status, high-impact, widely-read journal. There are many such journals; indeed, every field of science has at least one such publication venue (and often more than one). What makes PNAS stand out is that it’s one of the few well-respected journals to publish work spanning the entire breadth of science and engineering, ranging from psychology to materials engineering to marine biology. My colleagues and I don’t usually read psychology journals but we do read PNAS. It’s unlikely that we’ll ever have a lunch conversation about an article published in a specialty venue for social scientists, but it’s entirely possible that we’ll pass a lunch time discussing some social science finding published in PNAS. An editorial slant in such a respected and well-read journal will have consequences for the narratives that gain traction in our field.

So much for the big picture. What about the small scale? Everyone has heard anecdotes about sexist treatment of women, and I confess that I’ve witnessed a few such incidents. (I tried to do what I could when I witnessed them, but it isn’t always easy to process what you’ve seen quickly enough to respond in a timely fashion, especially when issues of power and status loom large.) At the same time, I’ve also witnessed compensatory measures, and even over-compensation. I’ve seen “diverse” colleagues get away with conduct bordering on fraud because nobody wanted to call them out for it. I’ve seen middling female students lavished with praise and encouragement when they were ambivalent about whether to apply to graduate school, while similarly weak male students were met with (quite appropriate!) skepticism about their interest in graduate study. I’ve seen hiring committees bend over backwards to paper over a female applicant’s weaknesses while rigorously critiquing a male applicant.

Of course, I’ve seen white and male colleagues get away with certain things as well, so I can’t say that the situation is entirely one of “reverse sexism” or “political correctness” or some such thing. What I can say is that my ground-level observations are largely consistent with the big-picture data: Sexist things do happen, but people work conscientiously to compensate and even over-compensate, resulting in an employment landscape that is at the very least level and often somewhat favorable to women. But it is impermissible to vocalise this observation, so we are left with no choice but to nod and agree as we are scolded for shameful internal biases that allegedly leave their mark on our professional community, a community that many of us care deeply about improving.

This can only go on for so long before people push back. I certainly have my criticisms of Damore’s arguments, and I would be the first to agree that he is clueless about how to navigate workplace politics. Nonetheless, if we keep hearing that conscientious and hard-working people are at fault for gender gaps, disparities that they themselves have actively worked to combat and that they have even seen peers perhaps over-correct for, eventually people will start responding with something other than enthusiastic confessions of privilege and bias. People will start pointing to contradictory data, and even sympathetic people might start grumbling about excesses of political correctness that they may have witnessed. Some of us will do it pseudonymously, both for our own comfort and the comfort of co-workers, but some people will do as James Damore did and speak out under their own names, making the workplace uncomfortable (to put it mildly).

We have a choice before us. One option is to celebrate the progress that has been made, stop pointing the blame at the alleged biases of conscientious people, and steer the conversation to the true origin of disparities, earlier “in the pipeline” as they say. The other option is to keep admonishing generally well-meaning professionals to stop behaving in such an allegedly biased manner, and then act shocked and scandalised when somebody draws attention to the countervailing data. The first path will mean fewer silly training sessions, but it might also mean awkward conversations about how and why people become interested in different paths of work and study. Whether these factors arise from nature, nurture, or the interaction thereof, they come into play long before anybody gets to a STEM career, and moving past bias explanations means that people who are concerned about the makeup of the profession will have to be able to confront these questions. The second path will avoid those awkward conversations, but at the cost of resentment that might occasionally pour out. I can’t speak for everyone in STEM, but as a scholar I’d rather see conscientious people confront data and discuss its implications, not paper over it with misplaced blame.