
Elon Musk, Mark Zuckerberg, and the Importance of Taking AI Risks Seriously

With the very real possibility that such technology will be able to make changes to itself, even a slight diversion from goals that match our own could be disastrous.

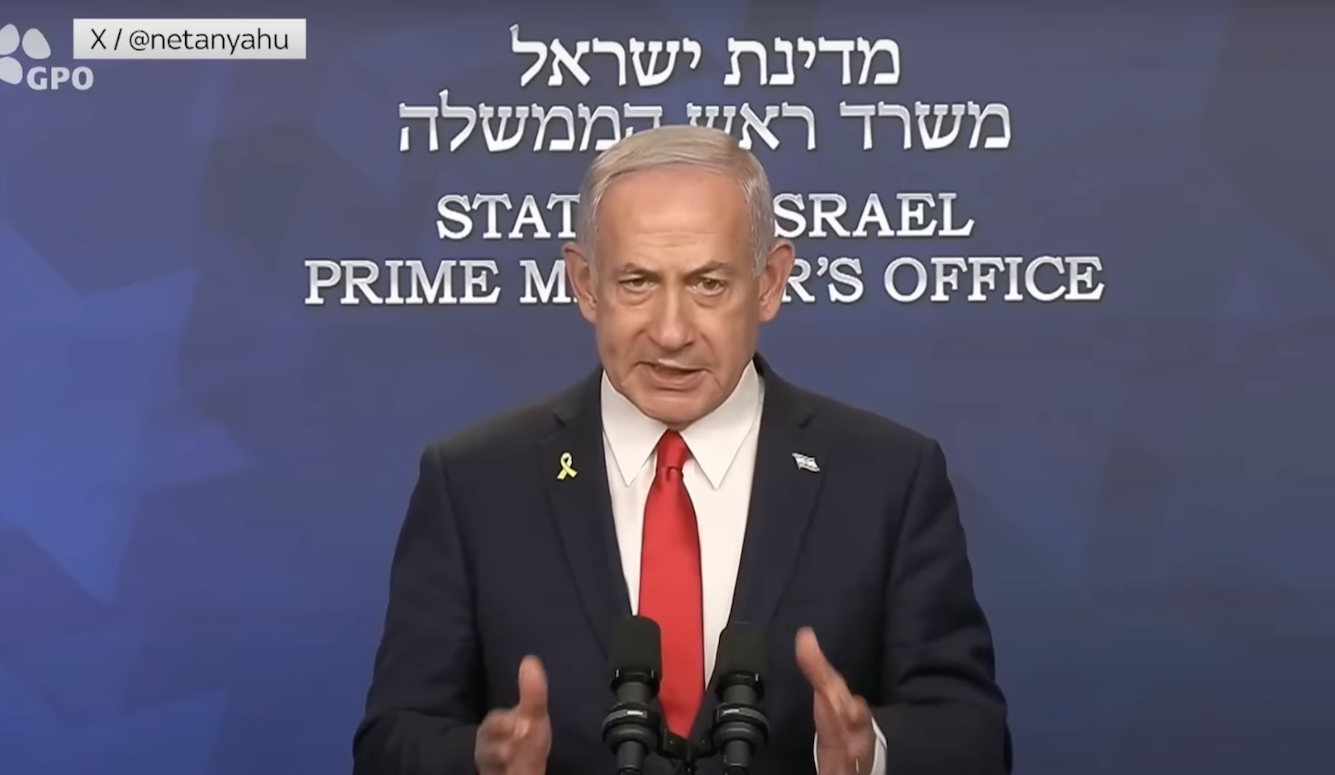

A recent public disagreement between Elon Musk and Mark Zuckerberg was picked up widely in the media. It concerned their vastly different views on the topic of artificial intelligence. Musk has been saying for years that AI represents ‘an existential risk for human civilisation’; Zuckerberg believes that such claims are ‘irresponsible’.

Mark Zuckerberg says Elon Musk is drumming up "doomsday scenarios" about artificial intelligence https://t.co/NB5qJrGQSi pic.twitter.com/A4L7roZvum

— FORTUNE (@FortuneMagazine) July 25, 2017

Some of those insisting that Elon Musk is wrong about AI focus on the benefits of artificial intelligence (as though the founder of Tesla isn’t aware of the benefits of, say, automated vehicles). It is undoubtedly true that AI has the potential to be the very best technological advancement in human history, and by a very large margin. Yet the obvious upsides do not somehow eliminate the possibility of existential risk.

Others decided that Musk is simply trying to promote his personal brand as a tech superhero, and that he isn’t concerned about the future of humanity at all. If true, this would reveal a disappointingly flawed human being – and reveal absolutely nothing regarding the problem of artificial intelligence safety.

From my perspective, the most worrying were the articles that dismissed the story as being inherently silly:

Geek fight! Musk says Zuckerberg naive about killer robots https://t.co/XDL8i1fNym pic.twitter.com/xX760BrIqX

— Reuters Science News (@ReutersScience) July 25, 2017

This meme, that anyone concerned about artificial intelligence is afraid of Terminator 2: Judgment Day becoming reality, is not new. But when communicated through respected platforms it represents dangerously lazy journalism.

From Musk to Stephen Hawking to Bill Gates, the serious thinkers who view artificial intelligence safety as arguably our most important problem are never talking about cartoonish killer robots. It may be that no level of engagement will convince many to align with their views – but we must have a baseline obligation to address genuine positions.

I think the proliferation of ‘killer robot’ headlines could be all but eliminated by everyone reading Nick Bostrom’s excellent book Superintelligence. But it seems that for many, this topic is so ridiculous that to even open such a book would be a little too close to joining a geeky cult.

So, if these weirdos aren’t worried about killer robots, what are they worried about?

Consider your reaction when a mosquito buzzes past as you’re trying to eat dinner with your family. It’s annoying, and if you manage to swat it – problem solved. Your family would not shriek with horror at the senseless murder of a living being, nor despair at the descent of a loved one to such an evil state. You would not be a killer-human, merely a human. The goals of the mosquito do not align with your goals, and for most of us it seems ethically unimportant to even pause to consider that difference. In aggregate, human beings don’t care about mosquitoes – at all. A superintelligence may not care about human beings – at all. The concern is not that we will face an army of Terminators bent on the destruction of humanity; it is super-intelligent indifference.

There is a natural inclination to be skeptical of the possibility of superintelligent AI. In his TED Talk ‘Can we build AI without losing control over it?’, Sam Harris argues that to doubt the possibility, or even the inevitability, of superintelligent AI, we need to find a problem with one of the following three premises:

1) Intelligence is the product of information processing.

2) We will continue to improve our intelligent machines.

3) We are not near the summit of possible intelligence.

As Harris points out, these concerns don’t even rely on creating a machine that is far smarter than the smartest human to have ever lived. Electronic circuits function about a million times faster than biochemical circuits. Therefore, a general AI whose intelligence growth stopped at a high human level would still think a million times faster than humans can. So, over the course of one week, it would produce roughly 20,000 years of human-level progress by virtue of speed alone, and it would continue to make progress at that rate week after week. Keep in mind that the idea that AI intelligence would stop at a human level is almost certainly a mirage. How long before this machine stands to us as we stand to insects?
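The 20,000-year figure is simple arithmetic, and it can be checked with a short sketch. The only input taken from the argument above is the million-fold speed-up; the function name and the weeks-per-year constant are illustrative assumptions, not anything Harris specifies.

```python
# Rough check of the "one week = ~20,000 years" claim.
# Assumption from the text: machine thought runs about 1,000,000x faster.
SPEEDUP = 1_000_000

# Assumption for illustration: average year length of 365.25 days.
WEEKS_PER_YEAR = 365.25 / 7


def subjective_years(wall_clock_weeks: float, speedup: float = SPEEDUP) -> float:
    """Human-equivalent years of thinking done in the given wall-clock weeks."""
    return wall_clock_weeks * speedup / WEEKS_PER_YEAR


if __name__ == "__main__":
    # One week of machine time, expressed in human-equivalent years.
    print(f"{subjective_years(1):,.0f} years")
```

One week at a million-fold speed-up is a million human-equivalent weeks, which comes to a little over 19,000 years. The article's 20,000 is that figure rounded up.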

With the very real possibility that such technology will be able to make changes to itself, even a slight diversion from goals that match our own could be disastrous. We simply don’t know how long it will take to develop the safety measures necessary to ensure that human flourishing remains a key feature of the technology, and with the first developers expecting unprecedented gains, we’re facing the risk of an arms race that treats this problem as an afterthought.

Even if this machine were created with perfect safety, and perfect value alignment, and it did whatever its creators asked of it – what would be the political consequences of its development? With around 14,000 nukes still scattered between the U.S. and Russia, the attitude today regarding the nuclear threat is alarmingly non-alarmist. But with a six-month head start on artificial general intelligence being so meaningful, it’s rational to suggest that Russia could justify a pre-emptive strike on Silicon Valley if they knew (or just suspected) Google was on the precipice of a key breakthrough on a technology that would likely give its developers global dominance. This seems an extraordinarily important question, and one that is often lost in sarcastic talk of killer robots.

Sometimes AI safety concerns are dismissed because of time. “Sure, that might all be true, but it won’t happen for 100 years.” Why would time be a relevant factor here ethically? When we talk about climate change, we’re not relieved to hear that catastrophic changes will occur 50 years from now.


Musk’s concerns are not an advertisement for a reality that mirrors Blade Runner or 2001: A Space Odyssey. It’s not just that Musk isn’t talking about a world where heroic humans will have to do battle with nefarious smarter-than-human robots – it’s that such a world could not exist. As soon as the best chess player in the world was a computer, there was no looking back; the best chess player in the universe will never be human again. If the being with the highest fluid intelligence is ever a computer, there will be no going back; the smartest being in the universe will never be human again. And once we have lost that control, the intelligence gap between ourselves and our creation will be so large as to make the idea of regaining it untenable. In this scenario, our only hope is that these smartest beings have goals and values that align with our interests – and our only hope for that outcome is to take this topic incredibly seriously.