
Evergreen State and the Battle for Modernity Part 2: True Believers, Fence Sitters, and Group Conformity


Over a month has passed since the Evergreen State fiasco drew national attention, and since then it appears that the college has only chosen to double down on the insanity. According to one report, in the wake of Professor Bret Weinstein’s appearance on Tucker Carlson’s show on Fox, many of his colleagues demanded his resignation for putting their community “at risk.” I will spare you the details, as they can be found all over the internet, but for a quick overview, head over to part 1 for a recap.

Instead, this essay attempts to answer one of the biggest questions that emerged from the previous article: how is it possible that so many people, including large cohorts of students and indeed entire academic disciplines, have been so bamboozled into believing postmodernist rhetoric, such as the claims that science is a symbol of the patriarchy (one such article is actually titled “Science: A masculine disorder?”) and that the concept of health is merely another tool of Western colonial oppression? In doing so, I will draw on established psychological principles and peer-reviewed research to reach some unsettling conclusions, which will hopefully provide something of a roadmap for tackling virulent, pathogenic ideologies that rob people of their reason and common sense.

Noted skeptic Michael Shermer, in his book Why People Believe Weird Things, identified two psychological phenomena that reinforce faulty thinking: attribution bias (the tendency to believe one’s own reasoning is better than others’) and confirmation bias (the tendency to seek out and favor evidence that confirms one’s existing opinion). But this doesn’t explain why the Evergreen State mob all seemed to act with one mind, or why, according to Heterodox Academy, 89% of academics appear to share similar (left-of-center) political views. Why do so many people share the exact same beliefs, no matter how weird they may be (see above)?

Asked differently, why do so many people conform around the same weird ideas?

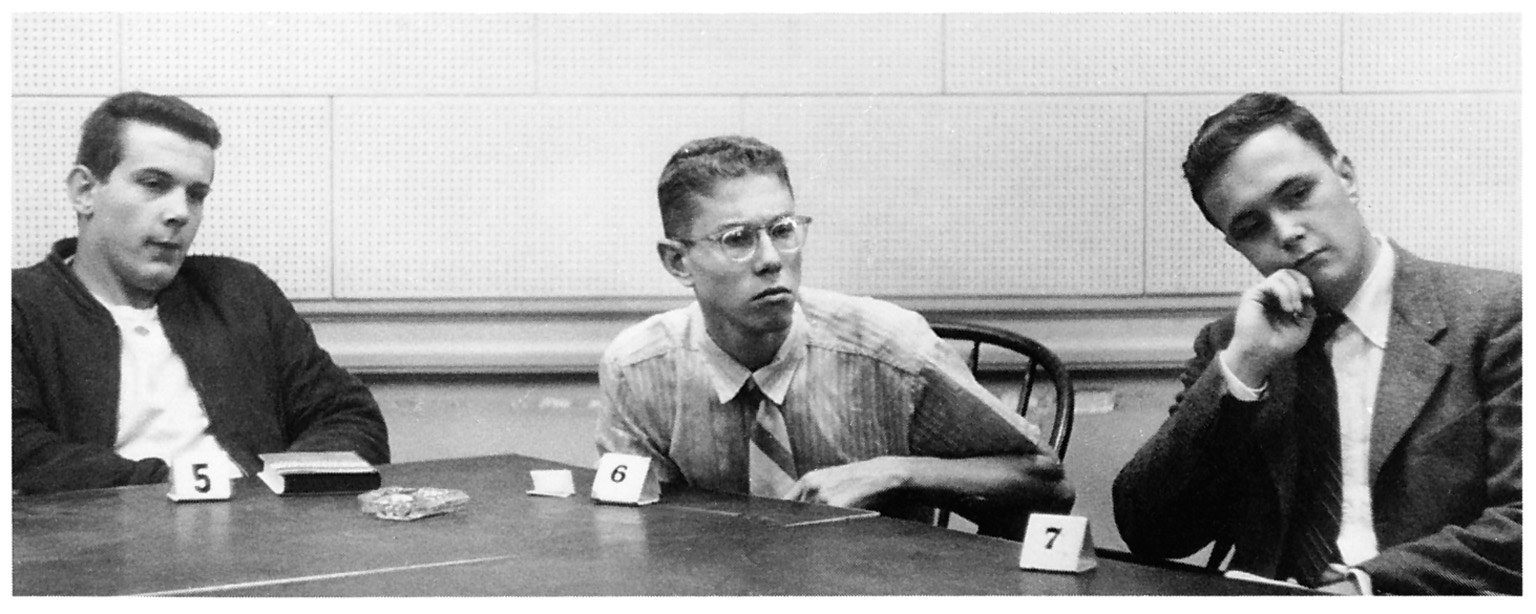

Some answers come from a series of experiments on conformity that psychologist Solomon Asch conducted in the 1950s. Each subject would enter a room and be seated with other participants who were, unknown to the subject, in on the experiment (so-called “confederates”). The group would then be shown a line segment and asked to identify the matching line from a set of three other segments of differing lengths, with each participant announcing their answer aloud.

Here’s where things got interesting. On a number of trials, the researcher instructed the confederates to deliberately give the wrong answer in order to see how the subject would respond when presented with blatantly false information. Surprisingly, 75% of subjects also picked the wrong answer at least once in order to match the other respondents (the confederates). When answering privately, participants were correct 98% of the time, indicating that in the group setting they had picked answers they could see were obviously wrong.

A 75% rate is so strikingly large that it might be tempting to dismiss the results of the Asch conformity experiments as a fluke. Except that similar results have been obtained in even more outrageous circumstances. In the infamous Smoke-Filled Room experiment of the late 1960s, participants were placed in a small room that slowly began filling with mysterious smoke. When alone, most participants got up and left the room to investigate and report it. However, when placed in the room with two or three other individuals who were in on the experiment and instructed to act as if they did not notice the smoke, a whopping 90% of participants remained in their seats, coughing, rubbing their eyes, waving away fumes, and opening windows, but never leaving the room to report the smoke.

Other classic psychology experiments (from an era predating independent ethics committees) clearly show that conformity is a powerful force in how groups form and function. Studies ranging from the Robbers Cave experiment to the Milgram obedience experiment (which found that 65% of subjects administered what they believed were maximum-level shocks to another participant when directed by an authority figure) and the Stanford Prison experiment have demonstrated the lengths to which individuals will go to conform. Although these studies’ methodologies (and ethical standards) do not hold up today, they still offer some insight into why even large groups so often share uniform and sometimes bizarre viewpoints.

Surely, the college town setting must feel like an intense pressure cooker for those who choose to abstain from conformist thinking. As more and more individuals adopt the dominant viewpoint, the pool of abstainers shrinks, and the pressure on the dwindling minority to conform, or risk banishment from the in-group entirely, only grows. In this way, the small towns and communities in which most colleges are located effectively serve as stand-alone conformity experiments in action.

In his seminal book The True Believer: Thoughts on the Nature of Mass Movements, Eric Hoffer describes the “true believer” as a discontented person seeking to hand control of their life over to a strong leader or ideology. In this way, they seek “self-renunciation” by subsuming their own beliefs and ideas into a larger collective. Most importantly, the true believer identifies with the movement so strongly, indeed needs the movement to fulfil some psychological requirement, that when presented with contrary evidence, the true believer has no recourse but to double down and intensify the belief.

This is exactly what happened with the Seekers, a Chicago-based cult that predicted an end-times alien cataclysm would occur on December 21, 1954. The disciples sold all their property in anticipation of the apocalypse, but when the event didn’t occur, instead of reflecting on where they had gone wrong, they courted the press in order to draw more attention and attract more converts to their cause. Stanford psychologist Leon Festinger, who studied this case, summarized it this way: “A man with a conviction is a hard man to change. Tell him you disagree and he turns away. Show him facts or figures and he questions your sources. Appeal to logic and he fails to see your point.”

This case would seem to indicate that trying to convince the true believer is a lost cause. But large movements do not consist only of true believers. In fact, I would argue that true believers (along with the people at the very top, who are often grifters, but I digress) make up only a small percentage of any movement. Look at the vilest episodes in history, such as the Nazi period, and a similar pattern emerges: an ideology may have enjoyed broad, soft support, but only a tiny fraction of its supporters were directly responsible for the movement’s actions.

It is this soft underbelly that deserves further consideration and is most directly relevant to the research on conformity discussed above. I call this group the “Fence Sitters,” since they may be operating on unscrutinized beliefs but, like the subjects in the Asch and Smoke-Filled Room studies, merely want to fit in and conform to group standards. As a result, they may be much more likely to change their opinions when exposed to alternative group norms. Indeed, one of Asch’s most important findings was that levels of conformity depended on group size: conformity increased with the size of the majority, but levelled off once the group reached four or five. Conformity also increased when other group members were perceived to have higher social status.

Taking these conclusions into consideration, the university/college setting is the conformist’s wet dream. From the very first day, freshmen are exposed to the ideas and beliefs of authority figures (professors) and older peers (upperclassmen), and often encounter these same ideas in small-group breakout sessions. In this environment, most young, impressionable students are powerless to combat or contradict any idea thrown their way, no matter how nonsensical. With this picture-perfect setup, one could conceivably convert an entire cadre of young minds into believing anything from the earth being flat to biological sex being a social construct (oh wait). The ideas themselves don’t matter; they are merely interchangeable software. It is the setting, the hardware itself, that acts as a powerful meme-generating machine, and it is the setting that must be changed before it breaks away from human design and takes on a life of its own, as it has at Evergreen State.

But just because people state they believe in an idea doesn’t mean they internally do. Psychologists have identified several different types of conformity. For example, normative conformity refers to going along with group norms in order to fit in or avoid rejection, often without privately agreeing, while informational conformity is when a person looks to the group to decide what to think or believe. The aforementioned fence sitters seem to draw on both: some lack strong beliefs and simply take their cues from the group, while others privately hold beliefs that contradict the prevailing attitudes but go along with groupthink merely to fit in. This group may be very large and may account for some of the most interesting social phenomena of recent decades.

Social scientist Timur Kuran, in his book Private Truths, Public Lies, identifies a concept he calls “preference falsification,” in which individuals articulate preferences that are socially appropriate but do not reflect what they truly believe. This helps explain why movements such as the Russian and Iranian Revolutions took observers completely by surprise. Most recently, the United States was stunned when Donald Trump defied nearly every major poll to win the presidential election. The theory of preference falsification suggests that large undercurrents of sentiment exist beneath the surface of public discourse and need only be tapped for the floodgates to open and large-scale change to take place.

Let’s now take all of these ideas to their natural conclusions in order to understand how modernists can combat dangerously ridiculous belief systems, within the academy and without:

1) The campus is an ideal environment for conformity creep, which starts with professors and then spreads quickly to the student body.

2) A high percentage of individuals will state things publicly that they know are patently wrong in order to conform.

3) Based on historical precedent, these conformists likely make up a much larger share of any movement than true believers do.

4) These “fence sitters” may quickly change their opinions en masse if they feel enough social support to challenge the conformist status quo.

5) Because conformity is highest when individuals are exposed to the views of groups of at least four or five, it is imperative that fence sitters be exposed to numerous voices presenting contradictory evidence.

So, what are the broad implications? I can explore this further in a part 3 if there is enough interest, but in brief, opponents of postmodernism-run-amok must continue to articulate their viewpoints as clearly and as often as possible. All of the articles, commentaries, and essays add up. People need to see that there is a large movement of individuals who reject anti-science thinking, so that it becomes safer to align with them. In addition, universities should require all humanities students to take at least one or two classes in the hard sciences in order to expose them to alternative viewpoints, such as, you know, science. I’m sure other readers and commenters will have their own ideas to add, but hopefully this essay provides a rough framework and a clear justification for building an organized modernist opposition to pseudoscience. The stakes have never been higher.