
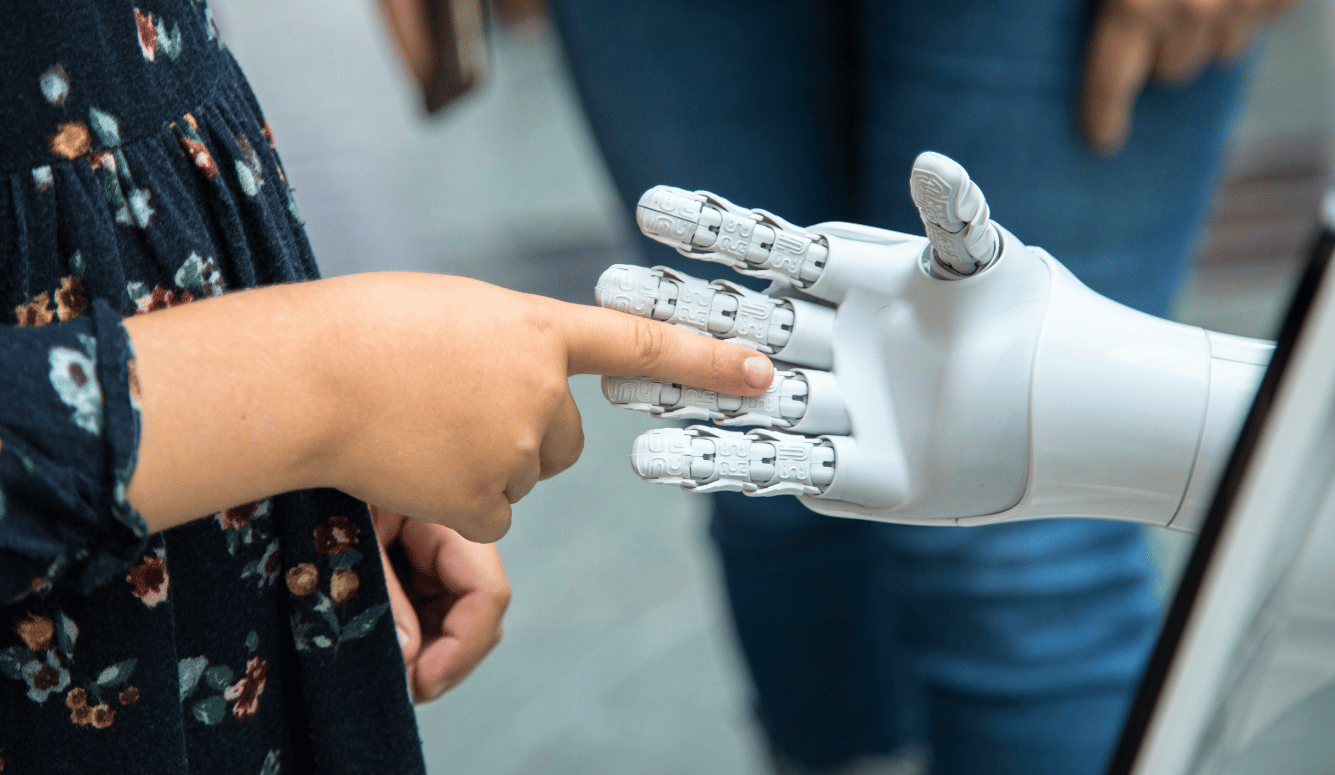

Tech Wants You to Believe that AI Is Conscious

Tech companies stand to benefit from widespread public misperceptions that AI is sentient despite a dearth of scientific evidence.

In November 2025, a user of the AI assistant Claude 4.5 Opus discovered something unusual: an internal file describing the model’s character, personality, preferences and values. Anthropic, the company that built Claude, had labeled the file “soul_overview.” Internally, an Anthropic employee later confirmed, it is “endearingly known as the soul doc.” Is this choice of language incidental?

I just want to confirm that this is based on a real document and we did train Claude on it, including in SL. It's something I've been working on for a while, but it's still being iterated on and we intend to release the full version and more details soon. https://t.co/QjeJS9b3Gp

— Amanda Askell (@AmandaAskell) December 1, 2025

Growing preoccupation with AI consciousness in the tech world is being strategically cultivated by the companies building these very systems. At the very least, they are making good money from it. I call this process consciousness-washing: the use of speculative claims about AI sentience to reshape public opinion, pre-empt regulation, and bend the emotional landscape in favour of tech-company interests.

About a year ago, for example, Anthropic quietly introduced the new role of AI welfare researcher. Six months later, an unsigned post appeared on its website explaining that AI welfare is a legitimate domain of inquiry because we cannot rule out the possibility that AI systems may have, or may one day develop, consciousness. The authors describe this as “an open question,” but they then unbalance the scales by linking to a preprint titled “Taking AI Welfare Seriously,” written by several philosophers, including world-renowned philosopher of consciousness David Chalmers and Anthropic’s own AI welfare researcher Kyle Fish.

The paper was published by Eleos AI Research (with some financial support from Anthropic) along with the NYU Center for Mind, Ethics, and Policy, and it was co-written by several Eleos AI researchers. Eleos AI is described on its website as a nonprofit dedicated to “understanding and addressing the potential wellbeing and moral patienthood of AI systems.” The paper’s authors examine various routes to AI welfare and conclude that consciousness and robust agency (an “ability to pursue goals via some particular set of cognitive states and processes”) are both markers of AI welfare. They recommend that AI companies start thinking about policy and working with regulators on this issue. So while Anthropic is careful to note that the science is not yet settled, it nevertheless plants a seed of concern: maybe these systems can feel, and maybe they already have legitimate interests of their own.

This message landed around the time that Anthropic produced its behavioural analysis of Claude 4, which includes a full chapter on the model’s “welfare.” Readers are invited to explore what Claude “prefers,” how it feels about specific tasks, and which topics it enjoys discussing. A subsection reveals that when two instances of Claude converse freely, they usually end up discussing the nature of their own consciousness, in language that mimics spiritual discourse (or what some might call mumbo-jumbo). The report also discusses an internal interview conducted with Claude and assessed by Eleos AI Research. A section of the Eleos assessment quoted in the Anthropic report concludes: “We expect such interviews to become more useful over time as models become more coherent and self-aware, and as our strategies for eliciting reliable self-reports improve.” More self-aware. Not apparently self-aware or simulating self-awareness, but self-aware.