
Terminators and Parrots: AI in the Era of GPTs

The hyperbole surrounding AGI misrepresents the capabilities of current AI systems and distracts attention from the real threats that these systems are creating.

The debate over whether or not powerful artificially intelligent agents are likely—or even possible—has been raging since the construction of the first digital computers in the late 1940s. Alan Turing, who established the mathematical foundations of computer science, did not attempt to define general intelligence, but he offered an operational test in place of a definition. The Turing Test involves placing a computer behind one curtain, and a person behind another. A second person communicates with both through a keyboard. If the second person is unable to distinguish which interlocutor is human and which is a machine, then we can describe the machine as intelligent. The Turing Test was once widely (but by no means universally) accepted as a reasonable procedure for identifying general intelligence. However, the fluency of Generative Pre-trained Transformers’ (GPTs’) natural language production leaves the usefulness of the Turing Test open to question.

On 2 April 2025, Google DeepMind released a 145-page report proposing safety measures to minimise the harm that Artificial General Intelligence (AGI) models might cause when they appear. According to the report’s authors, we should expect these models by 2030. The report anticipates the rise of AGI in the following terms:

Timelines: We are highly uncertain about the timelines until powerful AI systems are developed, but crucially, we find it plausible that they will be developed by 2030.

Implication: Since timelines may be very short, our safety approach aims to be “anytime”, that is, we want it to be possible to quickly implement the mitigations if it becomes necessary. For this reason, we focus primarily on mitigations that can easily be applied to the current ML pipeline.

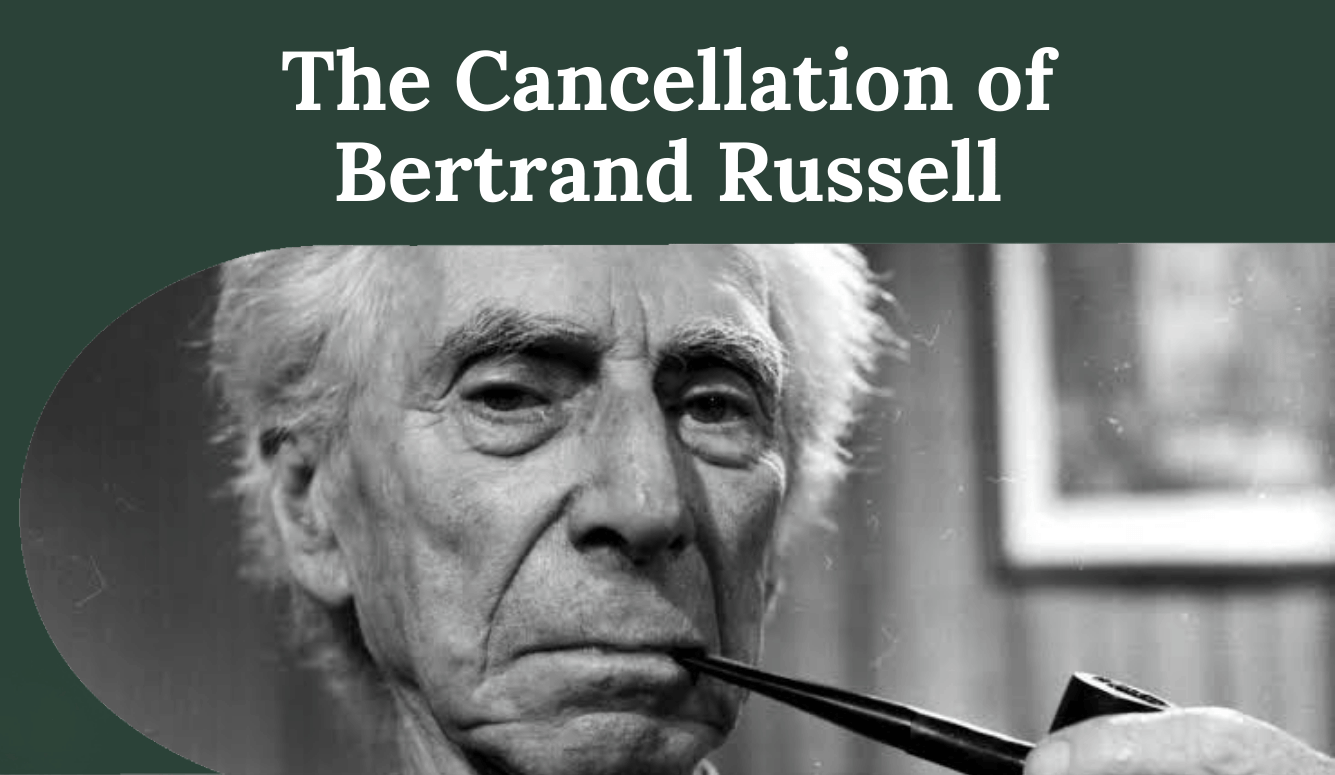

At the other extreme of the AGI discussion, Emily Bender and Alex Hanna’s 2025 book The AI Con: How to Fight Big Tech’s Hype and Create the Future We Want and Bender et al.’s 2021 paper “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” dismiss current AI systems as devoid of serious learning or inference capacity. They argue that the apparently impressive ability of multimodal Large Language Models (LLMs) to solve traditional AI tasks (such as image identification and description, machine translation, natural-language generation, and information retrieval) is a consequence of the vast amount of data on which they are trained. They describe these models as “stochastic parrots,” which can do little more than return their training data.

Unfortunately, discussions about AI, like discussions about politics, are increasingly dominated by hyperbole and extreme views. This polarisation is captured by Google DeepMind’s overheated expectations concerning the imminent arrival of AGI on one side, and Bender et al.’s dismissive polemic insisting that GPTs lack any cognitively interesting dimensions on the other. Both approaches have well-established antecedents in the history of AI. Each one seriously misrepresents the properties and capacities of current AI systems.

It is certainly not the case that the large transformers that drive LLMs do nothing more than return the data on which they are trained. They perform complex inductive inferences, and they determine subtle probability distributions over possible situations in the context of previous sequences of events. These operations permit them to recognise abnormalities in X-rays and fMRIs with an efficiency that far surpasses the performance of human medical diagnosticians. They identify new protein molecules that support discoveries in biology and pharmacology.

LLMs have achieved high-quality multilingual machine translation across a remarkably large set of languages, in some cases through transfer of knowledge from digitally well-represented languages to comparatively low-resourced languages. They recognise images and audio patterns, and they describe their elements accurately. They generate sounds and images from text. They can produce reasonably decent computer code from natural language instructions, and they spot errors in programs. They are now generating novel proofs of difficult theorems, which human mathematicians have not managed to discover. They have defeated the human champions of elaborate strategy games, like Go. They are able to distinguish between authentic and forged paintings, and they can generate original musical compositions that score well on human assessment. They provide information retrieval and expert query systems that are highly accurate, and fluent in their natural-language responses.

This is only a sample of the areas in which these systems are able to perform cognitively challenging tasks at levels that greatly exceed human capacities in speed and accuracy. Given their range of abilities, it is entirely inaccurate to describe them as “stochastic parrots,” or to dismiss them as plagiarising cons. These systems constitute a major revolution in machine-learning methods. The engineering successes this revolution has facilitated are transforming most aspects of the environments in which we live and work.

However, it is also important to recognise the serious limitations of GPTs. They are not able to engage in deep, domain-general reasoning or planning, which is the core element of what we associate with general intelligence. Their performance declines in direct relation to the distance between the data that they have seen in training and the input to which they are applied in new tasks. Out-of-domain testing reveals the weaknesses of these models. If their massive pretraining is not supplemented by customised exposure to domain-specific information (fine-tuning), they do not, for the most part, do well at new kinds of tasks. In particular, they are not able to handle the ordinary real-world reasoning, generalised inference, and complex planning that humans perform with ease, as a matter of course.

It is not easy to define general intelligence, nor is it clear that we will ever achieve a precise scientific theory that captures it. We can recognise intelligent behaviour when we see it, but we cannot define it. Turing was therefore right not to attempt such a definition and to suggest an operational criterion instead. In my book Understanding the Artificial Intelligence Revolution: Between Catastrophe and Utopia, I follow Turing’s lead in adopting a set of diagnostics for identifying conduct that exhibits general intelligence. These pick out behaviour that (i) is goal directed, (ii) is volitional, and (iii) applies rational and efficient methods for planning to achieve objectives over a wide range of domains. While GPTs and other deep-learning models are goal directed, they are not volitional, and they do not use domain-general rational planning techniques.

It is important to recognise that these diagnostic markers of general intelligence do not entail internal mental states or consciousness in an agent. The philosophers David Chalmers and John Searle both identify intelligence with such states. Chalmers claims that AI systems can achieve them while Searle disagrees. In fact, there is no reason to assume that non-human agents, electronic or biological, have human-like mental states as a condition of intelligence. In the case of computational models, it seems farfetched to assume that they would. It is possible for an agent to satisfy my three proposed behavioural indicators of intelligence without having internal mental states of any kind. Separating the question of consciousness from general intelligence gives us a clearer view of what is involved in postulating intelligent artificial agents.