
AI and the Transformation of the Human Spirit

The first stage of grief is denial, but despair is also misplaced.

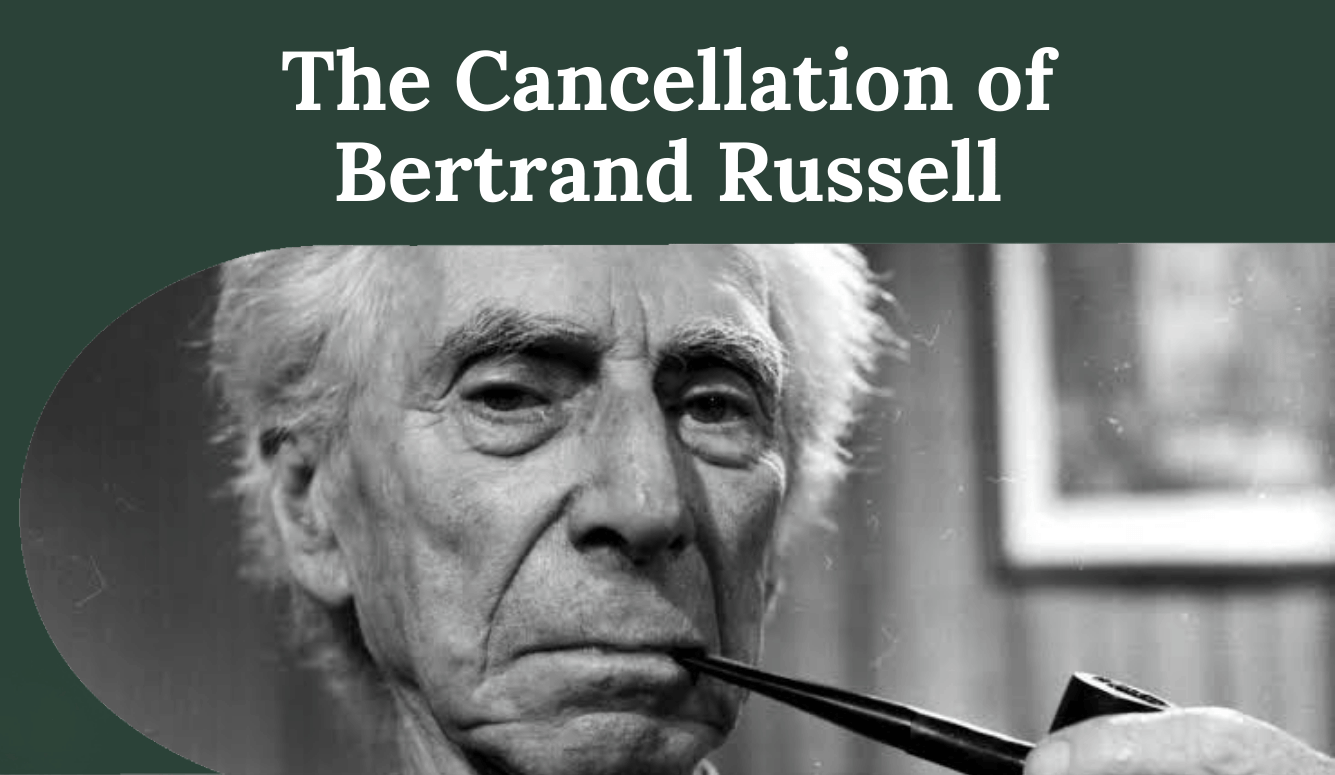

The human approach to computers has long been to sniff that we are still superior because only we can ___ (fill in the blank). The objection goes back to the 19th century and the mathematician Ada King, Countess of Lovelace. After she wrote the first computer program, a means of calculating Bernoulli numbers on Charles Babbage’s Analytical Engine, she declared that human thought would always be superior to machine intelligence because we alone are capable of true originality. Computers are purely deterministic, she insisted, predictably and mechanically churning out their results. Yes, a pocket calculator might be quicker and less prone to errors, but it is doing no more than what we can do more slowly with a pencil and paper. It is certainly not conceiving of original theorems and proving them.

When Deep Blue beat chess champion Garry Kasparov in 1997, Kasparov immediately accused the IBM scientists behind Deep Blue of cheating, precisely because he saw true originality and creativity in the computer’s moves. This is King’s complaint all over again—only humans are really creative, and a computer that appears to be must secretly have a little man behind the curtain pushing buttons and pulling levers. Kasparov is not the only one. When AlphaGo defeated Go champion Lee Sedol, he said that the computer’s strategies were so inventive that they upended centuries of Go wisdom. Even Alan Turing rejected King’s argument back in 1950, observing that computers had often surprised him with what they did, and if originality was unpredictability, then computers had it.

At this point in the development of artificial intelligence, software is better than nearly every human being at a huge range of mental tasks. No one can beat DeepMind’s AlphaGo at Go or AlphaZero at chess, or IBM’s Watson at Jeopardy. Almost no one can go into a microbiology final cold and ace it, or pass an MBA final exam at the Wharton School without having attended a single class, but GPT-3 can. Only a classical music expert can tell genuine Bach, Rachmaninov, or Mahler from original compositions by Experiments in Musical Intelligence (EMI). AlphaCode is now as good at writing original software to solve difficult coding problems as the median professional in a competition of 5,000 coders. There are numerous examples of AIs that can produce spectacular visual art from written prompts alone.

One would think that by now the casual self-confidence in human creative superiority would have been destroyed long ago. But no. English PhDs continue to declare themselves unimpressed by GPT-3’s writing. EMI’s music isn’t as good as Bach, scoff the music critics, and Midjourney AI isn’t Picasso. Those criticisms, if not made in bad faith, seem to be born of desperate fear. GPT-3 is a better writer than the average first-year student at a typical state college. Most people certainly couldn’t write a competent poem in Anglo-Saxon on the superiority of Rottweilers as a dog breed, or a grocery list in the style of Edgar Allan Poe (prompts I successfully tried on GPT-3).

Critics have backed away from “all humans are better at any task than computers” to “the most expert humans are better at some tasks than computers.” Now trapped in their ever-shrinking corner of pride, they insist that AI is doing no more than copying human excellence, or assembling a bricolage of what we have already done. That is not how it works, but even if it were, the remixings of hip-hop musicians and the collages of Robert Rauschenberg are still genuinely creative works of art. Furthermore, the apparent expectation that true AI will display its own sui generis style sets a standard that few, if any, human beings meet. No composer, artist, writer, scholar, or even coder lacks influences.

More troubling is the widespread ostrich approach to the AI future. Too many critics are seeing the infant Hercules and harrumphing that he cannot beat them at wrestling. It is astonishing that so many skeptics think this is just a parlor trick, or a handy invention like the cordless drill or Wikipedia, instead of trying to imagine what it might be like in five, 10, or 20 years. To pick another metaphor, we are witnessing Kitty Hawk, 1903. The wrong response is to insist that we will never get to the moon. If you think, “I am ever so smart and talented and no language model will ever write better and more inventively than I can,” spare a minute to consider that Garry Kasparov and Lee Sedol entertained similar thoughts. When Watson beat Ken Jennings at Jeopardy, Jennings said, “I for one welcome our computer overlords.” That is a far more realistic attitude.

Alexander Winton, who built and sold the first gasoline-powered car in America, recalled being told by his banker, “You’re crazy if you think this fool contraption you’ve been wasting your time on will ever displace the horse.” A newspaper columnist of the time wrote, “The notion that [automobiles] will be able to compete with railroad trains for long-distance traffic is visionary to the point of lunacy. The fool who hatched out this latest motor canard was conscience-stricken enough to add that the whole matter was still in an exceedingly hazy state. But, if it ever emerges from the nebulous state, it will be in a world where natural laws are all turned topsy-turvy, and time and space are no more. Were it not for the surprising persistence of this delusion, the yarn would not be worthy of notice.” The analogy should be obvious. If there is one trait that seems persistently human, it is a lack of imagination and foresight that the status quo might ever change profoundly, even when the agent of that change is on your doorstep.

Thomas Edison, for one, foresaw the horseless future of transportation, but of course he was visionary to the point of lunacy. Some people do get it about AI; musician, music producer, and YouTuber Rick Beato flatly states that right now AI can write competitive pop music. “You have no idea what’s coming next,” reports Alex Mitchell in Billboard magazine. He is the founder/CEO of Boomy, a music-creation platform that can compose an instrumental at the click of an icon. In the latest issue of Communications of the Association for Computing Machinery, computer scientist Matt Welsh sees the handwriting on the wall for coders. “It seems totally obvious to me,” he writes, “that of course all programs in the future will ultimately be written by AIs.” He advises computer scientists to evolve their thinking, instead of just sitting here waiting for the meteor to hit.

None of this is going to stop philosophers from touting our consciousness and denying it to silicon machines. For sure, the nature of consciousness is a deep and difficult problem, but at this point, obsessing about consciousness is like focusing on the superiority of equine mentality in 1900. “Horses have their own mind and free will; they can make decisions based on environmental and situational conditions—no car is ever going to do that. Cars are just clinking, clanking gears and pistons, no warmth, no companionship, no bonding like that between a man and his horse.” True or not, it just didn’t matter; cars weren’t merely faster horses. They were a completely different kind of thing, better in every way that mattered. Automobiles changed the world in a way that even Winton never anticipated. AI will transfigure our future in a way that we cannot predict, any more than we could have predicted that ARPANET’s descendants would slay brick-and-mortar shopping, revolutionize publishing, and give rise to social media.

Researchers truly worried about the AI future largely dread the singularity—the risk of a recursively self-improving superintelligence possessing goals unaligned with those of humanity. Welsh reports that no one knows how today’s large AI models work, and they are capable of doing things that they were not explicitly trained to do. Reliable critics like Gary Marcus continue to insist (correctly) that the AI systems we have now are not the holy grail of Artificial General Intelligence (AGI), and doubt that the research programs they represent will lead to AGI. These critics may be right about both points, but there is a real sense in which it just doesn’t matter. There is still plenty to concern us in the near term, even if the singularity event never comes to pass, and even if AGI turns out to be generations away.

One problem is that AI will turbocharge malevolent agents spreading misinformation, fraudulent impersonations, deep fakes, and propaganda. Russian troll farms will soon look quaintly artisanal, like local cheese and hand-knitted woolens, replaced by an infinite troll army of mechanized AI bots endlessly spamming every site and user on the Internet. Con artists no longer need to perfect their art when the smoothest bullshitter in the world is available at the push of a button. Bespoke conspiracy theories with the complexity and believability of an Umberto Eco novel will be generated on demand. To paraphrase Orwell, “If you want a picture of the future, imagine a bot stamping on a human face—forever.”

A second problem is the risk of technological job loss. This is not a new worry; people have been complaining about it since the loom, and the arguments surrounding it have become stylized: critics are Luddites who hate progress. Whither the chandlers, the lamplighters, the hansom cabbies? When technology closes one door, it opens another, and the flow of human energy and talent is simply redirected. As Joseph Schumpeter famously said, it is all just part of the creative destruction of capitalism. Even the looming prospect of self-driving trucks putting 3.5 million US truck drivers out of a job is business as usual. Unemployed truckers can just learn to code instead, right?

Those familiar replies make sense only if there are always things left for people to do, jobs that can’t be automated or done by computers. Now AI is coming for the knowledge economy as well, and the domain of humans-only jobs is dwindling absolutely, not merely morphing into something new. The truckers can learn to code, and when AI takes that over, coders can… do something or other. On the other hand, while technological unemployment may be a long-term condition, the problem it poses might be short-term. If our AI future is genuinely as unpredictable and as revolutionary as I suspect, then even the sort of economic system we will have in that future is unknown.

A third problem is the threat of student dishonesty. During a conversation about GPT-3, a math professor told me, “Welcome to my world.” Mathematicians have long fought a losing battle against tools like Photomath, which lets students snap a photo of their homework and then instantly solves it for them, showing all the needed steps. Now AI has come for the humanities and indeed for everyone. I have seen many university faculty insist that AI surely could not respond to their hyper-specific writing prompts, or assert that at best an AI could only write a barely passing paper, or appeal to this or that software that claims to spot AI products. Other researchers are trying to develop encrypted watermarks to identify AI output. All of this desperate optimism smacks of nothing more than the first stage of grief: denial.

While there is already a robust arms race between AI and AI-detection, we’re only trying to stuff the genie back into the bottle; it is hard to foresee any outcome besides AI’s total victory. Soon AI will allow the sophistication of its output to be adjusted, so students can easily hit that sweet spot between “too illiterate to pass” and “sounds suspiciously like Ivy League faculty.” Just throw in a couple of typos, dumb it down a little, and score that B. More enterprising students will take an AI’s essay, alter it by 30 percent, and add proper references. Or they will feed their own half-baked original work into an AI and tell it to make improvements. Or use it to write needed code which they then annotate by hand. Or, or, or.

Nothing short of a surveillance state that watches every student’s pen-stroke as they sit inside a Faraday cage has a chance. (Even that might not work; cf. the recent chess cheating scandal.) AI is the sea, and faculty are reassuring each other that their special push-broom will hold back the tide. The battle against plagiarism is already lost, completely and comprehensively. Faculty will soon take the predictable next step and persuade themselves that AI is not different in kind from a pocket calculator, and is no more than a harmless tool to serve the all-important god of efficiency.

Those concerns, while all real and significant, take a back seat to the deep transformation that artificial intelligence will bring to the human spirit. In zombie movies, the zombies are themselves brainless and to survive must feast on the brains of others. Philosophical zombies are creatures that can do the same things we can, but lack the spark of consciousness. They may write books, compose music, play games, prove novel theorems, and paint canvases, but inside they are empty and dark. From the outside they seem to live, laugh, and love, yet they wholly lack subjective experience of the world or of themselves. Philosophers have wondered whether their zombies are even possible, or if gaining the rudimentary tools of cognition must eventually build a tower topped in consciousness. If zombies are possible, then why are we conscious and not the zombies, given that they can do everything we can? Why do we have something extra?

The risk now is that we are tessellating the world with zombies of both kinds: AIs that are philosophical zombies, and human beings who have wholly outsourced original thinking and creativity to those AIs, and must feast on their brainchildren to supplant what we have given up. Why bother to go through the effort of writing, painting, composing, learning languages, or really much of anything when an AI can just do it for us faster and better? We will just eat their brains.

We can all be placed on a bell curve of ambition, drive, and grit. At the high end are hyper-driven people like Michael Shermer, who after founding and cycling in one of the hardest athletic events in the world, the Race Across America, got a PhD in the philosophy of science and became a best-selling author; or Myron Rolle, who was a Rhodes Scholar and a safety in the National Football League, and is now a neurosurgeon with a residency at Harvard Medical School; or Jessica Watkins, who was a rugby champion at Stanford, earned a geology PhD, and then became an astronaut. At the other end are stereotypical couch potatoes playing video games amid bags of Cheetos and cans of Red Bull. Most of us, though, are somewhere in the fat middle of the bell curve, with modest achievements and humble dreams.

The fear of zombification is that AI will undercut human motivation—why bother striving only to be beaten by the machines in the end?—and we will all drift down to the slacker end. That fear is misplaced. It is more plausible to see individuals as each having an ambition set point fixed somewhere along the curve, and we all operate within a few degrees of it. We might be a little more ambitious at one time or a little less at another, but always near our own set point. Stephen King and J.K. Rowling could spend the rest of their lives sleeping on giant piles of money but they still write. Even the couch spuds try to win the boss fights in Call of Duty.

We invented motorcycles, but many prefer bicycles; we engineered helicopters that can fly us to the top of Kilimanjaro, but many would rather have the challenge of climbing. We ban performance-enhancing drugs in sports precisely because we want to know what human beings, unaided, alone, can do. I will never ride my Cannondale as fast as a Ducati, and I will never paint as well as DALL-E 2 or play chess better than AlphaZero. But those are still activities worthy of pursuit, even in their imperfection, and perhaps even because of it. As Robert Browning observed in Andrea del Sarto, “a man’s reach should exceed his grasp, or what’s a heaven for?”

In Concluding Unscientific Postscript, Kierkegaard offered the insight that in a world where everything is being made easier, maybe, out of the same humanitarian impulse, he could make things harder. “When there is only one want left … people will want difficulty.” Kierkegaard was right on the money; after all, games are little more than unnecessary actions made unnecessarily difficult, but we enjoy them nonetheless. Steamships and telegraphs made life easier in Kierkegaard’s day, but when AI lifts the burden of working out our own thoughts, it is then that we must decide what kinds of creatures we wish to be, and what kinds of lives of value we can fashion for ourselves. What do we want to know, to understand, to be able to accomplish with our time on Earth? That is far from the question of what we will cheat on and pretend to know to get some scrap of parchment. What achievements do we hope for? Knowledge is a kind of achievement, and the development of an ability to gain it is more than AI can provide. GPT-5 may prove to be a better writer than I am, but it cannot make me a great writer. If that is something I desire, I must figure it out for myself.

When Nietzsche first declared the death of God in The Gay Science, he knew he was ahead of his time. The earth-shaking transformation of killing off God as a world-explanation was too hard to grasp for most of his readers. “My time is not yet,” the madman in The Gay Science laments. “This tremendous event is still on its way, still wandering; it has not yet reached the ears of men. Lightning and thunder require time; the light of the stars requires time; deeds, though done, still require time to be seen and heard. This deed is still more distant from them than the most distant stars, and yet they have done it themselves.”

By building artificial intelligence, we have plunged the knife into our own ego. We are not uniquely creative, as Ada King thought, nor are we superior in every mental task to our silicon offspring. But perhaps instead of becoming shuffling zombies, brainless and hungry, we can see the AI future as an opportunity for transfiguration, for self-overcoming. Nietzsche wrote:

Indeed, we philosophers and “free spirits” feel, when we hear the news that “the old god is dead,” as if a new dawn shone on us; our heart overflows with gratitude, amazement, premonitions, expectation. At long last the horizon appears free to us again, even if it should not be bright; at long last our ships may venture out again, venture out to face any danger; all the daring of the lover of knowledge is permitted again; the sea, our sea, lies open again; perhaps there has never yet been such an “open sea!”

We are living in a time of change regarding the very meaning of how a human life should go. Instead of passively sleepwalking into that future, this is our chance to see that the sea, our sea, lies open again, and that we can embrace with gratitude and amazement the opportunity to freely think about what we truly value and why. This, at least, is something AI cannot do for us. What it is to lead a meaningful life is something we must decide for ourselves.