Constructivism

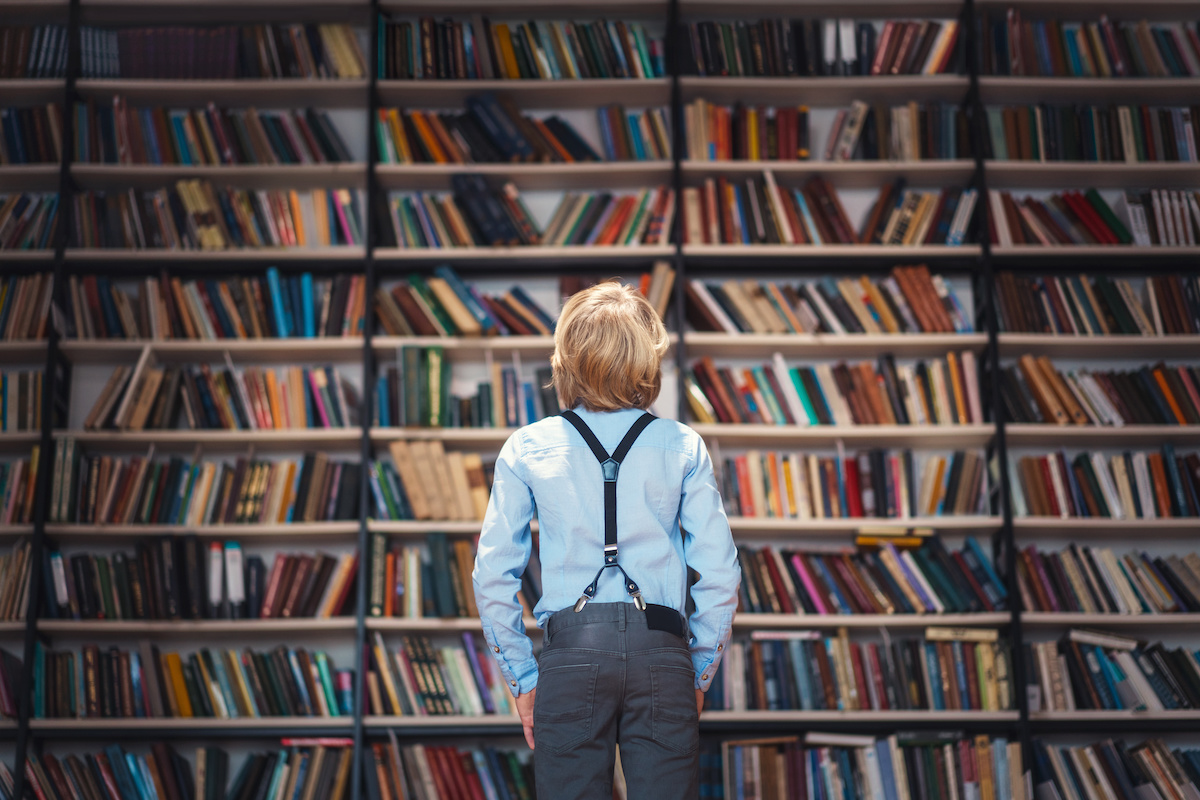

Shoulders of Giants

Concepts do not have a fixed lifespan. Some concepts are more enduring than others because they have more value.

Editor’s note: This is an excerpt from Chapter One of The Power of Explicit Teaching and Direct Instruction by Greg Ashman.

In a letter to Robert Hooke in 1676, Isaac Newton wrote: “If I have seen further it is by standing on ye sholders of Giants.” Newton was alluding to those who had come before, such as Galileo Galilei, on whose work he had built. The metaphor, which implies the ratchet-like progress of human understanding and privileges accumulated culture over the gifted and inspired individual, was not original. A little research turns up an earlier form, attributed by John of Salisbury to Bernard of Chartres. Given the nature of the saying, its history is satisfying. Or it should be. The stuff of teaching is to pass on accumulated culture so that our children and their children may see further than we ever did. And yet the forces ranged against such a simple and obvious proposition, as intelligible to Newton as it is to us today, are formidable.

There are those who see education as a development from within. Kieran Egan charts the historical influence of this idea in Getting it Wrong from the Beginning (Egan, 2004). Nineteenth- and early 20th-century “progressivist” philosophers of education, such as Herbert Spencer, mixed older, romantic ideas about childhood and nature with a form of pseudoscience inspired by the recently developed theory of evolution to claim that the development of individual children recapitulates the evolutionary ascent of humanity as a species. The educator’s role was to get out of the way of this natural process. Instead of placing children on the shoulders of giants, we should place them in a woodland glade to play, discover, and learn.

If children are to develop naturally through experience, there is little need to directly teach them boring facts about the past in an artificial environment such as a classroom. Instead, the educator’s role, if any, is to manipulate the environment in order to ensure that the child’s experiences are rich enough. Although on initial inspection it may seem absurd, such ideas have gained considerable purchase in the education world, particularly on those rarefied mountain tops least concerned with practicalities—the education conference circuit, the column inches produced by broadsheet pundits and the education faculties of our universities.

I qualified to teach by completing a Postgraduate Certificate of Education (PGCE) at the Institute of Education in London. Early in the course, a lecturer referred to a famous quote from Plutarch, one that is often misattributed to Socrates or even W.B. Yeats. The lecturer used it to suggest that children are not “empty vessels” into which teachers must pour knowledge. Instead, we must light a fire inside them. It was an inspiring idea.

I accepted this message and carried it with me for a long time. It sounded profound and I understood it to represent a significant psychological truth. I trusted my lecturers. I trusted that they understood the research. I felt guilty about using explicit approaches to teaching because I didn’t think they were supported by the evidence.

Years later, I decided to investigate the Plutarch quote for myself. His advice is not for teachers (Plutarch, 1927). He is advising a young man, Nicander, on how he should listen to lectures: Don’t just bask in the glow of a warm, erudite lecture; mull over the concepts and take something away with you; make it your own.

If anyone is unsure about the role that Plutarch perceives for students, then a different quote from the same text clarifies the matter:

As skilful horse-trainers give us horses with a good mouth for the bit, so too skilful educators give us children with a good ear for speech, by teaching them to hear much and speak little. (Plutarch, 1927)

For some reason, my lecturer had left that part out.

The textbooks I was assigned at university focused on students’ misconceptions. They described the thinking of Piaget, Vygotsky, and Bruner, and a theory known as “constructivism.” I now realise that constructivism played a dominant role in framing the training I received.

The theory of constructivism posited that:

The teacher’s role is to facilitate learning. For pupils simply to acquire a body of knowledge or set of facts is not a constructive approach or adequate achievement for the pupil. The teacher must set the classroom in a way that allows pupils to enquire, by posing problems, creating a responsive environment and giving assistance to the pupils to achieve autonomous discoveries. This applies to all areas of education, from discovering prose and its meaning in English to design problems in technology. (Capel et al., 1996)

This description of constructivism will annoy some readers, who will insist that it is not a theory of how to teach but a theory of learning. Adding further confusion, there are a number of related constructivist theories, such as social constructivism and radical constructivism, that vary in key ways. Perhaps of most interest to cognitive scientists is cognitive constructivism and a view that the mind consists of mental “schemas” that new learning has to interact with, either to fit into an existing schema or to change it (Derry, 1996). If true, constructivism describes the way that people learn whether they are independently discovering concepts or listening to lectures.

I am sympathetic to this view of constructivism as a theory of learning, but I must insist, in turn, that this is not the view of many protagonists who clearly see the theory as having implications for the way we teach. Plucked from its philosophical and scientific roots, constructivism in the classroom usually equates to asking students to find something out for themselves—to “construct” knowledge rather than passively receive it like empty vessels. Perhaps it is the case that an older idea has found a new, more scientific tone of expression.

It is as if the giant is there, offering children his shoulders, but instead we are asking them to construct a ladder out of sticky tape and drinking straws.

As is often the case, we can find a prototypical version of constructivism in the writings of John Dewey, way back in his 1916 work, Democracy and Education:

Why is it, in spite of the fact that teaching by pouring in, learning by a passive absorption, are universally condemned, that they are still so entrenched in practice? That education is not an affair of “telling” and being told, but an active and constructive process, is a principle almost as generally violated in practice as conceded in theory. Is not this deplorable situation due to the fact that the doctrine is itself merely told?

However, anyone so bold as to interpret Dewey in this way is likely to be told they have misunderstood him.

So far, I have conflated two distinct concepts. The first is that there is a body of knowledge that is worth teaching—i.e., that it is worth standing on the giant’s shoulders—and the second is the concept of directly teaching this knowledge, the main subject of this book. In theory, these are separable. We could, for instance, insist that there is a body of knowledge worth teaching, but that the best way to teach it is to place students in carefully crafted situations where we ensure that they discover this key knowledge for themselves. Alternatively, we could insist that there is no specific body of knowledge worth passing on to students, but that teachers should still be involved in the process of direct teaching, perhaps by teaching general-purpose skills instead. Both of these approaches have currency. We could argue that some forms of science teaching are examples of the former, and perhaps the teaching of reading comprehension strategies is an example of the latter.

However, as I will go on to argue, explicit teaching—an interactive method where new concepts are fully explained to students before responsibility is gradually passed from teacher to student—is particularly effective at transmitting knowledge from one person to another. This tends to lead to two general gravitational influences that are hard to avoid. Those who want students to gain a specific body of knowledge tend to be drawn, inevitably if sometimes slowly, to explicit forms of teaching. Those who dislike direct teaching in favour of more experiential approaches tend to find themselves arguing that transmitting a body of knowledge is not, and never has been, the point of education; that it is more about the acquisition of experiences that shape the character or, in its more modern variation, develop various generic skills such as critical thinking (see, for example, Macfarlane, 2020). It is not about standing on the giant’s shoulders, but the experience of acting like a giant and thinking like a giant.

This is not a new idea. Writing about the curriculum in 1918, Franklin Bobbitt advocated a play-based approach to the formative years of schooling and advised:

There is not to be too much teaching. What the children crave and need is experience. The school’s main task is to supply opportunities so varied and attractive that, like the two boys when they arrived at port, pupils want to plunge in and to enjoy the opportunities that are placed before them. (Bobbitt, 1918)

It is hard to hold on to the idea of passing on a specific body of knowledge while also committing to following the contours of children’s varying interests and the experiences that may be devised in pursuit of them, although Bobbitt insists that his approach lays the “solid foundations for the later industrial studies.”

Nevertheless, an emphasis on play, especially in the first few years of formal education, is understandable. Children learn a great many things through play, such as the rules of games and the attendant social skills needed to navigate them. Notably, most children learn to speak their native language with very little explicit teaching apart from the occasional correction such as, “It’s brought not bringed.” You do not need schools for this.

So, from the perspective of an early 20th-century reformer surveying the landscape of little schoolhouses with their recitation, slates, and harsh discipline, an obvious reform would be to make learning more joyous and playful. Perhaps nature was showing us the way and our factory-farmed industrial mindset was refusing to see it. Children need to graze in green pastures, yet we were cramming them into feedlots.

There is, however, a fundamental difference between learning to understand someone who is speaking your native language and learning to read it. For most of humanity’s time on Earth, writing has not existed. It dates back only a few millennia, and for much of that time its secrets were held by the priestly, clerical, and elite classes of society. Mass literacy is less than a couple of centuries old in industrialised societies and even more recent elsewhere.

Yet, since the deep, unrecorded past, we have been speaking and listening to each other. It is hard to know exactly when speech arose or reached its current level of sophistication because spoken words leave no fossils, but it is reasonable to assume that it has been subject to the process of evolution. According to David Geary (1995), an evolutionary psychologist, this could represent a key distinction. There are some bodies of knowledge and skills that we have evolved to learn, with play and experience being the ways we have evolved to learn them. In contrast, there are more recent products of human culture that we have not had time to evolve to learn. If Geary is right, play may not necessarily be the best way to learn these more recently created bodies of knowledge and skills. This frees us to seek evidence for the best way to learn them, and we will see that the evidence points towards explicit teaching.

Before we set out to teach in the most effective way possible, it is important to decide what to teach. What are the products of more recent human culture that are worth learning? This question must be answered, to a greater or lesser extent, by all who would design a school curriculum.

There are a number of principles we may keep in mind. We may take a functional view—what knowledge will students need in the future? What will they need for the work they will do? The trouble with a question like this is that it dissolves the more you look at it. On one level, you can continue to exist without most of the things we learn in school. You can even be highly successful. Jeremy Clarkson, a prominent reviewer of cars, has a habit of appearing every year on the day that 18-year-olds in England receive the results of national exams, pointing out that he failed his exams and noting that he is nevertheless capable of affording the tasteless trappings of conspicuous wealth (Mylrea, 2017).

Another approach is to ask essentially the same question but frame it probabilistically: What is likely to be most useful in the future? This is the point at which a small army of commentators reach for their crystal balls and make confident predictions about the unknowable: There will be a rise in the use of artificial intelligence or robots or self-propelled dirigibles (I made up that one) that will lead to an increase or maybe a decrease in manufacturing. What is certain is that students will not need to know facts any longer because machines can do that for them. Instead, they will need to develop the uniquely human qualities that machines cannot emulate and, by a remarkable coincidence, this will require the kind of education advocated by 19th-century progressivists, along with perhaps some computer programming (see, for example, Monbiot, 2017; Benn, 2020).

I find this unconvincing. The only certainty about the future is its unpredictability. When I was at secondary school in the late 1980s and early 1990s, I remember being instructed in how to use the word-processing software packages Edword and View on a mouse-less BBC microcomputer. During one lesson, we were taught the commands required to justify text in View. No doubt, the thinking behind this lesson was that it would be functionally useful—that it would in some way prepare us for the future. It did not, of course. That knowledge was ephemeral, obsolescent. Nevertheless, the linear equations, the history of the Industrial Revolution and the German for “What did you do at the weekend?” that I learnt are as valid today as they were then.

This illustrates a key principle for curriculum design. Concepts do not have a fixed lifespan. Some concepts are more enduring than others because they have more value. How, then, are we to pick the more valuable ones—the ones that are likely to persist in being valuable long into the future? One indication is that they have already endured, that these concepts have survived change in the past. If we have found a cultural artefact to be valuable for 50, a hundred or maybe a thousand years, then it is certainly possible that it will be rendered obsolete by impending technological or societal change. However, this seems less likely than for a concept that has been around for just a few months or years. Although possible, I doubt that linear equations will become obsolete any time soon, or that the Industrial Revolution will cease to have happened or have been important, or that people in Germany will stop asking each other what they did at the weekend or alter the language they use to do this.

References

Benn, M. (May 1st, 2020) This curious revolution avoids conflict and sidesteps inequality. Schools Week. Retrieved from: https://schoolsweek.co.uk/this-curious-revolution-avoids-conflict-and-sidesteps-inequality/

Bobbitt, J. (1918) The Curriculum. Boston, MA: Houghton Mifflin.

Capel, S., Leask, M. and Turner, T. (1996) Learning to Teach in the Secondary School: A Companion to School Experience. London: Routledge.

Derry, S.J. (1996) Cognitive schema theory in the constructivist debate. Educational Psychologist, 31(3–4): 163–74.

Dewey, J. (1916) Democracy and Education. Project Gutenberg. Retrieved from: www.gutenberg.org/files/852/852-h/852-h.htm

Egan, K. (2004) Getting it Wrong from the Beginning: Our Progressivist Inheritance from Herbert Spencer, John Dewey, and Jean Piaget. New Haven, CT: Yale University Press.

Geary, D.C. (1995) Reflections of evolution and culture in children’s cognition: Implications for mathematical development and instruction. American Psychologist, 50(1): 24–37.

Macfarlane, R. (April 29th, 2020) Knowledge is not enough, growing effective learners must become our aim. Big Education. Retrieved from: https://bigeducation.org/lfl-content/knowledge-is-not-enough-growing-effective-learners-must-become-our-aim/

Monbiot, G. (February 15th, 2017) In an age of robots, schools are teaching our children to be redundant. The Guardian. Retrieved from: www.theguardian.com/commentisfree/2017/feb/15/robots-schools-teaching-children-redundant-testing-learn-future

Mylrea, H. (August 17th, 2017) Jeremy Clarkson is back with his annual reminder that grades aren’t everything. NME. Retrieved from: www.nme.com/blogs/nme-blogs/jeremy-clarkson-annual-reminder-grades-arent-everything-2125647

Plutarch (1927) De auditu (On listening to lectures). In Moralia, trans. F.C. Babbitt (Loeb Classical Library). Available online at: penelope.uchicago.edu/Thayer/E/Roman/Texts/Plutarch/Moralia/ (accessed September 13th, 2013).