AI Debate

Irrational AI-nxiety

Concerns over the potential harm of new technologies are often sensible, but they should be grounded in fact, not flights of fearful fancy.

The development of full artificial intelligence could spell the end of the human race.

Stephen Hawking

[AI poses] vastly more risk than North Korea.

Elon Musk

Very smart people tell us to be very worried about AI. But very smart people can also be very wrong and their paranoia is a form of cognitive bias owed to our evolved psychology. Concerns over the potential harm of new technologies are often sensible, but they should be grounded in fact, not flights of fearful fancy. Fortunately, at the moment, there is little cause for alarm.

Some fear that AI will reach parity with human intelligence or surpass it, at which point it will threaten to harm, displace, or eliminate us. In November, Stephen Hawking told Wired, “I fear that AI may replace humans altogether. If people design computer viruses, someone will design AI that replicates itself. This will be a new form of life that will outperform humans.” Similarly, Elon Musk has said that AI is “a fundamental existential risk for human civilization.”

This fear seems to be predicated on the assumption that as an entity gets smarter, especially compared to people, it becomes more dangerous to people. Does intelligence correlate with being aggressively violent or imperial? Not really. Ask a panda, three-toed sloth, or baleen whale, each of which is vastly smarter than a pin-brained wasp or venomous centipede.

It may be true that predators tend to be brainier because it is more difficult to hunt than it is to graze, but that does not mean that intelligence necessarily entails being more aggressive or violent. We ought to know this because we’ve engineered animals to be, in some fashion, smarter and simultaneously tamer through domestication. Domesticated dogs can help the blind navigate safely, sniff out bombs, and perform amusing tricks on command. So why do we fear AI so readily?

Pre-civilization human life was vastly more violent and dangerous. Without courts, laws, rights, and the superordinate state to provide them, violence was a common mode of dispute resolution and a profitable means of resource acquisition. Socially unfamiliar and out-group humans were always a potential threat. Even mere evidence of unfamiliar minds (an abandoned campsite, tools or other artifacts found) could induce trepidation because it meant outsiders were operating nearby and might mean you harm. Natural selection would have favored a sensitivity to even small clues of outsider minds, such as tracks in the dirt, that triggered wariness, if not fear. The magnitude of such apprehension should be a function of the capability and formidability suggested by the evidence. The tracks of 20 are scarier than the tracks of one. Finding a sophisticated weapon is scarier than finding a fishing pole. Perhaps this is why “strong” AI, in particular, worries us.

These biases penetrate many areas of our psychology. People still tend to fear, hate, dehumanize, scapegoat, and attack out-group members. So far, I have discussed human-human interactions. AI is a new (non-human) kid on the block. Is there any evidence these biases apply to non-human minds that otherwise match the description of intelligent out-group agents? I will describe two cases: aliens and ghosts.

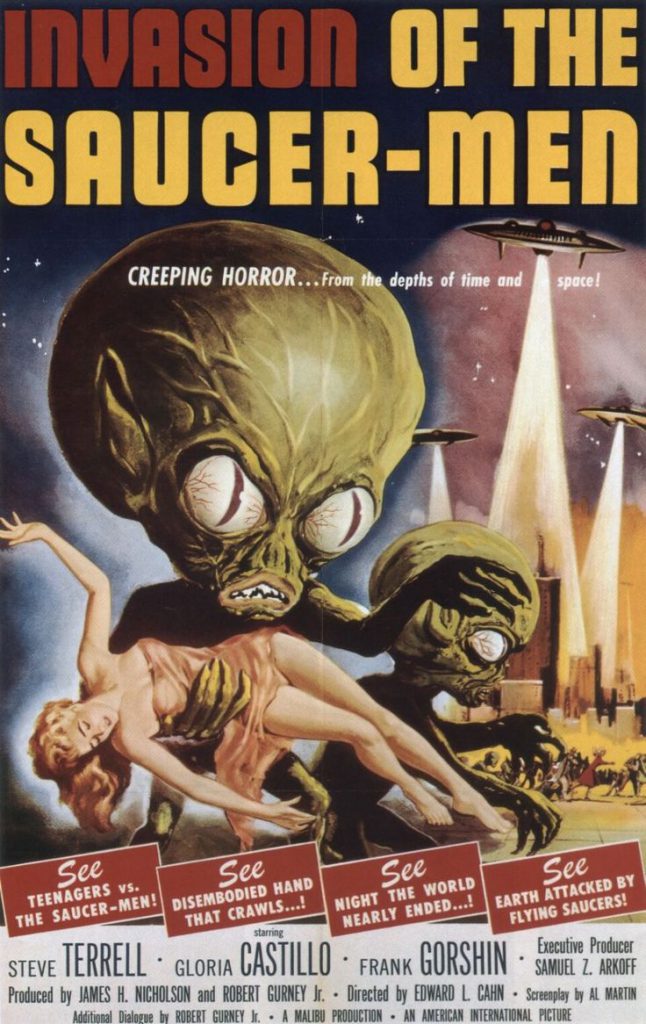

For as long as the concept of life on other worlds has existed, fear of intelligent extra-terrestrials has been its shadow. Smart aliens are not always cast as sinister, but dangerous ETs have been ubiquitous in science fiction. Hostile aliens intend to abduct, kill, sexually molest, or enslave people. We fear they will conquer our world and plunder its resources. In other words, these are precisely the same fears people have about other groups of people. There is an absurd self-centeredness about the assumption that any intelligent agents in the galaxy simply must want the same things we want and travel vast interstellar distances just to acquire them. Conquest and material acquisition are human obsessions, but it does not follow that they must therefore be features of all intelligent species anywhere in the universe.

Even beyond science fiction, people seem afraid of possible alien contact. Perhaps not coincidentally, Stephen Hawking has also intimated that alien contact could go badly for humans. Supermarket tabloids report the delusional fantasies of people who seem to genuinely believe that they have been abducted, probed, or otherwise violated by aliens. Note that these delusions are generally fear-based rather than the positive sort people have about psychics or faith healers because those entities are part of one’s human in-group. The extra-terrestrial out-group, on the other hand, will travel a thousand light-years just to probe your rectum and mutilate your cows.

Similarly, according to almost every ghost story, dying turns a normal person into a remorseless, oddly determined psychopath even if the rest of their mind (such as memories, language or perception) is completely unaltered. Believers have probably never stopped to question why this is the default assumption. There is no a priori reason to assume this about death, even a tragic or traumatic death. Many thousands of people have had traumatic near-death experiences without this rendering them transfigured monsters. People may intuitively ascribe sinister motives to ghosts simply because the ghost exists outside of our natural and social world, so its motives and purposes are unknown and therefore suspect. A ghost has no apparent needs and can’t sustain injury. In spite of that, it is fixated on people just as inexplicably as aliens are presumed to be.

The essence of any good ghost story is not so much what a ghost does but the mere presence of a foreign and unknown entity in our personal space. Even those who have no belief in alien visitors or ghosts, myself included, tend to find such stories compelling and enjoyable because they exploit this evolved anxiety: unseen agents could mean you harm. The glass catwalk over the Grand Canyon makes your palms sweat even though you are completely safe, and for the same reason: our minds evolved in the world of the past, not the present. A past that did not have safe glass catwalks or benign AIs.

Our intuitions and assumptions about aliens and ghosts make no sense at all until you factor in the innate human distrust of possibly hostile outsiders. It is reasonable to fear other people some of the time because of the particular properties of the human mind. We can be aggressive, violent, competitive, antagonistic, and homicidal. We sometimes steal from, hurt, and kill each other. However, these attributes are not inseparable from intelligence. Adorable, vegetarian panda bears descended from the same predator ancestor as carnivorous bears, raccoons, and dogs. Ancestry is hardly destiny, and pandas are no dumber than other bears for their diminished ferocity.

Whereas blind evolutionary forces shaped most animals, we are the shapers of whatever AI we wish to make. This means we can expect AIs to be more like our favorite domesticated species, the dog. We bred dogs to serve us and we will make AI to serve us, too. Predilections for conquest, dominance, and aggression simply do not appear spontaneously or accidentally, whether we are speaking of artificial or natural selection (or engineering). In contrast to our intuitive assumptions, the emotions behind things like aggression are sophisticated cognitive mechanisms that do not come free with human-like intelligence. Like all complex behavioral adaptations, they must be painstakingly chiseled over thousands or millions of years and only persist under conditions that continue to make them useful.

The most super-powered AI could also have the ambitions of a three-toed sloth or the temperament of a panda bear because it will have whatever emotions we wish to give it. Even if we allow it to ‘evolve’ it is we who will set the parameters about what is ‘adaptive,’ just as we did with dogs.

If you’re still unconvinced a powerful artificial mind could be markedly different from ours in its nature, maybe it doesn’t matter. Consider human behavioral flexibility. The rate of violence in some groups of humans is hundreds of times higher than in others. The difference isn’t in species, genes, or neurons, but in environment. If a human is made vastly more peaceable and pro-social by the simple accident of being born in a free, cooperative society, why worry that AI would be denied the same capacity?

In the 1950s, when computers were coming of age, technology experts thought that very soon machines would be able to do ‘simple’ things like walking and understanding speech. After all, the logic went, walking is easier than predicting the weather or playing chess. Those prognosticators were woefully mistaken because these experts weren’t psychologists and they did not understand the complexity of the problem of something like walking. Moreover, their human minds made them prone to make this error: our sophisticated brains are sophisticated in order to make walking seem easy to us.

No person that I know of is qualified to predict the inevitable future of AI because they would need to understand psychology as well as they understand artificial intelligence and engineering. And psychologists themselves did not always understand that a ‘simple’ feat like walking was a computationally complex adaptation. There may not even be sufficient research in these fields to support an informed opinion at this point. Sensible prediction isn’t impossible, but it is very difficult because both fields are changing and expanding rapidly.

This is not to say that nobody has any expertise. Psychologists, AI researchers, and engineers have the relevant and current knowledge required to locate ourselves on the scale of techno-trepidation (full disclosure: I fancy myself just such a psychologist). As of now, available evidence recommends the alert status “cautiously optimistic.”

Lastly, the current state of AI research bears explanation. In college, I took a class in neural-network modeling. What I learned about AI then remains true today. We make two kinds of AI. One kind resembles bits of the human nervous system and can do almost nothing; the other kind is nothing like it but can do amazing things, like beat us at chess or perform medical diagnoses. We’ve created clever AIs meant to fool us, but a real human-like AI is unlikely in the next few decades. As a recently released report on AI progress from MIT noted:

Tasks for AI systems are often framed in narrow contexts for the sake of making progress on a specific problem or application. While machines may exhibit stellar performance on a certain task, performance may degrade dramatically if the task is modified even slightly. For example, a human who can read Chinese characters would likely understand Chinese speech, know something about Chinese culture and even make good recommendations at Chinese restaurants. In contrast, very different AI systems would be needed for each of these tasks.

The director of Sinovation Ventures’ Artificial Intelligence Institute, meanwhile, opined in The New York Times:

At the moment, there is no known path from our best A.I. tools (like the Google computer program that recently beat the world’s best player of the game of Go) to “general” A.I. — self-aware computer programs that can engage in common-sense reasoning, attain knowledge in multiple domains, feel, express and understand emotions and so on.

This cannot be remedied by media-touted technosorcery of the day like machine learning or AI self-replication because these only work to make human-like minds given the right mental architecture and the right selection pressures. These are questions we still struggle to understand about humans, things we largely do not know. They are not ours to gift to a nascent AI, no matter how impressive its computational power.

Selecting for task performance does not make for gains in human-like intelligence in animals either. Efforts to breed rodents for maze-running did indeed seem to produce better maze-runners. But those rodents weren’t better at anything else and, as it turns out, they may not have been any smarter at mazes either (Innis 1992). Crows have turned out to have remarkable abilities to creatively solve problems by making tools for specific tasks. But they don’t have language or ultra-sociality and can’t do long division.

Hawking, Gates, et al. are right about one thing. We should proceed with caution and some regulation. This is just what we have successfully done (sometimes better, sometimes worse) with many other potentially dangerous technologies. The day will come when prudence demands limiting certain uses or types of AI research. So let prudence demand it. Not primeval fears of digital ghosts among the shadows.

Reference:

Innis, N. K. (1992). Tolman and Tryon: Early research on the inheritance of the ability to learn. American Psychologist, 47(2), 190-197.