
A Tale of Two Bell Curves

The truth, surprising as it may seem today, is this: The Bell Curve is not pseudoscience.

“The great enemy of the truth is very often not the lie, deliberate, contrived and dishonest, but the myth, persistent, persuasive and unrealistic” ~ John F. Kennedy 1962

To paraphrase Mark Twain, an infamous book is one that people castigate but do not read. Perhaps no modern work better fits this description than The Bell Curve by political scientist Charles Murray and the late psychologist Richard J. Herrnstein. Published in 1994, the book is a sprawling (872 pages) but surprisingly entertaining analysis of the increasing importance of cognitive ability in the United States. It also includes two chapters that address well-known racial differences in IQ scores (chapters 13-14). After a few cautious and thoughtful reviews, the book was excoriated by academics and popular science writers alike. A kind of grotesque mythology grew around it. It was depicted as a tome of racial antipathy; a thinly veiled expression of its authors’ bigotry; an epic scientific fraud, full of slipshod scholarship and outright lies. As hostile reviews piled up, the real Bell Curve, a sober and judiciously argued book, was eclipsed by a fictitious alternative. This fictitious Bell Curve still inspires enmity, and its surviving co-author is still caricatured as a racist, a classist, an elitist, and a white nationalist.

Myths have consequences. At Middlebury College, a crowd of disgruntled students, inspired by the fictitious Bell Curve (it is doubtful that many had bothered to read the actual book), interrupted Charles Murray’s March 2nd speech with chants of “hey, hey, ho, ho, Charles Murray has got to go,” and “racist, sexist, anti-gay, Charles Murray go away!” After Murray and moderator Allison Stanger were moved to a “secret location” to finish their conversation, protesters began to grab at Murray, who was shielded by Stanger. Stanger suffered a concussion and neck injuries that required hospital treatment.

It is easy to dismiss this outburst as an ill-informed spasm of overzealous college students, but their ignorance of The Bell Curve and its author is widely shared among social scientists, journalists, and the intelligentsia more broadly. Even media outlets that later lamented the Middlebury debacle had published – and continue to publish – opinion pieces that promoted the fictitious Bell Curve, a pseudoscientific manifesto of bigotry. In a fairly typical but exceptionally reckless 1994 review, Bob Herbert asserted, “Murray can protest all he wants, his book is just a genteel way of calling somebody a n*gger.” And Peter Beinart, in a defense of free speech published after the Middlebury incident, wrote, “critics called Murray’s argument intellectually shoddy, racist, and dangerous, and I agree.”

The Bell Curve and its authors have been unfairly maligned for over twenty years. And many journalists and academics have penned intellectually embarrassing and indefensible reviews and opinions of them without actually opening the first few pages of the book they claim to loathe. The truth, surprising as it may seem today, is this: The Bell Curve is not pseudoscience. Most of its contentions are, in fact, perfectly mainstream and accepted by most relevant experts. And those that are not are quite reasonable, even if they ultimately prove incorrect. In what follows, we will defend three of the most prominent and controversial claims made in The Bell Curve and note that the most controversial of all its assertions, namely that there are genetically caused race differences in intelligence, is a perfectly plausible hypothesis that is held by many experts in the field. Even if wrong, Herrnstein and Murray were responsible and cautious in their discussion of race differences, and certainly did not deserve the obloquy they received.

Claim 1: There is a g factor of cognitive ability on which individuals differ.

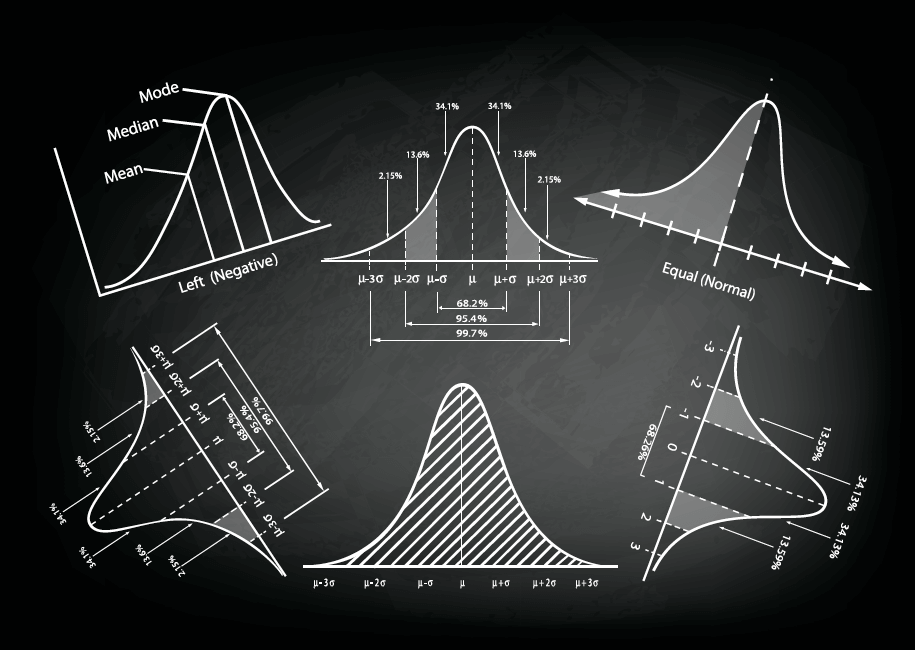

First discovered in 1904 by Charles Spearman, an English psychologist, the g factor is a construct that refers to a general cognitive ability that influences performance on a wide variety of intellectual tasks. Spearman noted that, contrary to some popular myths, a child’s school performance across many apparently unrelated subjects was strongly correlated. A child who performed well in mathematics, for example, was more likely to perform well in classics or French than a child who performed poorly in mathematics.

He reasoned there was likely an underlying cognitive capacity that affected performance in each of these disparate scholastic domains. Perhaps a useful comparison can be made between the g factor and an athletic factor. Suppose, as seems quite likely, that people who can run faster than others are also likely to be able to jump higher and further, throw faster and harder, and lift more weight than others. Then there would be a general athletic factor, a single construct that explains some of the overall variance in athletic performance in a population. This is all g is: a single factor that explains some of the variance in cognitive ability in the population. If you know that Sally excels at mathematics, then you can reasonably hypothesize that she is better than the average human at English. And if you know that Bob has an expansive vocabulary, then you can reasonably hypothesize that he is better than the average human at mathematics.
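The idea of a single factor that explains some of the shared variance can be sketched with simulated data. The example below is only an illustration under stated assumptions: the four "tests", their loadings, and the sample size are invented, and the first principal component of the correlation matrix is used as a simple stand-in for the factor-analytic methods psychometricians actually use.

```python
import numpy as np

def simulate_scores(n_people=5000, loadings=(0.8, 0.7, 0.6, 0.5), seed=0):
    """Simulate standardized test scores driven by one shared latent factor.

    All numbers here are hypothetical and chosen only for illustration.
    """
    rng = np.random.default_rng(seed)
    loadings = np.asarray(loadings)
    general = rng.standard_normal(n_people)                     # shared latent factor
    specific = rng.standard_normal((n_people, loadings.size))   # test-specific noise
    # Each score mixes the shared factor and its own noise so its variance is about 1.
    return general[:, None] * loadings + specific * np.sqrt(1.0 - loadings**2)

def first_component_share(scores):
    """Return the correlation matrix and the share of total variance
    captured by its largest eigenvalue (the first principal component)."""
    corr = np.corrcoef(scores, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)  # sorted in ascending order
    return corr, eigenvalues[-1] / eigenvalues.sum()

scores = simulate_scores()
corr, share = first_component_share(scores)
print(np.round(corr, 2))
print(f"Share of variance on the first component: {share:.2f}")
```

In this simulation every pair of tests is positively correlated, and one component captures far more than the one-quarter share it would get if the four tests were unrelated. Real test batteries are messier than this toy setup.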

Despite abstruse debates about the structure of intelligence, most relevant experts now agree that there is indeed a g factor. Sociologist and intelligence expert Linda Gottfredson, for example, wrote: “The general factor explains most differences among individuals in performance on diverse mental tests. This is true regardless of what specific ability a test is meant to assess [and] regardless of the test’s manifest content (whether words, numbers or figures)…” Earl Hunt, in his widely praised textbook on intelligence, noted, “The facts indicate that a theory of intelligence has to include something like g.” (p. 109). And Arthur Jensen, in his definitive book on the subject, wrote, “At the level of psychometrics, g may be thought of as the distillate of the common source of individual variance…g can be roughly likened to a computer’s central processing unit.” (p. 74).

Claim 2: Intelligence is heritable.

Roughly speaking, heritability estimates how much differences in people’s genes account for differences in people’s traits. It is important to note that heritability is not a synonym for inheritable. That is, some traits that are inherited, say having five fingers, are not heritable because underlying genetic differences do not account for the number of fingers a person has. Possessing five fingers is a pan-human trait. Furthermore, heritability is not a measure of malleability. Some traits that are very heritable are quite responsive to environmental inputs (height, for example, which has increased significantly since the 1700s, but is highly heritable).

Most research suggests that intelligence is quite heritable, with estimates ranging from 0.4 to 0.8, meaning that roughly 40 to 80 percent of the variance in intelligence within a population can be explained by differences in genes. The heritability of intelligence increases across childhood and peaks during middle adulthood.
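As a rough illustration of what a heritability figure means, the sketch below treats a trait as the sum of an independent genetic part and an environmental part, and computes heritability as the ratio of genetic variance to total variance. The additive model, the variances (0.6 and 0.4), and the uniform environmental shift are all assumptions made for this example; real estimates come from twin, adoption, and family designs, not from a simulation like this.

```python
import numpy as np

def simulated_heritability(var_genetic, var_environment,
                           environment_shift=0.0, n_people=100_000, seed=0):
    """Return (heritability, trait mean) for a simple additive toy model.

    Assumes independent genetic and environmental contributions, with no
    gene-environment correlation or interaction (a strong simplification).
    """
    rng = np.random.default_rng(seed)
    genetic = rng.normal(0.0, np.sqrt(var_genetic), n_people)
    environment = rng.normal(environment_shift, np.sqrt(var_environment), n_people)
    trait = genetic + environment
    heritability = genetic.var() / trait.var()
    return heritability, trait.mean()

# Baseline population: 60% of the trait variance is genetic by construction.
h2_before, mean_before = simulated_heritability(0.6, 0.4)

# Same population after an environmental improvement that lifts everyone equally.
h2_after, mean_after = simulated_heritability(0.6, 0.4, environment_shift=1.0)

print(f"heritability before: {h2_before:.2f}, mean before: {mean_before:.2f}")
print(f"heritability after:  {h2_after:.2f}, mean after:  {mean_after:.2f}")
```

The second call echoes the point about height: a change in environment that affects everyone can move the average substantially while leaving the heritability ratio unchanged.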

At this point, the data, from a variety of sources including adoptive twin studies and simple parent-offspring correlations, are overwhelming and the significant heritability of intelligence is no longer a matter of dispute among experts. For example, Earl Hunt contended, “The facts are incontrovertible. Human intelligence is heavily influenced by genes.” (p. 254). Robert Plomin, a prominent behavioral geneticist, asserted that, “The case for substantial genetic influence on g is stronger than for any other human characteristic.” (p. 108). And even N. J. Mackintosh, who was generally more skeptical about g and genetic influences on intelligence, concluded, “The broad issue is surely settled [about the source of variation in intelligence]: both nature and nurture, in Galton’s phrase, are important.” (p. 254).

Claim 3: Intelligence predicts important real-world outcomes.

It would probably surprise many people who criticize the fictitious Bell Curve that most of the book covers the reality of g and the real-world consequences of individual differences in g, including the emergence of a new cognitive elite, rather than race differences in intelligence. Herrnstein and Murray were certainly not the first to note that intelligence strongly predicts a variety of social outcomes, and today their contention is hardly disputable. The only matter for debate is how strongly intelligence predicts such outcomes. In The Bell Curve, Herrnstein and Murray analyzed a representative longitudinal data set from the United States and found that intelligence strongly predicted many socially desirable and undesirable outcomes including educational attainment (positively), socioeconomic status (positively), likelihood of divorce (negatively), likelihood of welfare dependence (negatively) and likelihood of incarceration (negatively).

Since the publication of The Bell Curve, the evidence supporting the assertion that intelligence is a strong predictor of many social outcomes has grown substantially. Tarmo Strenze, in a meta-analysis (a study that collects and combines all available studies on the subject), found a reasonably strong relation between intelligence and educational attainment (0.53), intelligence and occupational prestige (0.45), and intelligence and income (0.23). In that paper, he noted that “…the existence of an overall positive correlation between intelligence and socioeconomic success is beyond doubt.” (p. 402). In a review on the relation between IQ and job performance, Frank Schmidt and John Hunter found a strong relation of 0.51, a relation which increases as job complexity increases. In a different paper, Schmidt candidly noted that “There comes a time when you just have to come out of denial and objectively accept the evidence [that intelligence is related to job performance].” (p. 208). The story is much the same for crime, divorce, and poverty. Each year, more data accumulate demonstrating the predictive validity of general intelligence in everyday life.
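One conventional way to read the correlations quoted above is to square them, which gives the share of variance in one variable that is linearly associated with the other. The short sketch below does only that arithmetic on the four figures cited in the text. The function name is ours, and the squared-correlation reading is just one of several ways to gauge the size of a relation.

```python
# Correlations as quoted in the text above.
correlations = {
    "educational attainment": 0.53,
    "occupational prestige": 0.45,
    "income": 0.23,
    "job performance": 0.51,
}

def variance_explained(r):
    """Squared correlation: share of variance linearly associated with the predictor."""
    return r ** 2

for outcome, r in correlations.items():
    print(f"{outcome}: r = {r:.2f}, r^2 = {variance_explained(r):.2f}")
```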

Claim 4a: There are race differences in intelligence, with East Asians scoring roughly 103 on IQ tests, Whites scoring 100, and Blacks scoring 85.

Of course, most of the controversy The Bell Curve attracted centered on its arguments about race differences in intelligence. Herrnstein and Murray asserted two general things about race differences in cognitive ability: (1) there are differences, and the difference between Blacks and Whites in the United States is quite large; and (2) it is likely that some of this difference is caused by genetics. The first claim is not even remotely controversial as a scientific matter. Intelligence tests revealed large disparities between Blacks and Whites early in the twentieth century, and they continue to show such differences. Most tests that measure intelligence (GRE, SAT, WAIS, et cetera) evince roughly a standard deviation difference between Blacks and Whites, which translates to 15 IQ points. Although scholars continue to debate whether this gap has shrunk, grown, or stayed relatively the same across the twentieth century, they do not debate the existence of the gap itself.

Here are what some mainstream experts have written about the Black-White intelligence gap in standard textbooks:

“It should be acknowledged, then, without further ado that there is a difference in average IQ between blacks and whites in the USA and Britain.” (Mackintosh, p. 334).

“There is a 1-standard deviation [15 points] difference in IQ between the black and white population of the U.S. The black population of the U.S. scores 1 standard deviation lower than the white population on various tests of intelligence.” (Brody, p. 280).

“There is some variation in the results, but not a great deal. The African American means [on intelligence tests] are about 1 standard deviation unit…below the White means…” (Hunt, p. 411).

Claim 4b: It is likely that some of the intelligence differences among races are caused by genetics.

This was the most controversial argument of The Bell Curve, but before addressing it, it is worth noting how cautious Herrnstein and Murray were when forwarding this hypothesis: “It seems highly likely to us that both genes and environment have something to do with racial differences. What might that mix be? We are resolutely agnostic on that issue; as far as we can determine, the evidence does not yet justify an estimate.” (p. 311). This is far from the strident tone one would expect from reading secondhand accounts of The Bell Curve!

There are two issues to address here. The first is how plausible the hereditarian hypothesis is (the hypothesis that genes play a causal role in racial differences in intelligence); the second is whether responsible researchers should be allowed to forward reasonable but potentially inflammatory hypotheses if they might later turn out to be false.

Although one would not believe it from reading most mainstream articles on the topic (with the exception of William Saletan’s piece at Slate), the proposal that some intelligence differences among races are genetically caused is quite plausible. It is not our goal, here, to cover this debate exhaustively. Rather, we simply want to note that the hereditarian hypothesis is reasonable and coheres with a parsimonious view of the evolution of human populations. Whether or not it is correct is another question.

Scholars who support the hereditarian hypothesis have marshalled an impressive array of evidence to defend it. Perhaps the strongest evidence is simply that there are, as yet, no good alternative explanations.

Upon first encountering evidence of an IQ gap between Blacks and Whites, many immediately point to socioeconomic disparities. But researchers have long known that socioeconomic status cannot explain all of the intelligence gap. Even if researchers control for SES, the intelligence gap shrinks by only roughly 30% (estimates vary based on the dataset used, but almost none of the datasets finds that SES accounts for the entire gap). This estimate is, if anything, excessively charitable to the SES explanation, because intelligence also causes differences in socioeconomic status; when researchers “control for SES,” they therefore automatically shrink some of the gap.

Another argument that is often forwarded is that intelligence tests are culturally biased—they are designed in such a way that Black intelligence is underestimated. Although it would be rash to contend that bias plays absolutely no role in race differences in intelligence, it is pretty clear that it does not play a large role: standardized IQ and high stakes tests predict outcomes equally well for all native-born people. As Earl Hunt argued in his textbook, “If cultural unfairness were a major cause of racial/ethnic differences in test performance, we would not have as much trouble detecting it as seems to be the case.” (p. 425).

Of course, there are other possible explanations of the Black-White gap, such as parenting styles, stereotype threat, and a legacy of slavery/discrimination among others. However, to date, none of these putative causal variables has been shown to have a significant effect on the IQ gap, and no researcher has yet made a compelling case that environmental variables can explain the gap. This is certainly not for lack of effort; for good reason, scholars are highly motivated to ascertain possible environmental causes of the gap and have tried for many years to do just that.

For these reasons, and many more, in a 1980s survey, most scholars with expertise rejected the environment-only interpretation of the racial IQ gap, and a plurality (45%) accepted some variant of the hereditarian hypothesis. Although data are hard to obtain today, this seems to remain true. In a recent survey with 228 participants (all relevant experts), most scholars continued to reject the environment-only interpretation (supported by 17%), and a majority believed that at least 50% of the gap was genetically caused (52%). Many scholars in the field have noted that there is a bizarre and unhealthy difference between publicly and privately expressed views. Publicly, most experts remain silent and allow vocal hereditarian skeptics to monopolize the press; privately, most concede that the hereditarian hypothesis is quite plausible. Here, we’ll leave the last word to the always judicious Earl Hunt: “Plausible cases can be made for both genetic and environmental contributions to [racial differences in] intelligence…Denials or overly precise statements on either the pro-genetic or pro-environmental side do not move the debate forward. They generate heat rather than light.” (p. 436).

Whatever the truth about the cause of racial differences in intelligence, it is not irresponsible to forward reasonable, cautiously worded, and testable hypotheses. Science progresses by rigorously testing hypotheses, and it is antithetical to the spirit of science to disregard and in fact rule out of bounds an entirely reasonable category of explanations (those that posit some genetic causation in intelligence differences among racial groups). The Bell Curve is not unique for forwarding such hypotheses; it is unique because it did so publicly. Academics and media pundits quickly made Murray an effigy and relentlessly flogged him as a warning to others: If you go public with what you know, you too will suffer this fate.

There are two versions of The Bell Curve. The first is a disgusting and bigoted fraud. The second is a judicious but provocative look at intelligence and its increasing importance in the United States. The first is a fiction. And the second is the real Bell Curve. Because many, if not most, of the pundits who assailed The Bell Curve did not and have not bothered to read it, the fictitious Bell Curve has thrived and continues to inspire furious denunciations. We have suggested that almost all of the proposals of The Bell Curve are plausible. Of course, it is possible that some are incorrect. But we will only know which ones if people responsibly engage the real Bell Curve instead of castigating a caricature.