
The Impasse Between Modernism and Postmodernism


Buying textbooks, writing syllabi, and putting on armor. This is how many students and teachers prepared to return to campus this past fall. The last few years have witnessed an intensifying war for the soul of the university, with many minor skirmishes and several pitched battles. The most dramatic was at Evergreen State last spring, shortly before the end of the term.[1] Perhaps the most dramatic since then have been at Reed College and Wilfrid Laurier University.[2] There is no shortage of examples; they fill periodicals left and right. Wherever it next explodes, this war promises more ferocity and more casualties: careers, programs, ideals.

What’s at stake? According to Michael Aaron, writing after the battle at Evergreen, the campus war is symptomatic of a broader clash of three worldviews contesting the future of our culture: traditionalism, modernism, and postmodernism.[3] The traditionalists, he writes, “do not like the direction in which modernity is headed, and so are looking to go back to an earlier time when they believe society was better.” Whether they oppose changes to sexual mores or to American demographics, Aaron adds, “these folks include typical status-quo conservatives, Evangelical Christians as well as more nefarious types such as white nationalists and the ‘alt right’.” In his estimation, they are finished.

He concedes that Trump’s election has empowered them, but he believes “they have largely been pushed to the fringes in terms of their social influence.” A few hours in front of Fox News, or browsing the massive comment threads under some PragerU videos, would disabuse him of this illusion. Traditionalists are very influential in the national culture of the U.S., if not of other countries, and hopeful predictions of their retreat have all proven false. But Aaron is correct, in a way. When these traditionalists talk about the future, they suspend their intellects and project the past onto it, and more often than not an imaginary version of the past.[4] Make America Great … again?

Aaron is right, then, if by “social influence” he means the society of the academy, where many of the intelligent, informed, and innovative conversations about the future take place. With a few notable exceptions (Robert George of Princeton, for instance), traditionalists of that sort are marginal to these conversations. Nationally, most of them are speaking to each other, preferring the training camp to the front lines. Rod Dreher’s The Benedict Option (2017) openly summons them to this withdrawal. “It is between the modernists and postmodernists,” Aaron rightly claims, “where the future of society is being fought,” and the battlefield is indeed the university. How, then, does he characterize these two opponents?

“Postmodernists,” he says, “eschew any notion of objectivity, perceiving knowledge as a construct of power differentials.” That’s as good a short summary as any, and Aaron adduces plenty of examples to show how this philosophical attitude ripples through the beliefs and behavior of people who may never have read Foucault, Derrida, or Lyotard. Focusing on “the Weinstein/Evergreen State affair,” he argues that it “poses a significant crossroads to modern society, extending well beyond the conflict occurring on campus.” Bret Weinstein’s well-reasoned letter against the Day of Presence could have been debated rationally, on its objective merits, in the manner of modernism. Instead, it was treated as a racist incident, an exertion of white power, a move in the postmodern game of knowledge construction.

Even if postmodernism corrupts a university in this way, however, it does not follow that modernism is vindicated. That would follow only if two conditions were satisfied: that the three options Aaron states are really the only ones, and that the other two (traditionalism and postmodernism) have been soundly eliminated. Let us assume that traditionalism of the sort Aaron describes has indeed been soundly eliminated. Let us also assume that postmodernism as a viable ideology for a functioning university has been soundly eliminated. Isn’t that enough to satisfy those two conditions and render Aaron’s argument sound? In a word, no.

To see why, let us begin with the elimination of postmodernism. Evergreen is indeed the reductio ad absurdum of postmodernism as a viable ideology for a functioning university. Students wandering campus with baseball bats in search of a professor, administrators not permitting campus police to protect him, more than fifty faculty members demanding his punishment for taking his argument to the media … this is madness, and it will inevitably self-destruct, if it hasn’t already.[5] But that does not invalidate postmodern critiques of modernism and its aspiration to seek objective truth.

I. Postmodern Critiques

The best of them stem from Nietzsche. Postmodernists particularly cherish an essay he wrote early in his career, On Truth and Lies in a Nonmoral Sense.[6] “In some out of the way corner of the universe,” it begins, “there was a star on which clever beasts invented knowing.” The star is quickly extinguished, the beasts and their knowledge disappear, and nothing is lost. Nietzsche compares the philosophers’ traditional glorification of knowledge (as something oriented toward reality, as something good for its own sake) to the gnat’s pride in flying. We humans value knowledge not because it helps us live more truly, but because it makes us proud. Knowledge and its truths do not show us the world as it is. They operate in another direction, helping us create a flattering “reality” for ourselves. Deception, in sum, is the purpose of our vaunted cognition.

“Truths are illusions,” Nietzsche wrote in the same essay, “which we have forgotten are illusions.” Societies, no less than individuals, deceive themselves in order to believe a flattering “truth”; the same holds for our whole species. Whenever the human intellect comes to the brink of some real truth that might disillusion or humiliate us, we turn away in shame or fear, followed immediately by anger at whoever dared try to educate us. Hasn’t that been an effect of much modern science, which keeps displacing us from the center of the cosmos? The Galileo case is iconic, but that of Darwin is more illustrative. He suffered no inquisition, thanks to the freedom of speech enjoyed by the British of his era, but his account of human nature has nearly always been met with scorn from religious believers, whether in Kansas or Turkey.[7]

With this scorn, however, such traditionalists have retreated from the battle for our intellectual future, so the greater scandal is that Darwinism is still largely ignored (still!) by thinkers in the humanities and social sciences, whose work it concerns most of all. These thinkers generally respond to evolutionary arguments about gender, for example, the way Republicans respond to the arguments of climate science: not usually by reading them and offering objective assessments of their content, but more often by contemptuously dismissing them as irrelevant or excoriating their proponents with vague ad hominem attacks. Witness the controversy over James Damore’s Google memo. The contempt and indignation of most of his critics were inversely proportional to their understanding of the relevant science.[8]

Was Nietzsche therefore right that truths are illusions? Hardly, a champion of the modern Enlightenment will retort: a widespread, stubborn refusal to assimilate complex and politically incorrect scientific truths does not vindicate postmodernism. Professors, journalists, and the people whose opinions they shape may adhere to illusory articles of “knowledge” and “truth,” but it does not follow, as the postmodernists would have it, that empirical science is pointless. Far from it. What about the Darwinian truths that exposed these specific illusions as such? What about scientific truths more generally? We may be swimming in a sea of prejudice, propaganda, and popular myths, but empirical science is a saving buoy. Only by knowing the truth can we see the illusions for what they are. Postmodernism does not subvert knowledge and truth; it covertly presumes them.

Knowledge and truth, mind you, not empirical science. For empirical science is nothing more than a method, a discipline, a way of seeking knowledge and truth. It cannot succeed unless its strictures are obeyed. There are always individuals who fail to follow these strictures (usually accidentally, sometimes deliberately), and this is why science is best done in a community. Rituals such as blind peer review, for example, minimize the risk of sloppy or deceitful conclusions gaining credence. But the errors of individuals can become the errors of communities.[9] This is why every era has had its spurious sciences. The 19th century had phrenology, which we now dismiss as quackery, forgetting both the prestige it enjoyed for a few decades and the principal scientists who promoted it. More troubling are the spurious sciences whose main figures we still remember and celebrate.

The greatest figure of the modern Enlightenment, Kant, is still studied reverently for, among other achievements, developing an ethics in which persons were obliged by moral duties and protected by moral rights thanks to their rationality. Act only on those maxims, he instructed, which could reasonably function as universal laws. His ethics appears to have been rationalist and universalist: it applied to every rational being. But who counted as rational? Critical race theorists have drawn attention to Kant’s racism, which was never hidden but was propounded by the philosopher himself in a series of lectures and texts that proved seminal for “scientific” racism.

Kant divided humanity into four races, ranked as follows: first, “humanity exists in its greatest perfection in the white race”; second, “the yellow Indians have a smaller amount of Talent”; third and fourth, “The Negroes are lower and the lowest are a part of the American peoples.”[10] Kant’s ethics were not universalist, argues Charles Mills, because they were conjoined to a theory of the races that deemed only whites fully rational.[11] Twenty years later, Kant himself denounced slavery and colonialism, but he never renounced the racism that could still rationalize both by denying non-whites moral rights (to freedom and property, for example).

He was not alone. Other major Enlightenment philosophers (Locke, Voltaire, and Hume, for example) were also racist, so today’s political thinkers who wish to draw on their tradition hope to purify its central insights of the inegalitarian elements inherited from its founders. The most prominent such effort has been John Rawls’s A Theory of Justice (1971), which bases its political prescriptions on an imaginary scenario, “the original position,” in which purely rational agents contract a political order. They will decide according to their individual interests, he proposes, but because they are behind a “veil of ignorance,” stripped of particular identities (Rawls mentions social status, class, intelligence, and strength, but remarkably neither race nor sex), none will promote the interests of one group above the others. The result will therefore be just.

This strategy of purification came under attack from many quarters for this very conceit. Feminists such as Carole Pateman have argued that the ostensibly sexless rational agents are in fact men, because the original position occludes the distinctly female contribution to their existence: childbirth and mothering. Just as Kant’s ethics served as a rationalization for slavery and colonialism, then, Rawls used his theory of justice to “confirm ‘our’ intuitions, which include patriarchal relations of subordination.”[12] Ironically, Pateman’s general critique finds support in evolutionary psychology, which teaches that the intuitions of men and women (as populations, mind you, and only on some matters) are different.[13]

Again, then, Darwinism confirms an important postmodern insight. Has empirical science therefore shown itself once again to be a sure route to knowledge and truth? Well, an empirical science is only as reliable as the community that sustains it. How reliable, then, is the scientific community? In its own self-conception, needless to say, it is quite reliable. Phrenology and “scientific” racism are sad chapters in its history; yet it was empirical science in the end that discredited them. Scientists thus take pride, with some merit, in their rationality. However irrational they may be at times, especially when they speak outside their specialties, within those specialties nowadays they put aside their feelings, identities, and private ambitions to be objective, dispassionate, rational. Or so they feel.

But unless they were to rely on their feelings here, and oddly here alone, they should subject this self-conception to scientific investigation. Fortunately, some of them already have.

II. The Science of Reason

The phenomenon of confirmation bias is by now widely recognized.[14] When people hold beliefs, they actively seek evidence that confirms those beliefs while ignoring contrary evidence. Correlatively, they ignore evidence that supports rival beliefs while actively seeking evidence against them. The mechanism is thus better described as myside bias, and the best scientific account of rationality, the Darwinian account of Hugo Mercier and Dan Sperber, explains why it was advantageous for humans to develop it.[15]

On the face of it, myside bias in human reasoning presents a challenge to Darwinism. Wouldn’t it have been more advantageous for our species to have developed reasoning oriented toward the truth rather than toward confirming one’s own beliefs? Wouldn’t a species doomed always to trust its own beliefs, no matter how wrong, eventually lose the struggle for survival? No. An orientation of reasoning toward truth appears more advantageous only when we imagine an individual reasoning and trying to find the truth on his own. Suppose an early man is considering whether he should move to higher ground. If he believes he need not, yet hesitates enough to reason it through, his inquiry will be compromised by myside bias: he will focus on the weather’s similarity to regular patterns, for example, and ignore its similarities to dangerous ones. If he is wrong, the error could cost him his life.

Our distant ancestors, however, were rarely left to their own devices. Instead, they reasoned in groups. Rather than have everyone in a group consider the problem independently (a collective, if you will, of individual reasoners), it was more efficient for camps within the group to argue for rival positions before an audience. One side, believing it imperative to move to higher ground before an impending flood, could articulate the best arguments for doing so and the best objections to staying put. The other side, believing this would be a waste of resources because the flood would not come, could marshal the best arguments to counter its opponent. Each camp’s inquiry would be compromised by myside bias, but the whole group would benefit from highly motivated advocates on both sides of the question.

What would motivate them? Reason itself, whose purpose is social. “Giving reasons to justify oneself and reacting to the reasons given by others are, first and foremost,” write Mercier and Sperber, “a way to establish reputations and coordinate expectations.”[16] Suppose you propose before the tribal council that everyone move to higher ground. If you cannot supply reasons for your belief, you begin to lose credibility. If you can supply reasons, not to mention objections to rival views, and your arguments prove persuasive, people begin to trust you; you gain status within the group, and thus power. Your power will erode, by contrast, if the beliefs for which you have argued turn out to be false. If this happens often, your power will be gone. So reason must heed reality to serve its purpose. But its purpose is not to heed reality. Its purpose is to acquire status and power.

Foucault was thus onto something when he said that “‘truth’ is linked in a circular relation with systems of power which produce and sustain it.”[17] Following Nietzsche’s little essay, though, he went too far. For even if our claims to knowledge and truth are all justified within social institutions, even if they are all proposed by humans guilty of myside bias and greedy for honor, they are not all thereby illusions. Some of them are wrong, to be sure, but some of them are right. Such exaggeration has cost postmodern philosophy much credibility among circumspect thinkers. Nevertheless, good science has again confirmed its central tenet, at least when soberly qualified: science itself functions to some extent like any other human practice, and its practitioners reason in order to secure status and power.

Foucault wrote histories that exposed practices, institutions, and ideologies of truth and justice as in fact regimes of power. In other words, he showed them to be pretentious and hypocritical, and he was very often right. The modern American prison system, for example, pretends to rehabilitate criminals in its so-called correctional facilities, rather than subject them to the cruel and unusual punishments prohibited by the framers of the American Constitution (drawing and quartering, for example). In fact, however, it throws them into an environment designed to torture them in soul as well as body.[18] Foucault exposed such hypocrisy in courts and prisons, medicine and psychiatry, churches and schools, among other modern regimes of truth-power.

Accordingly, to call for a return to modernism, as if postmodernism had simply been a temporary fit of cultural madness, is itself an illusion. Those who make this call, such as Aaron, are showing myside bias on a grand scale. They are not alone. A mirror image of this bias can be found among advocates of postmodernism. Each side focuses on what is good about its own camp and bad about its rival, while ignoring what is good about its rival and bad about its own camp.

III. Modernism vs. Postmodernism

Advocates of modernism, on the one hand, would have us focus on the wonders of empirical science, along with the rights and freedoms of constitutional democracy. These are the two proudest legacies of Enlightenment philosophy, the first theoretical, the second political. They should be praised, defended, and preserved. But these advocates would also have us ignore modern philosophy’s shortcomings. And yet only now, and still not widely enough, are proponents of this tradition coming to terms with the racism and sexism integral to it. The Enlightenment philosophers were racist and sexist, granted, but can’t their practical philosophies be purified of their personal failings and marginal writings?

Maybe, maybe not, but let’s imagine that they could. Their theoretical philosophies would still be in trouble: you can still make a decent living trying to solve the puzzles introduced by the philosophies of the 17th and 18th centuries. If the world is composed ultimately of inanimate matter, as many of them proposed, it is still hard to see how such a world could contain animate minds capable of knowledge, let alone of freely choosing to act rightly.[19] Only now, and still not widely enough, are proponents of these philosophies coming to terms with the Nietzschean critiques that undermine them. For decades in Anglo-American philosophy it was acceptable to ignore these critiques, dismissing Nietzsche as a madman or a proto-Nazi, but those dismissals now appear defensive.

The revival of Nietzsche’s popularity is due to a large extent to his postmodern epigones. Surveying the failures of modern philosophies, advocates of postmodernism have shown a myside bias of their own, focusing on postmodernism’s sound critiques of modern theories and practices while ignoring its own theoretical and political weaknesses. After all, any philosophy that rejects the notions of truth, knowledge, and goodness, while presenting itself as true, known, or at least better than its predecessors, soon appears as hypocritical as it showed them to be. Yet if advocates of postmodernism are not saying that its philosophies and politics are at least better than their modernist rivals, what are they saying?

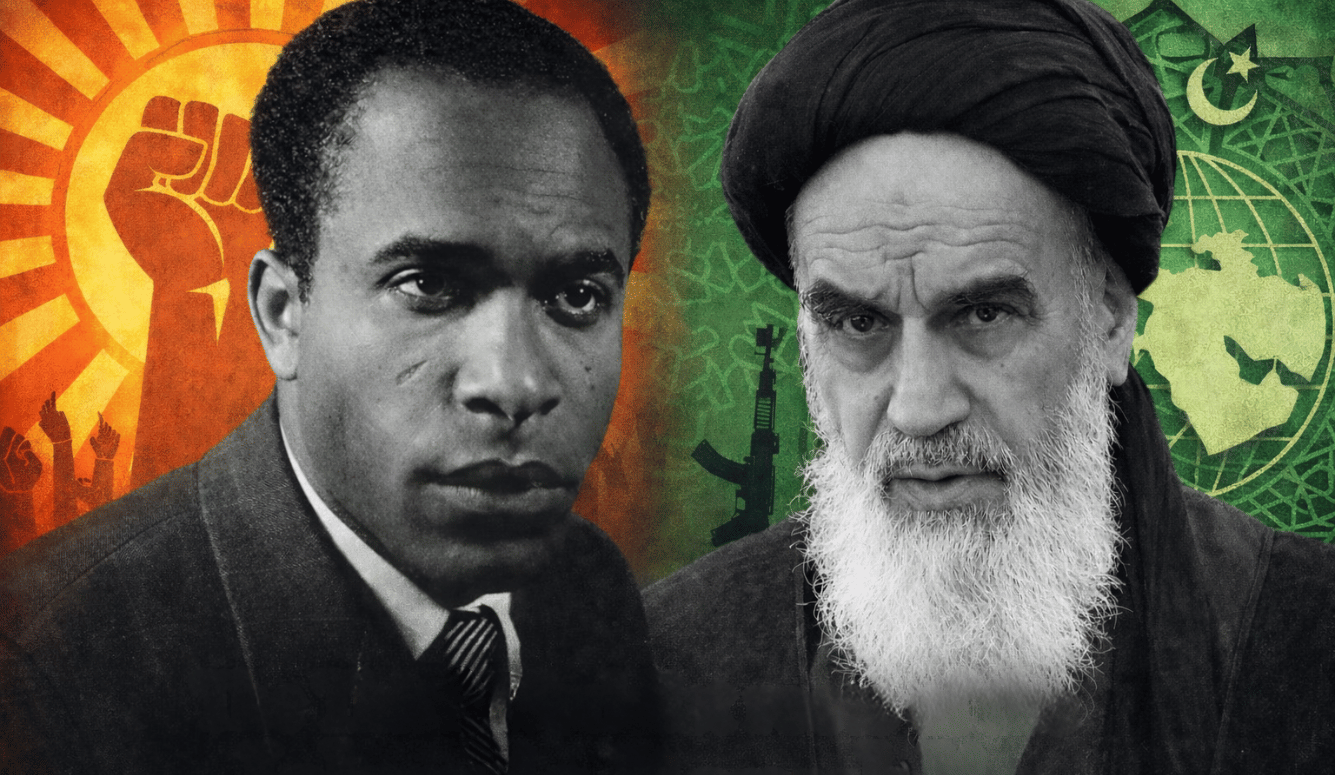

Even setting this theoretical problem aside, the egalitarian pretensions of postmodernism’s advocates are belied by the politics of both its founders and its recent permutations. Nietzsche was a proto-fascist, celebrating war as healthy for a state and slavery as a requirement for its greatness.[20] Foucault, for his part, supported Ayatollah Khomeini’s revolutionary movement, writing that it “impressed me in its attempt to open a spiritual dimension in politics.”[21] Seen against this background, the illiberal and sometimes violent protests of left-wing students at Evergreen, Middlebury, and Berkeley should come as no surprise.

Nor should it come as a surprise that postmodern rhetoric, which rejects moral judgments, has been adopted by their violent right-wing counterparts. Consider, for example, Richard Spencer, who coined the term “alt-right” and has achieved national notoriety for two videos. In one, shortly after the presidential election, he is seen leading fellow white supremacists in chants of “Hail, Trump!” to which some in his audience reply with Hitler salutes. In the other, he is giving an interview on the street after the Trump inauguration when he is sucker-punched by an antifa protestor.

Apparently new to street brawling, Spencer is more accustomed to quarreling with left-wing intellectuals. He was, after all, a doctoral candidate in the humanities at Duke University, a center for postmodern philosophy. There he absorbed the identity politics that characterize the humanities in such places. “Trump’s victory was, at its root,” Spencer has said, “a victory for identity politics.” Indeed, anyone familiar with the politics of the progressive professoriate will notice how he has adapted it for the regressive purpose of promoting white identity. Of the influence of social-justice warriors on his movement, he said tersely: “They made us.”[22]

There are thus assets and liabilities to both philosophical approaches, the modernist on the one hand and the postmodernist on the other. Recognizing this is not to call a draw, nor to propose an incoherent compromise. Recalling Mercier and Sperber’s account of the purpose of reasoning, we can see that what is needed to adjudicate this dispute is something like a massive cultural council: a forum in which the advocates of these two alternatives may present their cases, and in which their evidence would be evaluated and their arguments assessed for soundness. Simply to call for reasoned assessment is to prejudice the contest against postmodernism. So be it. Does modernism thus win by default? Only if there are no other alternatives.

IV. The Limits of Science

Science exerts a strong pull on the conscience of everyone who values reasoned assessment. How could it not, when it has yielded so many marvels in so short a time? In the global tribal council, so to speak, empirical science has established its credibility more often than not. The history of science reminds scholars of phrenology or cold fusion, but these failures are eclipsed by its successes. And prominent among these successes are those which have discredited elements of traditional worldviews, from Galileo to Franklin to Darwin, right up to the present. Does esteem for science thus decide the epochal question finally for all but reactionary traditionalists and intransigent postmodernists? No: not because it is a participant in the contest, but because it is incapable of rendering such a judgment.

First of all, empirical science describes the world; it does not prescribe any way to live in it. Empirical scientists can build a nuclear bomb, for example, but their expertise cannot tell you whether you should drop it, let alone where or when. Clashes of values cannot be resolved by the scientific community; clashes of worldview lie far beyond its competence. Scientists can subvert or confirm any empirical claims made within a worldview (geocentrism, divine lightning, a decisive fall from innocent paradise, and so on), but the worldview and its basic injunctions will always be immune to scientific critique or corroboration. Physical science cannot disprove the existence of an immaterial spirit or an omnipotent God. Nor can it legitimately conclude that humans have a right to life, or property, or happiness.

Thus postmodernists zealous to achieve gender equality by presuming that gender is entirely a social construction can be tamed by evolutionary psychology’s findings about natural sex differences. Judith Butler is wrong that “what gender ‘is,’ is always relative to the constructed relations in which it is determined.”[23] But whether she is also wrong that sex and gender are essential to who we really are (our personal identity, or subjectivity, in the jargon of philosophers) is not a question empirical scientists can answer. How could a scientist operationalize the notion of who we really are? This is not a hypothesis at all; it is a first principle of a worldview.

In Butler’s case, it is the Nietzschean worldview according to which there is no person, no subject, no agent doing one’s deeds; one simply is the deeds themselves.[24] Butler adds the twist that some of these deeds are gendered performances, such as wearing makeup or wrestling in the schoolyard, so that becoming a person requires simultaneously becoming a gender.[25] For Kant and most other philosophers, ancient or modern, there is a person doing one’s deeds, a free agent responsible for them, and in many accounts a soul that will be judged by them. No scientist could decide this question, or for that matter stake a claim anywhere in such a debate. On such questions, empirical science is silent.

Similarly, the claims that truth is power and that all knowledge claims are but pretentious power grabs are not hypotheses that scientists could test empirically. Like many postmodern theses, they are first principles of a worldview as old as the Greek Sophists.[26] The best refutation of that worldview can still be found in the works of Plato, beginning with his Theaetetus, where Socrates engages with the best of the original truth-relativists, Protagoras, and exposes his hypocrisy.[27] Protagoras traveled the Greek world selling his expertise: mastery in persuading crowds. Pushed to account for it, he denies that it is knowledge of anything really true; it is merely an ability to substitute better appearances for worse. Better, though, by which measure? By appearances, to be consistent. Protagoras is not really an expert, then; he only appears to be one. But in his worldview there is no difference.

Modernists believe there is a difference between appearance and reality: something that seems true can be discredited by showing that it is not really so. Thus, if they still believe a universal morality can be derived from humans’ empathy for one another, they should consult social psychology’s findings about empathy (and tribalism) and revise their belief accordingly.[28] But whether humans nonetheless have universal rights on some other basis (personhood, perhaps, however that is understood) is not a hypothesis empirical scientists can test. The notion of rights cannot be operationalized any more than that of a person can. Social psychologists may of course study cultures’ beliefs about rights. But what about real rights, the kind governments are morally bound to respect, whatever a culture’s beliefs?

Locke argued that European settlers had earned a right to their American properties by mixing their agricultural labor with the land in a way the native hunter-gatherers of that continent had not done. Marx saw such arguments as fixtures of capitalist ideology, the sort of fictions that bewitch the minds of the proletariat and keep them from simply taking what they need to achieve equality. Who was right, Locke or Marx? No scientist could decide such a question, let alone stake a claim anywhere in the debate, for it is not a question of empirical science. If you desire to maximize wealth, empirical science can help you achieve your desire. The same goes for the desire to achieve equality (between races, sexes, classes, etc.). But whether you should desire such things, and whether you should make them the goals of your political efforts, are not scientific questions.

Empirical science cannot even fully underwrite its own claims to knowledge and truth. Do scientific experiments yield knowledge? Do they show us the truth? These are not scientific questions, properly speaking, because they cannot be answered by empirical means. Any experiment designed to underwrite the validity of experimentation would of course beg the question. More fundamentally, though, empirical science relies on the senses, but are the senses reliable? This cannot be shown scientifically without begging the question. The science of optics may very well teach us when and where our eyes function well. But even when they are functioning well, do they present us with the real world, or only an appearance of it?

So how can these questions be answered? How can the claims of empirical science be underwritten? Postmodernist philosophers, on the one hand, won’t bother to try, preoccupied as they are with criticizing these very claims. Modernist philosophers, on the other hand, have been trying to underwrite them since the scientific revolution … and failing. Postmodern critiques, rooted in skeptical puzzles that worried some of the best Enlightenment thinkers (e.g., Hume), are evidence of this failure. Empirical science has nevertheless proceeded undeterred, most empirical scientists remaining indifferent to philosophic doubts while achieving marvelous insights.

But the practice of science cannot be long sustained without the co-operation of our wider culture (legally, economically, pedagogically). This is the theoretical half of our present crisis. As the culture becomes more doubtful of scientific legitimacy—whether through postmodern philosophy, the rise of fundamentalism and superstition, or some other means—proponents of empirical science cannot remain indifferent to these doubts if the practice is to flourish. The best of them, the ones entertained by thoughtful people, must be addressed. But how can the skeptical critiques of modern science and philosophy be met? How can such puzzles be solved? The answers to these questions lie in an unexpected place.

Assuming that reality is consistently ordered, a cosmos, Plato argues that the senses do not give us reality as it is, because the appearances they present to us are inconsistent. Later skeptics would elaborate this argument, highlighting the many ways our sense-data contradict one another. The mountains look blue from a distance but green close up. Why assume that proximity gives us their accurate appearance? Maybe it is distance that reveals their real color instead. The pool water feels cold after you’ve been lying in the sun, but warm compared with the windy air. Which is it, warm or cold? The examples are endless, and Sextus Empiricus is the place to go if you ever want more of them.

But hasn’t empirical science silenced these doubts? Doesn’t our science of optics tell us the real color of the mountains by measuring the objective wavelength of the light they reflect? Doesn’t the kinetic theory of heat tell us the real temperature of the water by measuring the objective motion of its molecules? Not, one last time, without begging the question. Upon what evidence, after all, have we become so sure of optics and kinetic theory? The evidence of our senses: the colors, sounds, and textures of innumerable screens, sensors, and instruments. What underwrites our confidence in these sense-data, which are susceptible to the same doubts?

Not even neuroscience can close this gap, despite all the fascinating lessons it has been teaching us in recent decades about our brains, including how they process sense-data. For neuroscience is like any other empirical science: its evidence comes from the senses, the same range of colors, sounds, and textures that constitute all our other observations.

Continued in Part Two of this essay, “Premodernism of the Future.”

Endnotes

[1] http://www.chronicle.com/article/A-Radical-College-s-Public/241577; https://www.wsj.com/articles/the-campus-mob-came-for-meand-you-professor-could-be-next-1496187482

[2] https://www.theatlantic.com/education/archive/2017/11/the-surprising-revolt-at-reed/544682/; https://www.chronicle.com/article/She-Showed-a-Video-in-Class/241976

[3] https://quillette.com/2017/06/08/evergreen-state-battle-modernity/

[4] https://hyperallergic.com/383776/why-we-need-to-start-seeing-the-classical-world-in-color/

[5] https://www.wsj.com/articles/inside-the-madness-at-evergreen-state-1506034740

[6] https://en.wikipedia.org/wiki/On_Truth_and_Lies_in_a_Nonmoral_Sense

[7] https://www.nytimes.com/2017/06/23/world/europe/turkey-evolution-high-school-curriculum.html

[8] https://quillette.com/2017/08/31/google-memo-economist-nothing/

[9] https://heterodoxacademy.org/2017/01/27/why-viewpoint-diversity-also-matters-in-the-hard-sciences/

[10] From Kant’s Physical Geography, translated and quoted by Eze (1997: 118).

[11] Mills 2017.

[12] Pateman 1988: 42.

[13] https://heterodoxacademy.org/2017/08/10/the-google-memo-what-does-the-research-say-about-gender-differences/

[14] https://en.wikipedia.org/wiki/Confirmation_bias

[15] Mercier and Sperber 2017: 218–19.

[16] Mercier and Sperber 2017: 143.

[17] Gordon 1980: 133.

[18] Foucault 1977.

[19] http://www.thenewatlantis.com/publications/the-illusionist

[20] See, for example, The Greek State, an essay contemporaneous with On Truth and Lies in a Nonmoral Sense. Both are available in Pearson and Large 2006.

[21] “What Are the Iranians Dreaming About?,” Le Nouvel Observateur, October 16–22, 1978. Also excerpted in Afary and Anderson 2005: 203–9. The original article is available at http://press.uchicago.edu/Misc/Chicago/007863.html

[22] http://www.motherjones.com/politics/2016/10/richard-spencer-trump-alt-right-white-nationalist

[23] Butler 1999: 15.

[24] On the Genealogy of Morality 1.13.

[25] Butler 1993: 232.

[26] http://www.3quarksdaily.com/3quarksdaily/2016/11/truth-in-the-age-of-trump.html

[27] Theaetetus 161–67.

[28] http://bostonreview.net/forum/paul-bloom-against-empathy; https://theconversation.com/does-empathy-have-limits-72637; http://nymag.com/daily/intelligencer/2017/09/can-democracy-survive-tribalism.html

[29] For a fuller account, see Miller 2011, Ch. 4.